By: Greg Robidoux

Problem

When I use SQL Server Integration Services (SSIS) to import data from a CSV file, every column in the file has double quotes around its data. When I load the file with a Data Flow Task, the double quotes end up in all of the imported data. How can I import the data and remove the double quotes?

Solution

This is a pretty simple solution, but the fix may not be as apparent as you would think. Let's take a look at our example.

Here is the sample CSV file as it looks in a text editor. You can see that all of the columns have double quotes around the data even where there is no data. The file is comma delimited, so this should give us enough information to import the data column by column.

To create the package we use a Data Flow Task and then use the Flat File Source as our data flow source.

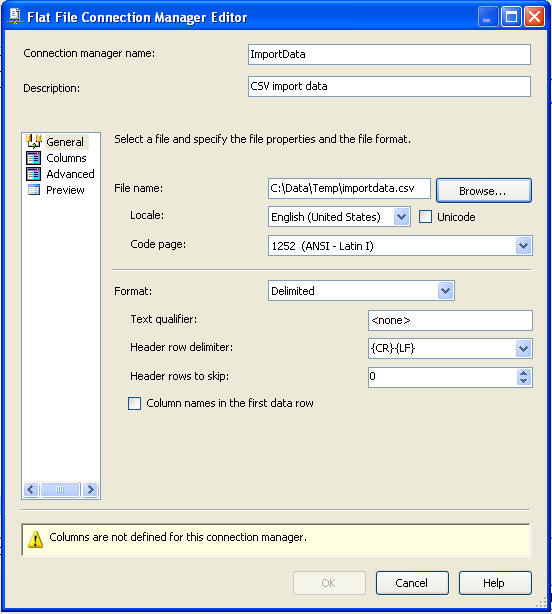

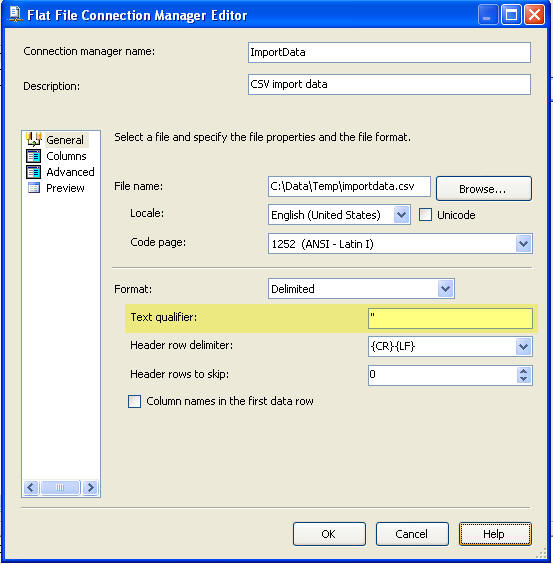

When setting up the Flat File Connection for the data source we enter the information below, basically just selecting our source file.

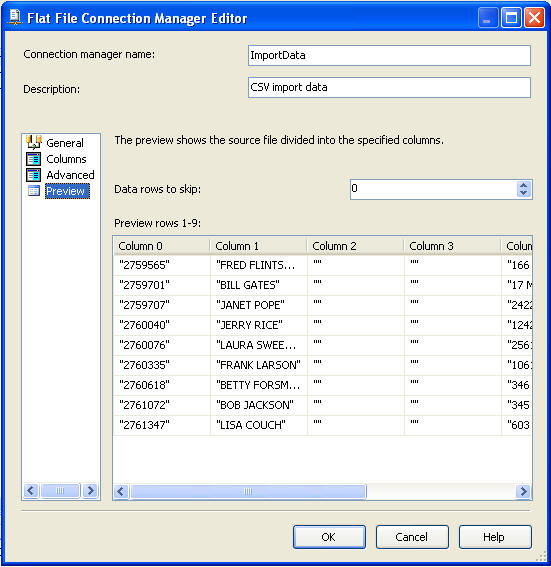

If we do a quick preview of the dataset, we can see that every column has the double quotes, even the columns where there is no data. If you open the text file in Excel, the double quotes are stripped automatically, so what needs to be done in SSIS to accomplish this?

On this screen you can see the highlighted area and the entry that is made for the "Text qualifier". Here we enter the double quote mark (") and this tells SSIS that the double quotes wrap each value and are not part of the data, so they are stripped from all columns.

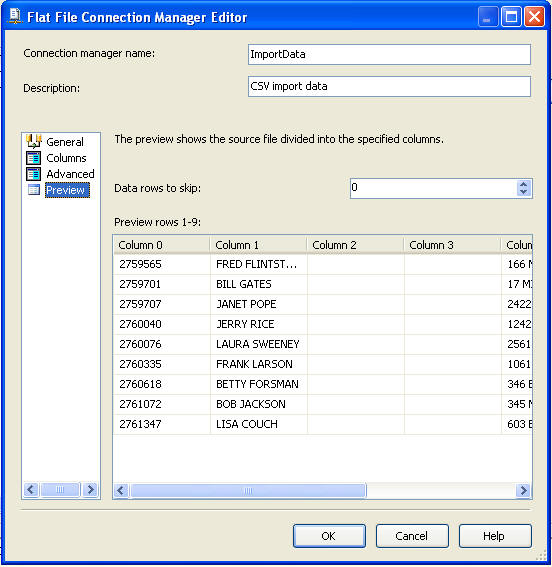

If we do another preview we can see that the double quotes are now gone and we can move on to the next part of our SSIS package development.
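To see what a text qualifier does outside of SSIS, here is a minimal Python sketch using the standard `csv` module, where the `quotechar` argument plays roughly the same role as the "Text qualifier" setting. The sample rows are hypothetical and are not the file used in this tip.

```python
import csv
import io

# Hypothetical sample data: every value is wrapped in double quotes,
# including the empty third column.
sample = '"1","Smith, John",""\n"2","Jones",""\n'

# Without a text qualifier: a plain split on commas keeps the quotes
# and also breaks the value that contains an embedded comma.
naive = [line.split(",") for line in sample.splitlines()]

# With a text qualifier: quotechar tells the parser that double quotes
# wrap each value and are not part of the data.
parsed = list(csv.reader(io.StringIO(sample), delimiter=",", quotechar='"'))

print(naive[0])   # quotes are still present
print(parsed[0])  # quotes are stripped
```

The first row comes back from the naive split as four pieces with the quotes still attached, while the qualifier-aware parse returns three clean values.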

As mentioned above, this is a simple fix to solve this problem. If you are faced with this issue, hopefully this gives you a quick answer to get your development moving forward. This same technique can be used to strip any other text qualifier character from your files.
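The same idea applies to qualifiers other than the double quote. As a rough illustration only, the Python sketch below parses text with a caller-supplied qualifier. The helper name `read_qualified` and the sample strings are made up for this example and have nothing to do with SSIS itself.

```python
import csv
import io

def read_qualified(text, qualifier, delimiter=","):
    """Parse delimited text, treating `qualifier` as the text qualifier.

    Hypothetical helper for illustration only.
    """
    reader = csv.reader(io.StringIO(text), delimiter=delimiter, quotechar=qualifier)
    return list(reader)

# Single quote used as the text qualifier.
single_quoted = read_qualified("'a','b,c',''\n", "'")

# Pipe used as the text qualifier.
pipe_quoted = read_qualified("|a|,|b|\n", "|")

print(single_quoted)
print(pipe_quoted)
```

In SSIS the equivalent change is simply entering a different character in the "Text qualifier" box.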

Next Steps

- Take a look at these other SSIS tips

About the author

Greg Robidoux is the President and founder of Edgewood Solutions, a technology services company delivering services and solutions for Microsoft SQL Server. He is also one of the co-founders of MSSQLTips.com. Greg has been working with SQL Server since 1999, has authored numerous database-related articles, and delivered several presentations related to SQL Server. Before SQL Server, he worked on many data platforms such as DB2, Oracle, Sybase, and Informix.

This author pledges the content of this article is based on professional experience and not AI generated.
