By: Arshad Ali | Comments (6) | Related: > In Memory OLTP

Problem

SQL Server 2014 introduces In-memory OLTP to create memory optimized tables for OLTP workloads to significantly improve performance and reduce processing time when you have a significant amount of memory and multi-core processors. But how do you decide which tables or stored procedures might benefit if migrated to In-memory OLTP and how much estimated work is required to migrate the objects? Check out this tip to learn more.

Solution

SQL Server Management Studio in SQL Server 2014 (gets installed when you chose to install Management Tools - Complete when installing SQL Server) includes the AMR (Analysis, Migration and Reporting) tool to get recommendations on which tables or stored procedures can be considered for migration to In-memory OLTP. AMR allows you to analyze and evaluate your existing OLTP workload to determine if In-memory OLTP will improve the performance. Based on this analysis and evaluation, AMR provides recommendations about tables and stored procedures which are potential good candidates of migration to In-memory OLTP. After you identify a table or a stored procedure that you would like to migrate to In-Memory OLTP, you can use the Memory Optimization Advisor to help you migrate traditional disk-based tables to In-Memory OLTP memory optimized tables.

AMR is a tool to assist you with identifying potential candidates for migration and does not guarantee the actual performance gain will match its prediction, if any predictions are provided. The reason being, the performance of the database workload is dependent upon a variety of factors and not all of them are considered.

Configuring the SQL Server Management Data Warehouse (MDW)

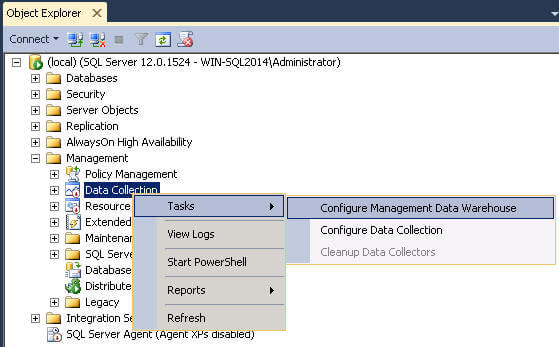

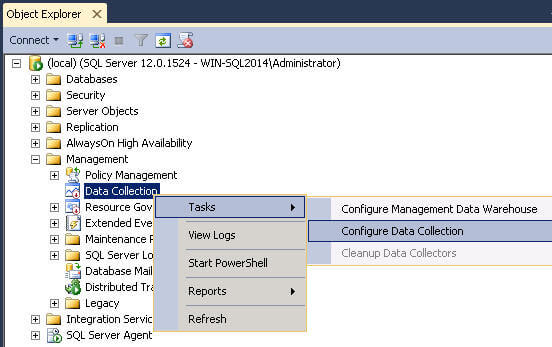

AMR leverages the Management Data Warehouse and the Data Collector for gathering information about workloads and makes recommendations based on that data. So in order to use the AMR tool, you must first configure the Management Data Warehouse. To configure, Management Data Warehouse, open Management Studio, go to Object Explorer then to the Management folder and right click on Data Collection. Then click on Configure Management Data Warehouse as shown below:

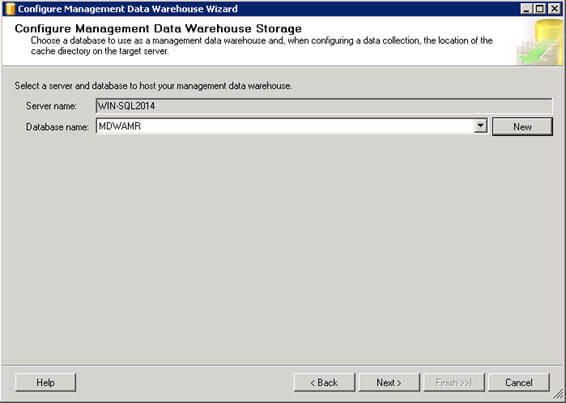

On the Configure Management Data Warehouse Storage page, specify the server name where you have a database for the management data warehouse or create a new database by clicking on the New button as shown below:

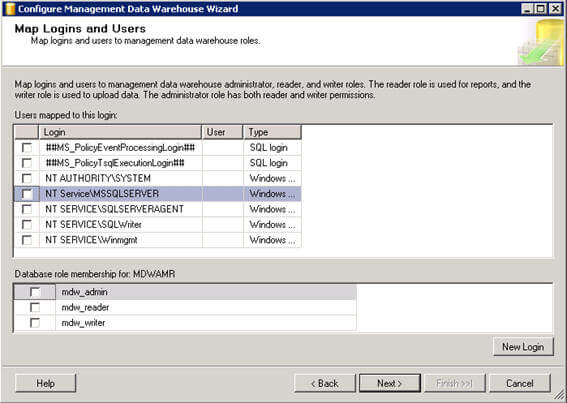

On the Map Logins and Users page of the wizard, you can map a user who is going to administer, read and write to the management data warehouse database:

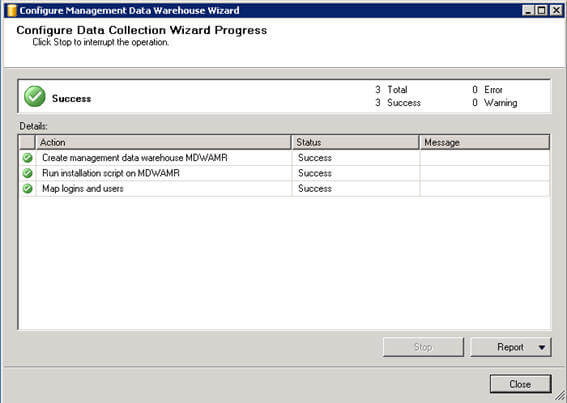

Once the management data warehouse is configured successfully, you will see a screen similar to what is shown below:

If you already have a management data warehouse configured and want to use it, you can go directly to the next step of configuring data collection.

Configuring the SQL Server Data Collection

There are two data collection sets used by the AMR tool; the first one is Table Usage Analysis which analyzes traditional disk based tables for migration to memory optimized tables. Second is Stored Procedure Analysis which analyzes stored procedures to migrate the code to a native stored procedure. These data collection sets collect data using dynamic management views (DMV) every fifteen minutes, and upload the data to the database configured to act as the Management Data Warehouse.

Please make sure SQL Server Agent is running before you start configuring the data collection.

To configure data collection, go to Object Explorer > Management, right click on Data Collection and then click on Configure Data Collection under Tasks as shown below.

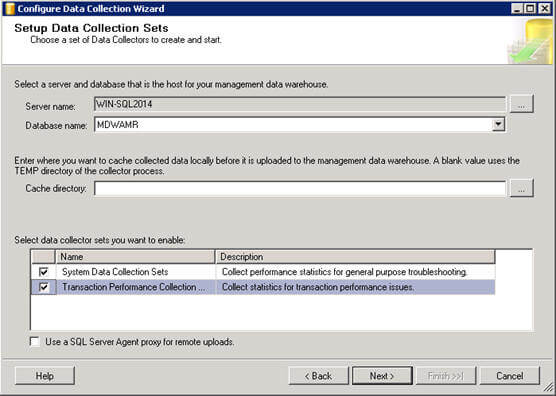

On the Setup Data Collection Sets page of the wizard, you first need to specify the server and database that is hosting your management data warehouse and then you need to choose the data collector sets. Please check Transaction Performance Collection Sets, which collects statistics for transaction performance issues as shown below.

In case of remote data collection, you must choose the SQL Server Agent proxy. This is required if the management data warehouse is not on the local instance and SQL Server Agent is not running under a domain account that has the dc_admin permissions on the remote instance.

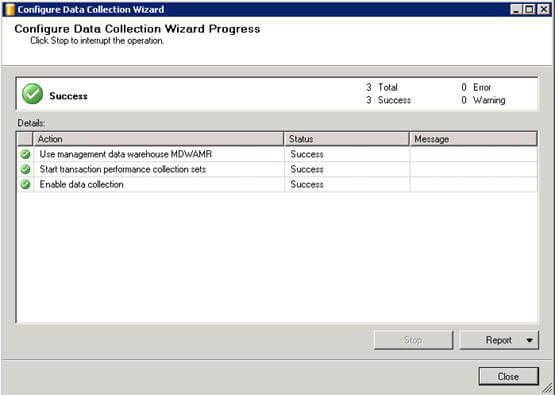

Once the data collection configuration is completed successfully, you will see a screen similar to what is shown below:

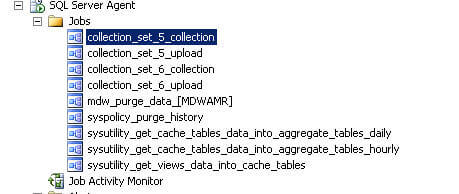

You can verify the configuration of data collectors by going to SQL Server Agent jobs. You will notice a few jobs with collection_set_<number>_collection and collection_set_<number>_upload as shown below:

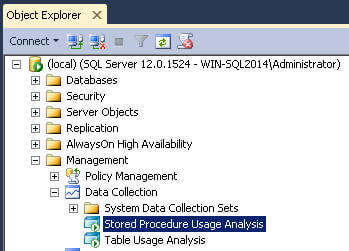

You can also verify the configuration of data collectors in SQL Server Management Studio by going to Management and expanding the Data Collection folder. You will see Stored Procedure Usage Analysis and Table Usage Analysis data collection sets. You can right click on these data collection sets to stop the data collection set or to create and upload the collection on demand.

Generating some SQL Server Processing workload for testing

AMR provides recommendations based on your workload. Hence, once you have the data collection sets configured, you need to run your workloads to get recommendations. To simulate a workload, I have created a few stored procedures as shown below:

CREATE PROCEDURE uspGetAllSalesTransactions AS SELECT * FROM Sales.SalesOrderHeader SH INNER JOIN Sales.SalesOrderDetail SD ON SH.SalesOrderID = SD.SalesOrderID GO CREATE PROCEDURE uspGetSalesTransactions @SalesOrderID INT AS SELECT * FROM Sales.SalesOrderHeader SH INNER JOIN Sales.SalesOrderDetail SD ON SH.SalesOrderID = SD.SalesOrderID WHERE SH.SalesOrderID = @SalesOrderID GO CREATE PROCEDURE uspGetSalesLineTotalSum AS SELECT SalesOrderID, SUM(SD.LineTotal) FROM Sales.SalesOrderDetail SD GROUP BY SalesOrderID GO

Now I am calling these stored procedures in a loop for 50 times to generate enough workload to get recommendations from AMR:

DECLARE @counter int = 1 DECLARE @executioncount int = 50 WHILE @counter <= @executioncount BEGIN EXEC uspGetAllSalesTransactions EXEC uspGetSalesTransactions 43666 EXEC uspGetSalesTransactions 43674 EXEC uspGetSalesTransactions 73837 EXEC uspGetSalesLineTotalSum SET @counter += 1 END GO

Please note, I have created these stored procedures to simulate a workload. In a production environment, you will get recommendations based on your real workload and you do not need to create test stored procedures.

Analyzing the SQL Server AMR reports

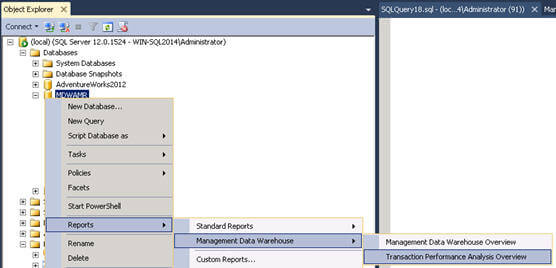

To review the recommendation based on analysis and evaluation of the workload by the AMR tool, in Management Studio you need to right click on the management data warehouse database and then click on Reports > Management Data Warehouse > Transaction Performance Analysis Overview menu item as shown below:

On the Transaction Performance Analysis Overview dashboard you can see three different analysis reports; 1. Table Usage Analysis, 2. Table Contention Analysis and 3. Stored Procedure Usage Analysis as shown below:

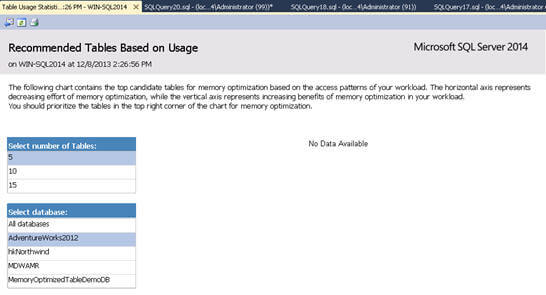

When you click on the Usage Analysis link under Table Analysis, you will see a screen like the one shown below; but wait a minute what happened here, it says no data available. The reasons is that data collection process runs on an interval, so either you can wait for this process to run or you can start it on demand by executing the appropriate SQL Server Agent Jobs.

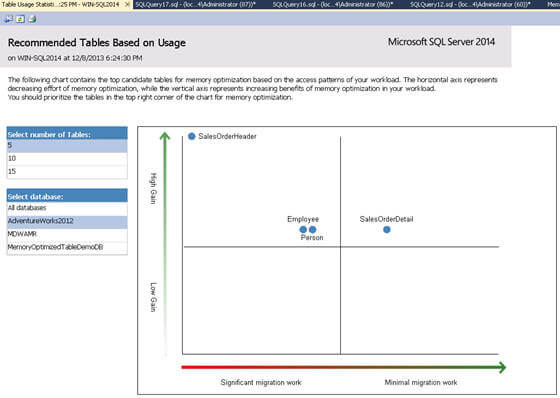

In my environment, once the data was collected and uploaded, I was able to see the chart below. The following chart contains the top candidate tables for memory optimization based on the access pattern of the workload. The horizontal axis represents decreasing effort for memory optimization whereas the vertical axis represents the increasing benefits of the memory optimization on the basis of your workload. In essence, tables in the top right corner of the chart should be the priority to migrate to memory optimization tables.

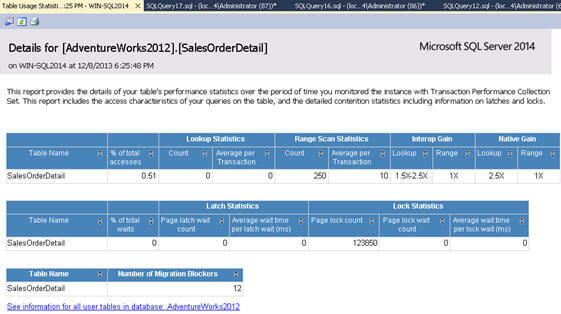

When you click on the any of the tables (on the little bubble with table name) in the chart above, it takes you to another report which provides the details of your table's performance statistics over the period of the time you monitored the instance on your workload with the Transaction Performance Collection set. The report includes the access characteristics of the queries run against the table and detailed contention status including latches and locks information.

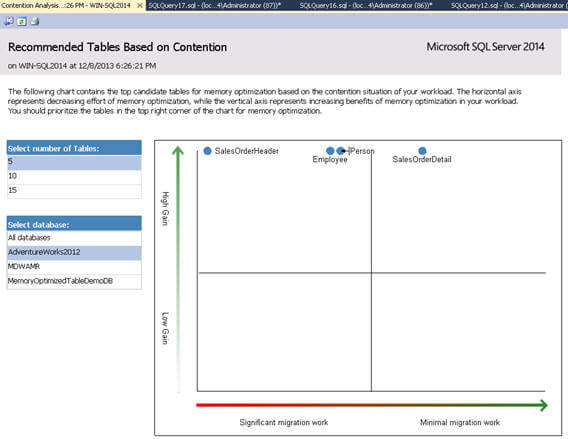

On the Transaction Performance Analysis Overview dashboard, when you click on Contention Analysis under Table Analysis, it will take you to another chart which contains the top candidate tables for memory optimization based on the contention situation of the workload. In this chart as well, the horizontal axis represents decreasing effort for memory optimization whereas the vertical axis represents the increasing benefits of the memory optimization based on your workload. Hence, tables in the top right corner of the chart should be the priority for migrating to memory optimization tables.

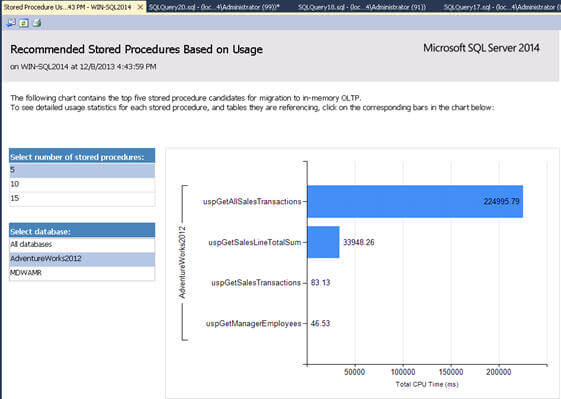

On the Transaction Performance Analysis Overview dashboard, when you click on Usage Analysis under Stored Procedure Analysis, it will take you to another chart which contains the top candidate stored procedures for migration to In-memory OLTP. A stored procedure with a high ratio of CPU time to elapsed time is a candidate for migration to In-memory OLTP.

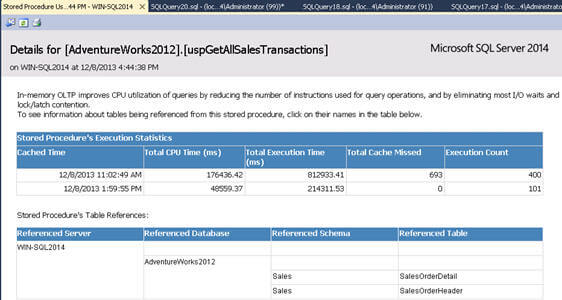

Clicking on the any of the bars (or stored procedures) takes you to another report, which has detail usage statistics for each stored procedure and tables they are referencing (because natively compiled stored procedures can only reference memory-optimized tables, which can add to the migration cost) as an example shown below.

To learn more about how to convert a stored procedure to a natively compiled stored procedure, please refer to Native Compilation Advisor and Migrate to Natively Compiled SQL Server Stored Procedures for Hekaton.

Please note, information and example shown here are based on SQL Server 2014 CTP2 release (you can download it here) and it might change in the RTM release. Please refer to Books Online for updated information.

Next Steps

- Review AAMR (Analysis, Migrate and Report) Tool on MSDN.

- Review Memory Optimization Advisor on MSDN.

About the author

Arshad Ali is a SQL and BI Developer focusing on Data Warehousing projects for Microsoft.

Arshad Ali is a SQL and BI Developer focusing on Data Warehousing projects for Microsoft.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips