By: Tim Smith | Comments (39) | Related: More > Import and Export

Problem

We're looking to minimize ETL costs by importing as much data as possible using a automated system. A data provider sends us flat file data and we extract, analyze and report on these data. Due to our limited budget and time, how can we automate this?

Solution

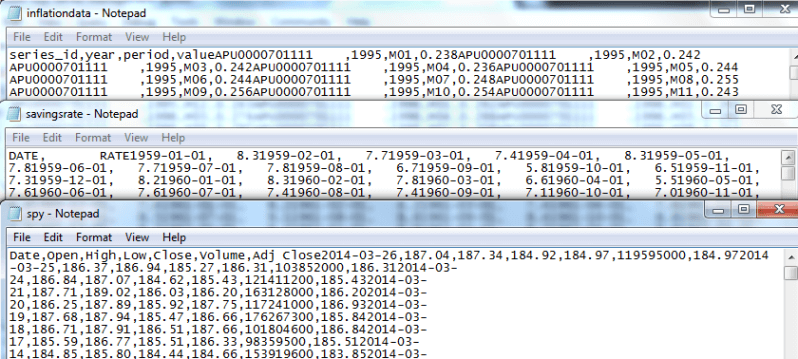

Before we begin, if your data provider possesses an API, it may be simpler to grab live data. For the sake of this example, we'll assume that the data provider sends only flat file data and that these files are named in an appropriate manner for their data (meaning, if it's stock data, the data file is "stock.txt"). If this is not the case, you will need to spend time re-naming the files you receive. I've provided three different files - savings.txt, inflationdata.csv and spy.csv as examples for this tip, but will refer to savings.txt for the written example (screenshots of the results are seen for all three).

Before importing flat file data into a table, a table needs to exist for these data. Because we are focusing on extraction, we will import these data into a staging table, accepting everything in the file. We can later filter the results. We will make some assumptions about these files and if any of these assumptions are incorrect, the below code must be changed to reflect it:

- The flat files columns are delimited by a character (in this example, a comma).

- The column names are listed in the first line of the file.

- New line characters demarcate new rows.

- The name of the file will be the name of the staging table.

These are important to note because for every exception to the rule, an exception must be noted in code. In a world of competitive data providers, most small startup shops have a large supply of who they want to work with if they don't like a specific company's data. Remember, you're paying for it, so make sure that it's what you want.

PowerShell Code to Automatically Import Data

PowerShell will automatically create our staging table using the above assumptions by reading from the file we want. We must tell PowerShell the name of the file and where the file is located for it to do this. For now, we will code this directly and later turn it into a function:

$location = "C:\files\" $file = "savingsrate" $extension = ".txt" $full = $location + $file + $extension Write-Host $full

In this step, we've stored the file location in the variable $location, the file name in the variable $file, and the extension of the file in the variable $extension. We then created a $full variable and created the full file path by concatenating the other variables and wrote out the result. This confirms that we're pointing to the correct file. Next, we want to get the first line of the file because this first line, as shown in our above assumptions, will determine the columns in the table.

$location = "C:\files\" $file = "savingsrate" $extension = ".txt" $full = $location + $file + $extension $all = Get-Content $full $columns = $all[0] $columns

Note, that if you're importing a large file, I would suggest the below syntax for columns - and this syntax will work with smaller files as well. It selects the first line from the file without loading the entire file's content to the $columns variable.

$full = $location + $file + $extension

$columns = Get-Content $full | Select -First 1

$columns = $columns.Replace(" ","")

$columns = $columns.Replace(",","] VARCHAR(100), [")

$table = "CREATE TABLE " + $file + "([" + $columns + "] VARCHAR(100))"

In this step, we add two new variables, $all which grabs all the data in the file, and $columns, which grabs only the first line of the file, which will become our columns. We'll notice that $columns returns "DATE, RATE" and we want to create a table with these two columns. Because this will be a staging table, we will use VARCHAR(100) to store the values (but, depending on their size, these can be automated to larger values) and make the name of the table to be the file name. This is shown in this next step with comments:

## Note that we want the comma to be replaced with the syntax for T-SQL column creation

## First, this will remove any unnecessary spaces so that our columns look like ColumnOne,ColumnTwo

$columns = $columns.Replace(" ","")

## Next, note that brackets are used in case of a keyword

$columns = $columns.Replace(",","] VARCHAR(100), [")

## This variable grabs CREATE TABLE, concatenates it with the file name, then concatenates it with the

## columns and ends it with the appropriate syntax to give the last column a VARCHAR of 100. Note that

## if we have larger data sets, we will need to expand it to larger values such as VARCHAR(250)

## or VARCHAR(500).

$table = "CREATE TABLE " + $file + "([" + $columns + "] VARCHAR(100))"

## Let's see our result:

Write-Host $table

We place column names in this syntax between brackets because the column names may be keywords (in this case, DATE is a keyword) and the brackets prevent our code from breaking. When we look at the results, we see that we have the appropriate syntax for creating a table in T-SQL. PowerShell reads the first line of the file, concatenates the necessary pieces and produces a table. Now we need PowerShell to actually create the table. For this we will need a connection to SQL Server and a SQL Server command; first, let's create both of those.

## $connection creates our Connection to SQL Server $connection = New-Object System.Data.SqlClient.SqlConnection ## $buildTable creates our Sql Server command we'll later execute $buildTable = New-Object System.Data.SqlClient.SqlCommand ## This establishes our connection string $connection.ConnectionString = "Data Source=OURSERVER\OURINSTANCE;Database=StagingDatabase;integrated security=true" ## This is command we'll execute when we connect to SQL Server, which as we know creates a table. $buildTable.CommandText = $table ## This ties the SQL Server connection to the SQL Server command $buildTable.Connection = $connection

Next, we need to open the connection and execute the command.

$connection.Open() $buildTable.ExecuteNonQuery() $connection.Close()

Our staging table is created! We now have a script that can read from the first line of a file and auto-create a table from it. If our delimiters are different, for instance a vertical bar (|) instead of a comma, we can adjust the script to replace "|" with "] VARCHAR(100), [" after removing the empty spaces. Now, let's wrap our code in a function so that we can re-use it:

Function CreateStagingTable($location, $file, $extension, $server, $database)

{

$full = $location + $file + $extension

$all = Get-Content $full

$columns = $all[0]

$columns = $columns.Replace(" ","")

$columns = $columns.Replace(",","] VARCHAR(100), [")

$table = "CREATE TABLE " + $file + "([" + $columns + "] VARCHAR(100))"

$connection = New-Object System.Data.SqlClient.SqlConnection

$buildTable = New-Object System.Data.SqlClient.SqlCommand

$connection.ConnectionString = "Data Source=" + $server + ";Database=" + $database + ";integrated security=true"

$buildTable.CommandText = $table

$buildTable.Connection = $connection

$connection.Open()

$buildTable.ExecuteNonQuery()

$connection.Close()

}

CreateStagingTable -location "C:\files\" -file "savingsrate" -extension ".txt" -server "OURSERVER\OURINSTANCE" -database "StagingDatabase"

Finally, we want to INSERT the data from the file to the staging table. At this point, there are numerous ways to do this. My preferred method is BULK INSERT because I've found it to be one of the most reliable tools when compared with SSIS, BCP, etc. However, feel free to use the tool that works for you. Below, I rename the function, add another SQL Command which passes two parameters into a stored procedure, shown after the PowerShell function:

Function AutoImportCommaFlatFiles($location, $file, $extension, $server, $database)

{

$full = $location + $file + $extension

$all = Get-Content $full

$columns = $all[0]

$columns = $columns.Replace(" ","")

$columns = $columns.Replace(",","] VARCHAR(100), [")

$table = "CREATE TABLE " + $file + "([" + $columns + "] VARCHAR(100))"

$connection = New-Object System.Data.SqlClient.SqlConnection

$buildTable = New-Object System.Data.SqlClient.SqlCommand

$insertData = New-Object System.Data.SqlClient.SqlCommand

$connection.ConnectionString = "Data Source=" + $server + ";Database=" + $database + ";integrated security=true"

$buildTable.CommandText = $table

$buildTable.Connection = $connection

## Added to function

$x = 0

$insertData.CommandText = "EXECUTE stp_CommaBulkInsert @1,@2"

$insertData.Parameters.Add("@1", $full)

$insertData.Parameters.Add("@2", $file)

$insertData.Connection = $connection

$connection.Open()

$buildTable.ExecuteNonQuery()

$connection.Close()

## Added to function

$x = 1

if ($x = 1)

{

$connection.Open()

$insertData.ExecuteNonQuery()

$connection.Close()

}

}

AutoImportCommaFlatFiles -location "C:\files\" -file "savingsrate" -extension ".txt" -server "OURSERVER\OURINSTANCE" -database "StagingDatabase"

CREATE PROCEDURE stp_CommaBulkInsert @file NVARCHAR(250), @table NVARCHAR(250) AS BEGIN DECLARE @f NVARCHAR(250), @t NVARCHAR(250), @s NVARCHAR(MAX) SET @f = @file SET @t = @table SET @s = N'BULK INSERT ' + @t + ' FROM ''' + @f + ''' WITH ( FIELDTERMINATOR = '','' ,ROWTERMINATOR = ''0x0a'' ,FIRSTROW=2 )' EXEC sp_executesql @s END

Check the Imported Data

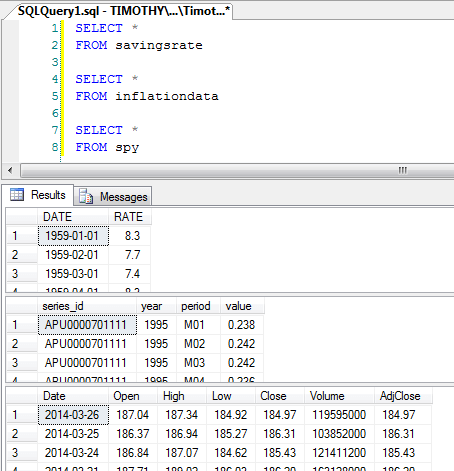

When we execute the below, we see all the data:

SELECT * FROM StagingDatabase.dbo.savingsrate

Importing Other Files Using PowerShell Function

Now, let's call the same function for our inflationdata.csv and spy.csv files:

AutoImportCommaFlatFiles -location "C:\files\" -file "spy" -extension ".csv" -server "OURSERVER\OURINSTANCE" -database "StagingDatabase"

SELECT * FROM StagingDatabase.dbo.inflationdata SELECT * FROM StagingDatabase.dbo.spy

Summary

Finally, we only need to make a few changes if our delimiters are different. You'll notice that my final naming conventions specify what type of flat file data it deals with - stp_CommaBulkInsert and AutoImportCommaFlatFiles. I can re-name and add to my functions and procedures as needed, and ideally, the only thing that should ever change when calling the function is the name. Depending on your company, you may only deal with a few file formats such as commas and vertical bars and thus will only need a few scripts. From there, we can just change the file name, and everything will be done for us.

While this tip is initially intended for small or startup shops, I've implemented this same code at large shops too, and they've seen the benefit of automatic imports without the use of tools that require hours to debug, or need to be rebuilt for a new set of data. Provided that our flat files are similar in column and row format delimiters, we can re-use this for more than one or two files.

Watch Video Version of Tip

Next Steps

- Test comma delimited files and other formats, noting that you may want to expand the VARCHAR length above 100 if the data are large.

- Use the Rename and Move file script from the previous tip I wrote to archive the imported files.

About the author

Tim Smith works as a DBA and developer and also teaches Automating ETL on Udemy.

Tim Smith works as a DBA and developer and also teaches Automating ETL on Udemy.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips