By: Fikrat Azizov | Comments (3) | Related: > Indexing

Problem

I was recently involved in troubleshooting data warehouse queries for one of my customers who had an ETL package running longer than usual. The package was quite simple – it was inserting a large number of rows from a staging table into a large table containing hundreds of millions of rows. The initial investigation revealed that the package's OLEDB destination adapter was responsible for most of the execution time. The destination table had a number of indexes, some of them larger than 200 GB and the execution plan for the bulk inserts has showed that index updates were significantly contributing to the slowness of the inserts.

The total execution costs for a few large non clustered indexes had more than a 40% share in the entire execution plan. We've considered disabling the largest indexes, however it turned out they were heavily used by some daily reports and disabling them could lead to significant performance impact on those reports.

Disabling large indexes prior to ETL and enabling them afterwards also was not an option, because the index rebuild required significant time and reports on those indexes needed to be run immediately after the ETL package. So, I started looking into ways to reduce index sizes, assuming that smaller indexes would require less index updates and therefore better performance for data insert and update transactions.

Solution

Fully covering and partially covering SQL Server indexes

A closer examination has showed that even though the largest indexes had only a few key fields, they contained a high number of columns in their INCLUDED columns logic, apparently some developers created them in order to satisfy queries with large SELECT lists. For those of you who are not familiar with covering indexes, I'd recommend reading this article by Joe Webb.

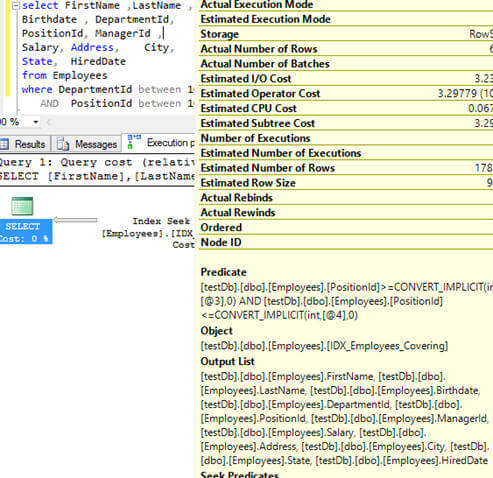

The biggest benefit of covering index is that when it contains all the fields required by query, it may greatly improve query performance and best results are often achieved by placing fields required in filter or join criteria into the index key and placing fields required in the SELECT list into the INCLUDE part of the covering index. As you can see from the sample execution plan below, the index IDX_Employees_Covering provides all the fields included in the SELECT list and therefore completely satisfies the query:

Query:

SELECT FirstName, LastName, Birthdate, DepartmentId, PositionId, ManagerId, Salary, Address, City, State, HiredDate from Employees where DepartmentId between 10 and 70 AND PositionId between 10 and 80

Index:

CREATE NONCLUSTERED INDEX IDX_Employees_Covering ON Employees (DepartmentId,PositionId) INCLUDE (FirstName,LastName,Birthdate,ManagerId,Salary,Address,City,State,HiredDate)

Figure1. Execution plan for fully covering index:

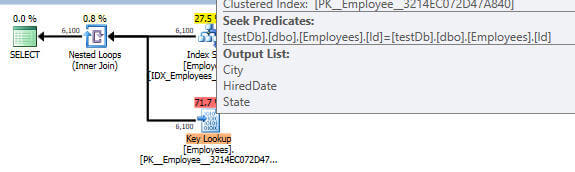

However, in some cases a fully covering index may become partially covering, due to table/query enhancements. As you can see from the example below for the same query, index IDX_Employees_PartiallyCovering doesn't provide all the required fields and SQL Server is forced to use an additional operator, a Key Lookup in order to bring missing columns (City, HiredDate, State in this example) from the clustered index:

Index:

CREATE NONCLUSTERED INDEX IDX_Employees_PartiallyCovering ON Employees DepartmentId PositionId INCLUDE FirstName LastName Birthdate ManagerId

Figure2. Execution plan for partially covering index:

Index optimization

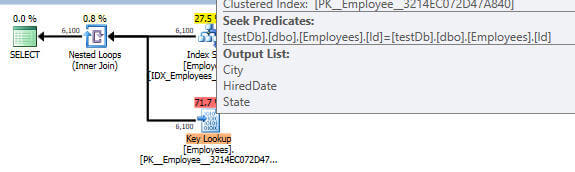

In the above mentioned problem my goal was to optimize the existing indexes without compromising the performance of read queries. So, I've found all the queries from the query cache which had reference to large indexes and analyzed their execution plans, using this query described by Jonathan Kehayias in this article. This analysis has revealed that none of the large covering indexes were fully satisfying queries, in other words they had Lookup operators in their execution plans. So I thought of removing some of the columns from the INCLUDE part of the index would not change the query since a Lookup operator had to bring more fields in anyway as shown below.

Execution plans for partially covering indexes:

| Figure 3. Large index, before optimization |

|

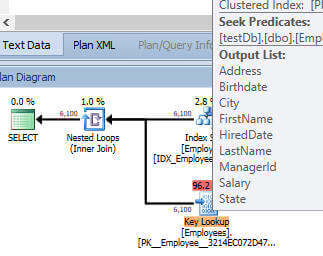

| Figure 4. Trimmed index, after optimization |

|

I've removed most of the fields from the INCLUDE part of the covering indexes

without touching the main index and index sizes have dropped up to 40%. As a result,

query performance improvements have exceeded all my expectations- the execution

costs of related

SELECT queries have dropped and they ran faster. Moreover,

we've gained more than 45% improvement on data warehouse

bulk

insert transactions. Below I've tried to reproduce the problem and provide

a comparison between various indexing options.

Test scenario: Measuring partially covering index performance

We'll create a test table within a test database and measure key performance metrics: duration, number of reads and query execution cost against the following two queries:

1. SELECT FirstName, LastName, Birthdate, DepartmentId, PositionId, ManagerId, Salary, Address, City, State, HiredDate FROM Employees WHERE DepartmentId between 10 and 70 AND PositionId between 10 and 80 2. INSERT INTO Employees SELECT FirstName, LastName, Birthdate, DepartmentId, PositionId, ManagerId, Salary, Address, City, State, HiredDate FROM [testDb].[dbo].[Employees_Archive]

The measurements would be taken with the following indexing conditions:

1. Without non-clustered indexes,

with only

clustered index

2. Large non-clustered partially covering index on top of

the clustered index

3. Fully covering non-clustered index on top of clustered

index

4. Trimmed (non-covering) index on top of clustered index

In order to get accurate results, we'll clean the cache by running the following commands prior to each test:

dbcc dropcleanbuffers; dbcc freeproccache;

We'll also run the following commands at the start of each query session to be able to get I/O and time statistics and will turn on the Actual Execution Plan in SSMS:

set statistics time on; set statistics io on;

This query will create the Employees table in the testDb database and populate it with 500K rows (it took a few minutes on my test server) :

CREATE DATABASE testDb

Go

USE TestDB

GO

SET NOCOUNT ON;

CREATE TABLE Employees (

Id int Identity(1,1) Primary Key not null,

FirstName varchar(500),LastName varchar(500),Birthdate smalldatetime,DepartmentId int,

PositionId int,ManagerId int,Salary decimal(10,2),Email varchar(50),Address varchar(500),

City varchar(200),State varchar(200),HiredDate date);

GO

DECLARE @cntvar int=1,@RowCnt int=500000

WHILE @cntvar<=@RowCnt

BEGIN

INSERT INTO Employees

(FirstName,LastName,Birthdate,DepartmentId,PositionId,

ManagerId,Salary,Email,Address,City,State,HiredDate)

VALUES

('TestName'+replicate('X',90),

'TesLastName'+replicate('X',90),

dateadd(hour,@cntvar,'1950-01-01'),

@cntvar%1000,

@cntvar%10000,

@cntvar%1000,

50000,

'[email protected]',

'testStreet'+replicate('X',90),

'testCity'+replicate('X',90),

'testState'+replicate('X',85),

dateadd(hour,@cntvar,'1980-01-01')

);

SET @cntvar=@cntvar+1;

ENDThe next query will create a duplicate of this table in order to test bulk inserts into the ‘Employees' table:

SELECT * INTO Employees_Archive from Employees;

Below are the scripts for creating indexes:

1. Partially covering

index:

CREATE NONCLUSTERED INDEX IDX_Employees_PartiallyCovering ON Employees (DepartmentId,PositionId) INCLUDE (FirstName ,LastName ,Birthdate ,ManagerId ,Salary,Address,email);

2. Fully covering index:

CREATE NONCLUSTERED INDEX IDX_Employees_Covering ON Employees (DepartmentId,PositionId) INCLUDE (FirstName,LastName,Birthdate,ManagerId,Salary,Address,City,State,HiredDate);

3. Trimmed (non-covering) index:

CREATE NONCLUSTERED INDEX IDX_Employees_Trimmed ON Employees (DepartmentId,PositionId);

Here is the summary of the test results (full test results could be found here: Test Results):

|

Index conditions |

Select query | Bulk insert | ||||

|---|---|---|---|---|---|---|

| Duration (ms) | Logical reads | Execution cost | Duration (ms) | Logical reads | Execution cost | |

| Only clustered index | 4715 | 42367 | 29.227 | 41770 | 1786510 | 453.955 |

| Partially covering index | 1563 | 15252 | 5.877 | 182407 | 3880338 | 1426.57 |

| Fully covering index | 396 | 2156 | 1.65 | 140775 | 4133971 | 1679.55 |

| Trimmed index | 378 | 14152 | 4.851 | 67328 | 3393525 | 462.294 |

In this test, the best performance results for read queries were achieved by applying a fully covering index, however the insert queries involving fully covering indexes performed worst in terms of execution cost, I/O and duration. On the other hand, the trimmed index (index with all columns removed from the INCLUDED logic) proved to be the best for insert queries, while performing slightly worse for read queries in comparison to a fully covering index. The most important takeaway from the above experiment is that the removal of some columns from the INCLUDED part of the index helps to improve performance for both read and write queries in cases where the execution plans do not change and this happens due to the fact that smaller indexes require less memory and disk I/O.

Conclusion

Covering indexes are a great tools for improving read queries, however they can also significantly degrade performance of write transactions on large tables. When write queries are performing poorly, indexes on underlying tables need to be reviewed. If an index is fully covering for at least some of the important queries, I'd be hesitant to reduce its size as it may become partially covering and less efficient for those queries. However, in cases when an index is partially covering for all key queries and I/O transactions are underperforming, downsizing it would certainly be useful.

Next Steps

- Read this article to identify queries involving lookup operators.

- Take a look at find duplicate indexes article to see how duplicate or overlapping indexes could be identified.

- Review this article to identify unused indexes.

- Check out all of the indexing and performance tuning tips on MSSQLTips.com

About the author

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips