By: Koen Verbeeck | Comments (3) | Related: > Integration Services Performance

Problem

With the release of SQL Server 2016, a property has been added to the Integration Services (SSIS) data flow: AutoAdjustBufferSize. What is this property and how can I use it?

Solution

The AutoAdjustBufferSize Property

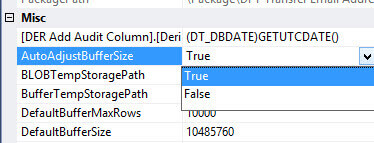

This new property (available for SSIS 2016 and higher) has been added to the data flow and you can find it in the Misc. section of the data flow properties as shown below.

The property can only be set to True or False (the default). But what does this property do?

As explained in the tip Improve SSIS data flow buffer performance, it is the size of the buffers that have a huge impact on performance. The data flow uses buffers to transfer and transform the data. The bigger the buffer, the more data can be manipulated at once in memory. Most of the time, having bigger buffers results in faster data flow performance. This is dependent though on other factors, such as the speed of the sources and the destinations, and the type of transformations involved.

The size of the buffer is determined by two properties: DefaultBufferMaxRows - with a default of 10,000 rows - and DefaultBufferSize - with a default of 10MB. However, those defaults are very small compared to the amount of memory that modern servers have available. So every time you developed a new SSIS package, you had to bump up the size specifications of the buffer (for example to 40MB and 100,000 rows). But this could lead to a bit of trial and error, since buffers that are too big might slow down performance.

Imagine you have to fill buckets of water and pass them along a line to put out a fire. If you have bigger buckets, you can transfer more water and the fire will be put out more quickly. But if your buckets are too big, it takes too long to fill them with water while the fire is raging on. So you have to find the optimal size of the buckets to balance the amount of water being passed and the time to fill those buckets. The same is true with data flow buffers: you have to find the optimal size of the buffers.

Instead of manually looking for a good buffer size, the AutoAdjustBufferSize property will do this task for you. When enabled, the data flow will automatically change the size of its buffers so it matches the number of rows configured with DefaultBufferMaxRows. This makes it easier to determine the throughput of your data flow. Let's test to see if using this property actually leads to better performance.

Test SSIS Data Flow Buffer Performance

This tip uses the same test set-up as this tip Improve SSIS data flow buffer performance. You can find all the scripts to create the test tables and test data in that tip. The SSIS package used to test is also the same. I created three versions of the package:

- one with all the properties set to their defaults (this means AutoAdjustBufferSize is set to False). This package is called AutoBufferSize_Default.dtsx.

- one with the buffer size set to 20MB and 30,000 rows. This package is called AutoBufferSize_LargerBuffer.dtsx.

- one with only the property AutoAdjustBufferSize set to True. The name of the package is AutoBufferSize.dtsx.

Using a stored procedure I ran each package 20 times in a row. The creation of this stored procedure is explained in the blog post SSIS Performance Testing. Aside from just logging the start and end time of the package (which allows us to calculate the runtime duration), I also enabled two performance counters.

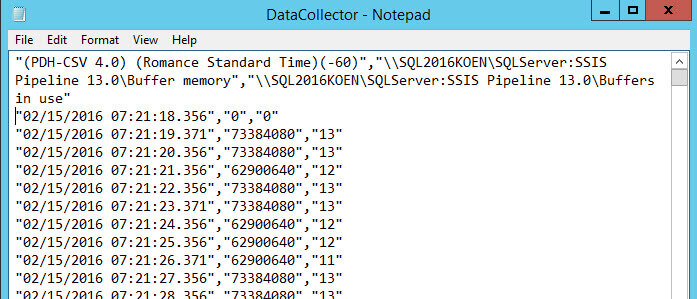

You can find more information about collecting performance data in the tip Performance Monitor. The buffer memory counter collects the amount of bytes that is allocated in memory by all the packages. Since we are running only one single package at a time, this is the memory consumption of the package being tested. The buffers in use counter indicates how many buffers there are in use. The output of these counters looks like this:

The Test Results

With the following T-SQL query, we can easily analyze the run durations of the different packages:

SELECT

[RunID]

,[PackageName]

,[Duration] = DATEDIFF(MILLISECOND,StartDate,EndDate) / 1000.0

,[Mean] = PERCENTILE_CONT(0.5) WITHIN GROUP

(ORDER BY DATEDIFF(MILLISECOND,StartDate,EndDate) / 1000.0)

OVER (PARTITION BY PackageName)

FROM [dbo].[PackageLogging]

ORDER BY ID;

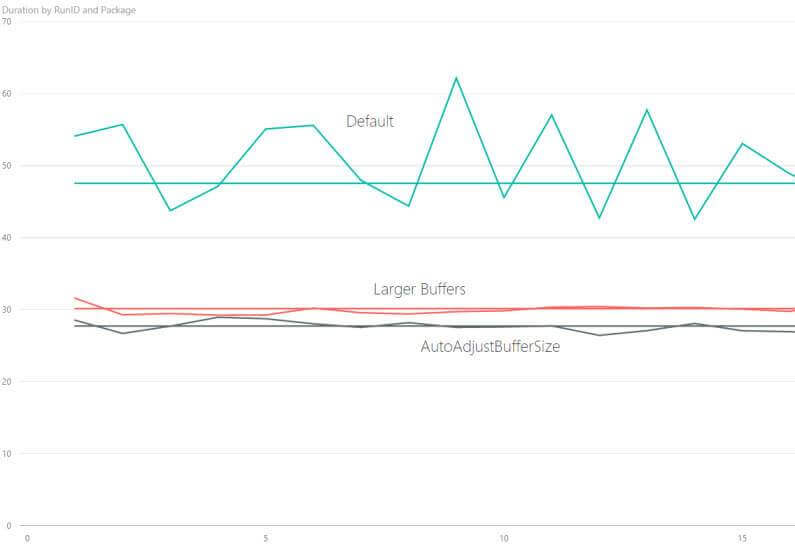

Visualized in Power BI Desktop, the results look like this:

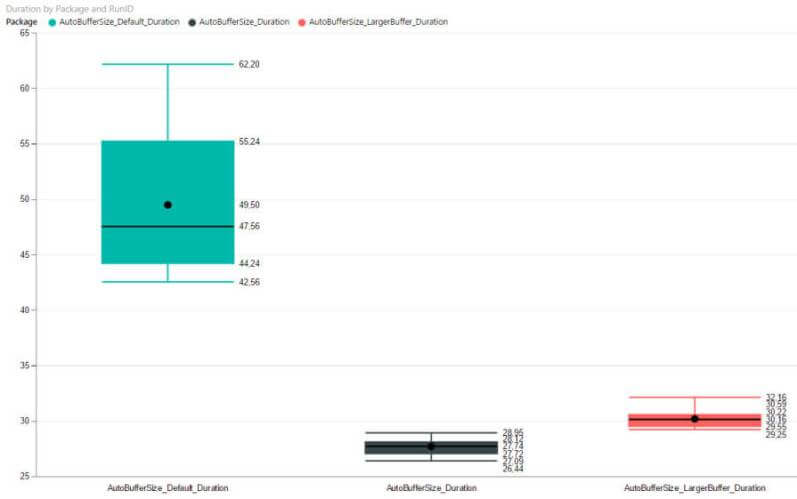

We can see that enlarging the buffers from the default values to 20MB and 30,000 rows already had a great impact on performance. The median runtime went from just below 50 seconds to about 30 seconds. The new AutoAdjustBufferSize property scored slightly better with a median value of 27 seconds. Using a box and whisker plot, we can see that the runtimes of the two fastest packages are indeed close together. However, the entire set of samples of the AutoAdjustBufferSize package falls clearly below the set of samples of the larger buffer package. The default package obviously performs the worst. It has a quite erratic distribution of runtimes, where the minimum and maximum values are quite spread out.

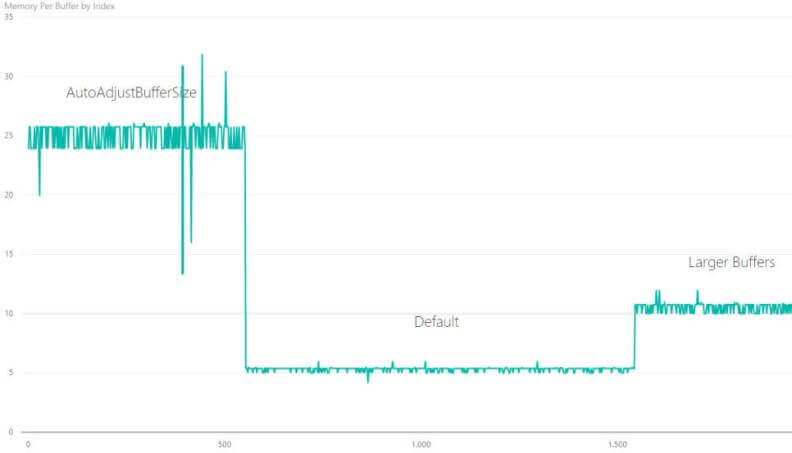

Now let's take a look at the memory usage. By dividing the total memory consumption by the amount of buffers used, we can find the memory per buffer. The X-axis represents the ID of the sample number.

The default package uses the lowest amount of memory, with a buffer size of about 5MB. This means the maximum of rows (DefaultBufferMaxRows) was a limiting factor on the buffer size. Setting the number of rows to 30,000 and the buffer size to 20MB leads to an average buffer memory of about 10.5MB. Finally, the AutoAdjustBufferSize uses the most memory, with an average buffer size of about 25MB. It's important to note though that using about 2.5 times the amount of memory than the package with the larger buffers results in a gain of only 3 seconds.

Conclusion

The AutoAdjustBufferSize property lives up to its promise: it automatically scales the buffer of the data flow in order to improve performance. The only downside is that this new property is turned off by default.

Next Steps

- If you want to reproduce this test, you can use the code from the tip Improve SSIS data flow buffer performance and the blog post SSIS Performance Testing. You can download the SSIS packages here.

- For more tips about SSIS best practices:

- Also read these excellent articles:

- Top 10 SQL Server Integration Services Best Practices by the SQLCat Team

- Suggested Best Practices and naming conventions by Jamie Thomson

- Data Flow Performance Features

- For more SQL Server 2016, read these other SQL Server 2016 Tips.

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips