By: Artemakis Artemiou | Comments (2) | Related: > In Memory OLTP

Problem

In-Memory OLTP in SQL Server is a very powerful feature. In one of my previous tips, I suggested three approaches you can follow in order to get an indication whether you can benefit from using this feature for your specific workload. In this tip I will present a simple, yet comprehensive example of workload processing that was optimized using In-Memory OLTP which led to a performance boost up to 79x times faster processing over disk-based execution.

Solution

Let's start this tip by first defining the scenario we are going to use. The scenario is quite simple as the purpose of this tip is to show how easy it is to boost performance when using In-Memory OLTP in SQL Server.

So here's the scenario: A worldwide retail sales organization has 50 million customers. As the organization celebrates its 30 successful years of operation, the Management decided to organize local celebrations in the major countries where the organization operates. As it is not possible to have celebrations in all countries all around the world, the Management requested Information Technology at HQ to generate two reports. The requirements for the reports are:

- Generate a list with the top 25 countries based on the number of customers

- Generate a list with the top 25 countries based on the total sales per country

Based on the above two reports, the Management will decide in which countries the company will host its events.

The organization uses SQL Server as their data platform solution. However, they have not yet implemented In-Memory OLTP. Information technology sees this as a great opportunity to showcase the benefits of applying In-Memory OLTP within the organization.

Below you can see the "customers" table's (disk-based) definition:

--Disk-Based Table Definition CREATE TABLE [dbo].[tblCustomers]( [id] [INT] NOT NULL PRIMARY KEY, [code] [CHAR](6) NOT NULL, [firstName] [VARCHAR](100) NOT NULL, [lastName] [VARCHAR](100) NOT NULL, [address1] [VARCHAR](150) NOT NULL, [address2] [VARCHAR](150) NULL, [zipCode] [CHAR](5) NOT NULL, [countryCode] [CHAR](3) NOT NULL INDEX IX_CountryCode NONCLUSTERED, [lastPurchaseDate] [DATE] NOT NULL, [totalPurchasesAmount] [FLOAT] NOT NULL ); GO

Transforming a disk-based table to memory-optimized can be very easy. You first need to identify what types of indexes you should use (there is a very useful MSDN article about this) as well as the table's durability. Durability sets whether the memory-optimized table's data will be lost in a case of a server failover or crash (SCHMA_ONLY) or will be permanent (SCHEMA_AND_DATA). As we want to compare apples with apples, the durability for the memory-optimized table will be set to SCHEMA_AND_DATA in order for the data in the table to be permanent. Also, in a similar manner to the disk-based table, we will add a nonclustered Hash index for the primary key and a nonclustered index for the "countryCode" column.

The definition for the memory-optimized table is provided below:

--Memory-Optimized Table Definition CREATE TABLE [dbo].[tblCustomersMemOpt]( [id] [INT] NOT NULL PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 70000000), [code] [CHAR](6) NOT NULL, [firstName] [VARCHAR](100) NOT NULL, [lastName] [VARCHAR](100) NOT NULL, [address1] [VARCHAR](150) NOT NULL, [address2] [VARCHAR](150) NULL, [zipCode] [CHAR](5) NOT NULL, [countryCode] [CHAR](3) NOT NULL INDEX IX_CountryCode NONCLUSTERED, [lastPurchaseDate] [DATE] NOT NULL, [totalPurchasesAmount] [FLOAT] NOT NULL )WITH ( MEMORY_OPTIMIZED = ON , DURABILITY = SCHEMA_AND_DATA ); GO

Another important part of defining a memory-optimized table is the "BUCKET_COUNT" setting. The recommended values for BUCKET_COUNT must be between 1.5 and 2 times the estimated value of unique values. In this example, the unique values (customers) are 50M. Based on that I should set the BUCKET_COUNT to 75M, but I wanted to save some RAM, so I set it to 70M.

The two queries to be executed against the disk-based table are:

Query 1:

--Clear buffer CHECKPOINT; GO DBCC DROPCLEANBUFFERS; GO --Disk-Based Processing: Query 1 SET STATISTICS TIME ON; SELECT TOP 25 countryCode, COUNT(*) AS totalCustomers FROM dbo.tblCustomers GROUP BY countryCode ORDER BY 2 DESC; SET STATISTICS TIME OFF;

Query 2:

--Clear buffer CHECKPOINT; GO DBCC DROPCLEANBUFFERS; GO --Disk-Based Processing: Query 2 SET STATISTICS TIME ON; SELECT TOP 25 countryCode, SUM(totalPurchasesAmount) AS totalSales FROM dbo.tblCustomers GROUP BY countryCode ORDER BY 2 DESC; SET STATISTICS TIME OFF;

The two queries to be executed against the memory-optimized table are:

Query 1:

--Clear buffer CHECKPOINT; GO DBCC DROPCLEANBUFFERS; GO --Memory-Optimized Processing: Query 1 SET STATISTICS TIME ON; SELECT TOP 25 countryCode, COUNT(*) AS totalCustomers FROM dbo.tblCustomersMemOpt GROUP BY countryCode ORDER BY 2 DESC; SET STATISTICS TIME OFF;

Query 2:

--Clear buffer CHECKPOINT; GO DBCC DROPCLEANBUFFERS; GO --Memory-Optimized Processing: Query 2 SET STATISTICS TIME ON; SELECT TOP 25 countryCode, SUM(totalPurchasesAmount) AS totalSales FROM dbo.tblCustomersMemOpt GROUP BY countryCode ORDER BY 2 DESC; SET STATISTICS TIME OFF;

Execution of Query 1 Results for Disk-Based and In-Memory

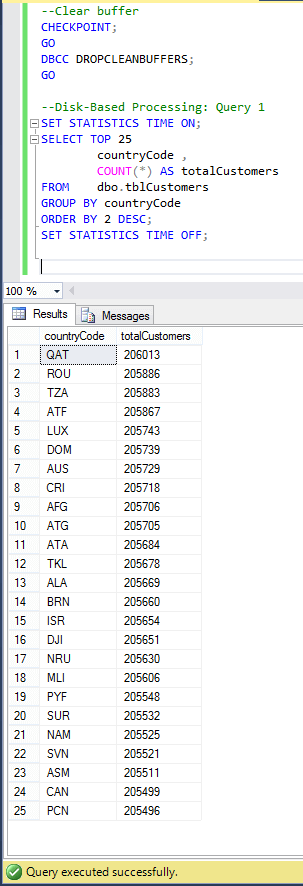

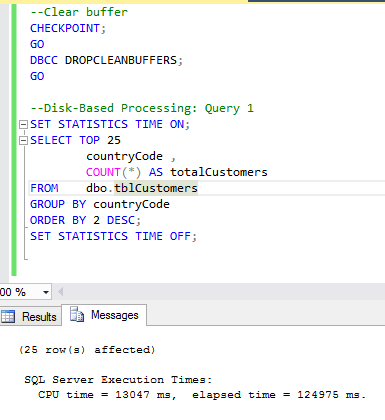

Disk-Based Execution - Query 1 (result and execution time):

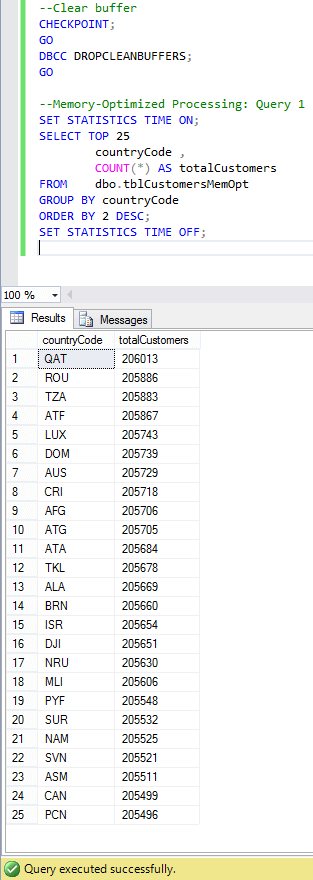

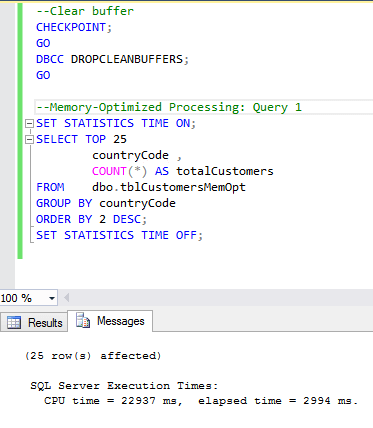

Memory-Optimized Execution - Query 1 (result and execution time)

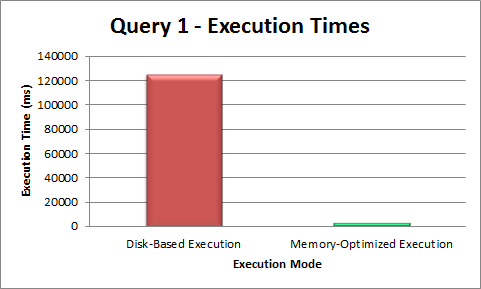

Analysis of Query 1 Results

As you can see from the above screenshots, the results are identical thus proving that both queries produce the same result. If we compare the elapsed execution times, we can see that the disk-based execution's elapsed time was 124975 ms and the memory-optimized execution's elapsed time was 2994 ms. This means that the memory-optimized execution was 41.74 times faster over disk-based execution!

Execution of Query 2 Results for Disk-Based and In-Memory

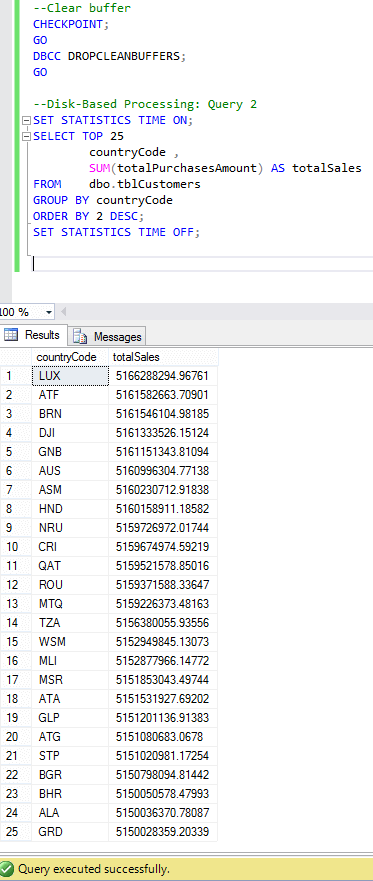

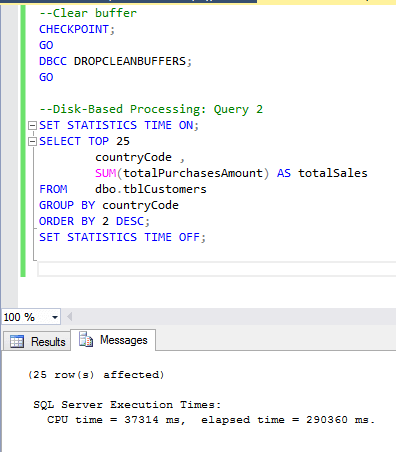

Disk-Based Execution - Query 2 (result and execution time)

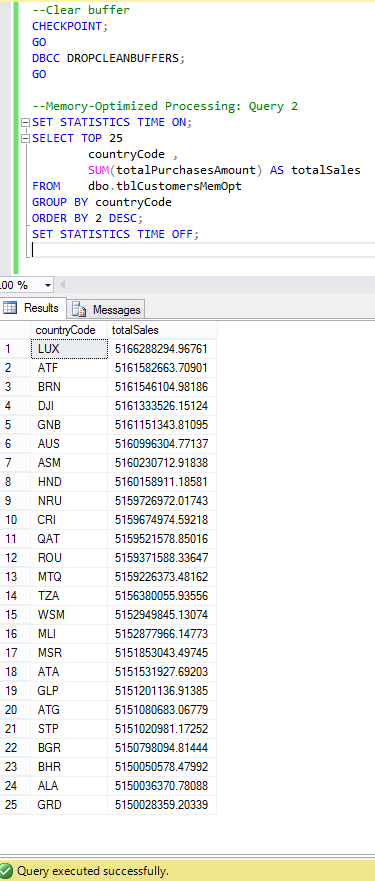

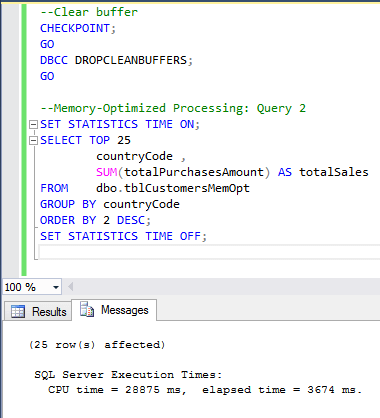

Memory-Optimized Execution - Query 2 (result and execution time)

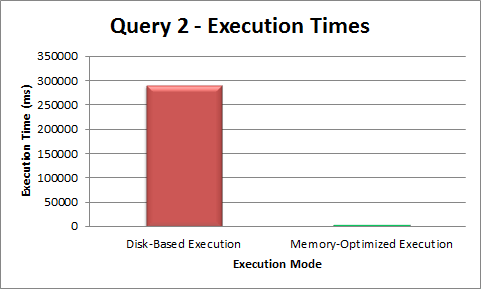

Analysis of Query 2 Results

As you can see from the above screenshots, again, the results are identical thus proving that both queries produce the same result. If we compare the elapsed execution times, we can see that the disk-based processing's elapsed time was 290360 ms and the memory-optimized processing's elapsed time was 3674 ms. This means that the memory-optimized execution was 79 times faster over disk-based execution!

Comparison Graphs

The execution time comparisons for Query 1 and 2 are also plotted on the below graphs:

Conclusion

In-Memory OLTP Engine is a very powerful feature in SQL Server 2014 and later. Especially in SQL Server 2016, there are significant improvements that make it even easier to work with In-Memory OLTP. In this example I have processed 50 million records of data using two queries. The first query executed 41x faster using In-Memory OLTP and the second 79x faster. This simple example gives only a small indication on what you can achieve with In-Memory OLTP in SQL Server.

In-Memory OLTP is seamlessly integrated into SQL Server thus making it easy to use it. Get the Developer Edition of SQL Server 2016 today, it's free. Start experimenting with In-Memory OLTP and you will soon start realizing the performance benefits of using this powerful engine.

Next Steps

Review the following tips and other resources:

- MSDN Article: Reorganize and Rebuild Indexes

- MSSQLTips Article: Assessing the SQL Server In-Memory OLTP Performance Benefits

- Blog Article: In-Memory Optimization in SQL Server: Will my Workload Execute Faster?

- Blog Article: In-Memory OLTP: Comparison of Features/Limitations between SQL Server 2014 and SQL Server 2016

- Check out all of the In-Memory OLTP Tips

About the author

Artemakis Artemiou is a Senior SQL Server and Software Architect, Author, and a former Microsoft Data Platform MVP (2009-2018).

Artemakis Artemiou is a Senior SQL Server and Software Architect, Author, and a former Microsoft Data Platform MVP (2009-2018).This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips