By: Bhavesh Patel | Comments (3) | Related: > Performance Tuning

Problem

In SQL Server we use the UPDATE statement for modifying data. Updating data can be done in various ways such as row by row, one big batch or in several smaller batches. In this tip we will look at the differences to perform UPDATEs using these methods.

Solution

Setup SQL Server Test environment

First, we'll setup a SQL Server database table in order to run UPDATE operations. This will create a table and insert 1 million rows.

USE MASTER

GO

CREATE DATABASE UpdateDB

GO

USE UpdateDB

GO

CREATE Table CustTransaction

(

id INT PRIMARY KEY IDENTITY(1,1),

col1 NVARCHAR(500),

Col2 NVARCHAR(500),

Col3 NVARCHAR(500),

Col4 NVARCHAR(500),

COL5 NVARCHAR(500),

COL6 NVARCHAR(500)

)

GO

INSERT INTO CustTransaction

SELECT

'C1' + replicate ('0',200),

'C2' + replicate ('0',200),

'C3' + replicate ('0',200),

'C4' + replicate ('0',200),

'C5' + replicate ('0',200),

'C6' + replicate ('0',200)

GO 1000000

Basics about SQL Server Updates

To limit the rows that are updated when you execute an UPDATE statement, a WHERE clause can be used. The WHERE clause limits the search conditions that define which rows to update in the base table.

Here are examples in which data is updated based on a filter condition.

UPDATE TABLENAME SET COLUMNNAME = (SomeValue) WHERE COLUMNNAME = (SomeValue)

In addition, the filtering of rows can be done by referencing another table with a join.

UPDATE TABLENAME SET COLUMNNAME = (SomeValue) FROM TABLENAME AS Alias INNER JOIN OTHERTABLE AS OtherAlias ON Alias.columnName = OtherAlias.columnName WHERE OtherAlias.COLUMNNAME = (SomeValue)

In addition, you can update the data in one table based on the value from another table.

UPDATE TABLENAME SET COLUMNNAME = OtherAlias.columnName FROM TABLENAME AS Alias INNER JOIN OTHERTABLE AS OtherAlias ON Alias.columnName = OtherAlias.columnName WHERE OtherAlias.columnName = (SomeValue)

For this tip, we are going to keep things simple and just use one table for the update.

UPDATE Scenarios

I need to update a big SQL Server table and will use the following options:

- Data update by row by row

- Data update in bulk

- Data update in small batches

We need to aware of these difference methods. Which one will be faster. Is there some performance impact due to infrastructure, isolation level, concurrent processes that are running, etc.

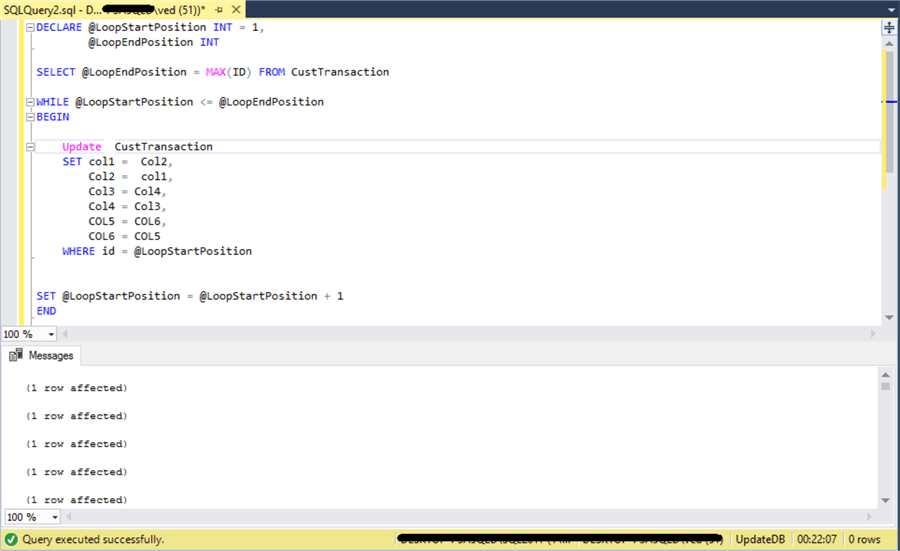

UPDATE Row By Row

I am going to update the table CustTransaction using a row by row method with a loop. I have prepared the sample query and executed as follows.

The CustTransaction table has 1 million rows and this took around 22 minutes to update all rows. Updating row by row is very slow, but less resource intensive and the consistency is at a statement level. If you want all updates to be consistent for the table, you would need to use a transaction, because if one update fails, the rest will succeed. Also, in a transaction when updating row by row with a large volume, it might be possible that deadlocks occur because row iteration in the transaction takes more time.

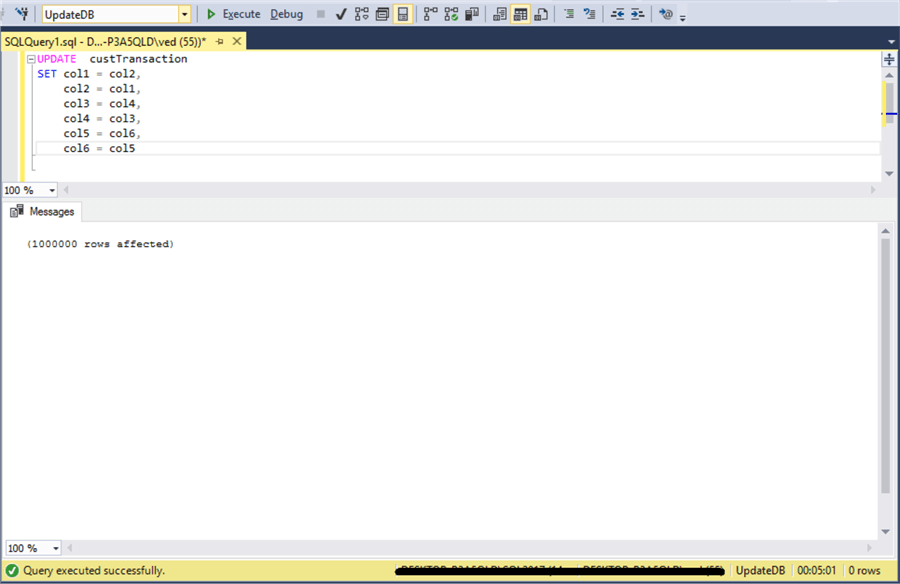

UPDATE in Bulk

I am going to update the CustTransaction table with one command. I have prepared the sample query and executed as follows.

This took around 5 minutes. It’s a faster update than a row by row operation, but this is best used when updating limited rows. A bulk update is an expensive operation in terms of query cost, because it takes more resources for the single update operation. It also takes time for the update to be logged in the transaction log. Also, long running updates can cause blocking issues for other processes.

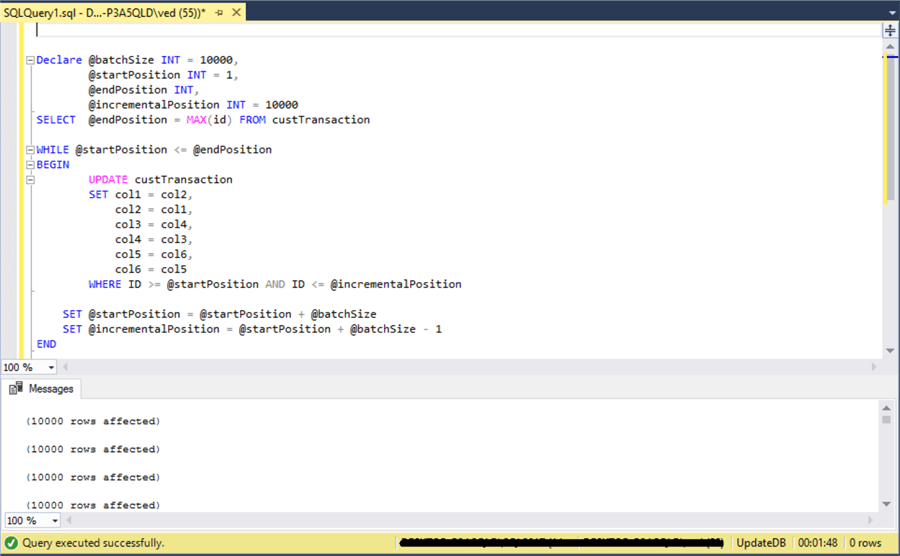

UPDATE in Batches

Another approach is to update the data in smaller batches. So instead of doing row by row updates or one big update, I have divided the data into batches of 10K rows and performed the update operation as shown below.

This completed in less than 2 minutes, which is much faster than the other two approaches. In addition, the transaction size is minimized and also the data logged and resources used are minimized.

I prefer to update data in batches for large volumes of data. You can set your own batch size after analyzing your data and environment to see what makes the most sense. Another option for batching updates is to use the top statement for configuring the batch size.

Things to Consider

- For optimizing update operations you should try to minimize the transaction size.

- Always make sure you use a WHERE clause unless you want to update the entire table.

- Do large updates during low peak usage times to minimize blocking of other processes.

- If triggers are used on a table, it is helpful to disable the triggers prior to doing large updates if possible.

- Make sure you have the correct indexes in place to optimize the update.

- If you have indexes that are not being used for the table, removing them could help optimize the update.

- Understand the Isolation Level because this could directly or indirectly impact query performance.

- Make sure you understand the recovery model for the database. Different recovery models can help reduce the use of the transaction log when updates are done in batches, but understand the differences before making changes.

Next Steps

- Make sure you test in test environments first to understand the impact and to help optimize the process.

- Check out these other SQL Server Performance Tuning Tips.

About the author

Bhavesh Patel is a SQL Server database professional with 10+ years of experience.

Bhavesh Patel is a SQL Server database professional with 10+ years of experience.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips