By: Koen Verbeeck | Comments (8) | Related: > Azure Functions

Problem

We have blob files arriving in a blob container continuously throughout the day. A new file should be read and the contents should be written to a table in an Azure SQL Database, as soon as the file is dropped in the blob container. Is there a way to automate this?

Solution

In the Azure ecosystem there are a number of ways to process files from Azure Blob Storage:

- Azure Logic Apps. With these you can easily automate workflows without writing any code. You can find an example in the tip Transfer Files from SharePoint To Blob Storage with Azure Logic Apps. They are better suited though to process the contents of a file in its whole, and not for writing data to Azure SQL Database. The reason for this is because they will process the file line by line, which is not optimal for performance.

- Azure Data Factory is most likely the easiest tool to copy data into an Azure SQL Database. However, the Copy activity doesn't allow for any transformations on the data. A mapping data flow is a good alternative, but since this runs on top of an Azure Databricks cluster, it might be overkill for a small file. ADF has support for blog triggers.

- Azure Functions are little pieces of event-driven code which run on serverless compute. There are many programming languages available and there's also a template for using a blob trigger. This means the Azure Function will automatically run every time a new file is created in a blob container.

In this tip, we'll give you an example of a simple Azure Function which will read a Json file from a blob container and write its contents to an Azure SQL Database table. Even though there's some code involved, Azure Functions are a flexible and powerful tool and they are useful in your "cloud ETL toolkit". To create an Azure Function, we need the following prerequisites:

- Visual Studio with the Azure Development workload enabled. You can also create an Azure Function directly in the portal, but Visual Studio is preferred when you want easy source control integration.

- An Azure storage account

- An Azure SQL Database

The Sample Data

We're going to load a small Json file holding the top 250 movies. A sample of the data:

{

"TopMovies": [

{

"Index": 1,

"Title": "The Shawshank Redemption"

},

{

"Index": 2,

"Title": "The Godfather"

},

{

"Index": 3,

"Title": "The Godfather: Part II"

},

{

"Index": 4,

"Title": "The Dark Knight"

},

{

"Index": 5,

"Title": "12 Angry Men"

},

{

"Index": 6,

"Title": "Schindler's List"

},

…

}

]

}

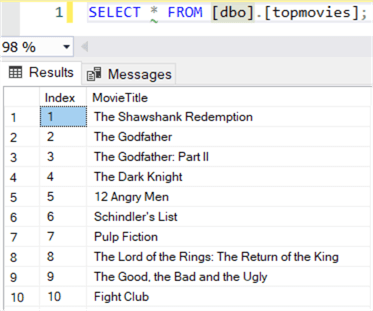

You can download the sample file here. The destination table is created with the following SQL statement:

CREATE TABLE [dbo].[topmovies]( [Index] [BIGINT] NULL, [MovieTitle] [NVARCHAR](500) NULL );

Creating the Azure Function

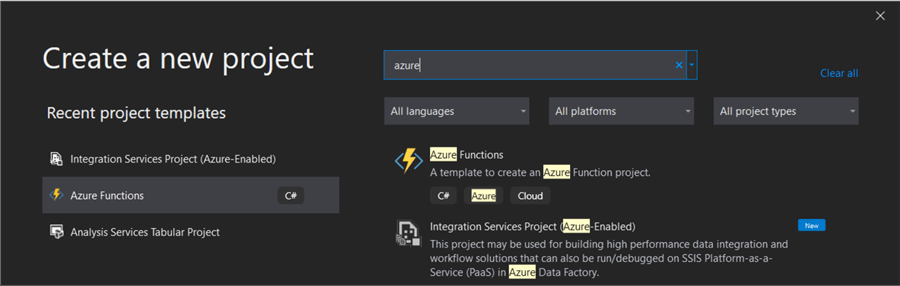

Start Visual Studio and create a new project. Choose Azure Functions from the list of available project templates:

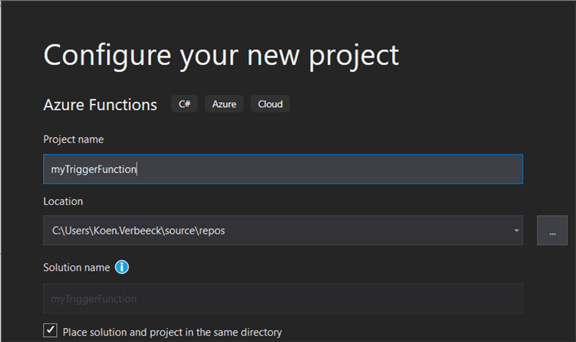

In the next screen, give your project a name and location:

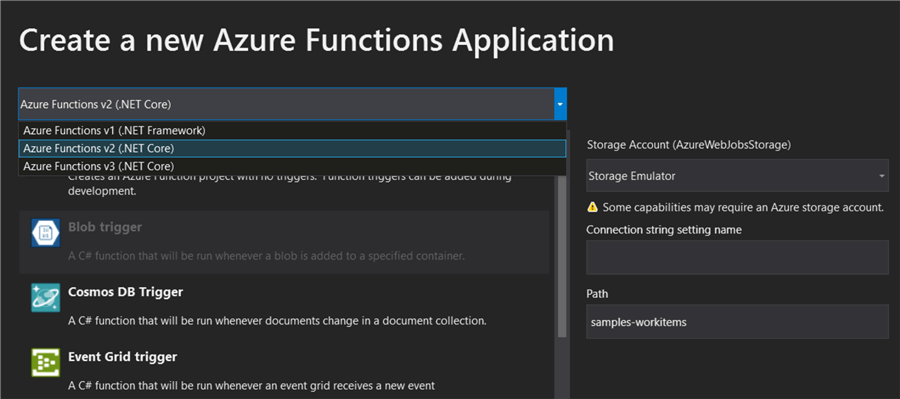

In the following screen, you can configure the Azure Function itself. You can choose the version and the type of trigger.

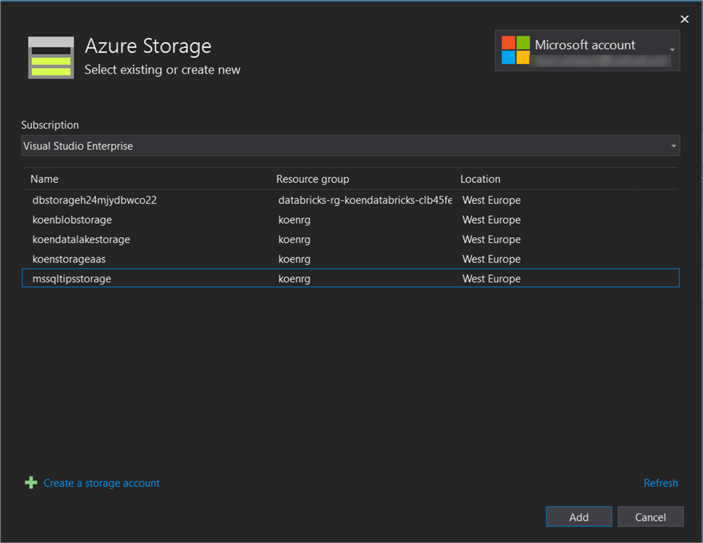

Even though v3 is the newest version, we're choosing v2 as at the time of writing, there were no templates for v3. Choose the Blob trigger template. On the right-hand side, you need to choose a storage account. You can either choose to emulate one, create a new account or choose an existing one:

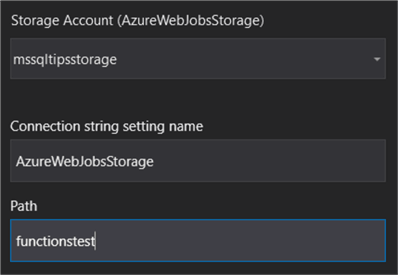

Finally, you need to specify a name for the connection string to your blob storage and the blob container where the files will be dropped:

Once you click on create, the Azure Function will be created using the template for the blob trigger. The following code will be added to a file called Function1.cs.

using System; using System.IO; using Microsoft.Azure.WebJobs; using Microsoft.Azure.WebJobs.Host; using Microsoft.Extensions.Logging; namespace myTriggerFunction { public static class Function1 { [FunctionName("Function1")] public static void Run([BlobTrigger("functionstest/{name}", Connection = "AzureWebJobsStorage")]Stream myBlob, string name, ILogger log) { log.LogInformation($"C# Blob trigger function Processed blob\n Name:{name} \n Size:{myBlob.Length} Bytes"); } } }

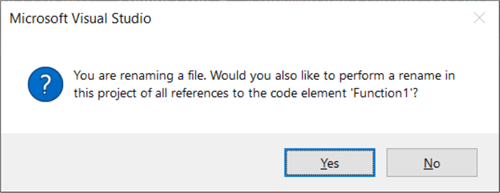

You might want to rename the actual function to something more clear than Function1. If you rename the file, you get the following warning:

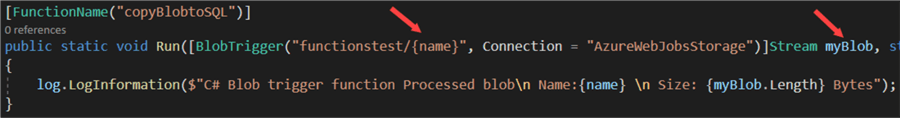

Click yes. In our example, I renamed the function to copyBlobtoSQL. In the boiler plate code, there are two important variables created: name and myBlob.

The name variable will hold the name of the file found in the blob container, while myBlob will hold the actual contents of the file.

Setting up the Configuration

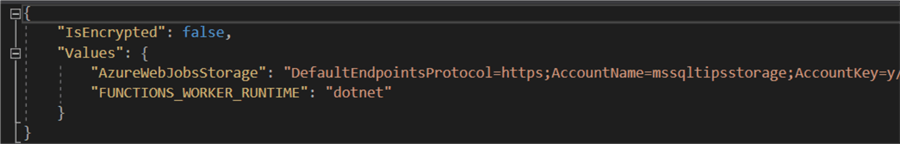

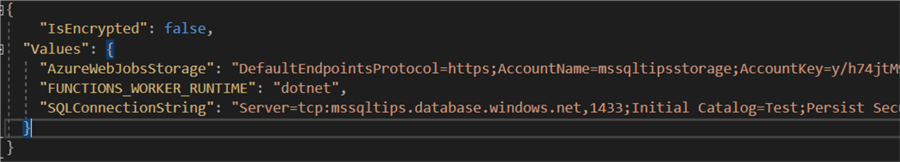

When the Azure Function runs, it needs to know how to connect to the blob container and the Azure SQL Database. Visual Studio has created a file called local.settings.json. In this file, we can already find the connection information for the storage account:

The connection name is AzureWebJobsStorage, which is what we specified in the set-up of the Azure Function. Now we also need to add the database connection information:

An example of a connection string:

"SQLConnectionString": "Server=tcp:mydb.database.windows.net,1433;Initial Catalog=myDB;Persist Security Info=False;User ID=myUser;Password=myPW;MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;"

Keep in mind this config file will not be deployed to Azure, so you'll need to specify the information there as well. It's also a good idea to not include this file in source control.

To make use of this config file, we need to add the following directive:

using Microsoft.Extensions.Configuration;

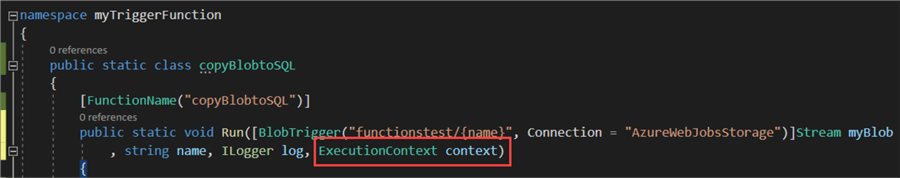

We also need to add an ExecutionContext to the function:

public static void Run([BlobTrigger("functionstest/{name}", Connection = "AzureWebJobsStorage")]Stream myBlob, string name, ILogger log, ExecutionContext context)

In the code itself, we specify where we can find the configuration info and we store the connection information into a variable:

var config = new ConfigurationBuilder()

.SetBasePath(context.FunctionAppDirectory)

.AddJsonFile("local.settings.json", optional: true, reloadOnChange: true)

.AddEnvironmentVariables()

.Build();

string myConnectionString = config["SQLConnectionString"];

Reading the Json data

Before we read in the data, we check first if we're dealing with a Json file, by simply checking the extension of the file:

if (name.EndsWith(".json")){ … }

Then we use a StreamReader object to read in the blob data and store the result into a string.

StreamReader reader = new StreamReader(myBlob);

string jsonContent = reader.ReadToEnd();

An easy way to write data to SQL Server is using SqlBulkCopy class. There's a method WriteToServer, but it has a DataTable as input, not a string. Luckily we can convert the Json data into a table. The method used is found in this StackOverflow question.

public static DataTable Tabulate(string json)

{

var jsonLinq = JObject.Parse(json);

// Find the first array using Linq

var srcArray = jsonLinq.Descendants().Where(d => d is JArray).First();

var trgArray = new JArray();

foreach (JObject row in srcArray.Children<JObject>())

{

var cleanRow = new JObject();

foreach (JProperty column in row.Properties())

{

// Only include JValue types

if (column.Value is JValue)

{

cleanRow.Add(column.Name, column.Value);

}

}

trgArray.Add(cleanRow);

}

return JsonConvert.DeserializeObject<DataTable>(trgArray.ToString());

}

To use all this code, the following using directives are needed:

using Newtonsoft.Json; using Newtonsoft.Json.Linq; using System.Data; using System.Linq;

Now we can convert the Json to a DataTable and write it to our destination table:

var dt = Tabulate(jsonContent);

SqlBulkCopy bulkcopy = new SqlBulkCopy(myConnectionString);

bulkcopy.DestinationTableName = "topmovies";

try

{

bulkcopy.WriteToServer(dt);

}

catch (Exception e)

{

System.Diagnostics.Debug.WriteLine(e.Message.ToString());

}

System.Diagnostics.Debug.WriteLine("Wrote some JSON blob to Azure SQL!");

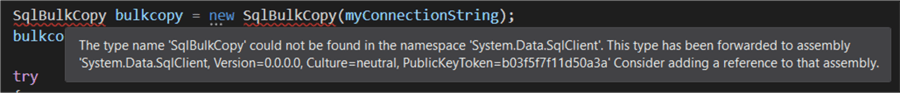

Even though we're using System.Data, it's possible you get the following error:

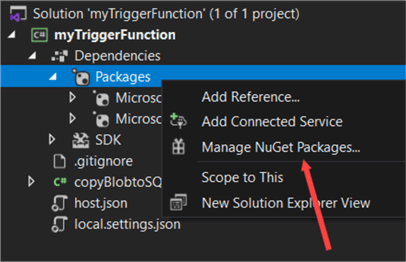

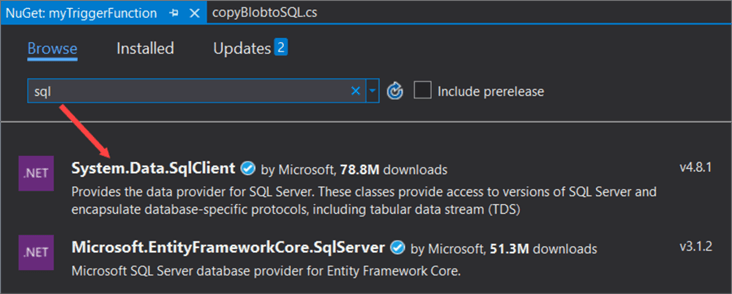

In this case, we'll have to add the System.Data.SqlClient manually to the project. Right-click on Packages in the solution and choose Manage NuGet Packages…

In the NuGet package manager, go to Browse and search for the term sql.

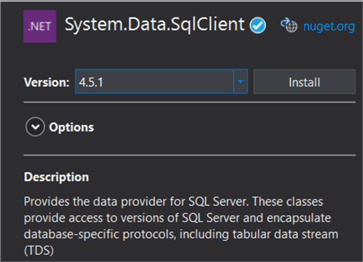

Install the package:

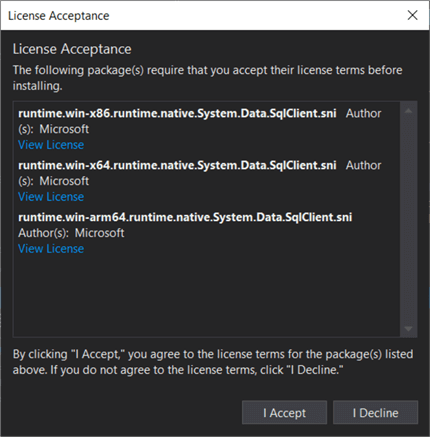

Accept the license:

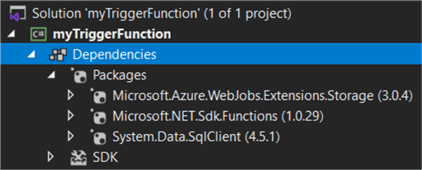

And then the package is added to your project:

The entire code now looks like this:

using System; using System.IO; using Microsoft.Azure.WebJobs; using Microsoft.Azure.WebJobs.Host; using Microsoft.Extensions.Logging; using Microsoft.Extensions.Configuration; using Newtonsoft.Json; using Newtonsoft.Json.Linq; using System.Data; using System.Linq; using System.Data.SqlClient; namespace myTriggerFunction { public static class copyBlobtoSQL { [FunctionName("copyBlobtoSQL")] public static void Run([BlobTrigger("functionstest/{name}", Connection = "AzureWebJobsStorage")]Stream myBlob , string name, ILogger log, ExecutionContext context) { log.LogInformation($"C# Blob trigger function Processed blob\n Name:{name} \n Size:{myBlob.Length} Bytes"); var config = new ConfigurationBuilder() .SetBasePath(context.FunctionAppDirectory) .AddJsonFile("local.settings.json", optional: true, reloadOnChange: true) .AddEnvironmentVariables() .Build(); string myConnectionString = config["SQLConnectionString"]; if (name.EndsWith(".json")) { StreamReader reader = new StreamReader(myBlob); string jsonContent = reader.ReadToEnd(); var dt = Tabulate(jsonContent); SqlBulkCopy bulkcopy = new SqlBulkCopy(myConnectionString); bulkcopy.DestinationTableName = "topmovies"; try { bulkcopy.WriteToServer(dt); } catch (Exception e) { System.Diagnostics.Debug.WriteLine(e.Message.ToString()); } System.Diagnostics.Debug.WriteLine("Wrote some JSON blob to Azure SQL!"); } } public static DataTable Tabulate(string json) { var jsonLinq = JObject.Parse(json); // Find the first array using Linq var srcArray = jsonLinq.Descendants().Where(d => d is JArray).First(); var trgArray = new JArray(); foreach (JObject row in srcArray.Children<JObject>()) { var cleanRow = new JObject(); foreach (JProperty column in row.Properties()) { // Only include JValue types if (column.Value is JValue) { cleanRow.Add(column.Name, column.Value); } } trgArray.Add(cleanRow); } return JsonConvert.DeserializeObject<DataTable>(trgArray.ToString()); } } }

Testing the Azure Function

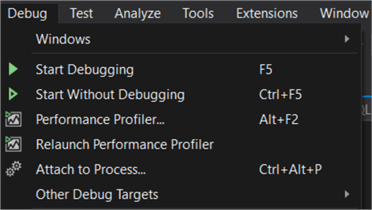

Now it's time to see if the code actually works. In Visual Studio, hit F5 or click on the "play" icon to start debugging.

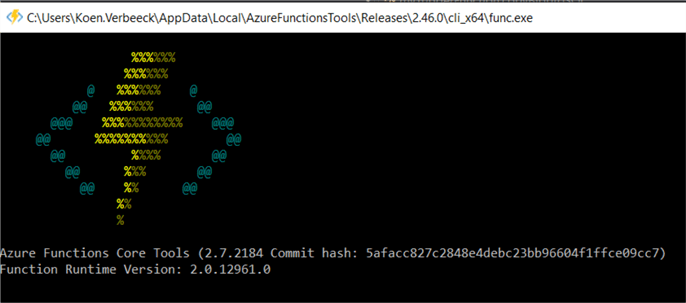

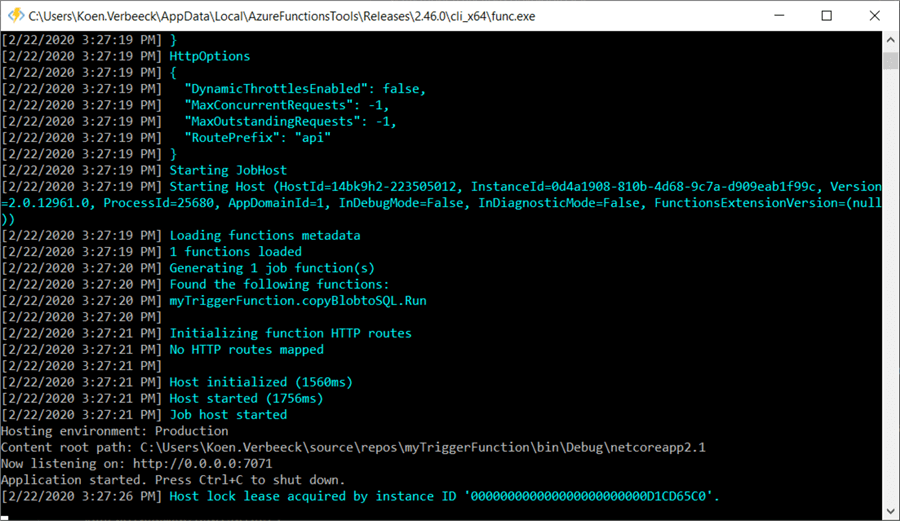

This will open up new command line window. There, a host will be created that will run the Azure Function on your local machine.

When the booting has finished, you can see the application is now waiting for a trigger to arrive:

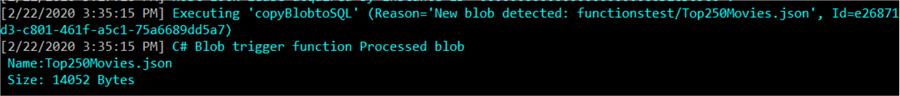

When you drop a file in the blob container (you can use Azure Storage Explorer for this task), you can see it will be picked up by our function:

And the data has been loaded to the Azure SQL Database:

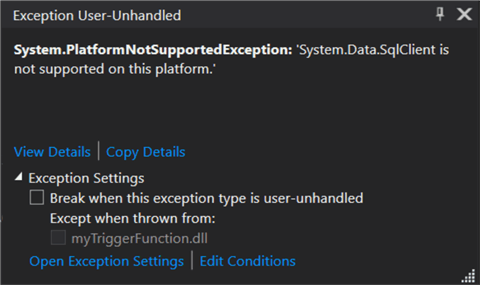

If you receive the following error during debugging, it's likely there's a compatibility issue between the version of the SqlClient package and the .NET environment the Azure Function is using:

System.PlatformNotSupportedException: 'System.Data.SqlClient is not supported on this platform.'

The issue is described in this GitHub post.

The issue can be fixed by downgrading the package to an earlier version. In this tip, version 4.5.1 is used.

If you want more information on publishing the Function to Azure and configuring the connections, you can refer to the tip Create an Azure Function to execute SQL on a Snowflake Database - Part 2, where a similar set-up is used.

Next Steps

- If you want to follow along, you can find the sample data here.

- Other tips on Azure Functions:

- More information on v3 of Azure Functions.

- For more Azure tips, check out these articles.

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips