By: Haroon Ashraf | Comments (7) | Related: 1 | 2 | More > Integration Services Development

Problem

As a Data Warehouse or SQL Server BI (Business Intelligence) Developer, how I can make sure that my SQL Server Integration Services (SSIS) Packages use Azure UTC time to log events rather than my local system time while my packages are built locally, but run against Azure SQL Database during test runs?

Solution

The solution is the use of out-of-the-box UTC time function available in Integration Services Project in the form of T-SQL function as this can help to get away from time difference during logging events and test runs when your local dev machine uses GMT and your staging database is Azure SQL Database is UTC based.

This is the second part of the solution where the first part was all about the basics of a Data Warehouse and setting up an environment to create and run Integration Services Project along with setting up source and destination sample databases and a basic event logging system.

Pre-Requisites

The following things are recommended, if not essential, to understand this tip properly:

- Azure account setup

- Data Warehouse concepts particularly staging environment

- Basics of logging related to Data Warehouse

- Source database WatchesV4

- Staging database WatchesV4DW

- SSIS Package to run staging extract and implement basic logging

Please refer to the first part of the tip series if you would like to go through the details of all the above pre-requisites.

Adding Begin and End Date to Event Logging

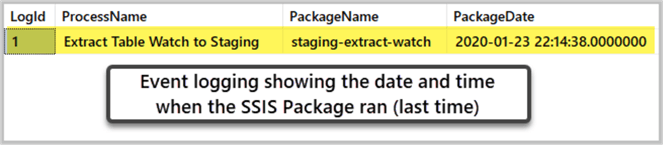

Assuming you have successfully followed the walkthrough in the first part of the tip and now if you look at the existing event logging strategy we adopted (in the first part) it gives us information about when the package ran the last time.

Let's quickly check the logging table by running the following script:

-- (2) View Log table after extract to staging package with simple logging ran SELECT d.LogId ,d.ProcessName ,d.PackageName ,d.PackageDate FROM dbo.DWLog d

We can see the date and time when the SSIS Package ran from the above output, but this information is not enough in the long run, particularly when it comes to logging Data Warehouse events.

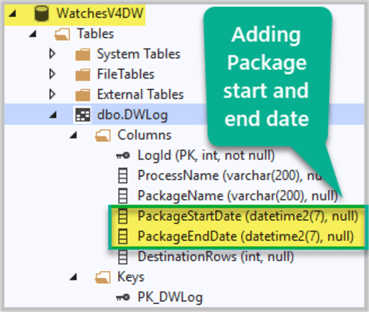

Adding Package Start and End Date Columns

Let's think about two scenarios which cannot be covered by this simple logging:

- What if the package began, but did not finish successfully due to some internal or external errors?

- What if we are interested to know the duration of a staging extract?

To cover the above scenarios, we do need to improve the simple logging by adding Package Start and End Date.

Let's now drop the existing audit table and create a new one with package start and end date as follows:

-- (1) Connect to WatchesV4DW database via SSMS or Azure Portal -- (2) Drop existing log table DROP TABLE DWLog ---- (2) Create mini logging events table (part-2) CREATE TABLE DWLog ( LogId INT IDENTITY(1,1), ProcessName VARCHAR(200), PackageName VARCHAR(200), PackageStartDate DATETIME2, PackageEndDate DATETIME2, DestinationRows INT CONSTRAINT [PK_DWLog] PRIMARY KEY (LogId), )

The table should look as follows:

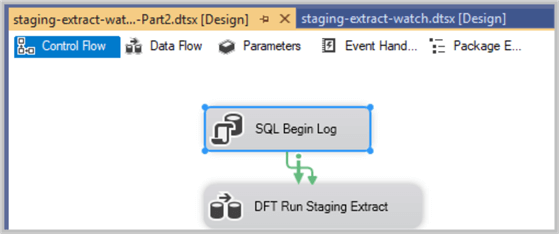

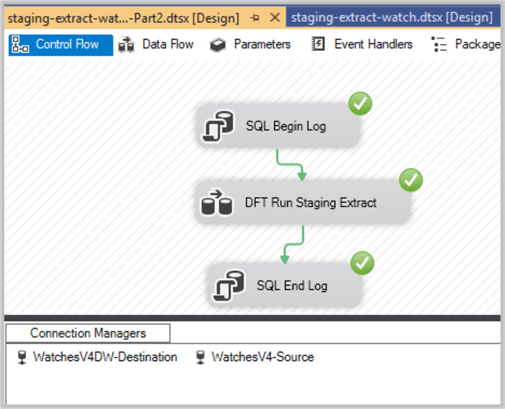

Adding SQL Tasks to SSIS Package

We have to remove the single SQL Task meant to note down Package date (we created earlier on) in the control flow of the staging extract SSIS Package.

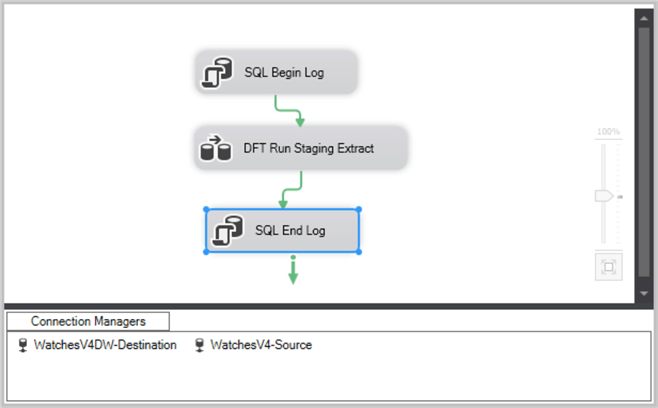

Create the following SQL Tasks before and after the data flow task which runs the staging extract:

- SQL Begin Log (SQL Task)

- SQL End Log (SQL Task)

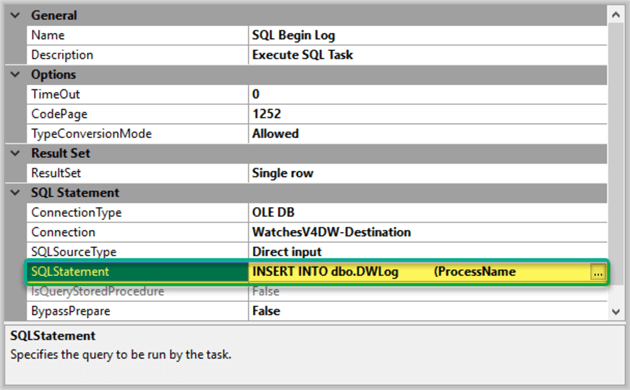

Adding SQL Begin Log Task

Please remove SQL Log Event task and add a new SQL Task called SQL Begin Log:

Double click the task and add the following code as SQL Statement:

INSERT INTO dbo.DWLog

(ProcessName

,PackageName

,PackageStartDate

,PackageEndDate

,DestinationRows)

VALUES

('Extract Table Watch to Staging'

,?

,?

,NULL

,NULL)

SELECT LogId FROM dbo.DWLog WHERE ProcessName='Extract Table Watch to Staging' and PackageStartDate=?

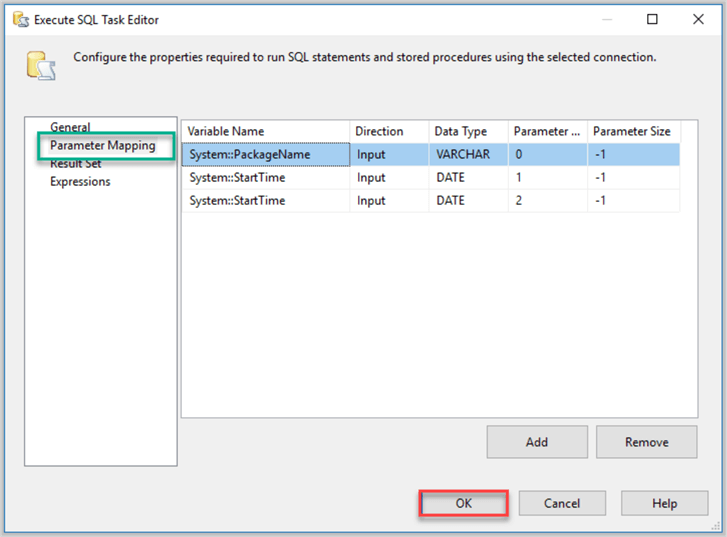

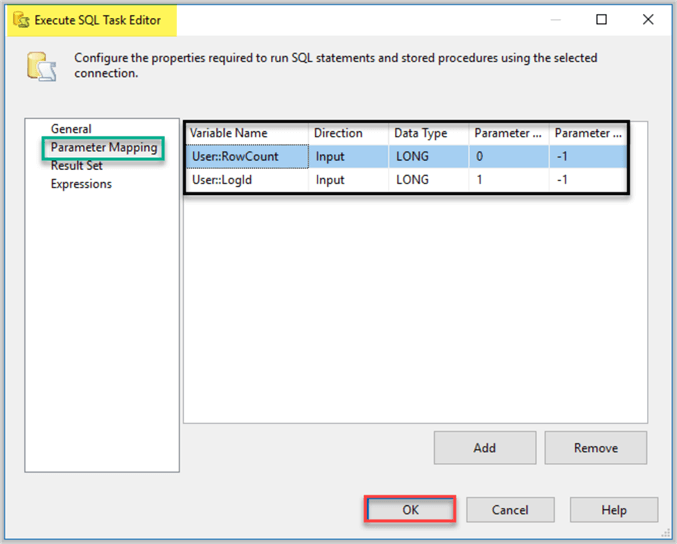

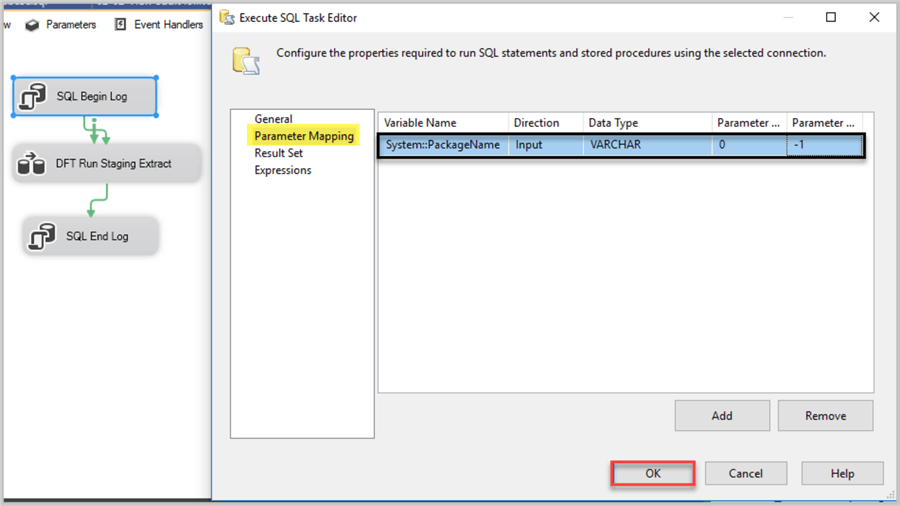

Add the Parameter Mapping as follows:

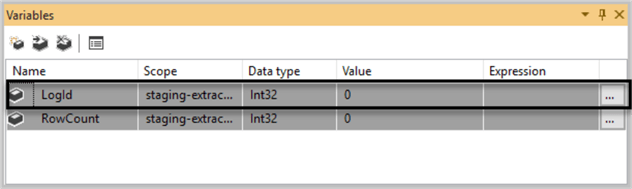

Add a new variable called LogId as follows:

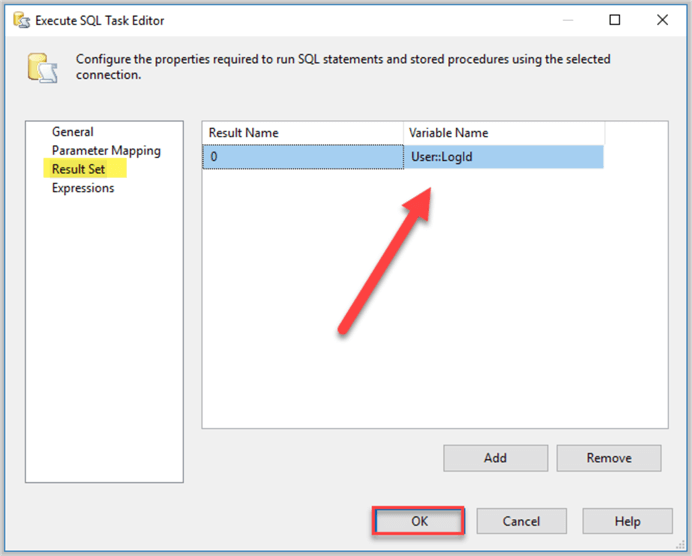

Add the newly created variable LogId in the Variable Name under Result Set table as follows:

Adding SQL End Log Task

Keeping the Data Flow Task the same we have to add SQL End Log task:

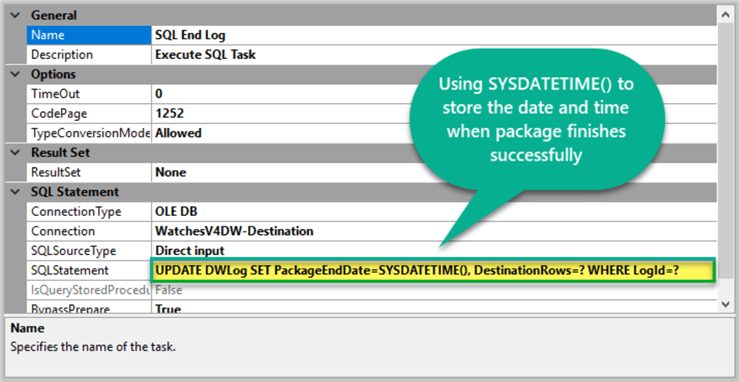

Double click SQL End Log Task in the control flow and add the following T-SQL next to SQL statement under General left navigation bar:

UPDATE DWLog SET PackageEndDate=SYSDATETIME(), DestinationRows=? WHERE LogId=?

Add the Parameter Mapping as shown below:

No need to be add anything in the Result Set.

Using Local System Time for Audit Logging

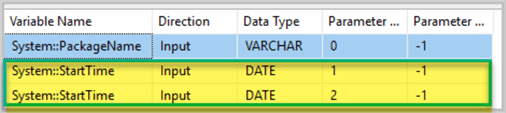

Now if you look closely at the logic of SQL Begin Log task it is obvious we are using built-in System variable to mark the start of the package as shown below:

Next, to mark the finishing time of the package we are using SYSDATETIME() T-SQL function:

Now when the package starts the system variable System::StartTime stores the current date and time from the system and as the package ends T-SQL SYSDATETIME() stores the ending time of the package again from the system, but there is an issue.

For example, if a developer runs this package on his dev machine then the start time of his local machine is going to be taken into account by PackageStartTime and since the database is an Azure SQL Database the end date and time is going to be read from Azure environment.

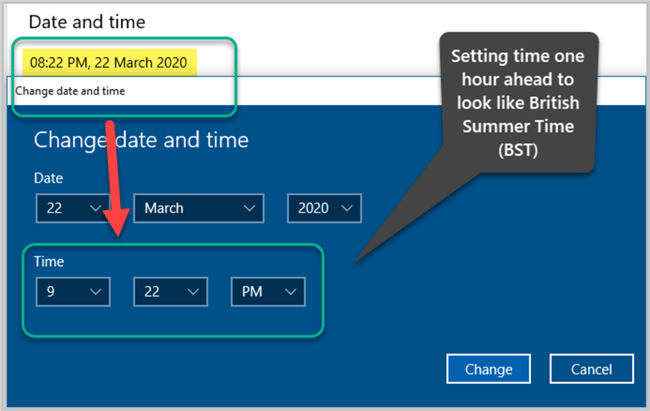

At the time of writing this tip, both the local UK time is the same as UTC so we may not feel any difference when the package runs, however during the summer time (BST) the local UK time is one hour ahead of UTC.

This means if I run this package during British Summer Time (BST) the end date and time (taken from Azure environment which is using UTC) of the package is going to be before the start date and time which is a technical flaw.

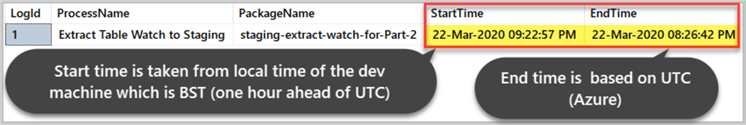

To prove this, I am going to change my current local time (8:22 PM) of the system to one hour ahead (9:22 PM) in order to replicate BST (British Summer Time).

Run the staging extract SSIS Package now:

Let's check the log table:

-- (1) Connect to WatchesV4DW database via SSMS or Azure Portal -- View audit log -- (2) View Log table after setting local time one hour ahead to replicate BST (British Summer Time) SELECT d.LogId ,d.ProcessName ,d.PackageName ,FORMAT(d.PackageStartDate,'dd-MMM-yyyy hh:mm:ss tt') as StartTime ,FORMAT(d.PackageEndDate,'dd-MMM-yyyy hh:mm:ss tt') as EndTime FROM dbo.DWLog d

The output is as follows:

We can clearly see that the Project end time is before the project began (Start Time) which is why using the local time of the dev machine is not going to work all the year because as soon as British Summer Time kicks in, there is going to be a problem as shown above.

Let's run the staging extract (SSIS Package) with the same settings one more time.

Using System UTC for Audit Logging

Now to solve the one hour ahead problem when we it is British Summer Time, we need to replace System::PackageStart variable that stores UTC time using GETUTCDATE() function.

This can be done by replacing the existing SQL Statement used in SQL Begin Log task with the following:

INSERT INTO dbo.DWLog

(ProcessName

,PackageName

,PackageStartDate

,PackageEndDate

,DestinationRows)

VALUES

('Extract Table Watch to Staging'

,?

,GETUTCDATE()

,NULL

,NULL)

SELECT LogId FROM dbo.DWLog WHERE ProcessName='Extract Table Watch to Staging'

ORDER BY LogId DESC

Remove all the other variables in the Parameter Mapping except PackageName:

Now run the staging extract package (making sure we have still replicated British Summer Time which is one hour ahead):

Check the audit log this time as follows:

-- (1) Connect to WatchesV4DW database via SSMS or Azure Portal -- View audit log -- (2) View Log table after setting local time one hour ahead to replicate BST (British Summer Time) SELECT d.LogId ,d.ProcessName ,d.PackageName ,FORMAT(d.PackageStartDate,'dd-MMM-yyyy hh:mm:ss tt') as StartTime ,FORMAT(d.PackageEndDate,'dd-MMM-yyyy hh:mm:ss tt') as EndTime FROM dbo.DWLog d

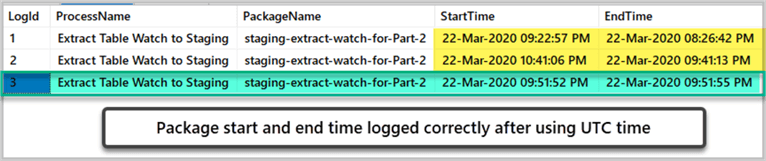

The output is as follows:

Congratulations, you have successfully resolved time difference problem when running the staging extract (SSIS Package) locally on your dev machine by exclusively specifying UTC to mark the start time rather than using the system start time.

Next Steps

- Please try adding duration of the package by adding a new column Duration and calculating it using a date time function to improve your data warehouse audit log strategy

- Please try adding another staging extract package by adding the same logging method and then run both packages by a master package

- Please go through the tip SQL Server Date and Time Functions with Examples to get a few more ideas of how to use date and time

About the author

Haroon Ashraf's interests are Database-Centric Architectures and his expertise includes development, testing, implementation and migration along with Database Life Cycle Management (DLM).

Haroon Ashraf's interests are Database-Centric Architectures and his expertise includes development, testing, implementation and migration along with Database Life Cycle Management (DLM).This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips