Problem

More than before, organizations now aim for a well-defined approach to manage their data storage effectively. Some reasons for this include operational efficiency, cost management, regulatory compliance, and strategic decision-making. In this article, I will describe an approach on data retention management for Lakehouse files to manage data storage when the data exists as files in the Fabric Lakehouse.

Solution

A Fabric Lakehouse has “Tables” and “Files” sections. The Tables section typically holds delta parquet tables, whereas the Files section typically holds files like CSV, JSON, parquet, etc.

Defining the Business Problem

So, assuming we have a Fabric Lakehouse with partitioned data (Year, Month, and Day), which tends to grow over time. This might result in increased operational costs for the organization. Thus, there is a need to define a robust retention policy. Another reason might be that the organization needs to delete certain data after a defined time range to meet regulatory compliance.

In both cases, the following assumptions about the organization’s business problem will be addressed in this article:

- The Lakehouse contains partitioned data for Year, Month, and Day.

- The data retention policy requires data to be deleted from the Lakehouse folder when the data is older than 3 days.

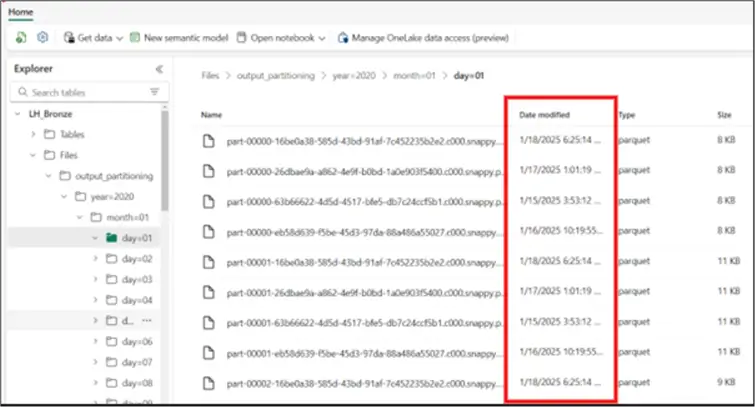

The snapshot below shows the partitioned data within the Lakehouse folders. As you can observe, there are parquet files uploaded up to 5 days ago.

The organizational data retention policy requires that only data uploaded within 3 days should be retained. To achieve this, I will be leveraging Fabric pipelines to ensure the process can be automated as well.

Creating a Fabric Pipeline

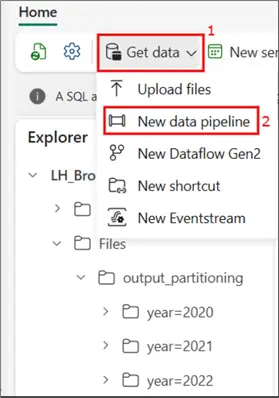

To create a Fabric Pipeline, you simply follow the steps in the image below.

I have named the pipeline “Delete activity”.

Delete Data Activity

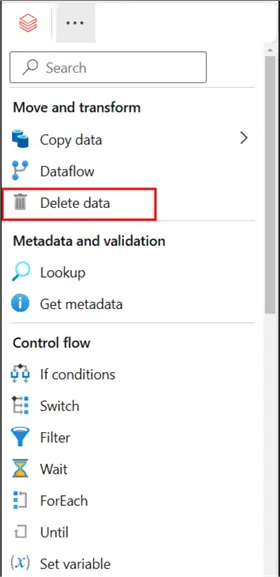

Next, I have selected a Delete data activity, as seen in the image below. You can learn more about the Delete data activity from this Microsoft Fabric documentation.

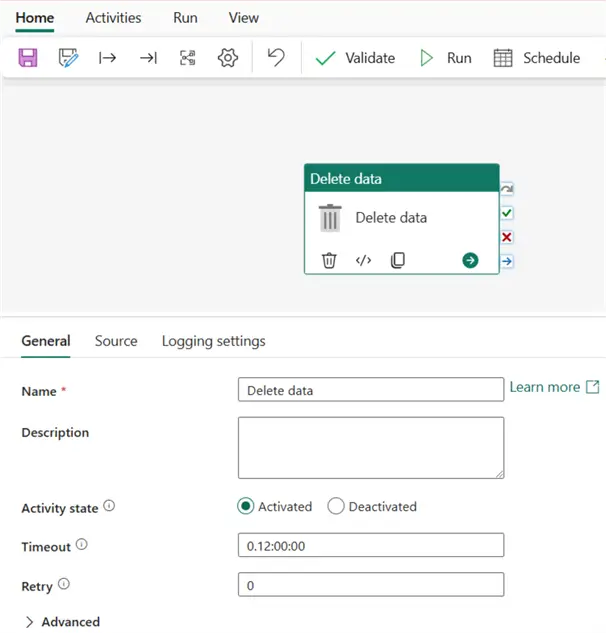

I have not changed anything on the General settings tab apart from the only mandatory entry, Name of the activity, which I have named “Delete data”.

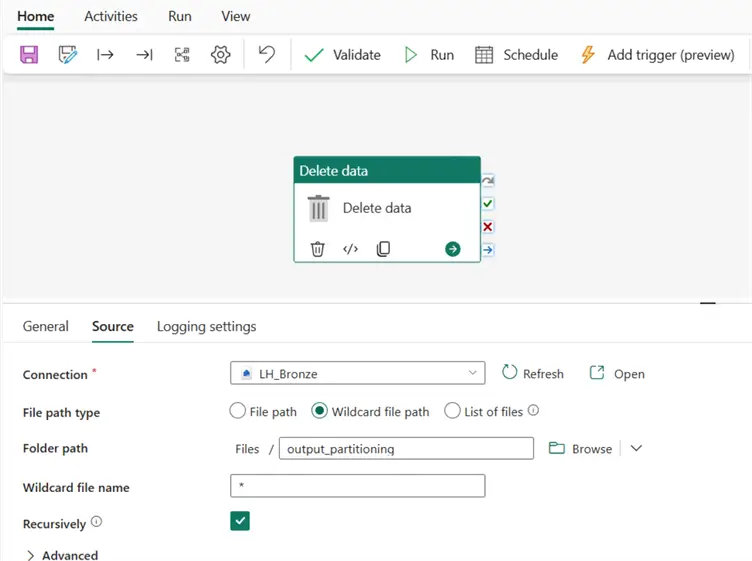

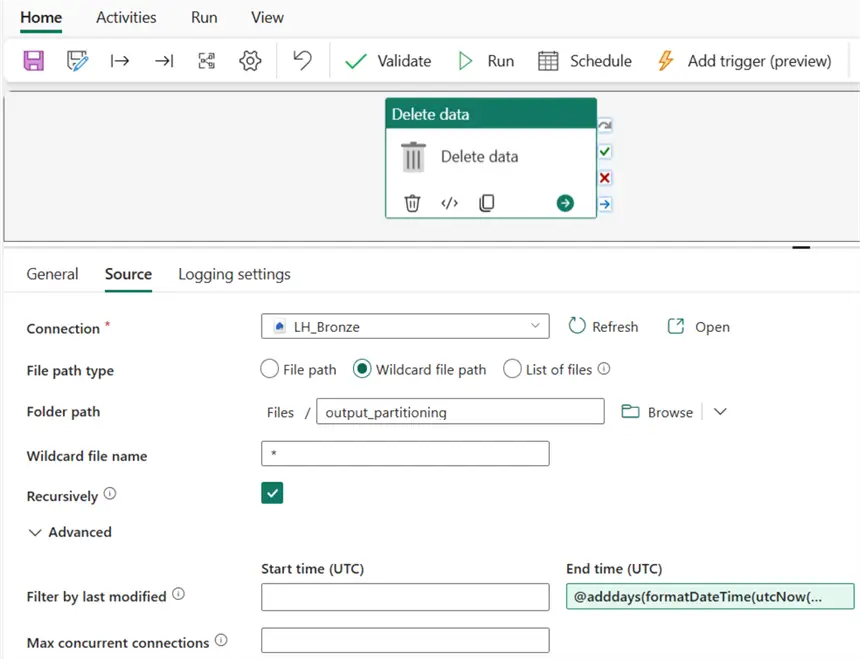

On the Source settings tab, the only mandatory entry is Connection. Here I have connected to the Lakehouse where the partitioned files are saved. I have also selected a Wildcard file path as the File path type. See the image below.

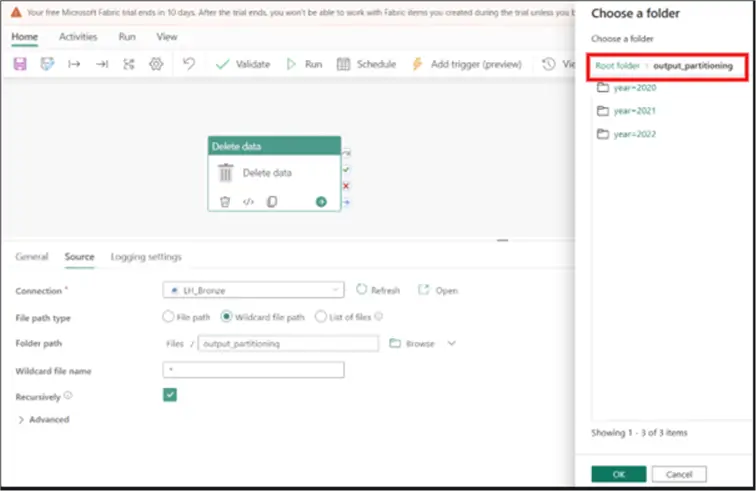

For the Folder path, you can use the root folder to the files in the Lakehouse. See the image below on how to get to the root folder name.

On the Wildcard file name, I am using an asterisk (*), as seen in the image above.

Creating Expression

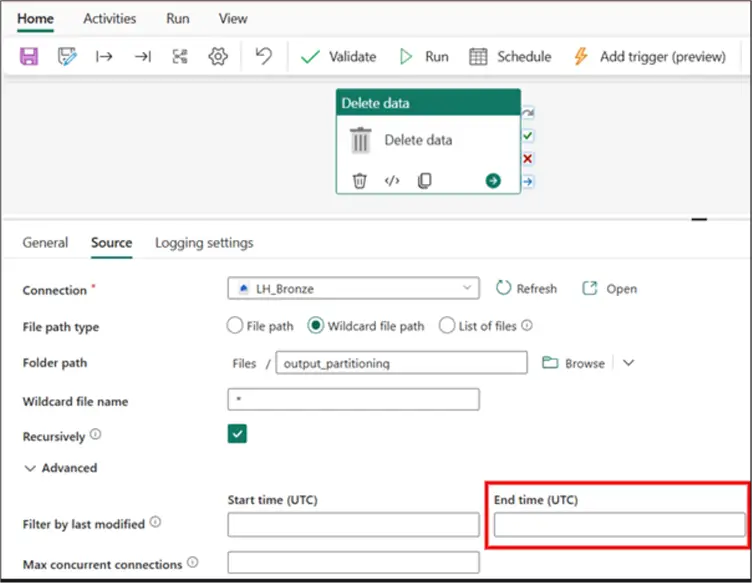

The actual, magic is done within the Advanced section of this Source settings tab. We need to enter the expression below on the “End time (UTC)” well, as seen in the image below.

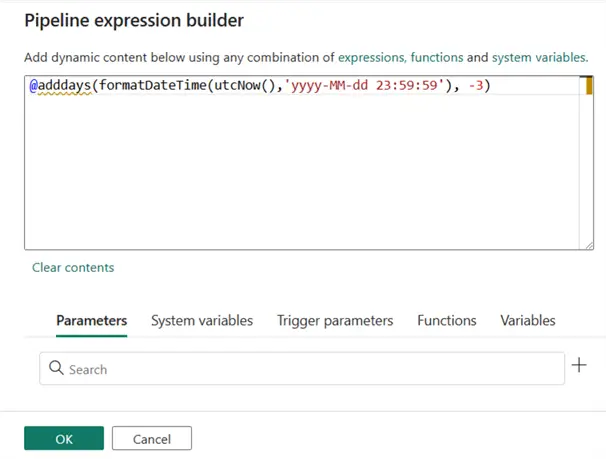

@adddays(formatDateTime(utcNow(),'yyyy-MM-dd 23:59:59'), -3)

To enter the expression, click within the “End time (UTC)” well and select “Add dynamic content …”. A new window will pop up on the right-hand side where you can enter the expression, as seen in the image below.

After clicking OK, you should see the screen like the image below.

Logging Settings

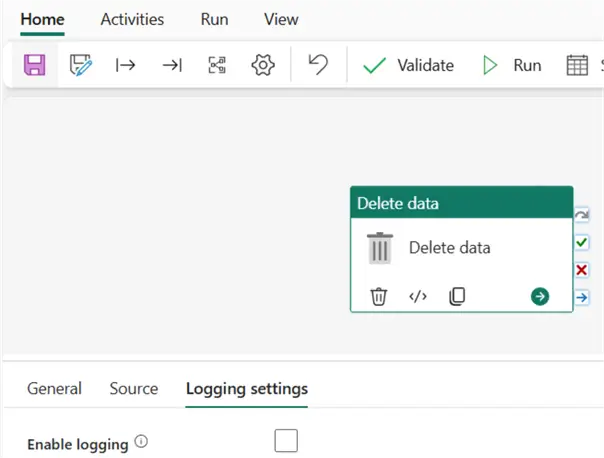

If you have a business requirement to log deleted files, you can do so using the Logging settings tab, as seen in the image below. But, in this article, that’s not the objective, so I haven’t elaborated on that.

How does the expression code snippet work?

In order to understand what the expression is doing (which can help you further in case you need to adapt/modify it to your own use case), here is a brief description of the different components that make up the code snippet as follow:

- utcNow(): Returns the UTC (Coordinated Universal Time) date and time. Learn more about date and time functions in Fabric pipelines.

- formatDateTime(utcNow(),’yyyy-MM-dd 23:59:59′): Formats the current UTC date to a specific format. The yyyy-MM-dd part ensures the date is in the format year-month-day. The 23:59:59 part ensures the time is set to the last second of the day.

- @adddays(…, -3): Takes the formatted date from the previous step and subtracts 3 days (-3 means minus 3) from it.

So, the end product is the return of an exact timestamp of 11:59:59 PM three days ago in UTC. In the case of the business problem for this article, we need to ensure the pipeline activity deletes those files that are older than three days in the Lakehouse root folder.

Thus, if I run this pipeline on 18 January, I expect all files on or before 15 January to be deleted from the folder.

Automate the Process

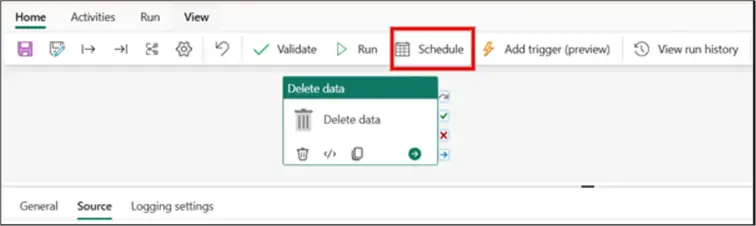

Once the pipeline is configured, you may want to automate the process for efficiency by scheduling the run of the Delete data activity.

Click Schedule, as seen in the image below.

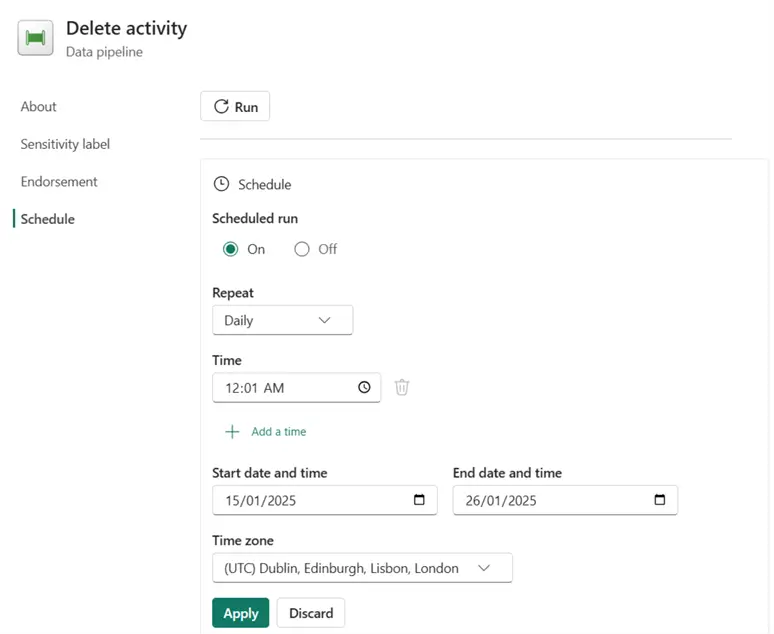

Then, in the window that opens on the right-hand side, enter how often you want the pipeline to run. For example, I have entered that the pipeline should run on a daily repeat, starting at 12:01 AM daily from 15 January 2025 to 26 January 2025. This is just for description purposes; yours might be different. See the image below.

In this article, I have described an approach how to efficiently automate data retention management for Lakehouse files and manage data retention in a Fabric Lakehouse folder. It is important to note that there are ways you can leverage parameters and variables in your pipeline expressions for more efficiency. However, I have written this to make it easier for anyone to adapt it into their business scenario and solve problems easily.

In summary, by managing data storage effectively, organizations can optimize their resources, position themselves for growth and innovation, and ensure compliance. This is the importance of data retention management for Lakehouse files in Microsoft Fabric.

Next Steps

- Read more about Delete data activity in Microsoft Fabric.

- Learn about date and time functions from this Microsoft Fabric documentation.

- Read more on Microsoft Fabric pipelines.

- Read more about how you can manage your deleted files using logging in Microsoft Fabric.

- Additional Microsoft Fabric articles.