By: Ahmad Yaseen | Comments (1) | Related: > SQL Server 2016

Problem

Statistics are small objects that describe the data distribution for tables and indexes in a SQL Server database. These statistics are used by the SQL Server Query Optimizer to determine the best plan to execute a query. Because of this importance, these statistics should be up to date in order to get the best performance when executing a query. The mechanism that automatically updates the statistics in SQL Server depends on the number of rows that are modified in a table which exceeds a specific threshold. The downside is that the threshold is very high for tables with a large number of rows which leads to updates occurring less frequently which can cause performance issues.

Solution

In order to trigger the auto update statistics feature in SQL Server, the number of updated rows should exceed 20% of the table rows. In the case of large tables, such as a table with 1 million rows, more than 200,000 records need to be updated in order to update the table statistics. This can lead to performance problems due to bad query plans created by the query optimizer that still uses the old statistics.

To overcome this issue, trace flag 2371 was introduced in SQL Server 2008 R2 SP1 that overrides the default threshold of the auto update statistics feature for tables with more than 25,000 rows. If you turn on this trace flag, the new threshold to update the statistics will be lower for tables with a high number of rows. In the previous example of a 1 million row table, there is no longer the need to update 200,000 rows to update the statistics on the table if trace flag 2371 is activated.

In SQL Server 2016, you don't need to turn on trace flag 2371 because this new method is used by default by the SQL Server engine when determining when to update the statistics.

Let's walk through a small demo to see how the auto update statistics behaves.

SQL Server 2014 Compatibility Mode Database Test

We will create a simple table in the MSSQLTipsDemo database and fill it with 60,000 rows:

USE [MSSQLTipsDemo]

GO

-- set to SQL 2014

ALTER DATABASE [MSSQLTipsDemo] SET COMPATIBILITY_LEVEL = 120

GO

CREATE TABLE [dbo].[StatisticsDemo](

[ID] [int] IDENTITY(1,1) NOT NULL,

[Name] [nvarchar](50) NULL

) ON [PRIMARY]

GO

DECLARE @NumberOfRows INT

SET @NumberOfRows = 60000;

WHILE (@NumberOfRows > 0)

BEGIN

INSERT INTO [dbo].[StatisticsDemo]([Name]) VALUES ('AABBCC')

SET @NumberOfRows = @NumberOfRows - 1

END

In order to create the statistics on the table, we will run a heavy SELECT statement as below:

SELECT * FROM StatisticsDemo WHERE ID>1000

From SQL Server Management Studio (SSMS), expand the table node from the Object Explorer, then expand the Statistics node to view the newly created statistics. The statistics that start with _WA means that it is automatically created using the AUTO_CREATE_STATISTICS feature:

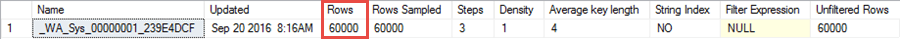

The DBCC Show_Statistics command can be used to show the statistics details, such as the updated date and the number of rows in the table.

To use, you just need to specify the table name and statistic name as follows:

DBCC Show_Statistics('StatisticsDemo','_WA_Sys_00000001_27F8EE98') with stat_header

As previously mentioned, to trigger the auto update statistics feature, more than 20% of the table rows should be updated. In our case, the table has 60,000 rows, so more than 12,000 records should be modified to update the statistics, but using SQL Server 2016 the database engine will override this rule for large tables.

If we query the compatibility_level property of our database from the sys.databases system table we can see the current compatibility level:

SELECT compatibility_level FROM sys.databases WHERE name = 'MSSQLTipsDemo';

The result is (120), which is SQL Server 2014 compatibility:

Although we are using SQL Server 2016 version, we need to make sure the compatibility level of the database is (130) which is the default compatibility level for newly created databases in SQL Server 2016. But if the SQL Server instance is upgraded from an old version or the database is restored from a backup taken from earlier SQL Server version, the compatibility level will not be (130) and the new change for the auto update statistics will not be applicable.

We will delete 10,000 records from our test table, which is less than the 12,000 threshold (20% of 60K):

DELETE FROM StatisticsDemo WHERE ID <=10000

SELECT * FROM StatisticsDemo WHERE ID>1000

DBCC Show_Statistics('StatisticsDemo','_WA_Sys_00000001_27F8EE98') with stat_header

Querying the statistics again, the statistics do not update and the number of rows did not change:

SQL Server 2016 Compatibility Mode Database Test

We will change the compatibility level of the database to (130) using the below ALTER DATABASE statement and drop and recreate the table.

USE [MSSQLTipsDemo]

GO

ALTER DATABASE [MSSQLTipsDemo] SET COMPATIBILITY_LEVEL = 130

GO

DROP TABLE [dbo].[StatisticsDemo]

GO

CREATE TABLE [dbo].[StatisticsDemo](

[ID] [int] IDENTITY(1,1) NOT NULL,

[Name] [nvarchar](50) NULL

) ON [PRIMARY]

GO

DECLARE @NumberOfRows INT

SET @NumberOfRows = 60000;

WHILE (@NumberOfRows > 0)

BEGIN

INSERT INTO [dbo].[StatisticsDemo]([Name]) VALUES ('AABBCC')

SET @NumberOfRows = @NumberOfRows - 1

END

The compatibility level can also be changed in SSMS from the Options tab of the Database Properties:

Then run the statements again which will refresh the statistics:

SELECT * FROM StatisticsDemo WHERE ID>1000

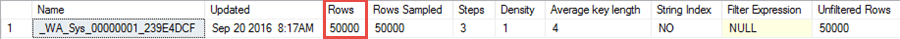

DBCC Show_Statistics('StatisticsDemo','_WA_Sys_00000001_239E4DCF') with stat_header

Here are the stats showing 60,000 rows.

If we run these statements again, we can see the stats will update.

DELETE FROM StatisticsDemo WHERE ID <=10000

SELECT * FROM StatisticsDemo WHERE ID>1000

DBCC Show_Statistics('StatisticsDemo','__WA_Sys_00000001_239E4DCF') with stat_header

The statistics are now updated and the number of rows has changed even though 10,000 rows is under the default 20% threshold and we didn't use trace flag 2371 in our demo.

It is clear from the results that the threshold becomes dynamic in SQL Server 2016 and the threshold is decreased with increasing number of rows in a table. In this way the statistics will be updated more frequently and will guarantee better query performance.

Next Steps

- Read more about What are the SQL Server _WA_Sys... statistics?

- Check out Execute UPDATE STATISTICS for all SQL Server Databases

- Check also How to Identify Useful SQL Server Table Statistics

About the author

Ahmad Yaseen is a SQL Server DBA with a bachelorís degree in computer engineering as well as .NET development experience.

Ahmad Yaseen is a SQL Server DBA with a bachelorís degree in computer engineering as well as .NET development experience.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips