By: Koen Verbeeck | Updated: 2022-04-07 | Comments (6) | Related: > Azure SQL Database

Problem

We are thinking about building a new data warehouse. It's not gigantic in size – a couple of gigabytes, certainly not terabytes – and we would like to build it in the cloud. We are hesitating if we should choose Azure SQL Database or Snowflake cloud data warehouse offering. Any pointers?

Solution

For readers not familiar with Snowflake: it's a data warehouse vendor and its database solution is a cloud data warehouse offering which is available on Azure, but also on AWS and the Google Cloud platform. You can learn more about Snowflake in this tutorial, or you can read the tip Why Choose Snowflake to discover some of the advantages of the Snowflake platform. You can also read the tip Snowflake vs SQL Server, where a comparison has already been made between Snowflake and an on-premises installation of SQL Server (or SQL Server in an Azure Virtual Machine).

In this tip specifically, we will take a look at Azure SQL DB. On-premises SQL Server, Azure SQL Managed Instance or Azure Synapse Analytics are out of scope.

Size of the Data Warehouse

A first indicator if you should choose for Snowflake rather than an Azure SQL DB instance, is the size of the data warehouse. Azure SQL DB is an OLTP database in its core and it's not designed to process very large volumes of data with analytical queries. In the general purpose and business critical tiers of Azure SQL DB, storage is limited to 4TB. If you need more, you can go for the hyperscale service tier which can go up to 100TB. However, the storage costs aren't cheap: for my region, it's $0.119 per GB per month. This is $119 per TB per month. Snowflake has prices ranging between $25 and $40 per TB/month (depending if you use pre-allocated storage or on-demand) and Azure Synapse Analytics has a storage cost of about $23 per TB/month. This all means hyperscale is a rather expensive offering for storing your data. Like the regular Azure SQL DB, it's main purpose is handling OLTP workloads, maybe hybrid workloads (where you combine OLTP with some analytical workloads).

For very large data warehouses, Snowflake seems a better option since it can scale up to the petabyte range and the storage costs are considerably cheaper. If you want to stay inside the Microsoft Data Platform, Azure Synapse Analytics is a better option to process large amounts of data than Azure SQL DB.

Performance

If you would load a data set in both Snowflake and Azure SQL DB - and the data set is suited for Azure SQL DB, for example a couple of million rows – and you would run some queries, chances are Snowflake will outperform Azure SQL DB. Snowflake is built to run large analytical queries, while Azure SQL DB is not. Azure SQL DB's main purpose is to run OLTP workloads. An important performance impact is for example that Azure SQL DB always runs in full recovery model to enable point-in-time restores. This cannot be set to bulk-logged or simple recovery model. In other words, all DML queries (INSERT, UPDATE, DELETE and MERGE) are fully logged. Because of this, the performance of IO operations will be limited. It's possible that when you migrate an on-premises SQL Server database to Azure SQL DB, you get slower query executions times. You cannot fine tune an Azure SQL DB like you can with a physical box.

You can obviously improve performance in Azure SQL DB by having appropriate indexes and decent data modelling, or even by using columnstore indexes. Keep in mind those are not available in all tiers of Azure SQL DB (S3 and up). Eventually you'll hit some limit though and the only option is to scale up to a higher tier.

Snowflake is faster out-of-the-box, but you have limited tuning options. There are no indexes you can create, so if a query is slow you'll need to rewrite it (for example to use intermediate steps by dumping data in a temp table) or you can scale up to a higher tier of computing power.

This article does a performance comparison of Snowflake and Azure SQL DB: Testing Snowflake vs Azure.

Scaling

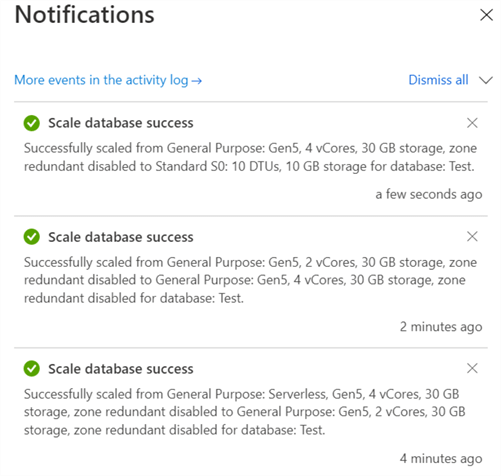

Both databases can scale resources up or down. In Azure SQL DB, this depends on the purchasing model. When you use the vCore-based purchasing model, you can scale the number of vCores, the memory, and the amount and speed of storage. In the DTU-based purchasing model, you can scale only the DTUs, which are a mix of compute, memory and IO resources. Microsoft refers to the scalability of both purchasing models as dynamic scalability. This means you can manually adjust the scale of the service. This can be done in the portal, and can take a bit of time and cause for some minimal downtime. You can switch between purchase models when scaling:

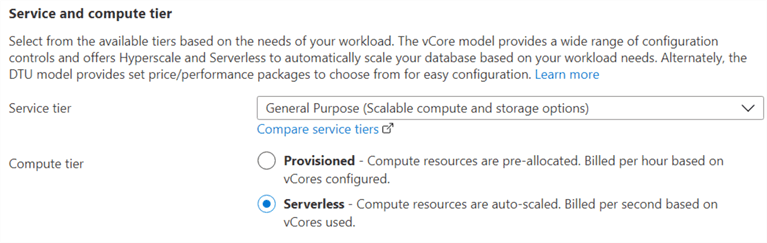

Both the DTU and the vCore-based purchasing model use pre-allocated (provisioned) resources. You can also choose the serverless option for the compute tier when you use vCores:

The advantages of using Serverless over provisioned are:

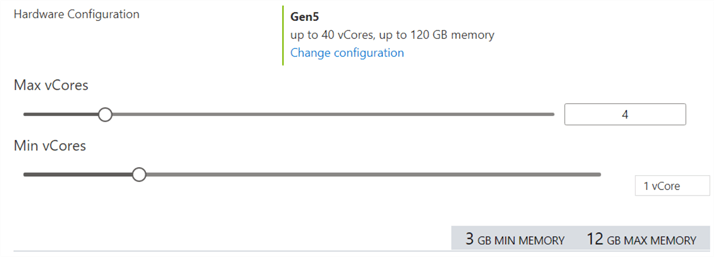

- autoscale of the service. Depending on the workload, the database engine will scale the number of vCores. You can set a maximum amount and a minimum amount:

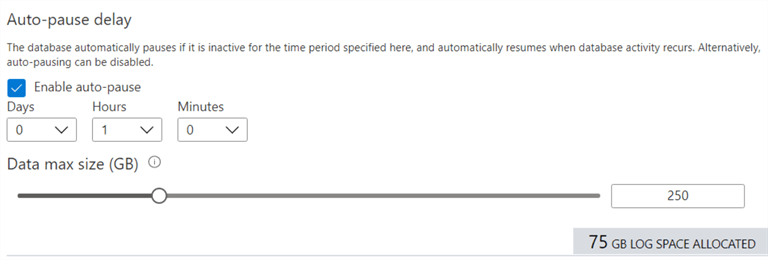

- autopause. When the service is not used for a specified amount of time, the database engine will pause itself. No costs will be accrued when the service is paused.

There are some downsides though: when the service is paused, the first connection will always fail. It has also been reported that it might take some time to auto scale up after a pause if lots of CPU is being consumed.

You can script out the scaling of Azure SQL DB. You can use the ALTER DATABASE command, as demonstrated in the tip Auto Scale Azure SQL DB using Azure Logic Apps. Or you can use PowerShell, as demonstrated in this blog post: How-to auto-scale Azure SQL Databases.

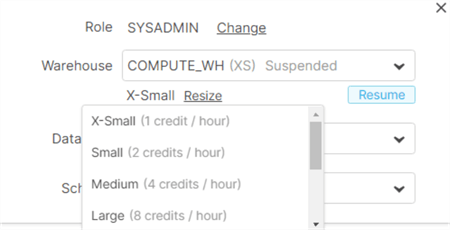

Snowflake can scale its virtual warehouses up and down. The scaling is done transparently and almost instantaneous (in contrast with Azure SQL DB where it can take a minute or more).

Warehouses in Snowflake can scale up and down, but they can also be clustered into a multi-cluster warehouse. In this case you're scaling for concurrency. Autoscale can be configured for a multi-cluster warehouse. For example, if you have a cluster consisting of 3 warehouses and the load increases, Snowflake can scale the cluster to 4 warehouses to handle the extra load. Snowflake can however not autoscale the actual size of a single warehouse. You can scale a warehouse in the user interface, or by using the ALTER WAREHOUSE command.

A warehouse can automatically suspend (pause) and resume. Like scaling up and down, this is also transparent and instantaneous. Unlike Azure SQL DB, Snowflake doesn't lose a connection when a warehouse needs to resume.

Cost

In both cases, the calculation of the cost per month is straight forward:

- For Snowflake, you pay a certain amount of dollars per terabyte. The price depends on the decision to go with pre-allocated storage (which is cheaper) and storage allocated on-the-fly (which costs more). In dollars, prices are somewhere between $23 and $40. You also pay for the amount of time the warehouses have been running. You pay for the full first minute, and after that for every second the warehouse is running. The price of the compute depends on which edition of Snowflake you're using. There are also other services that might inflict some costs. For more info, check out the pricing guide.

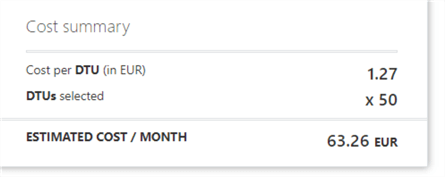

- For Azure SQL DB, the cost is more fixed (except if you're using serverless). You pay for the tier the database is in. The higher the DTU or the vCores, the more amount you pay per month.

An example for DTUs:

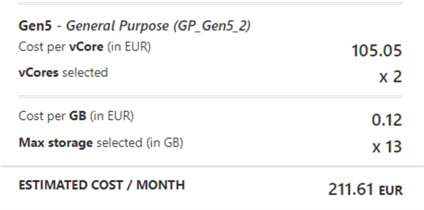

For vCores:

The minimum amount of vCores is two, which already results in a cost substantially higher than an Azure SQL DB in a standard tier. The cost for storage is separate though. Azure SQL DB is a costly method for storing data, over three times as expensive as Snowflake. For very large volumes of data, Azure Data Lake Storage might be a better option if the Azure data platform is preferred.

Conclusion

Snowflake has many advantages. It performs really well out of the box, can handle unstructured data, has a lower price point for storage and it scales up and down instantaneously. However, if the database is queried often, compute costs can rise to a significant amount. There are methods to save on costs, for example you can make sure virtual warehouses are paused when they're not used, you can consolidate virtual warehouses to make sure there's no underutilization or you can pull the data into a Power BI dataset so there's no need to query the actual data warehouse.

Azure SQL DB can't handle very large volumes of data as well as Snowflake, and there are some performance issues due to the logging bottleneck. However, for smaller data sets, Azure SQL DB can still be an attractive option. For example, if data is loaded only once a day, you can just load it in Azure SQL DB, model the data and then load all the data into a Power BI dataset. This means the database is only active during the data refresh. There are some options to save even more on costs:

- You can use serverless, if you can work around the "first connection failing" problem

- You can scale down the database when it is not used during the day.

Next Steps

- Check out these other Snowflake resources:

- And these related articles:

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-04-07