By: Koen Verbeeck | Updated: 2022-06-06 | Comments (3) | Related: More > Import and Export

Problem

I've been working with data warehouses and ETL tools like Integration Services for some time now. Recently I saw the term "ELT" in a Business Intelligence (BI) presentation. What does it mean exactly and why is it different from the traditional ETL data integration processes?

Solution

The term ETL has been around for quite some time now, and it is an acronym for Extract, Transform, and Load. It's the process where raw data is extracted from one or more sources, the extracted data is subsequently shaped into a desired format and calculations are performed as data transformations, to be finally loaded into a target system. The term is explained in detail in the tip What is ETL?, which is recommended reading before you read this tip.

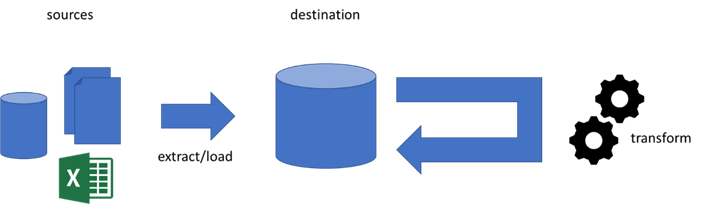

In the past years, there's been a push to "ELT", where the L and the T are reversed: Extract, Load, and Transform. Basically, this means we're extracting the data from the sources and writing them as-is (or with minimal transformations) to the destination (which can be a data lake, a database, or a NoSQL data store). Once the data is loaded, it's transformed using some compute resource.

The reason why a distinction is often made between an ETL process and an ELT process is the intent. With ETL, you use a tool to extract the data, run the data through the tool and perform certain transformations on the data, and finally load the data to the destination, all in the same tool. With ELT, some method/tool is used to extract the data but instead of manipulating the data it's directly loaded into some staging area. The "ETL tool" plays a minimal role in the whole data pipeline since the transformations are moved to the destination. Typically, ELT processes involve more code, while ETL processes developed in ETL tools are more low-code and visual in nature.

Example Use Case

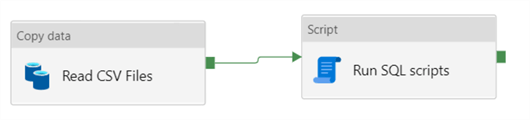

Let's give an example of why one would implement an ELT data pipeline. Suppose you're using Azure Data Factory (ADF) to load data from a bunch of CSV files into an Azure SQL database. In itself, ADF doesn't have many transformation capabilities. The Copy data activity will pick up the data from the CSV files and write it to a relation table, but that's about the only transformation it can do. The Copy data activity can also convert JSON to CSV for example, or unzip files, but it doesn't have the capability to do calculations.

If you want to build your data pipeline in ADF, you're going to use the Copy data activity to load the data into the database and then it will execute a bunch of SQL scripts that will read the data from the staging tables, perform calculations on it and then write it to other tables in the database.

This means most of the business logic, such as cleaning up data, calculating new columns, checking integrity, and loading data into dimensions and fact tables for example are all done in SQL. This is a more code-intensive solution that if you would build the same process in an Integration Services data flow, where there are built-in transformations available.

Another example is when you're running against the limitations of Integration Services (SSIS). Suppose you need to read a large batch of data and perform some aggregations on it. If you use the Aggregate transformation, SSIS will read all of the data in memory before it starts outputting any data. If the data size is too big, it cannot all be loaded into the memory of the server and SSIS will start swapping data out to disk. At that point, the process typically grinds to a halt, and it might take hours or even days to finish the transformation. However, the database engine of SQL Server is better equipped to handle such transformations. A solution is to read the data, write it to a staging table in SQL Server and use a SELECT query with a GROUP BY clause to calculate the aggregations. In essence, the process has now shifted from ETL to ELT, since the calculations are now done by the destination (SQL Server).

ELT Tools in the Microsoft Data Platform

Azure Data Factory

The most prominent ELT tool in the Microsoft Data Platform is Azure Data Factory. It can handle a variety of tasks, but it cannot directly perform calculations on the data. The exception are data flows in ADF, which are an extra feature build on top of Databricks clusters to provide you with the option to create ETL pipelines in ADF. Data flows are however more suited for big data processing and not for smaller data loads. In ADF, you extract and load data using the Copy data activity. Afterwards, you can orchestrate the transformations using activities like the Script activity, the Stored Procedure activity or any type of activity that can start some sort of external compute (e.g. Azure Logic Apps, Azure Functions, Azure Databricks notebook).

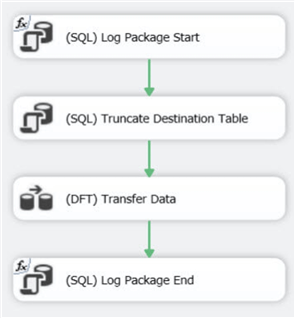

Integration Services

Integration Services – which is a traditional ETL tool – can be used for ELT scenarios as well. Instead of overly using transformations in the data flow, the data flow itself is only used to move data from one data store to another. Once the data is at its destination, you can orchestrate the execution of SQL statements using Execute SQL Tasks.

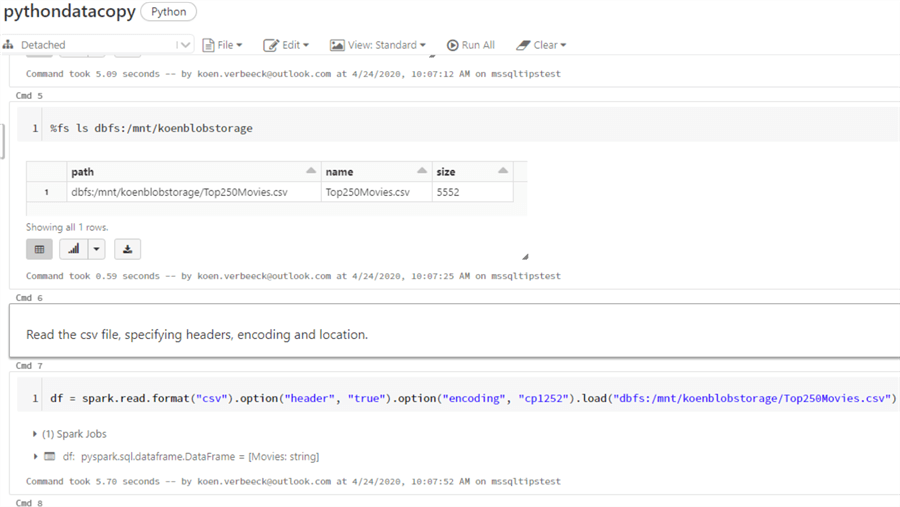

Azure Databricks

Azure Databricks is another popular ELT option for cloud data warehouses. You can write code inside notebooks using a programming language of your choice: SQL, Scala, R or Python. You can connect to any data source and write the data to the Databricks file system, which is basically Azure Data Lake storage. Using any of the mentioned languages, you can transform the data as you please and write it back to the data lake. Since languages such as R or Python have many external libraries available, there are more options for transformations available than in plain SQL. Support for regular expressions is a good example, but in the Databrick notebooks you can build entire machine learning models.

The "EL" part of the "ELT" process can be done through numerous tools: Azure Logic Apps, Azure Functions, IoT Hubs, Azure Databricks and so on. The "T" part can be done by any tool that is able to transform data: Azure Functions, SQL inside an Azure SQL database or an Azure Synapse Analytics data warehouse (dedicated pools), Azure Databricks, even an Azure Automation runbook using PowerShell, the possibilities are endless. A fairly new option is Azure Synapse Analytics Serverless SQL Pool. With this service, you can query external data using the familiar SQL language. This allows you to build a "logical layer" on top of your data lake where the logic is encapsulated in SQL views. You pay for the number of terabytes read, not for the number of CPU used or the duration of the query. You can for example put a Serverless SQL Pool between your data lake and Power BI, and use the SQL to shape the data into a dimensional model before it's loaded into Power BI.

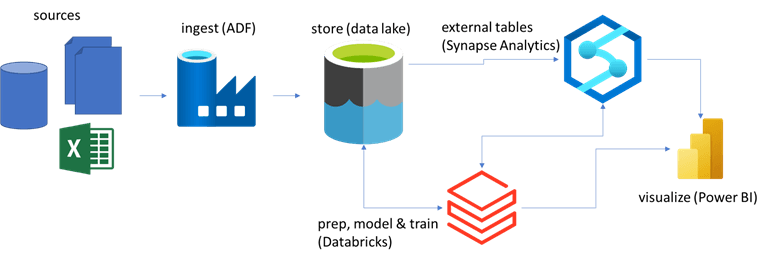

A possible architecture diagram in the Microsoft Data Platform could look like this:

What is ELTL?

When you load for example data from some CSV files from your data lake into a data frame in Azure Databricks and you perform a couple of transformations with Python and you write the results to a parquet file in the data lake, you've essentially done ELTL: extract-load-transform-load. There's an extra "L" because you loaded the data back to a destination after the transformations. Sometimes people use the ELTL acronym, but most of the time people just stick with ELT.

ETL vs ELT

Although they look very similar and sometimes you can use the same tool to implement both methodologies, there are some differences.

- ETL is typically on-premises, with tools like SSIS or Pentaho. ELT on the other hand is often found in cloud scenarios and there are many PaaS (Azure Databricks) or SaaS (Azure Data Factory, Serverless SQL Pools) offerings available. This can mean that ETL tools are more expensive, but this really depends on the use case. There are cloud ETL tools available, but they're not situated in the Microsoft Data Platform and hence out of scope for this tip.

- ETL deals with smaller data sets, usually because the data is transformed in memory. ELT can more easily deal with very large data sets.

- ELT often requires good coding skills (the data engineer role is a good example), while with ETL tools there's less code to write.

- ETL can be useful in compliance and data privacy scenarios, as you can deal with sensitive data before it's loaded into the destination.

- In modern data warehousing scenarios, where you use a data lake or even a data lakehouse (where you build a data warehouse on top of your data lake using technology like Azure Databricks Delta Tables), ELT is dominant.

As usual, one is not necessarily better than the other. Careful consideration of the use case and the data involved should lead you to a choice between ETL and ELT.

Next Steps

For more information about ETL tools, check out the tips Overview of ETL Tools in the Microsoft Data Platform – Part 1 and Part 2.

- If you want to learn more about Azure Data Factory, there's a tutorial you can follow. You can also find many tips in this overview.

- There's also a tutorial for SSIS.

- You can find tips about Azure Databricks in this overview.

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-06-06