By: Jared Westover | Updated: 2022-07-22 | Comments | Related: > Compression

Problem

Recently I faced a challenge with a big static table containing over two billion rows in a busy database. The business uses the table for historical reference, and purging was out of the question. The good news is the business no longer allowed insert, update, and delete operations. However, it consumed a lot of space and made backup files larger. Additionally, we could not move it to a different database and needed to run periodic SELECT statements against it. The challenge we faced was reducing its footprint without making it unusable.

Solution

Since truncating was out of the question, the first solution I thought of was applying compression. In this tutorial, I will walk you through the various compression methods we explored and highlight the one we picked. Luck was on our side, and the table consisted of primarily integer data types. However, this may not be the best approach if your table is mostly strings. I still suggest you test it out.

Creating Our Dataset

To get started, let's create a sample dataset. The following code creates a database with a single table called SalesTrackingHistory. As always, please don't run these sample scripts in a production environment.

USE [master];

GO

IF DATABASEPROPERTYEX ('SqlHabits','Version') IS NOT NULL

BEGIN

ALTER DATABASE SqlHabits SET SINGLE_USER

WITH ROLLBACK IMMEDIATE;

DROP DATABASE SqlHabits;

END

GO

CREATE DATABASE SqlHabits;

GO

ALTER DATABASE SqlHabits SET RECOVERY SIMPLE;

GO

USE SqlHabits;

GO

CREATE TABLE dbo.SalesTrackingHistory

( Id bigint identity (1,1),

SalesCode int,

CustomerId int,

OrderId int,

TrackingId int,

TrackingCount int,

StartDate date,

EndDate date

);

GO

Next, I am incorporating a script from Aaron Bertrand for creating a numbers table. Whenever I am building datasets, a numbers table comes in handy. This numbers table will have integers from one to ten million. The script is straightforward if you would like to increase the upper range.

DECLARE @UpperBound INT = 10000000; ;WITH cteN(Number) AS ( SELECT ROW_NUMBER() OVER (ORDER BY s1.[object_id]) FROM sys.all_columns AS s1 CROSS JOIN sys.all_columns AS s2 ) SELECT [Number] INTO dbo.Numbers FROM cteN WHERE [Number] <= @UpperBound;

Now let's populate our table with some data. The code below generates one hundred million rows. If you have the time and space, feel free to make the table larger. Please be aware of the additional disk space and time it will take. On my system, this entire process took about seven minutes. Depending on how beefy your machine is, your mileage may vary.

DECLARE @Count AS int;

DECLARE @StartDate AS date;

DECLARE @EndDate AS date;

DECLARE @DaysBetween AS int;

SELECT @Count = 0,

@StartDate = '1/1/2016',

@EndDate = '6/25/2022',

@DaysBetween = (1+DATEDIFF(DAY, @StartDate, @EndDate));

WHILE (@Count < 100000000)

BEGIN

INSERT INTO dbo.SalesTrackingHistory

( SalesCode,

CustomerId,

OrderId,

TrackingId,

TrackingCount,

StartDate,

EndDate

)

SELECT ABS(CHECKSUM(NEWID()) % 1000) + 10 AS SalesCode,

ABS(CHECKSUM(NEWID()) % 2000) + 20 AS CustomerId,

ABS(CHECKSUM(NEWID()) % 3000) + 30 AS OrderId,

ABS(CHECKSUM(NEWID()) % 4000) + 40 AS TrackingId,

ABS(CHECKSUM(NEWID()) % 100000) + 1000 AS TrackingCount,

DATEADD(DAY, RAND(CHECKSUM(NEWID()))*(@DaysBetween),@StartDate) AS StartDate,

DATEADD(DAY, RAND(CHECKSUM(NEWID()))*(@DaysBetween),@StartDate) AS EndDate

FROM dbo.Numbers

SET @Count = @Count + @@ROWCOUNT;

END

GO

After we create the table, run the following code to check the size.

EXEC sp_spaceused N'dbo.SalesTrackingHistory'; GO

You can see we have one hundred million rows, and the size is a bit over 4.2GB. In the next section, I will cover three of SQL Server's compression methods.

Page Compression

The first compression method we tested was page compression. Since page compression includes row compression, I skipped over it. Page compression also includes prefix and dictionary compression. The order in which compression takes place is first row, then prefix, and finally dictionary. To learn more about the compression details please review this document from Microsoft.

Let's execute the following code to apply page compression to our table. Then we can verify how much space we saved.

ALTER TABLE dbo.SalesTrackingHistory REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE); GO EXEC sp_spaceused N'dbo.SalesTrackingHistory'; GO

Applying page compression gave us a 47% savings in size. That percentage is a win in my book. However, I think we can do better.

Columnstore

People often associate columnstore indexes with large fact tables requiring routine aggregations. That assumption is perfectly fair. However, one of the features of columnstore indexes is their compression abilities.

Since SQL Server stores data in a columnstore index by column, the potential to compress increases immensely. For example, if you have a column where an integer value repeats twenty million times in your one hundred million row table, the compression possibilities skyrocket.

Now let's review the syntax to compress our table by converting it to a clustered columnstore index. Remember, once we run the command, SQL physically stores the table in columnar format. On my system, this took about four minutes to run. To get the full benefits of columnstore, I would advise being on SQL Server 2016 or greater.

CREATE CLUSTERED COLUMNSTORE INDEX cci_SalesTrackingHistory ON dbo.SalesTrackingHistory; GO EXEC sp_spaceused N'dbo.SalesTrackingHistory'; GO

Our table dropped to about 1.4GB, a 100% savings from the original. Thankfully, we can still query against the table without tanking the performance. I would not apply this method to a table where you performed frequent singleton lookups. In our situation, the business might run one query against it monthly.

Archive Compression

Hold on; we are not done yet. With columnstore, the ability exists to compress an index even further. Since the business rarely accesses the data, you can apply archival compression. SQL uses the Microsoft XPRESS compression algorithm to an index with archival compression. Using this method might cause a slight delay in the time it takes to return the data, but that was not a concern for us.

Let's run the syntax below to apply archival compression and check out the size.

ALTER TABLE dbo.SalesTrackingHistory REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = COLUMNSTORE_ARCHIVE); GO EXEC sp_spaceused N'dbo.SalesTrackingHistory'; GO

We did not get a significant difference on this one, about 1.35GB or 102% of the original, but I will take it!

Conclusion

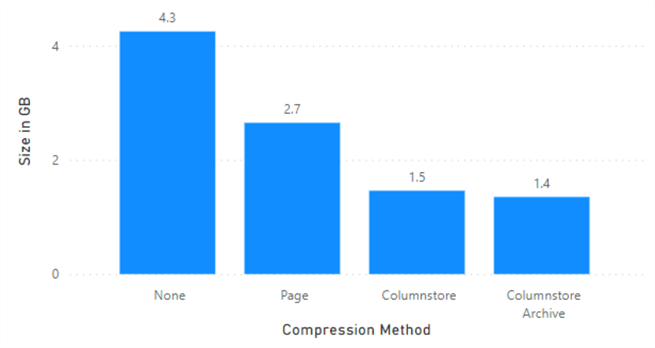

I have compiled the results of each compression method compared to the original table.

As you can see from the chart above, stopping at columnstore would have given us the bulk of space savings. With a larger test dataset, the columnstore archive may be more impactful.

In this tip, I demonstrated how to reduce the footprint on a large static table. We first applied page compression, which implicitly includes row. Hoping to reduce the size further, we first converted the table to a clustered columnstore index. We pushed the boundaries even further and applied archival compression. Performance may be a bigger concern if you need to access the data regularly. Ultimately, I was happy with the results of a columnstore index.

Next Steps

- Are you facing a similar challenge with a large static table? If so, I would highly recommend applying compression to the table and watch the space savings add up.

- If you want to learn more about columnstore indexes, please explore this tip by Jayendra Viswanathan.

- Please check out Aaron Bertrand's tip for creating a numbers table.

- Derek Colley provides an excellent overview of compression in SQL Server. Compression is also a valuable tool for tables and indexes which are not static.

About the author

Jared Westover is a passionate technology specialist at Crowe, helping to build data solutions. For the past two decades, he has focused on SQL Server, Azure, Power BI, and Oracle. Besides writing for MSSQLTips.com, he has published 12 Pluralsight courses about SQL Server and Reporting Services. Jared enjoys reading and spending time with his wife and three sons when he's not trying to make queries go faster.

Jared Westover is a passionate technology specialist at Crowe, helping to build data solutions. For the past two decades, he has focused on SQL Server, Azure, Power BI, and Oracle. Besides writing for MSSQLTips.com, he has published 12 Pluralsight courses about SQL Server and Reporting Services. Jared enjoys reading and spending time with his wife and three sons when he's not trying to make queries go faster.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-07-22