By: John Miner | Updated: 2022-12-19 | Comments | Related: > Azure SQL Managed Instance

Problem

In this article, we look at how to enable auditing for an Azure SQL Managed Instance.

Solution

On 1 October 2018, Microsoft announced the general availability of Azure SQL Database, Managed Instance edition. This cloud version of the database engine is the most compatible with the on-premises version. However, we can no longer save audit log files to local storage. In this Platform as a Service (PaaS) edition, the audit logs can be stored to blob storage, event hub, and log analytics.

Business Problem

Our manager has asked us to use the straightforward HIPPA database schema for a fictitious urgent care center called "The Last Stop." We have been asked to create a proof-of-concept that showcases auditing in Azure SQL Managed Instance. Storing logs to Azure Blob Storage and Azure Log Analytics will be investigated.

HIPPA Database

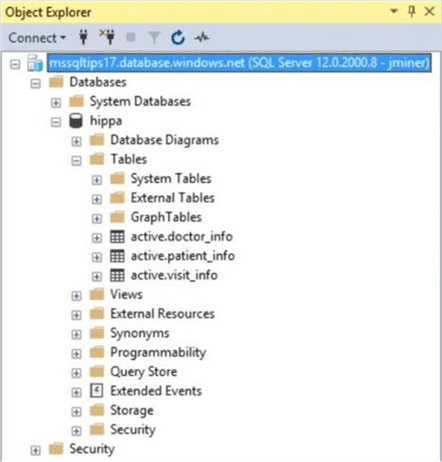

The article assumes that an Azure SQL Managed Instance has been deployed and configured with a Jump Server in the virtual network. Execute the enclosed script to create this database from scratch. The image below shows a previous deployment to the Azure SQL database named mssqltips17 from an earlier article. However, the same tables should now exist in the Azure SQL Managed Instance named svr4asqlmi.

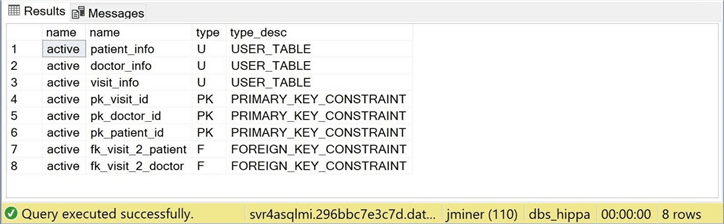

If we execute the following query, we will see details behind each table in the managed database called dbs_hippa and the managed server named svr4asqlmi.

-- -- Show database definition -- -- Choose the correct database USE dbs_hippa; GO -- User defined objects select s.name, o.name, o.type, o.type_desc from sys.objects o join sys.schemas s on o.schema_id = s.schema_id where o.is_ms_shipped = 0 order by o.type desc; GO

The image below shows that three tables exist in the active schema. Each table has a primary key and two foreign keys that relate the tables together.

To recap, we will use the HIPPA sample database to capture events to either Azure Blob Storage or Azure Log Analytics.

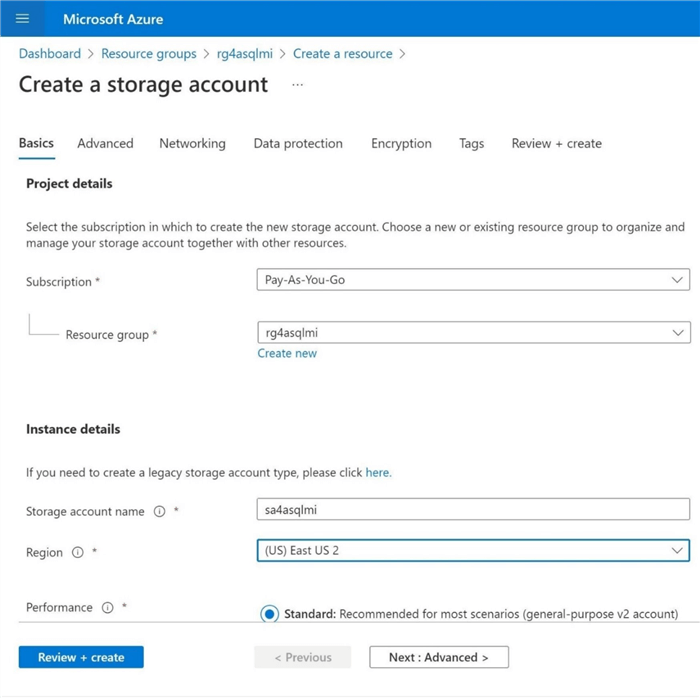

Azure Blob Storage

The first step to enable auditing to blob storage is to create both a storage account (sa4asqlmi) and a storage container (sc4asqlmi). Since all resources are stored in the resource group rg4asqlmi, we will supply this name when deploying the resource via the Azure Portal. The image below shows the start of a blob storage deployment via the portal. Please see this MSDN page for details.

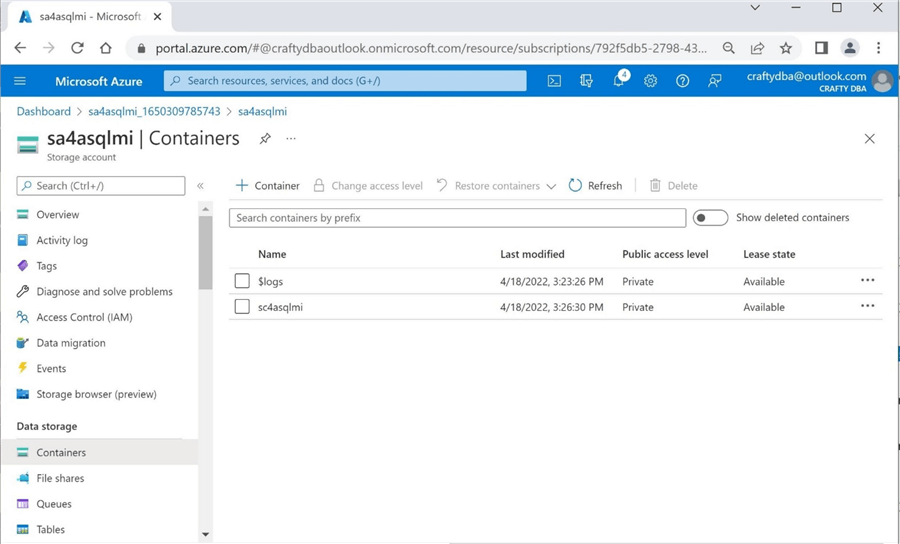

The image below shows the newly deployed storage container sc4asqlmi.

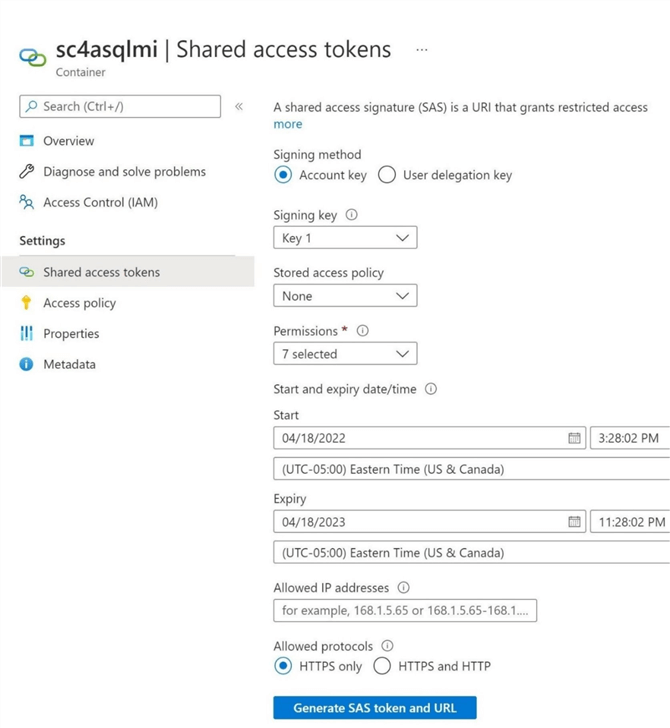

The current T-SQL statement for auditing depends on a Shared Access Signature. At the container level, we want to give the Azure SQL Managed Instance rights to list, read, and write to the container. In the image below, we are stating that the SAS is valid for only 8 hours. In real life, pick a time frame that works for your company. It is a best practice to rotate keys every so many months. Click Generate SAS token and URL to complete the task after all options are checked.

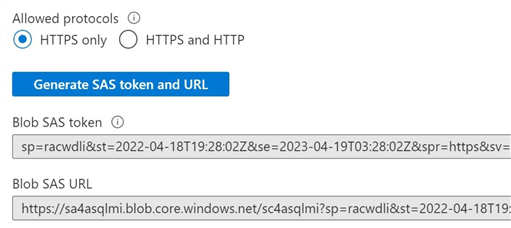

The last step is to grab the token and save it securely somewhere. This token will be used to create a SQL Server credential using T-SQL for the Azure storage container.

In a nutshell, we deployed the Azure Blob Storage account and container in this section. In the end, we generated and saved a Shared Access Signature (SAS) for the container.

Azure Blob Storage – Audit Server Events

There are two types of audits in the SQL Server database engine: server and database. We will create a server audit to gather information on successful logins, successful logouts, and failed logins. A corresponding database audit will collect information on DML actions such as SELECT, INSERT, UPDATE, and DELETE. There are many more event activities that can be tracked. Please look at the MSDN web pages for details.

The following table shows each statement used to create and enable the server-level audit.

| Step | Command | Purpose |

|---|---|---|

| 1 | CREATE CREDENTIAL | Define credential for storage account using SAS. |

| 2 | CREATE SERVER AUDIT | Define blob storage location to place audit logs. |

| 3 | CREATE SERVER AUDIT SPECIFICATION | Define the actions to record in the audit log. |

| 4 | ALTER SERVER AUDIT | Turn on or off auditing. |

The below T-SQL code should be executed from the master database.

--

-- Enable server auditing - blob storage

--

-- Switch database

use master

GO

-- Create credential

CREATE CREDENTIAL [https://sa4asqlmi.blob.core.windows.net/sc4asqlmi]

WITH IDENTITY='SHARED ACCESS SIGNATURE',

SECRET = 'sp=racwdli&st=2022-04-18T19:28:02Z&se=2023-04-19T03:28:02Z&spr=https&sv=2020-08-04&sr=c&sig=010h0HYh3ZJG%2FP4OaeZ2rYSRBtnXzg%2B9OIsphdpuFMs%3D'

GO

-- Create server audit

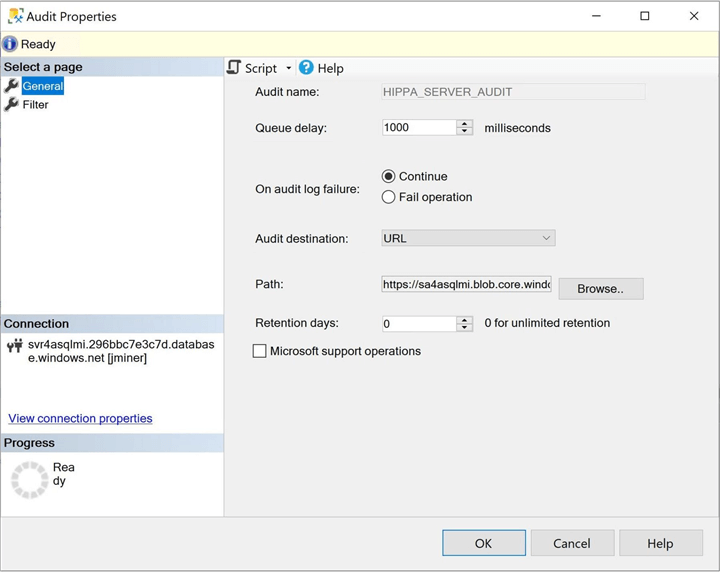

CREATE SERVER AUDIT [HIPPA_SERVER_AUDIT]

TO URL ( PATH ='https://sa4asqlmi.blob.core.windows.net/sc4asqlmi' , RETENTION_DAYS = 0 )

GO

-- Create server specification

CREATE SERVER AUDIT SPECIFICATION [HIPPA_SERVER_AUDIT_SPEC]

FOR SERVER AUDIT [HIPPA_SERVER_AUDIT]

ADD (FAILED_LOGIN_GROUP),

ADD (LOGOUT_GROUP),

ADD (SUCCESSFUL_LOGIN_GROUP)

WITH (STATE=ON);

GO

-- Set state on

ALTER SERVER AUDIT [HIPPA_SERVER_AUDIT] WITH (STATE = ON);

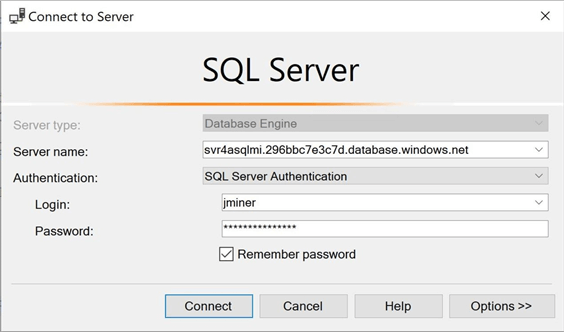

Let's log into the Azure SQL Managed Instance now. Unlike Azure SQL database, there is always some random alphanumeric string between the name of the server and the windows service.

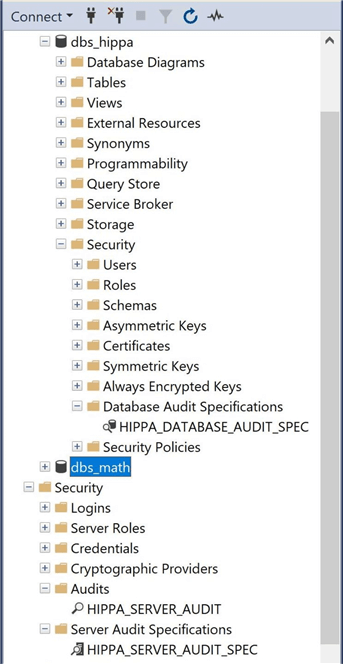

We can use SQL Server Management Studio (SSMS) to validate the creation of the server audit that writes to blob storage. Please see the image below for details on the server audit location.

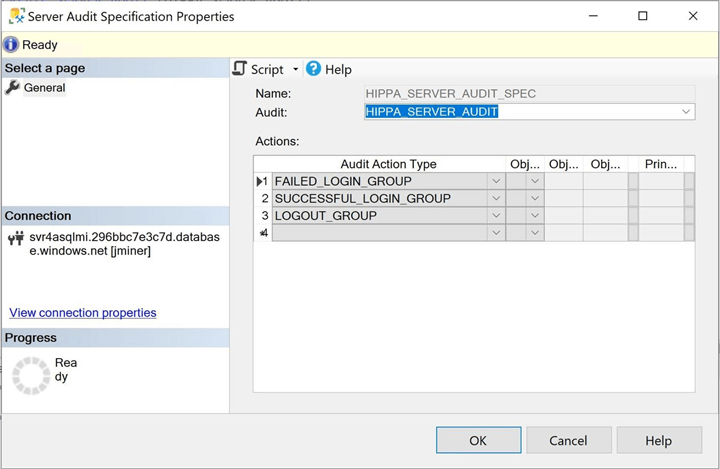

The next task is to validate the creation of the server audit specification. Again, these actions are under the server section in the object explorer. The image below shows three action types being audited.

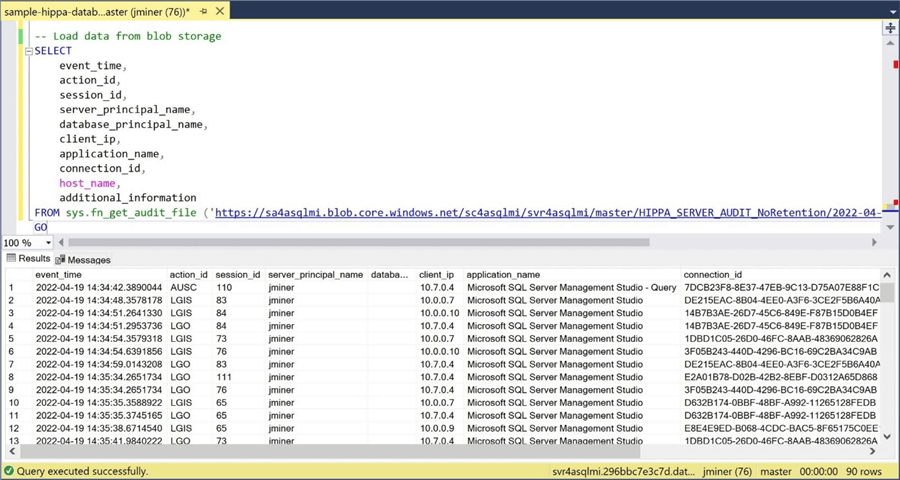

The sys.fn_get_audit_file function takes a blob storage path as a parameter and returns a table of data. Wild cards can be used to read one or more files at the same time. We can see in the image below that three events are being recorded: LGIS – login succeeded, LGO – logout succeeded, and AUSC – audit session changed. The SSMS application executes many connections to the database.

In summary, we must define a SERVER AUDIT to save actions to blob storage. The SERVER SPECIFICATION defines what events (actions) to write to the log files. In the next section, we will create a database audit specification and run T-SQL statements to create log entries.

Azure Blob Storage – Audit Database Events

The below T-SQL code should be executed from the database that will be audited. In this case, it is the dbs_hippa database. I suggest using database schemas to logically group tables. We will track SELECT, INSERT, UPDATE, and DELETE actions on the ACTIVE schema.

-- -- Enable database auditing - blob storage -- -- Change to target database USE dbs_hippa; GO -- Create the database audit specification. CREATE DATABASE AUDIT SPECIFICATION [HIPPA_DATABASE_AUDIT_SPEC] FOR SERVER AUDIT [HIPPA_SERVER_AUDIT] ADD (SELECT, INSERT, UPDATE, DELETE ON Schema::active BY public) WITH (STATE = ON); GO

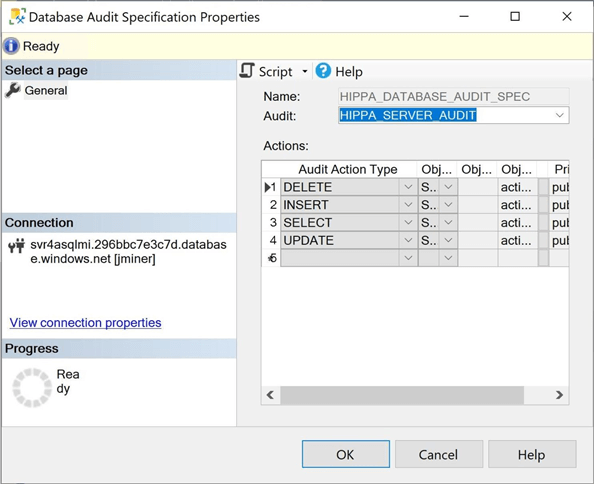

If the SSMS application is used, we can double-check that four action types are being audited in the database.

Now that auditing is in place, we can run some DML commands to create audit log entries. Run the following T-SQL script in the dbs_hippa database.

--

-- Test Azure SQL Managed Instance Auditing

--

-- Audit - Select

select * from active.patient_info where last_name = 'SCOTT'

go

-- Audit - Insert

insert into active.visit_info

values (getdate(), 125, 60, 98.6, 120, 60, 487, 'Influenza', 7, 1);

go

-- Audit - Update

update active.visit_info

set

diagnosis_desc = upper(diagnosis_desc),

patient_temp = 98.4

where visit_id = 21

go

-- Audit - Delete

delete

from active.visit_info

where visit_id = 7;

go

-- Audit - Truncate

truncate table [active].[visit_info]

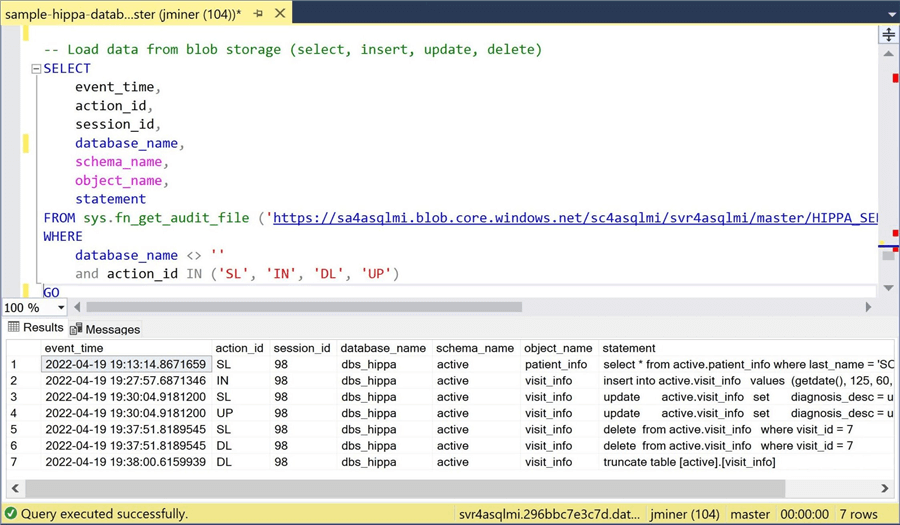

Since all actions are logged in the same file, we need to filter by SL – select, IN – insert, DL – delete, and UP – update. So far, there is nothing new going on with auditing. Other than the location being Azure Blob Storage, the same data is being collected.

Both the SERVER and DATABASE audits can be paused if needed. However, once turned on, the events will write to log files stored in Azure Blob Storage.

Finally, the above image shows where the audits and specifications can be located in the object explorer in SSMS.

Azure Log Analytics

Log Analytics - Deploy and Configure

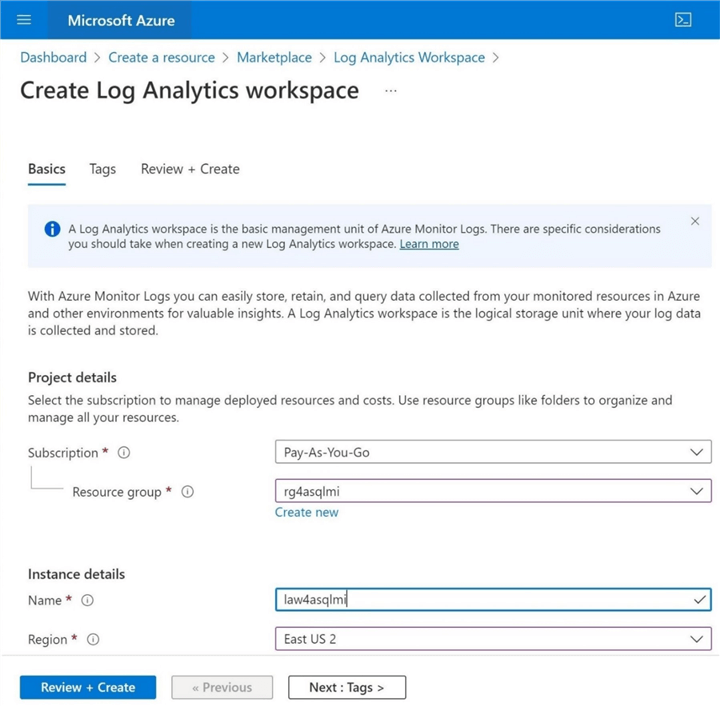

First, we must create a log analytics workspace by selecting the service from the marketplace. The Azure SQL Managed instance is part of the rg4sqlmi container. We need to add the new log analytics service to the same resource group and call it law4asqlmi.

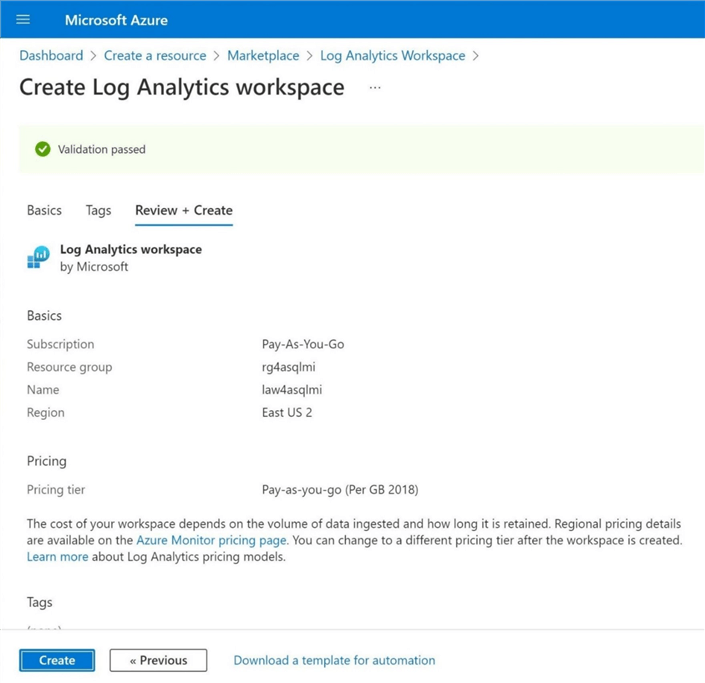

Review the settings for the new service. If you are happy with the settings, click Review + Create to start the deployment.

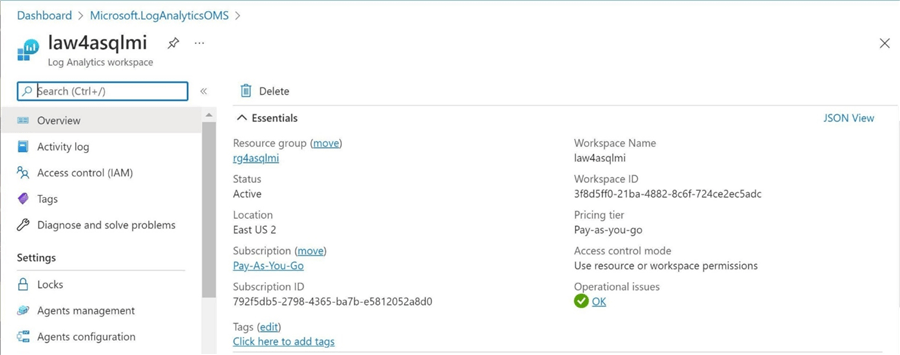

I always check to ensure the Azure Service is functioning correctly after deployment. The image below shows the overview page of the newly deployed service.

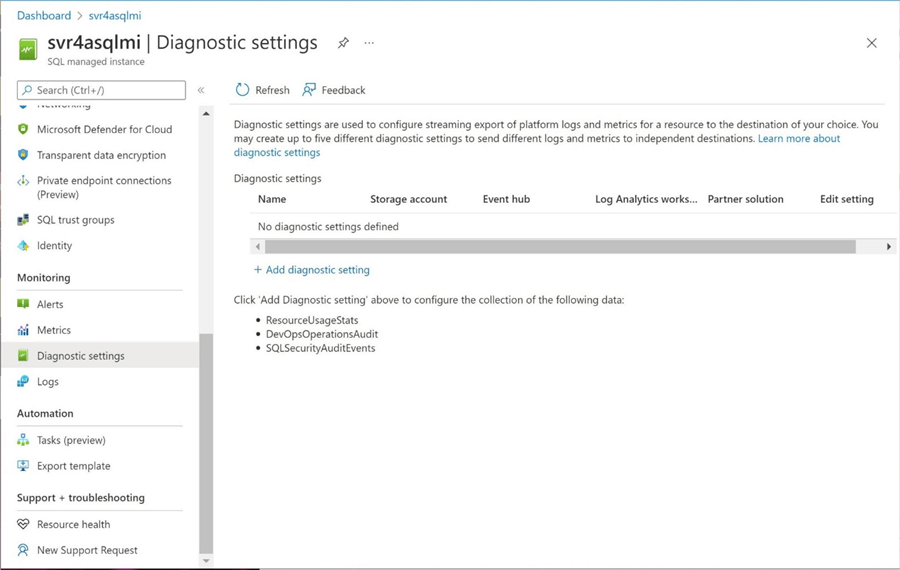

The next task is configuring SQL Managed Instance (MI) to use the Log Analytics Workspace. Find the Diagnostics settings panel under the SQL MI service. We can see that no settings have been configured.

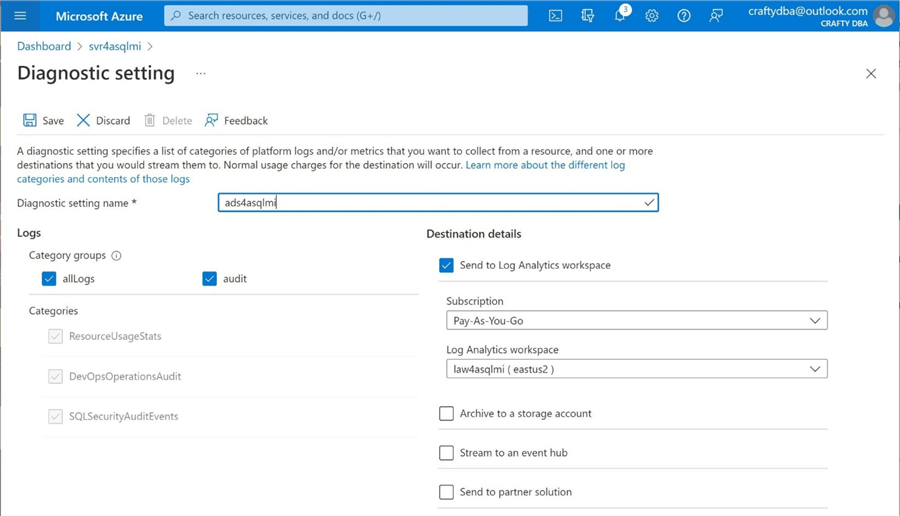

We want to not only collect audit events but capture all other telemetries. Choose the log analytics workspace as the destination of the actions. Note: This data is being enabled at the server named svr4asqlmi.

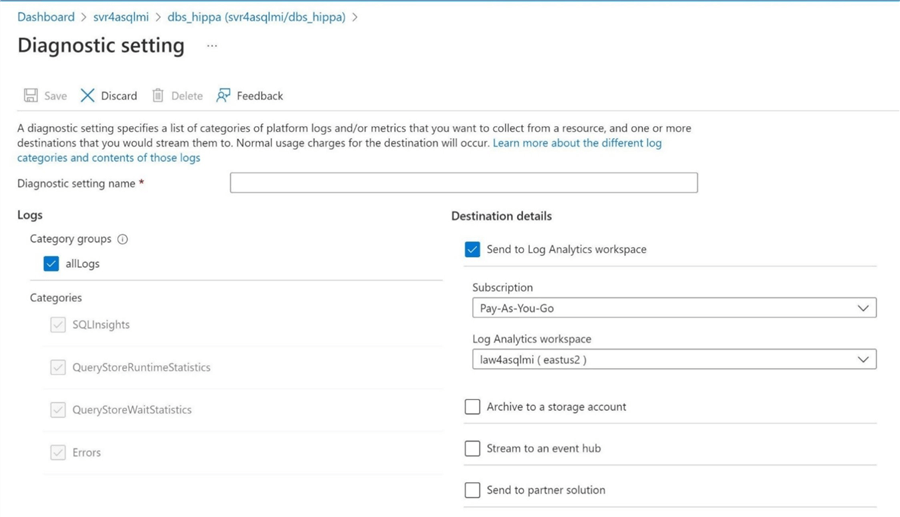

If we were executing T-SQL, we would have to enable both a SERVER and DATABASE specification. When using the Azure Portal, we need to manually enable the settings for the database named dbs_hippa.

Note: We want to enable other audit events such as query store, SQL insights, and errors. Enabling these check boxes is half the equation when sending events to log analytics. We will talk about how to enable both SERVER and DATABASE auditing in the following two sections using a variant of the T-SQL commands used before.

Log Analytics – Audit Server Events

We need to make a slight change to the CREATE SERVER AUDIT statement to complete the configuration. Use the EXTERNAL MONITOR keyword when defining the server audit. In our case, the server audit's name is LA_SERVER_AUDIT, and the server specification is named LA_SERVER_AUDIT_SPEC. Run the T-SQL code below to create the new server audit.

--

-- Enable server auditing - log analytics

--

-- Switch database

use master

GO

-- Send to log analytics

CREATE SERVER AUDIT [LA_SERVER_AUDIT] TO EXTERNAL_MONITOR;

GO

-- Create server specification

CREATE SERVER AUDIT SPECIFICATION [LA_SERVER_AUDIT_SPEC]

FOR SERVER AUDIT [LA_SERVER_AUDIT]

ADD (FAILED_LOGIN_GROUP),

ADD (LOGOUT_GROUP),

ADD (SUCCESSFUL_LOGIN_GROUP)

WITH (STATE=ON);

GO

-- Set state on

ALTER SERVER AUDIT [LA_SERVER_AUDIT] WITH (STATE = ON);

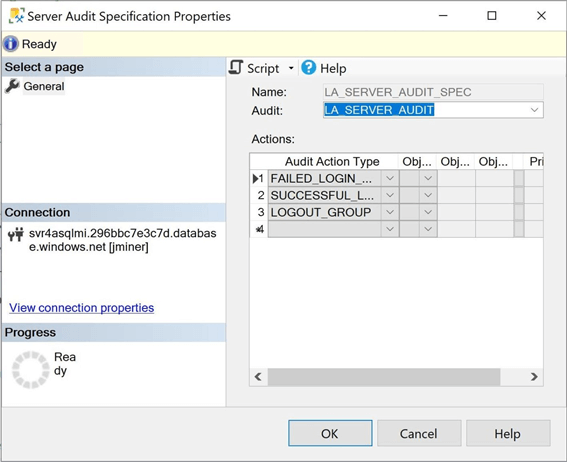

The image below was taken from SSMS and looks like the one we created previously. I used the prefix "LA" to represent log analytics instead of "HIPPA".

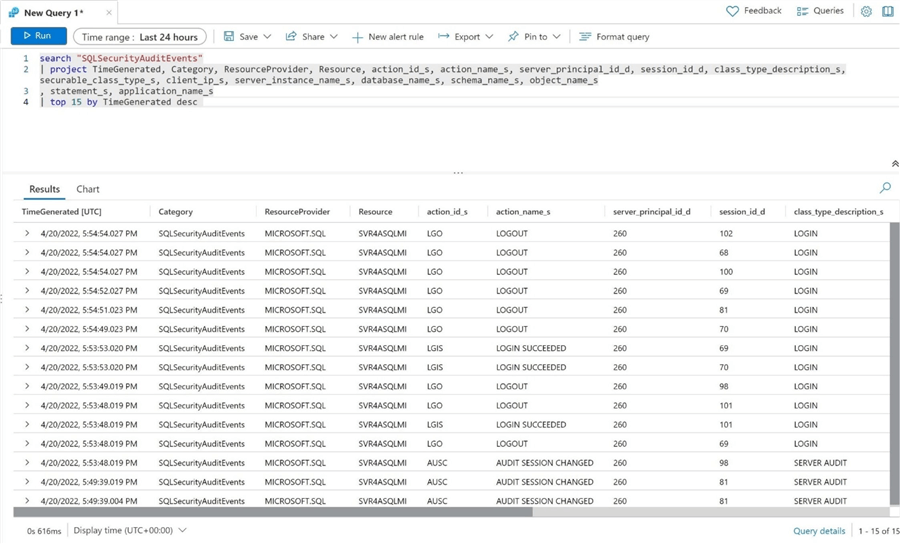

The major difference is that all data retrieval must be performed using the Kusto Query Language. The same three types of events show up in the log analytics service. We have log in, log out, and audit session changed actions.

I do not have time to go over the Kusto Query Language in real detail. However, the search keyword is like the from statement in a select query. The pipe | is used to pass data from one step to another. The project keyword is the same as using the select to reduce the number of columns in the dataset. Finally, the top keyword allows us to return the most recent 15 records.

Log Analytics – Audit Database Events

Run the T-SQL script below to enable DML auditing on the ACTIVE schema in the dbs_hippa database.

-- -- Enable database auditing - log analytics -- -- Change to target database USE [dbs_hippa] GO -- Create the database audit specification. CREATE DATABASE AUDIT SPECIFICATION [LA_DATABASE_AUDIT_SPEC] FOR SERVER AUDIT [LA_SERVER_AUDIT] ADD (SELECT, INSERT, UPDATE, DELETE ON Schema::active BY public) WITH (STATE = ON); GO

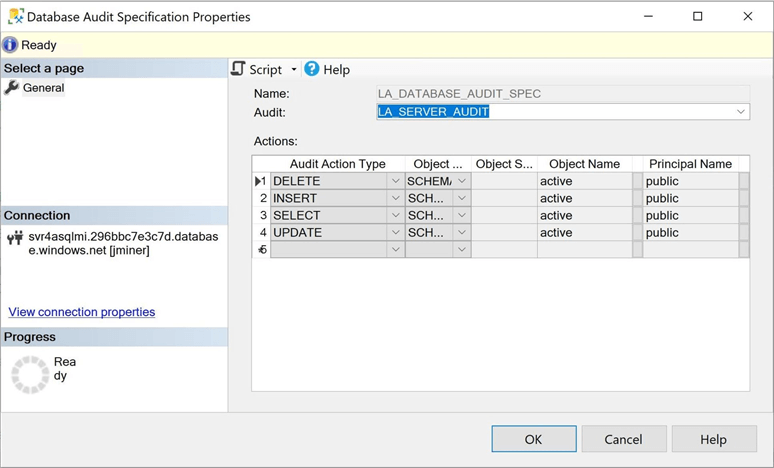

Again, we can use the SSMS application to validate the creation of the database audit specification. See the image below for details.

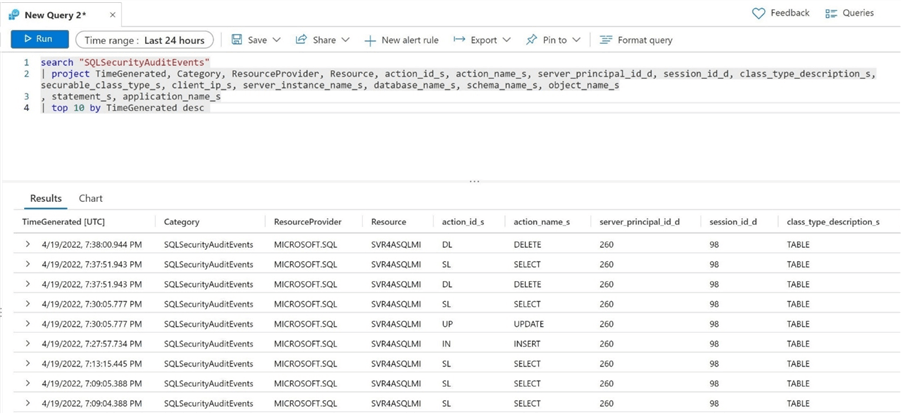

Run the above audit test script that selects, inserts, updates, and deletes records in the dbs_hippa database. In short, we are trying to generate audit actions that can be added to log analytics.

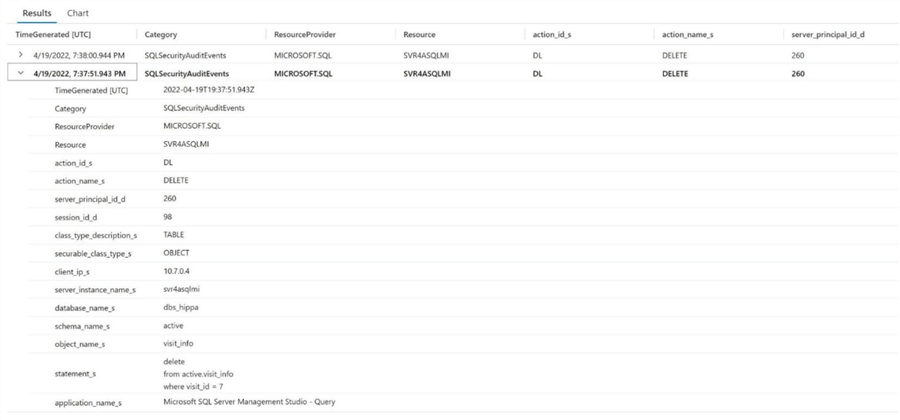

The above image shows the 10 most recent captured actions at a summary level. Hit the ">" link to expand for details. The image below contains detailed information about the client that executed the delete action. We can see that SSMS executed the query against the visit_info table in the active schema.

So far, the same information can be captured with the on-premises database product. Is there any advantage to using log analytics?

Why Use Log Analytics?

There is so much more that can be done with log analytics. Collecting audit events is a tiny part of the product. Two features that set it apart are alerting and reporting.

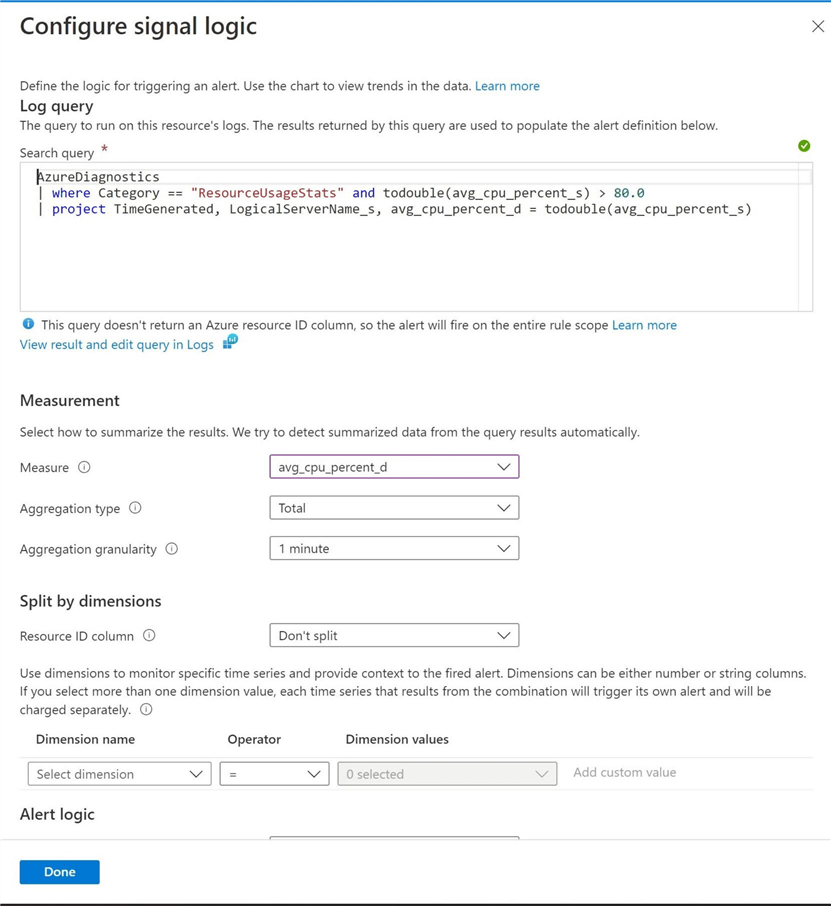

Alerting

We can use a Kusto Query to define an alert. The image below uses the Resource Usage Statistics log (table) to return the time generated, server name, and average CPU percentage when the CPU level exceeds 80%. I ran a set of queries with SSMS to tax our Azure SQL Managed Instance.

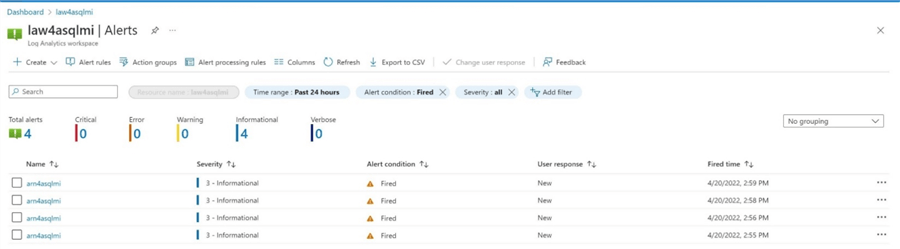

The alerts section of log analytics will show if an alert is triggered. Alerts can be tied to notifications. I would like to send an email to the on-call DBA team when the database CPU exceeds a certain level. The image below shows that the alert was triggered four times in 4 minutes.

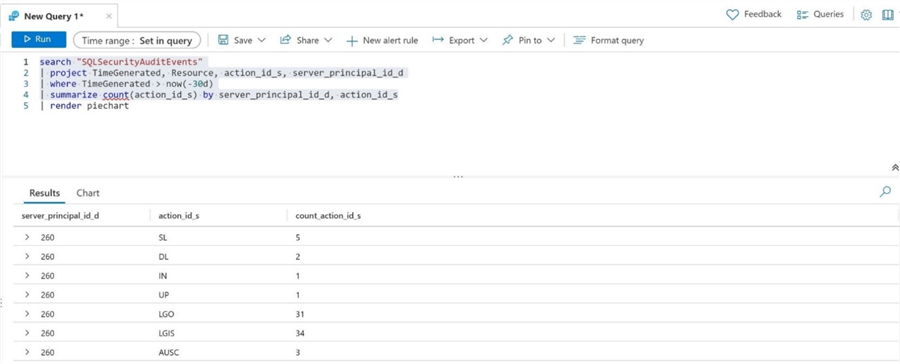

The query designer can execute only parts of a statement. The Kusto Query below summarizes the number of audit events by action id. I purposely left off generating a pie chart.

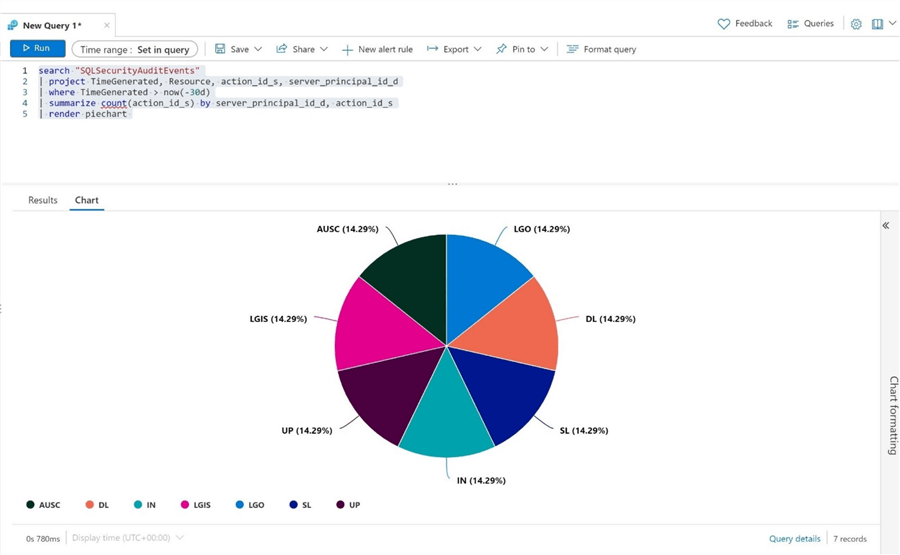

The above image shows the following actions: SL – select, DL – delete, IN – insert, UP – update, LOG – logout, LGIS – login, and AUSC – audit change. The image below shows the same data depicted as a pie chart.

A ton of information can be stored from our Azure SQL Managed Instance in the Log Analytics Workspace. For instance, we did check off the collection of query store and SQL insights telemetry. It is up to you to explore the wealth of data stored in the logs.

Next Steps

Many industries require auditing of actions performed against the tables stored in the database. Azure Managed Instance supports the storage of actions to blob storage, event hub, and log analytics. Today, we explored how to save both server and database actions to blob storage. This feature is on par with what the on-premises product can accomplish. However, what if we want to capture additional information from various Azure Services in one centralized location? Audit files in Azure Blob Storage are not sufficient for this task.

Azure Log Analytics is a full-featured service that captures actions from various Azure Services and can also capture telemetry. Each service monitors the usage of resources such as CPU, memory, and disk space. These data points are stored in the log analytics service. We can create alerts based on Kusto Queries. For instance, when CPU usage exceeds a predetermined limit, it triggers an alert. We can assign a notification for each alert, such as sending an email to a support group to look at the service.

Finally, the Kusto Query language is extremely powerful. It contains many SQL-like constructs. However, the syntax is more like Power Shell, in which the output of one action is piped to the next action. Advanced statements that support aggregation and windowing can be used to create reports for your dashboard in log analytics. In short, log analytics is a lot more useful than actions stored in a file in blob storage.

I hope you liked this article. Enclosed are code files to create the database, enable auditing, and query log analytics. Next time, I will discuss how we can create custom logging for popular ETL tools like Engineering Pipelines and/or Spark.

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-12-19