By: John Miner | Updated: 2024-04-01 | Comments | Related: > Apache Spark

Problem

A big data engineer can transform data stored in files using Spark dataframe methods or Spark SQL functions. I chose to use the Spark SQL syntax since it is more widely used. Every language has at least three core data types: strings, numbers, and date/time. How do we manipulate strings using Spark SQL?

Solution

In the last tip, I reviewed the syntax for numeric Spark SQL functions. This tip focuses on the available string functions. The sheer number of string functions in Spark SQL requires them to be broken into two categories: basic and encoding. Today, we will discuss what I consider basic functions seen in most databases and/or languages.

Business Problem

Our manager has asked us to explore the syntax of the string functions available in Azure Databricks. I will execute Spark SQL using the magic command in a Python notebook. That way, we can see the output for a given input. After testing, I usually turn the Spark SQL into a string variable that can be executed by the spark.sql method.

During our exploration, we will discuss some written and digital content: Three Blind Mice, The Three Musketeers, and Star Wars. The first two appeared in books a long time ago. I have seen a couple of variations of the “Musketeers” at the movies during my lifetime. I fondly remember seeing Star Wars at my local theater in 1977. At the end of this article, the big data engineer will have a good overview of string functions.

ASCII Codes

The American Standard Code for Information Interchange (ASCII) was created in 1961. An ASCII chart is a character-to-number translation table. The first charts came out with 128 characters, of which 95 can be printed. The problem with the ASCII character set is that certain languages cannot be represented in written form given 128 combinations. For instance, the Japanese language has over 50,000 kanji pictures. To solve this problem, the Unicode standard was developed to support text written in the world's most common languages. You are probably familiar with UTF-8, UTF-16, and UTF-32 codes by name. This new standard can easily support over a million characters.

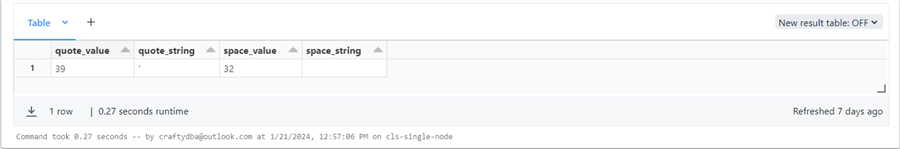

Today, we are going to review two Spark functions. The first function converts a character to a number, and the second function does the opposite. Characters are the building blocks of strings. A string contains zero or more characters. The sample code below plays with the single quote and white space characters.

%sql

select

ascii("'") as quote_value,

char(39) as quote_string,

ascii(" ") as space_value,

chr(32) as space_string;

Please see the table below that has links to the functions that were explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | ascii | Return a numeric representation of the character. |

| 2 | char | Return a character representation of a number. |

| 3 | chr | Return a character representation of a number. |

The output below shows the results of executing the Spark SQL query.

String Length

The picture below was taken from Wikipedia and shows the cover art from The Three Musketeers, written in 1844 by French author Alexandre Dumas. For upcoming string function examples, I need a hive table containing some quotes from the novel.

The code snippet below performs two tasks. First, the table is dropped if it exists. Second, a new table is created to contain quotes from the novel.

%sql -- drop table drop table if exists musketeers; -- create table create table musketeers (quote_id int, quote_txt string, quote_len int);

The code below shows how to insert and delete records. I could have coded four insert statements. However, showing that the Spark SQL language supports derived tables (subqueries) is exciting.

%sql

-- remove records

delete from musketeers;

-- add records

insert into musketeers (quote_id, quote_txt)

select d.* from

(

select

1 as id,

"Never fear quarrels, but seek hazardous adventures." as quote_txt

union

select

2 as id,

"All for one and one for all." as quote_txt

union

select

3 as id,

"Love is the most selfish of all the passions." as quote_txt

union

select

4 as id,

"The merit of all things lies in their difficulty." as quote_txt

) as d;

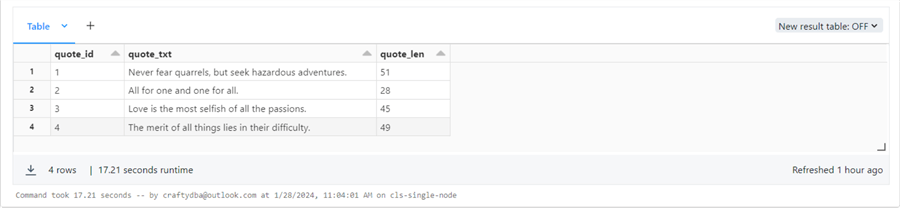

It took a few lines of code to create and load our musketeers table. Remember, when creating the table, we did not specify a database (schema) name. Thus, the table resides in the default database.

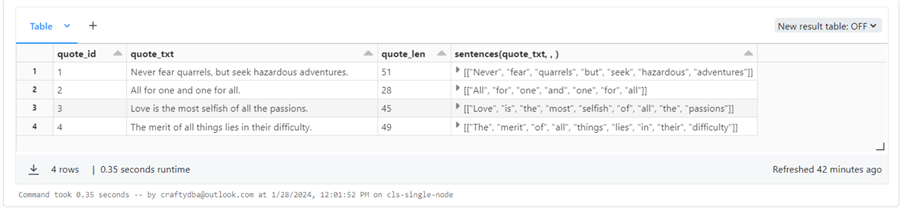

%sql -- update using various string length functions update musketeers set quote_len = char_length(quote_txt) where quote_id = 1; update musketeers set quote_len = character_length(quote_txt) where quote_id = 2; update musketeers set quote_len = len(quote_txt) where quote_id = 3; update musketeers set quote_len = length(quote_txt) where quote_id = 4; -- show result set select * from musketeers;

The above Spark SQL snippet sets the quote_len field using four different Spark SQL functions. The output of all the functions is the same if given the same data. Of course, we have four different quotes!

Please see the table below that has links to the functions that were explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | char_length | Return the length of the string. |

| 2 | character_length | Return the length of the string. |

| 3 | len | Return the length of the string. |

| 4 | length | Return the length of the string. |

Note: This musketeer table will be used in future examples when required.

Pick Non-Null String

Depending on the designer, a missing answer can be represented as an empty or null string. Today, we will talk about two functions that can be used to return the non-null string give two or more strings.

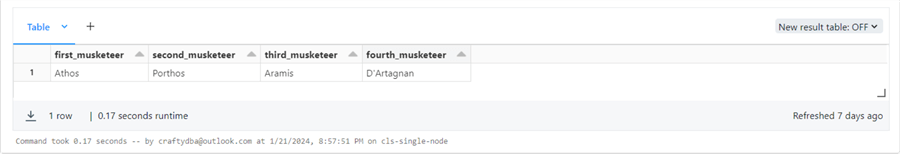

The example shows the usage of the ifnull and coalesce functions. The ifnull function takes two inputs; the coalesce function takes two or more inputs. Both functions return the first input that is not null. The purpose of the code snippet seen below is to return the names of the three musketeers in order. What is going on since there are four names?

The adventures in the French book are of a young man named D'Artagnan, who hopes to join the three Musketeers.

%sql

select

ifnull("Athos", "Aramis") as first_musketeer,

ifnull(Null, "Porthos") as second_musketeer,

coalesce("Aramis", "Athos", "Porthos", "D'Artagnan") as third_musketeer,

coalesce(Null, "D'Artagnan") as fourth_musketeer;

The table below has links to the functions that were explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | ifnull | Return the first non-null string. |

| 2 | coalesce | Return the first non-null string. |

The output shows the four characters in Alexandre Dumas' book.

Combining Strings

The process of combining two strings is done quite often in computing. For example, we have a Spark table with a customer's first and last names. To generate our Power BI report off the data, we want to combine both fields with a space to create a column named full name.

%sql

select

concat("three", ", ", "blind", ", ", "mice") as combo1,

concat_ws(", ", "see", "how", "they", "run") as combo2,

"They" || "," || "all" || "," || "ran" || "," || "after" ||

"," || "the" || "," || "farmer's" || "," || "wife" as combo3;

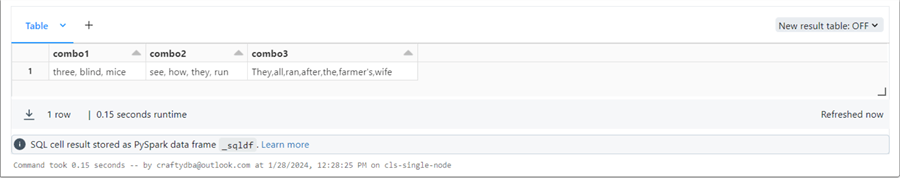

There are three ways to combine strings. Two ways involve calls to a Spark function, and one involves an operator. The above code recreates the first three sentences of the nursery rhythm Three Blind Mice. See Wikipedia for more information about the origin of this song.

The output from executing the notebook cell containing the Spark SQL query is below.

The table below has links to the two functions and one operator used in the above query.

| Example | Function | Description |

|---|---|---|

| 1 | concat | Return combined string. |

| 2 | concat_ws | Return combined string using a separator. |

| 3 | || | The concatenation operator. |

Let's talk about the output before we go on to the next set of functions. I got the first expression equal to the second expression by manually adding commas and spaces. In short, all strings are in comma-separated value format. For the last one, I left out the spaces after the comma.

Casting or Formatting Strings

During data processing, a developer might be asked to convert a number to a string or format a sentence with either a string or a number. How can we accomplish these tasks? This section will review five functions that can help solve these problems.

The first function is aptly named after the data type i.e. string.

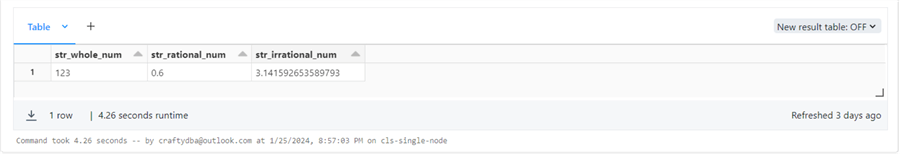

%sql select string(123) as str_whole_num, string(3/5) as str_rational_num, string(pi()) as str_irrational_num;

The string function is reliable compared to the next two functions, which might create unexpected results. The output below shows whole numbers, rational numbers, and irrational numbers. Use the hyperlinks as refreshers for those algebra concepts.

The to_char functions take both the data and a format expression as input.

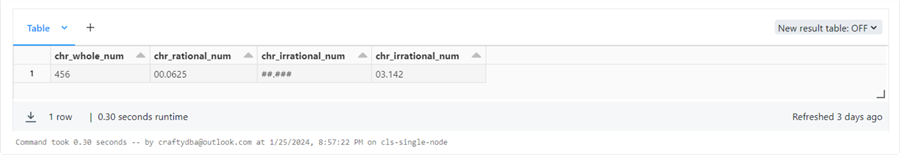

%sql select to_char(456, '999') as chr_whole_num, to_char(2/32, '00D0000') as chr_rational_num, to_char(pi(), '00D999') as chr_irrational_num, to_char(bround(pi(), 3), '00D999') as chr_irrational_num;

It is important to note that the third expression returns the format mask in which 0 or 9 are converted to # characters. Of course, the D represents the decimal within the real number. The issue is that the input has a non-terminating decimal number. The correct format must have three digits to the right of the decimal. One way to fix this issue is to use a rounding function to produce the correct number of digits. Please look at the fourth expression.

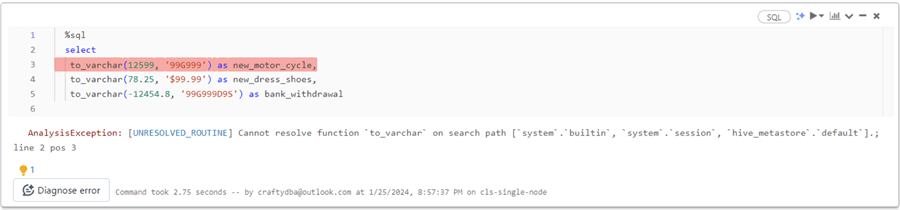

Another function that converts a number to a string is called to_varchar.

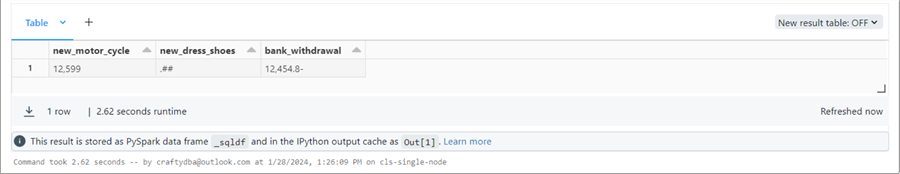

%sql select to_varchar(12599, '99G999') as new_motor_cycle, to_varchar(78.25, '$99.99') as new_dress_shoes, to_varchar(-12454.8, '99G999D9S') as bank_withdrawal

Earlier versions of the Spark Engine do not support this function. I had to update my cluster to the latest version of Spark for the unrecognized function error to go away.

The output of the function does not match what is documented. The second expression represents a new pair of dress shoes I want to buy. We want to place a currency symbol at the start of the formatted string. This error is not caused by casting too much data since the format string expects two digits before and after the decimal place. I can only assume there is a bug in the current implementation.

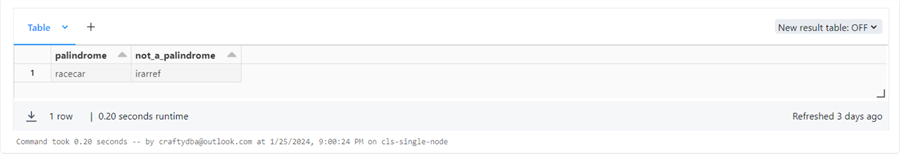

Before continuing our exploration of formatting functions, I want to talk about the reverse function. Ferrari is in the business of making high-end performance cars. What is more interesting is the word “racecar.” When reversed, it is the same word. A palindrome is when the word and its reversal are the same.

%sql

select

reverse('racecar') as palindrome,

reverse('ferrari') as not_a_palindrome;

The output of the Spark SQL query is shown below. Unfortunately, the word Ferrari is not a palindrome.

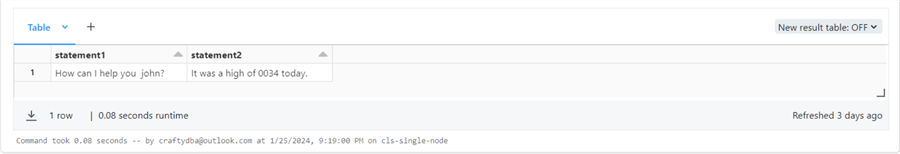

Both the printf and format_string functions use Java- or C-like format strings. The example below pads both a string and a number.

%sql

select

printf("How can I help you %5s?", "john") as statement1,

printf("It was a high of %04d today.", 34) as statement2

The expected output is shown below.

What happens if we want to format a decimal number?

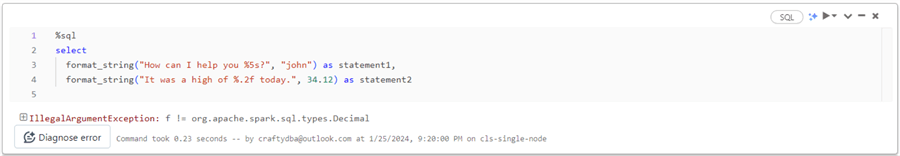

The code below uses the f notation to format a floating-point number with two digits to the right of the decimal point.

%sql

select

format_string("How can I help you %5s?", "john") as statement1,

format_string("It was a high of %.2f today.", 34.12) as statement2;

Unfortunately, we found another bug!

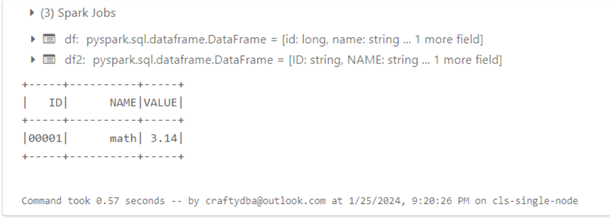

How do I know this is a bug? Well, all these functions have equivalent methods in the Spark dataframe library. We can code up a test right now to confirm it works correctly when using dataframe methods versus Spark SQL functions.

The Python code below creates a dataframe and uses the format_string method. The number stored in the data frame is the constant PI rounded to two digits.

%python

# import library

from pyspark.sql.functions import format_string

# create dataframe

data = [(1, "math", 3.14)]

columns = ["id", "name", "value"]

df = spark.createDataFrame(data, columns)

# format dataframe

df2 = df.select("id", "name", "value"). select(

format_string("%05d", "id").alias("ID"),

format_string("%10s", "name").alias("NAME"),

format_string("%.2f", "value").alias("VALUE")

)

df2.show()

The output below shows the correct representation of our math constant as a string.

The table below has links to the functions used in the above queries.

| Example | Function | Description | Issues |

|---|---|---|---|

| 1 | string | Convert number to a string. | |

| 2 | to_char | Convert number to string using format mask. | Shows mask when input is too big. |

| 3 | to_varchar | Convert number to string using format mask. | Check Spark Version. Possible Bug. |

| 4 | reverse | Reverse the input string. | |

| 5 | printf | Format strings and/or numbers. | Bug with floating point numbers. |

| 6 | format_string | Format strings and/or numbers. | Bug with floating point numbers. |

Out of all the formatting functions I tested, the string function is the most reliable.

String Search – Contains or Position

A few functions return a boolean if the main string contains a substring. Another set of functions returns the position that the substring starts in the main string.

We need a table with a couple of paragraphs of text for each record to adequately test these functions. What came to mind is one of the first movies I saw at the theaters all by myself as a kid. Back in those days, they had a Saturday double feature. Typically, the theater would show one A-listed movie with a B-listed movie. Parents loved this event since kids were dropped off at the movie theaters for a few hours to give them a break.

The first movie I remember seeing was Star Wars. The image below shows the opening crawling text featured at the beginning of Star Wars Episode IV: A New Hope, and a crawl screen appears in the first six Star Wars films. Nowadays, this can be simply done with a computer. Back in 1977, this was done by backlighting the text and panning the TV camera.

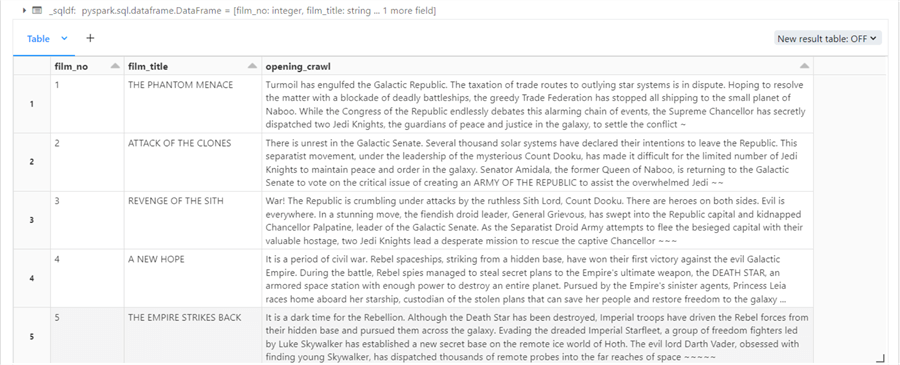

Our task is to create a table that contains the crawling text from each of the first six Star Wars movies. Of course, the movies were shot out of order, but I am not recording the release dates in the table. The Python code below drops and creates the table named star_wars.

%python # drop table stmt = "drop table if exists star_wars"; spark.sql(stmt) # create table stmt = "create table star_wars(film_no int, film_title string, opening_crawl string);" spark.sql(stmt)

I will review the technique to add one row to the table. The complete code is enclosed at the end of the tip. Three variables named num, title, and crawl are defined below. The crawl variable contains the text shown at the movie's beginning. The string replace function in Python is used to escape out single quotes. Otherwise, we would end up with invalid Spark SQL. I like using the shorthand string format. Just prefix a string with f and place the variables within curly brackets {}.

%python

#

# Episode I

#

num = 1

title = "THE PHANTOM MENACE"

crawl = """

Turmoil has engulfed the

Galactic Republic. The taxation

of trade routes to outlying star

systems is in dispute.

Hoping to resolve the matter

with a blockade of deadly

battleships, the greedy Trade

Federation has stopped all

shipping to the small planet

of Naboo.

While the Congress of the

Republic endlessly debates

this alarming chain of events,

the Supreme Chancellor has

secretly dispatched two Jedi

Knights, the guardians of

peace and justice in the

galaxy, to settle the conflict ~

"""

# fix single quotes

crawl = crawl.replace("'", "\\'")

# insert record

stmt = f"insert into star_wars(film_no, film_title, opening_crawl) values ({num}, '{title}', '{crawl}');"

spark.sql(stmt)

To get six records in the table, I repeated the code with different values for the three variables. Please see the code bundle at the end of the article for all statements. The simple select statement shows the data in the table.

%sql select s.* from star_wars as s order by s.film_no;

The image below shows 5 of the 6 records. The screen in the web browser was not big enough to capture all the output.

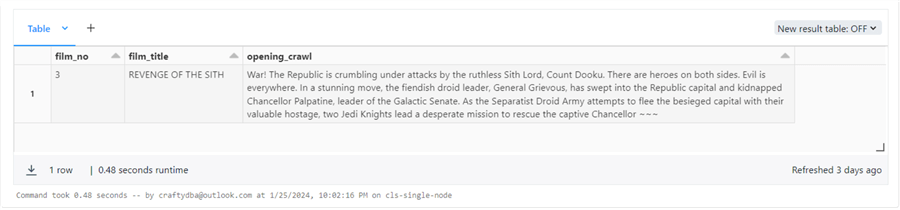

There are three boolean functions called endswith, startswith, and contains. Only the last function works with both single and multiline text.

%sql select 'ends_with', s.* from star_wars as s where endswith(s.opening_crawl, "~~") union select 'starts_with', s.* from star_wars as s where startswith(s.opening_crawl, 'War!') union select 'contains', s.* from star_wars as s where contains(s.opening_crawl, "War!")

The output shows Star Wars: Revenge of the Sith contains the “War!” search string.

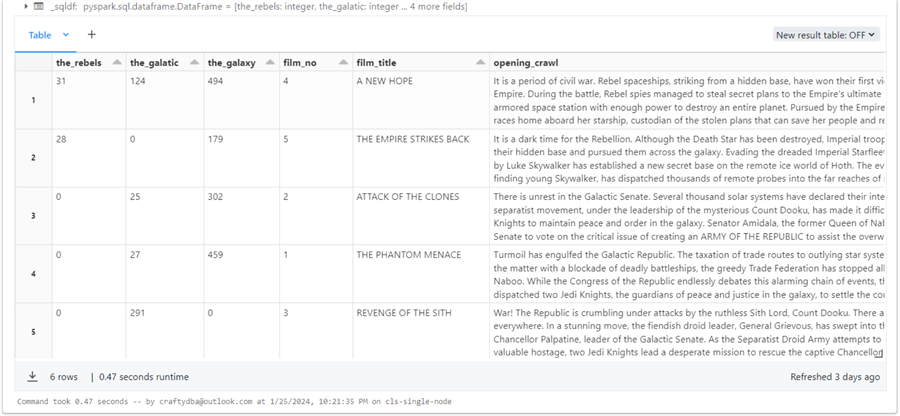

Additionally, three functions return the position of the occurrence of a search string (substring). The locate, position, and instr functions return the same results given the same input. Just be careful of the position of the parameters that you pass.

%sql

select

locate('Rebel', s.opening_crawl) as the_rebels,

position('Galactic', s.opening_crawl) as the_galatic,

instr(s.opening_crawl, 'galaxy') as the_galaxy,

*

from star_wars as s

The image below shows the location of the following search strings in the opening crawl text: “Rebel,” “Galactic,” and “galaxy.”

Let's repeat these tests with a single-line string. The code below searches the first sentence of Star Wars: A New Hope crawl for words.

%sql

select

endswith('It is a period of civil war.', 'war.') as ends_test,

startswith('It is a period of civil war.', 'It ') as starts_test,

contains('It is a period of civil war.', 'civil') as contains_test,

locate('civil', 'It is a period of civil war.') as locate_test,

position('civil', 'It is a period of civil war.') as position_test,

instr('It is a period of civil war.', 'civil') as instr_test;

The output below shows that all functions work.

The functions related to searching for a substring are shown below. The function either returns a boolean value or an integer representing a position in the string.

| Example | Function | Description |

|---|---|---|

| 1 | endswith | Does string end with substring? |

| 2 | startswith | Does string start with substring? |

| 3 | contains | Does string contain substring? |

| 4 | locate | Return substring position. |

| 5 | position | Return substring position. |

| 6 | instr | Return substring position. |

In a nutshell, the endswith and startswith functions do not work correctly with multi-line text.

String Part - Return or Replace

There are a few functions that return parts of a string. The first function we are going to review is the left string function. We will leverage the musketeers table containing quotes from the book.

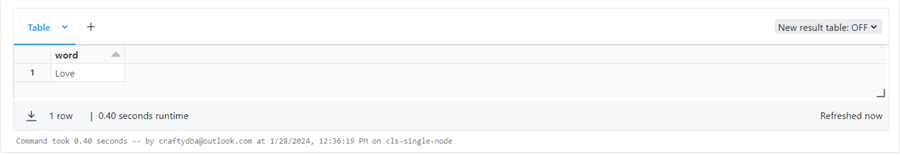

%sql select left(quote_txt, 4) as word from musketeers where quote_id = 3;

The above code returns the word “love.” See the image below.

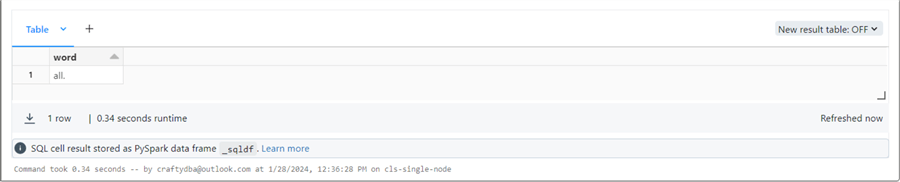

The opposite of the left string function is the right string function.

%sql select right(quote_txt, 4) as word from musketeers where quote_id = 2;

The above code returns the word “all.” See the image below.

The substr and substring functions are probably aliases for each other. The first example uses the comma syntax.

%sql select quote_txt, substr(quote_txt, 26, 4) as start_end_loc, substr(quote_txt, 41) as positive_loc, substr(quote_txt, -11) as negative_loc from musketeers where quote_id = 1;

The second example uses the FROM and FOR keywords.

%sql select quote_txt, substring(quote_txt FROM 26 FOR 4) as start_end_loc, substring(quote_txt FROM 41) as positive_loc, substring(quote_txt FROM -11) as negative_loc from musketeers where quote_id = 1;

Note: A negative value for position means counts are from the end of the string. The output of both queries is the same and shown below.

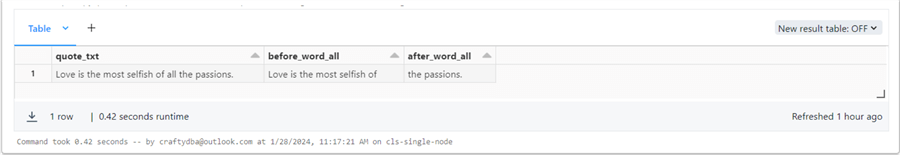

The substring_index function is unique. It finds the substring within a main string.

%sql select quote_txt, substring_index(quote_txt, 'all', 1) as before_word_all, substring_index(quote_txt, 'all', -1) as after_word_all from musketeers where quote_id = 3;

A positive value returns the string before the search string but not including the search string. A negative value produces the string after the search string. The example below looks for the word “all” and returns the before and after parts of the string.

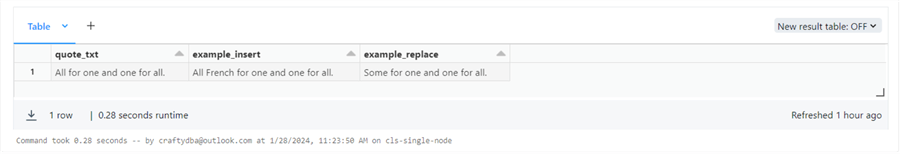

The overlay function uses the same FROM and FOR keywords. A zero value for the FOR clause causes an insert action, while a positive value causes an overlay action.

%sql select quote_txt, overlay(quote_txt placing ' French' FROM 4 FOR 0) as example_insert, overlay(quote_txt placing 'Some' FROM 1 FOR 3) as example_replace from musketeers where quote_id = 2;

The output is a play on words of the famous Musketeer quote.

The last function I will review is named replace, which works exactly like I expected. The function is case-sensitive. I want to replace the word “all” with “some.”

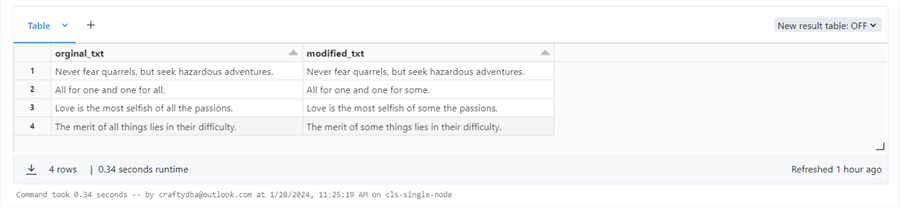

%sql select quote_txt as orginal_txt, replace(quote_txt, 'all', 'some') as modified_txt from musketeers;

The expected output is shown below. The left, right, and replace functions should not be new concepts to experienced developers. The other functions have interesting parameters and outputs.

The functions related to searching for a substring are shown below.

| Example | Function | Description |

|---|---|---|

| 1 | substr | Does string end with substring? |

| 2 | substring | Does string start with substring? |

| 3 | substring_index | Does string contain substring? |

| 4 | left | Return left part of string. |

| 5 | right | Return right part of string |

| 6 | overlay | Overlay part of string |

| 7 | replace | Replace one substring using another. |

String replacement is a very common technique that you will use in the future.

String Case

The Spark language contains multiple functions that do the same thing. For instance, the upper and lower functions change the case of each letter in the alphabet.

%sql select quote_txt as orginal_txt, replace(quote_txt, 'all', 'some') as modified_txt from musketeers where quote_id = 4;

The lcase and ucase functions perform the same actions as the previous functions.

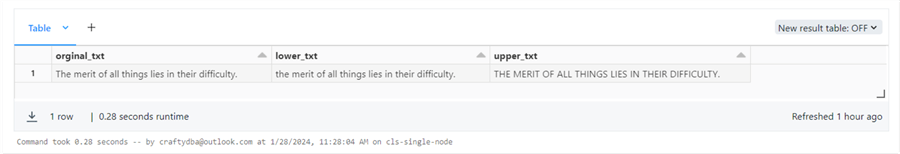

%sql select quote_txt as orginal_txt, lcase(quote_txt) as lower_txt, ucase(quote_txt) as upper_txt from musketeers where quote_id = 4;

The output from both snippets can be seen below.

There might be a need to capitalize the start of each word. A great example is a person's full name. The initcap function is available to solve that business problem.

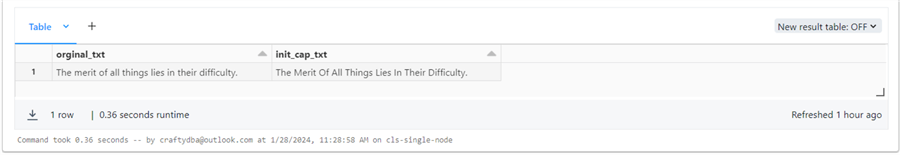

%sql select quote_txt as orginal_txt, initcap(quote_txt) as init_cap_txt from musketeers where quote_id = 4;

The output from executing the above query is seen below.

The functions related to string case are shown below.

| Example | Function | Description |

|---|---|---|

| 1 | lower | Convert to lowercase. |

| 2 | upper | Convert to upper case. |

| 3 | lcase | Convert to lowercase. |

| 4 | ucase | Convert to upper case. |

| 5 | initcap | Capitalize each word. |

Increase or Decrease Padding

Writers have been formatting documents since the invention of word-processing software. One of the earlier packages, LaTeX, was created in the 1980s by Leslie Lamport. Today, let's discuss adding spacing before or after words. The Spark SQL language supplies the developer with the lpad and rpad functions.

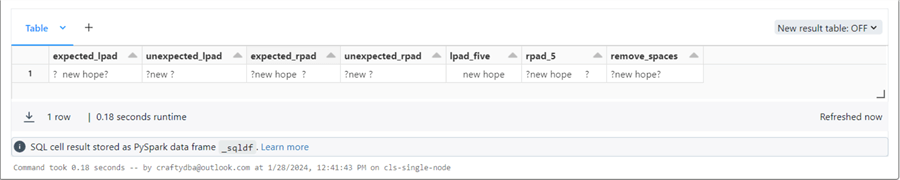

%sql

select

'?' || lpad('new hope', 10) || '?' as expected_lpad,

'?' || lpad('new hope', 4, ' ') || '?' as unexpected_lpad,

'?' || rpad('new hope', 10) || '?' as expected_rpad,

'?' || rpad('new hope', 4, ' ') || '?' as unexpected_rpad,

space(5) || 'new hope' as lpad_five,

'?' || 'new hope' || space(5) || '?' as rpad_5,

'?' || trim(' new hope ') || '?' as remove_spaces;

The output of the above query is shown below. The size parameter is the total size of the resulting string. This definition is unlike other programming languages in which you specify the number of spaces you want to add to the left or right of the string. If the size is smaller than the original string size, truncation occurs. The second and fourth expressions were not expected. Of course, define your padding using the space function seen in the last few examples or remove padding using the trim function.

The functions related to string padding are shown below.

| Example | Function | Description |

|---|---|---|

| 1 | lpad | Add spaces to the left. |

| 2 | rpad | Add spaces to the right. |

| 3 | space | Create a string of n spaces. |

| 4 | trim | Remove spaces. |

Splitting Strings

Typically, the string split function converts a delimited string into an array. The split_part function defined in Spark SQL returns the nth element of the resulting array.

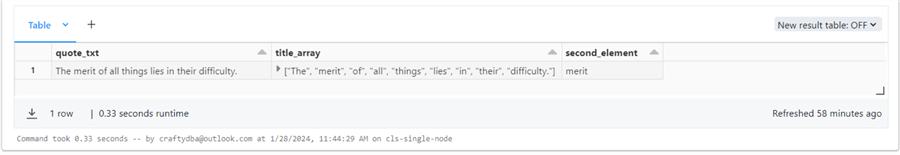

%sql select quote_txt, split(quote_txt, ' ') as title_array, split_part(quote_txt, ' ', 2) as second_element from musketeers where quote_id = 4;

The output shown below was generated from the above query. The sentence is broken up by spaces between words, and the second word is “merit.”

The functions related to string splitting are shown below.

| Example | Function | Description |

|---|---|---|

| 1 | split | Split the string on a delimiter into an array. |

| 2 | split_part | After string split, return the nth array element. |

String Compare

Three functions can be used to compare a string to a pattern: like – case sensitive matching, ilike – case insensitive matching, and rlike – matching using regular expressions.

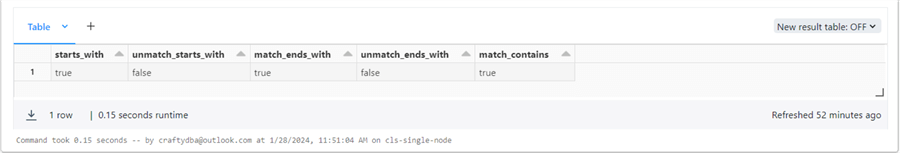

%sql

select

like('python spark', 'python%') as starts_with,

like('python spark', '%python') as unmatch_starts_with,

like('python spark', '%spark') as match_ends_with,

like('python spark is real cool', '%spark') as unmatch_ends_with,

like('python spark is real cool', '%spark%') as match_contains;

When using like and ilike, the wild card % represents 1 to n characters. Depending on the match pattern, one can find strings at the start, middle, and end. I suggest reviewing the string data and match pattern to ensure the expected result is returned. The image below shows the output from the query.

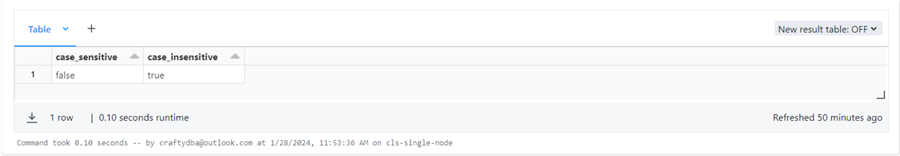

The example below showcases the case sensitive and case insensitive functions.

%sql

select

like('python spark', 'Python%') as case_sensitive,

ilike('python spark', 'Python%') as case_insensitive;

The expected results are shown below.

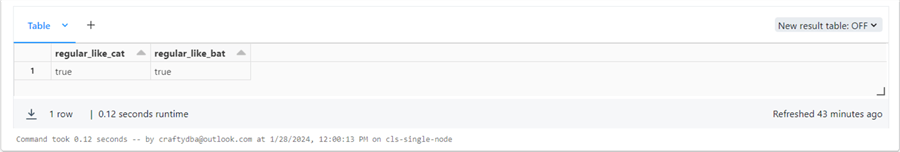

Complete coverage of regular expressions can be an article in itself. The first expression matches any sentence that starts with a cat or hat. The second expression matches any expression that ends with bat, cat, or hat.

%sql

select

rlike('cat in the hat', '^[hc]at') as regular_like_cat,

rlike('dog with a bat', '[hcb]at$') as regular_like_bat

What is a match? These functions always return true or false. The output shown below completes our comparison functions.

The functions related to string comparing are shown below.

| Example | Function | Description |

|---|---|---|

| 1 | like | Case sensitive compare. |

| 2 | Ilike | Case insensitive compare. |

| 3 | rlike | Compare using regular expressions |

Natural Language

The last set of functions for this tip is built around our natural language. Every paragraph can be broken down into sentences. Each sentence can be divided into words. What makes one word different from another is how it sounds. The first function is named sentences. The query below breaks each quote into an array per sentence, each word being an element.

%sql select *, sentences(quote_txt) from musketeers;

This query is nifty because we can easily traverse an array. The image below shows the output.

Let's make sure the function works with multiline text.

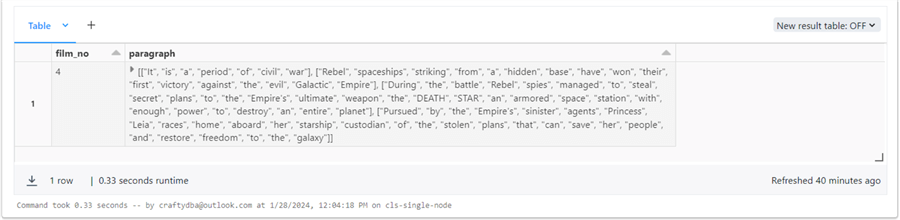

%sql select film_no, sentences(opening_crawl) as paragraph from star_wars;

The query seen above creates an array of arrays. The outputted paragraph below contains the words that make up the opening crawl for Star Wars: A New Hope.

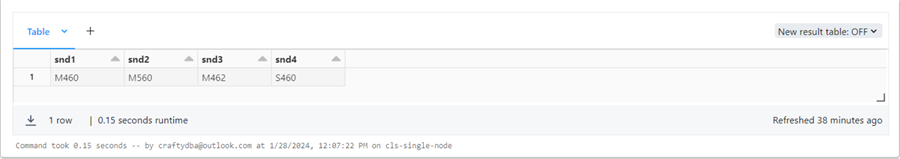

The soundex function converts a string into code. The query below shows names that are close to each other.

%sql

select

soundex('Miller') as snd1,

soundex('Miner') as snd2,

soundex('Millers') as snd3,

soundex('Siller') as snd4;

The first character of the code relates to the start of the word. The first and last entries sound the same but start with different letters.

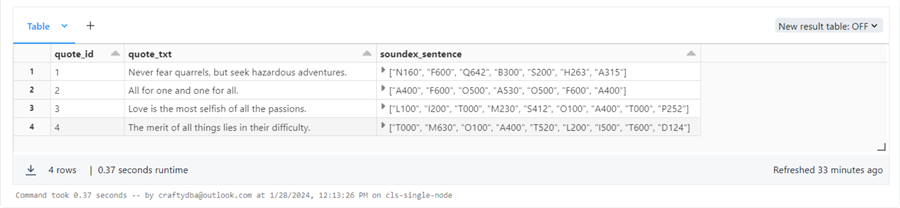

What you might not realize is that arrays are very powerful structures. Not all databases or languages support them. To show their power, I will use the transform function to convert each word in the Musketeer quote to soundex code.

%sql select quote_id, quote_txt, transform(sentences(quote_txt)[0], x -> soundex(x)) as soundex_sentence from musketeers;

We can see that the word “all,” regardless of case, has the Soundex code of A400.

Converting natural language into codes allows the developer to compare and/or contrast written words differently. The functions related to natural language are shown below.

| Example | Function | Description |

|---|---|---|

| 1 | sentences | Break a sentence into arrays of words. |

| 2 | soundex | Convert a word into a soundex code. |

Summary

The Spark Language contains many functions that deal with strings. This tip covered the following topics: translating to or from ASCII coding; finding the length of a string; picking the first non-null string; combining one or more strings; casting and/or formatting strings; finding the existence or position of a substring; returning or replacing part of a string; changing the case of a string; increasing or decreasing padding; splitting strings into arrays; comparing strings using different methods; splitting paragraphs into sentences and sentences into arrays; and understanding how words sound. Each of these topics might be of valuable use in the future.

Reviewing the formatting functions revealed some quirks and/or bugs. First, one function did require the most recent version of the Spark engine. A few functions returned the formatting mask if the input data was larger than the mask size. Finally, two bugs were found. Please revisit the section for details.

The padding functions did not work as I expected. The size parameter passed as an argument is the total size of the resulting string, not the size of the padding to add to the left or right of the string. We can easily create our custom function if we want.

Overall, having a good understanding of how to manipulate the basic data types (date/time, numbers, and strings) is very important for the big data engineer. Today's article focused on basic string functions. Enclosed is the notebook used in this article. Next time, we will focus on working with array functions.

Next Steps

- Learn about statistical functions.

- Learn about binary functions.

- Learn about string encoding functions.

- Learn about array functions.

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2024-04-01