By: Koen Verbeeck | Comments (17) | Related: More > Integration Services Development

Problem

In my previous tips about BIML, I first introduced the concepts in Introduction to Business Intelligence Markup Language (BIML) for SSIS and I showed how you can import flat files in Using BIML to Generate an SSIS Import Package. Although BIML is very powerful, you wouldn't use it to generate one package at a time. It is surely much faster to create an SSIS package using the Visual Studio development environment instead of typing all that XML, right? Using metadata however, we can generate multiple packages on the fly. In this tip, I'll convert the BIML script for generating the import package of the previous tip to a dynamic package generating machine. I recommend reading the previous tips, as this tip builds upon their foundations.

Solution

In order to generate multiple packages, BIML allows the nesting of .NET code inside the scripts to make them more dynamic and flexible. Let's illustrate with an example. Consider the following BIML code that generates an OLE DB connection manager:

<Connection Name ="OLE_BIML" ConnectionString="Data Source=.;InitialCatalog=BIML;Provider=SQLNCLI11.1;Integrated Security=SSPI;Auto Translate=False;"/>

Now assume we have the following C# data table called ConnectionInfo with the following metadata:

| ID | Server | Database |

| 1 | localhost | AdventureWorks |

| 2 | localhost | Contoso |

Using the special tags <# #> we can add .NET code to the BIML script. We're going to loop over the rows of the data table and insert the relevant pieces of data into the BIML code. This gives us the following code:

<#

int ID;

string DestinationServer;

string DestinationDatabase;

/* Loop over each server-database pair and create the corresponding connection. */

foreach(DataRow row in ConnectionInfo.Rows){

ID = row[0].ToString();

DestinationServer = row[1].ToString();

DestinationDatabase = row[2].ToString();

#>

<Connection Name="OLE_<#=ID#>"

ConnectionString="Data Source=<#=DestinationServer#>;

Initial Catalog=<#=DestinationDatabase#>;

Provider=SQLNCLI11.1;Integrated Security=SSPI;Auto Translate=False;">

</Connection>

<#

}

#>Using the <#=variableName#> tag, we can insert the value of C# variables into BIML. When the compiler interprets the previous code, he turns it into the following BIML code:

<Connection Name ="OLE_1" ConnectionString="Data Source=localhost;InitialCatalog=AdventureWorks; Provider = SQLNCLI11.1 ; Integrated Security=SSPI;Auto Translate=False;"/> <Connection Name ="OLE_1" ConnectionString="Data Source=localhost;InitialCatalog=Contoso; Provider = SQLNCLI11.1 ; Integrated Security=SSPI;Auto Translate=False;"/>

This piece of BIML on its turn will generate two connection managers - based on the metadata - in the resulting SSIS package.

Now that we know the basics, let's turn the import package of the previous tip into a dynamic metadata based script.

Create metadata repository

Use the following T-SQL script to create a database, the tables that will store the metadata and the destination tables for the flat file data.

USE [master];

GO

IF EXISTS (SELECT name FROM sys.databases WHERE name = N'BIML')

DROP DATABASE [BIML];

GO

IF NOT EXISTS (SELECT name FROM sys.databases WHERE name = N'BIML')

CREATE DATABASE [BIML];

GO

USE [BIML];

GO

-- This table will store the metadata about the flat files we are going to load:

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[dbo].[FileMetadata]')

AND type IN (N'U'))

BEGIN

CREATE TABLE [dbo].[FileMetadata](

[ID] [int] IDENTITY(1,1) NOT NULL,

[FileName] [varchar](100) NOT NULL,

[ImportFolder] [varchar](250) NOT NULL,

[Delimiter] [varchar](5) NULL,

[TextQualifier] [varchar](5) NULL,

[DefaultColumnLength] [int] NOT NULL,

[Nullability] [bit] NOT NULL,

[DestinationServer] [varchar](100) NOT NULL,

[DestinationDatabase] [varchar](100) NOT NULL,

[DestinationSchema] [varchar](10) NOT NULL,

[DestinationTable] [varchar](50) NOT NULL,

[TruncateOnLoad] [bit] NOT NULL

) ON [PRIMARY]

END

-- This table will store metadata about the different columns of the flat files:

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[dbo].[ColumnMetadata]')

AND type IN (N'U'))

BEGIN

CREATE TABLE [dbo].[ColumnMetadata]

(

[ID] [int] IDENTITY(1,1) NOT NULL,

[FileID] [int] NOT NULL,

[ColumnName] [varchar](100) NOT NULL

);

END

-- Destination tables

IF NOT EXISTS (SELECT * FROM sys.schemas WHERE name = N'Import')

EXEC sys.sp_executesql N'CREATE SCHEMA [Import]';

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[Import].[CustomerImport]')

AND type IN (N'U'))

BEGIN

CREATE TABLE [Import].[CustomerImport](

[CustomerAlternateKey] [varchar](250) NULL,

[Title] [varchar](250) NULL,

[FirstName] [varchar](250) NULL,

[MiddleName] [varchar](250) NULL,

[LastName] [varchar](250) NULL,

[BirthDate] [varchar](250) NULL,

[MaritalStatus] [varchar](250) NULL,

[Suffix] [varchar](250) NULL,

[Gender] [varchar](250) NULL,

[EmailAddress] [varchar](250) NULL,

[YearlyIncome] [varchar](250) NULL,

[TotalChildren] [varchar](250) NULL,

[NumberChildrenAtHome] [varchar](250) NULL,

[EnglishEducation] [varchar](250) NULL,

[EnglishOccupation] [varchar](250) NULL,

[HouseOwnerFlag] [varchar](250) NULL,

[NumberCarsOwned] [varchar](250) NULL,

[AddressLine1] [varchar](250) NULL,

[AddressLine2] [varchar](250) NULL,

[Phone] [varchar](250) NULL,

[DateFirstPurchase] [varchar](250) NULL,

[CommuteDistance] [varchar](250) NULL

);

END

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[Import].[SalesImport]')

AND type in (N'U'))

BEGIN

CREATE TABLE [Import].[SalesImport](

[ProductAlternateKey] [varchar](250) NULL,

[CustomerAlternateKey] [varchar](250) NULL,

[SalesOrderNumber] [varchar](250) NULL,

[SalesOrderLineNumber] [varchar](250) NULL,

[RevisionNumber] [varchar](250) NULL,

[OrderQuantity] [varchar](250) NULL,

[UnitPrice] [varchar](250) NULL,

[ExtendedAmount] [varchar](250) NULL,

[UnitPriceDiscountPct] [varchar](250) NULL,

[DiscountAmount] [varchar](250) NULL,

[ProductStandardCost] [varchar](250) NULL,

[TotalProductCost] [varchar](250) NULL,

[SalesAmount] [varchar](250) NULL,

[TaxAmt] [varchar](250) NULL,

[Freight] [varchar](250) NULL,

[OrderDate] [varchar](250) NULL,

[DueDate] [varchar](250) NULL,

[ShipDate] [varchar](250) NULL

);

END

Use the following script to insert the metadata of the flat files into the tables:

-- Insert file metadata (change the path to the import folder if necessary) INSERT [dbo].[FileMetadata] ([FileName], [ImportFolder], [Delimiter], [TextQualifier], [DefaultColumnLength] ,[Nullability], [DestinationServer], [DestinationDatabase], [DestinationSchema] ,[DestinationTable], [TruncateOnLoad]) VALUES (N'DimCustomer_*.txt', N'D:\FlatFiles', N'|', N'', 250, 1, N'localhost', N'BIML', N'Import', N'CustomerImport', 1) ,(N'DimProducts_*.csv', N'D:\FlatFiles', N';', N'"', 250, 1, N'localhost', N'BIML', N'Import', N'ProductsImport', 1); GO -- Insert column metadata INSERT [dbo].[ColumnMetadata] ([FileID], [ColumnName]) VALUES (1, N'CustomerAlternateKey'),(1, N'Title'),(1, N'FirstName'),(1, N'MiddleName'),(1, N'LastName'),(1, N'BirthDate') ,(1, N'MaritalStatus'),(1, N'Suffix'),(1, N'Gender'),(1, N'EmailAddress'),(1, N'YearlyIncome'),(1, N'TotalChildren') ,(1, N'NumberChildrenAtHome'),(1, N'EnglishEducation'),(1, N'EnglishOccupation'),(1, N'HouseOwnerFlag'),(1, N'NumberCarsOwned') ,(1, N'AddressLine1'),(1, N'AddressLine2'),(1, N'Phone'),(1, N'DateFirstPurchase'),(1, N'CommuteDistance'),(2, N'ProductAlternateKey') ,(2, N'WeightUnitMeasureCode'),(2, N'SizeUnitMeasureCode'),(2, N'EnglishProductName'),(2, N'StandardCost') ,(2, N'FinishedodsFlag'),(2, N'Color'),(2, N'SafetyStockLevel'),(2, N'ReorderPoint'),(2, N'ListPrice'),(2, N'Size'),(2, N'SizeRange') ,(2, N'Weight'),(2, N'DaysToManufacture'),(2, N'ProductLine'),(2, N'DealerPrice'),(2, N'Class'),(2, N'Style'),(2, N'ModelName'); GO

The metadata will be retrieved using the following stored procedures:

USE [BIML]

GO

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[BIML].[GetColumns]')

AND type IN (N'P', N'PC'))

BEGIN

EXEC dbo.sp_executesql @statement = N'CREATE PROC [BIML].[GetColumns]

@FileID INT

AS

--DECLARE @FileID INT = 1;

SELECT ColumnName FROM [dbo].[ColumnMetadata] WHERE [FileID] = @FileID;'

END

GO

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[BIML].[GetConnectionInfo]')

AND type in (N'P', N'PC'))

BEGIN

EXEC dbo.sp_executesql @statement = N'CREATE PROC [BIML].[GetConnectionInfo] AS

SELECT DISTINCT DestinationServer, DestinationDatabase

FROM [dbo].[FileMetadata];'

END

GO

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[BIML].[GetMetadata]')

AND type in (N'P', N'PC'))

BEGIN

EXEC dbo.sp_executesql @statement = N'CREATE PROC [BIML].[GetMetadata] AS

SELECT

ID

,[FileName]

,ImportFolder

,Delimiter

,TextQualifier = CASE WHEN TextQualifier = '''' THEN ''<none>'' ELSE CASE WHEN TextQualifier = ''"'' THEN ''"'' ELSE TextQualifier END END

,DefaultColumnLength

,DestinationServer

,DestinationDatabase

,DestinationSchema

,DestinationTable

,TruncateOnLoad

FROM [dbo].[FileMetadata];'

END

GO

Now that the metadata is set-up, we can use it inside BIML.

Extending the script

First of all, we need to declare to BIML which .NET programming language we'll use - Visual Basic or C# - and which namespaces we're going to use, followed by the BIML root node.

<#@ template language="C#" hostspecific="true"#> <#@ import namespace="System.Data"#> <#@ import namespace="System.Data.SqlClient"#> <#@ import namespace="System.IO"#> <Biml xmlns="http://schemas.varigence.com/biml.xsd">

Next we declare all the variables we are going to use in the script, together with the data tables. I already retrieve the metadata about the files and about the different connections. This information will be used to create the connection managers.

<#

/* Declare variables */

string MetadataConnection = "Server=localhost;Initial Catalog=BIML;Integrated Security=SSPI;Provider=SQLNCLI11.1"; // The connection string to the metadata database. This is hardcoded.

string FileID;

string FileName="";

string ImportFolder ="";

string ColumnDelimiter ="";

string Qualifier ="";

int DefaultColumnLength;

string DestinationServer = "";

string DestinationDatabase ="";

string DestinationSchema ="";

string DestinationTable ="";

bool TruncateOnLoad;

DataTable FileMetadata;

DataTable ColumnMetadata;

DataTable ConnectionInfo;

string ColumnName ="";

string FileNameClean ="";

/* Retrieve the metadata for the packages. */

FileMetadata = ExternalDataAccess.GetDataTable(MetadataConnection,"BIML.GetMetadata");

ConnectionInfo = ExternalDataAccess.GetDataTable(MetadataConnection,"BIML.GetConnectionInfo");

#>We start with the file formats. Add the FileFormat element first, followed by a piece of C# code looping over the metadata stored in the data table. For each iteration, we assign the current values to the different variables. Inside the loop, code we close the code element and add actual BIML code. This code contains C# variables to make it dynamic. This gives us the following script:

<FileFormats>

<#

/* Loop over each file and create its corresponding flatfile format. */

foreach(DataRow row in FileMetadata.Rows){

FileID = row[0].ToString();

FileName = row[1].ToString();

ImportFolder = row[2].ToString();

ColumnDelimiter = row[3].ToString();

Qualifier = row[4].ToString();

DefaultColumnLength = (int)row[5];

DestinationServer = row[6].ToString();

DestinationDatabase = row[7].ToString();

DestinationSchema = row[8].ToString();

DestinationTable = row[9].ToString();

TruncateOnLoad = (bool)row[10];

#>

<FlatFileFormat FlatFileType="Delimited"

ColumnNamesInFirstDataRow="true"

RowDelimiter="CRLF"

Name="FFF <#=DestinationTable#>"

TextQualifer="<#=Qualifier#>"

IsUnicode="false">

Now we need to add the various columns to the flat file format. We start with a new code element, retrieve the column metadata for the current file and start an inner for each loop. In each iteration, we add another column to the fileformat. For the sake of simplicity, we give each column the same data type and size. At the end, we close off with the columns, flatfileformat and fileformat tags. Don't forget end the for each loops as well!

<Columns>

<#

ColumnMetadata = ExternalDataAccess.GetDataTable(MetadataConnection,"BIML.GetColumns " + FileID); // Retrieve the column info for the current flat file.

int i = 0;

int ColumnCount = ColumnMetadata.Rows.Count;

/* Loop over each column and add it to the flatfile format. */

foreach(DataRow r in ColumnMetadata.Rows){

ColumnName = r[0].ToString();

if(i == ColumnCount-1)

{

ColumnDelimiter = "CRLF"; // the last column is not delimited by the column delimiter, but by row delimiter. This is hardcoded to CRLF.

}

#>

<Column ColumnType="Delimited" DataType= "AnsiString" Delimiter="<#=ColumnDelimiter#>" Length="<#=DefaultColumnLength#>" Name="<#=ColumnName#>" CodePage="1252"></Column>

<# i = i+1;

}

#>

</Columns>

</FlatFileFormat>

<#

}

#>

</FileFormats>

Next up are the connections. We loop over the ConnectionInfo data table to create the OLE DB connections (remember our example earlier).

<Connections>

<#

/* Loop over each server-database pair and create the corresponding connection. */

foreach(DataRow row in ConnectionInfo.Rows){

DestinationServer = row[0].ToString();

DestinationDatabase = row[1].ToString();

#>

<Connection Name="OLE_<#=DestinationServer#>_<#=DestinationDatabase#>"

ConnectionString="Data Source=<#=DestinationServer#>;Initial Catalog=<#=DestinationDatabase#>;Provider=SQLNCLI11.1;Integrated Security=SSPI;Auto Translate=False;">

</Connection>

Now we are going to loop over the metadata of the different files again and create the corresponding flat file connection managers, using the fileformats created earlier. Some clean-up is necessary, as the connection manager name cannot contain some characters, such as the wildcard symbol * and the underscore. The flat file connection managers also get an expression to configure their connectionstring by a variable set by the SSIS for each loop. If you're not following, read the previous tip Using BIML to Generate an SSIS Import Package.

<#

}

/* Loop over the files again and create the corresponding flat file connections. */

foreach(DataRow row in FileMetadata.Rows){

FileID = row[0].ToString();

FileName = row[1].ToString();

ImportFolder = row[2].ToString();

ColumnDelimiter = row[3].ToString();

Qualifier = row[4].ToString();

DefaultColumnLength = (int)row[5];

DestinationServer = row[6].ToString();

DestinationDatabase = row[7].ToString();

DestinationSchema = row[8].ToString();

DestinationTable = row[9].ToString();

TruncateOnLoad = (bool)row[10];

FileNameClean = FileName.Replace("*","").Replace("_",""); // replace the asterix and underscores in the filename with blanks. These symbols are not allowed in the name of a connection manager.

#>

<FlatFileConnection Name="FF_<#=FileNameClean#>"

FilePath="<#=ImportFolder#>\\invalid.txt"

FileFormat="FFF <#=DestinationTable#>">

<Expressions>

<Expression PropertyName="ConnectionString">

@[User::sFullFilePath]

</Expression>

</Expressions>

</FlatFileConnection>

<#

}

#>

</Connections>

The time has come to declare the packages. We will loop once more over the flat file metadata. This needs to be done in different loops instead of just one big loop. Some BIML elements, such as Connections and Packages, can only appear once. If we would put everything in one loop, they would be generated multiple times and the result would be invalid BIML code. The package contain parameters for easy configuration.

<Packages>

<#

/* Loop once more over the flat file and create an SSIS package for each one. */

foreach(DataRow row in FileMetadata.Rows){

FileID = row[0].ToString();

FileName = row[1].ToString();

ImportFolder = row[2].ToString();

ColumnDelimiter = row[3].ToString();

Qualifier = row[4].ToString();

DefaultColumnLength = (int)row[5];

DestinationServer = row[6].ToString();

DestinationDatabase = row[7].ToString();

DestinationSchema = row[8].ToString();

DestinationTable = row[9].ToString();

TruncateOnLoad = (bool)row[10];

FileNameClean = FileName.Replace("*","").Replace("_",""); // replace the asterix and underscores in the filename with blanks. These symbols are not allowed in the name of a connection manager.

#>

<Package Name="Import <#=DestinationTable#>" ConstraintMode="Linear">

<Parameters>

<Parameter Name ="sSourceFolder" DataType="String"><#=ImportFolder#></Parameter>

</Parameters>

<Variables>

<Variable Name="sFullFilePath" DataType="String"><#=ImportFolder#>\invalid</Variable>

</Variables>

<Tasks>

The metadata contains a flag if we want to truncate the destination table or not. We can solve this with a simple if construct in C#: we only add the Execute SQL Task with the TRUNCATE TABLE statement if necessary.

<#

if(TruncateOnLoad){

#>

<!-- If TruncateOnLoad is true, add an Execute SQL Task that will truncate the destination table. -->

<ExecuteSQL Name="(SQL) Truncate Destination Table" ConnectionName="OLE_<#=DestinationServer#>_<#=DestinationDatabase#>">

<DirectInput>TRUNCATE TABLE <#=DestinationSchema#>.<#=DestinationTable#>;</DirectInput>

</ExecuteSQL>

<#

}

#>

We can finish with the rest of the package, by declaring the for each loop and the data flow.

<ForEachFileLoop Name="(FELC) Loop over Files" Folder="<#=ImportFolder#>"

FileSpecification="<#=FileName#>"

ConstraintMode="Parallel"

RetrieveFileNameFormat="FullyQualified">

<VariableMappings>

<VariableMapping Name="Mapping" VariableName="User.sFullFilePath"/>

</VariableMappings>

<Expressions>

<Expression PropertyName="Directory">@[$Package::sSourceFolder]</Expression>

</Expressions>

<Tasks>

<Dataflow Name="(DFT) Import Flat File">

<Transformations>

<FlatFileSource Name="(FF_SRC) Read Flat File"

ConnectionName="FF_<#=FileNameClean#>"

RetainNulls="true"/>

<OleDbDestination Name="(OLE_DST) Write Data to DB"

ConnectionName="OLE_<#=DestinationServer#>_<#=DestinationDatabase#>"

UseFastLoadIfAvailable="true">

<ExternalTableOutput Table="<#=DestinationSchema#>.<#=DestinationTable#>"/>

</OleDbDestination>

</Transformations>

</Dataflow>

</Tasks>

</ForEachFileLoop>

</Tasks>

</Package>

<#

}

#>

</Packages>

</Biml>

And that's it, the BIML script is ready to generate some packages! Make sure the destination tables exist before you compile the BIML code.

The Result

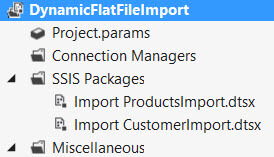

When running the BIML script, multiple packages are added to the project.

The code can easily be extended to create a master package as well, that will execute every import package in parallel.

Conclusion

This tip demonstrates the pure power of BIML: generating multiple SSIS packages on the fly using metadata from a repository. It takes some effort to create the BIML script first, but it will pay off in every ETL project you will use it.

Next Steps

- Read the two previous tips about BIML if you haven't already:

- Download the script and the flat files here and try it out yourself! The final BIML script has a master package added to execute the child packages.

- Think about your ETL processes and which packages are repetitive. Try to replace those with BIML.

- Check out the following blogs for more BIML goodness:

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips