By: Tim Smith | Comments | Related: More > Import and Export

Problem

What are some tools we can use for various ETL tasks or related tasks, such as extracting, transforming or loading or tasks such as metadata management for files or SQL Server data?

Solution

Where you work or consult will mostly determine what tools you use for ETL processes. In the exceptional cases where you may get to choose, we'll look at three technologies to consider while covering an overview of each with benefits and drawbacks of using each.

SQL Server Integration Services for ETL

1. SQL Server Integration Services (SSIS). In SQL Server environments, SSIS is one of the most popular ETL tools, as it features tools that you can use for APIs, flat files, data transforms, cross-server communication, etc. SSIS also allows you to use T-SQL either directly, or through calls to stored procedures and features the ability to add script tasks using the .NET library.

Benefits to using SSIS:

- SSIS is popular among many organizations which use SQL Server and run ETL processes, making it easy to find talent familiar with SSIS.

- SSIS comes with a visual interface that allows people to see the flow of data or the process of tasks. For some tasks, this can simplify process troubleshooting.

- SSIS uses many other tools like .NET and T-SQL, making it work for most ETL tasks depending on its version (some features may be limited depending on what you're doing).

- From data flows to FTP tasks to data transformations, SSIS comes with many ETL tools built into it where a developer can grab the task, include it in the ETL flow, and adjust the properties of the task.

- Since SSIS offers the ability to use script tasks, you can use script tasks to obtain and retain file metadata.

- One of the biggest drawbacks to SSIS is the hammer and nail problem; it tends to be overused when it's not necessary. I've seen numerous SSIS packages that could have been one stored procedure, or a PowerShell script that would have taken less than a second or two to resolve when an issue arose. Provided that a package is validated in terms of its need, this can be avoided.

- Depending on how an SSIS package is created and set up, change may require significant work. This can generally be avoided by using configuration files or tables where change may be required in the future.

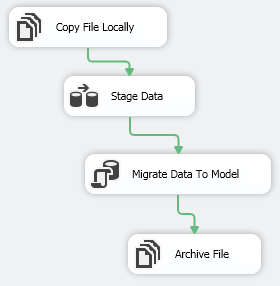

A simple example of an ETL flow using SSIS is shown in the below image - a flat file is copied with a file system task, then imported into a table using a data flow task, and the data are transformed and migrated within an execute sql task, and finally the file is archived using another file system task.

As a tool, SSIS is used in a multitude of ETL environments from energy to finance to health care. Due to its relative ease of use along with its visual appeal (you don't need to write code to use SSIS, unless you wish), it's easy to find ETL talent with SSIS experience.

T-SQL Code for ETL

2. T-SQL. T-SQL is the native SQL language for SQL Server and can be used for some ETL tasks, such as bulk inserts of flat files, data transformations, and data loads for cubes, reports, or to other SQL Servers. While I'm not a fan of environments which use replication for ETL (it's a high availability solution, not a replication solution), some companies use replication, which uses T-SQL underneath the architecture.

Benefits to using T-SQL:

- T-SQL is the language for SQL Server developers and database administrators, which means if your SQL Server environment has a few ETL processes it needs to create, you may be able to use the existing skills of these positions.

- For transforms or loads that involve large sets with filters and joins, T-SQL offers a high performing language. While I use Entity Framework in many of my custom applications, any time I must use aggregates with a lot of filters, joins, cross-applies, self-joins, or query hints and additions (like MAXDOP), I switch to T-SQL because I can almost always out-perform entity framework with these custom T-SQL queries.

- T-SQL's best use is for sets. Some ETL requires object-based processes, such as iterating over each row, storing the row in an object, and transforming that object. T-SQL does not perform well with these situations.

- You may face significant limitations to cleansing data before inserting data. With bulk insert, as an example, you can dispose of bad data by skipping lines, but this "bad data" may be data you want for records.

- While developers can also use extended stored procedures for ETL tasks, they should consider permissions issues in these cases. In many companies, developers have lower levels of access, or more restricted levels of access.

In the below code sample, we insert the ExampleFile comma delimited file to the ExampleTable. Bulk insert is one tool in T-SQL, which can extract data from flat files such as delimited files (comma, bars, etc.), format files, and many custom files (though T-SQL may be required to parse). In addition, many transformations occur within T-SQL post import and T-SQL can be used to migrate data to other servers.

BULK INSERT ExampleTable FROM 'C:\ETLFiles\ExampleFile.csv' WITH ( FIELDTERMINATOR = ',' ,ROWTERMINATOR = '0x0a' -- Skip the header row: ,FIRSTROW=2 -- Logs errors during bulk insert ,ERRORFILE='C:\ETLFiles\20150101\logfile.log' )

Using T-SQL in every step of an ETL process depends on the architecture; I prefer to use it most in the transform step, especially when the transform applies to a set of data.

C# Code for ETL

3. C#. In addition to use within SSIS, C# is useful in larger ETL environments, especially when importing custom data. C# is the most comprehensive tool on this list because you can create ETL applications, web applications, windows applications, etc. In addition, you can compile a DLL and use PowerShell instead, which in syntax is very similar to C#.

Benefits to using C#:

- Offers the ability to enhance performance of any ETL process, as well as put an interface in front of it to allow people with less experience to do some of the work.

- You can take a C# project that handles multiple types of imports, transforms, and loads and add it to an application project. Many of my ETL classes in C# have never been re-written; they're simply added to what I need because they'll handle data types that I need or transforms that I need.

- Since it's an object-oriented language, it excels at object-level tasks. Iterating over a data set row-by-row can be done quickly with C# and with a low impact to the system, if built correct. In addition, Entity Framework performs well for most database queries (see the exception list above this).

Drawbacks to using C#:

- The major drawback to using C# in an ETL environment is that some ETL developers do not have experience with C#, making it difficult to find talent.

- Over development wastes time and in some situations, you may only need to import one type of file - like CSV files. If you have other tools to do this, building a custom tool that saves no time costs money.

In situations where we may be dealing with a large custom data object, such as geometric data combined with resource information (i.e.: lithium presence within a geographic region), a custom C# ETL application may help us parse the data into meaningful pieces prior to import so that we are able to skip a step. In the simple example below, I use four total pieces of data per line, but these data are delineated differently - the first part is delimited by commas while the latter part is delimited by vertical bars. Imagine that each line of data, or each call to an API, returns different formats. We could import each line by using T-SQL and try to parse the data using T-SQL (which in this simple example would be easy), but as we have larger custom data, it would cost more to use T-SQL. The T-SQL shows how these data would be stored within two tables through a procedure call, and the C# shows a model that we use, along with the iteration over each line in the file along with the output of what a procedure call could look like (create a new console app in Visual Studio just to see the test output).

CREATE TABLE tblTests( TestID INT IDENTITY(1,1) PRIMARY KEY, TestNumber INT, TestDate SMALLDATETIME ) CREATE TABLE tblTestsDegree( DegreeID INT IDENTITY(1,1), TestID INT, Direction VARCHAR(2), DirectionDegree SMALLINT, CONSTRAINT FK_tblTestsFOREIGN KEY (TestID) REFERENCES tblTests (TestID) ) -- Example procedure: CREATE PROCEDURE insDirectionalPass @TestNumber INT, @TestDate SMALLDATETIME, @Direction VARCHAR(2), @DirectionDegree SMALLINT AS BEGIN DECLARE @link INT INSERT INTO tblTests (TestNumber,TestDate) VALUES (@TestNumber,@TestDate) SET @link = SCOPE_IDENTITY() INSERT INTO tblTestsDegree (TestID,Direction,DirectionDegree) VALUES (@link,@direction,@directiondegree) END

using System;

using System.IO;

namespace DemoApp

{

public class CustomFileExampleModel

{

public int TestNumber { get; set; }

public DateTime TestDate { get; set; }

public string Direction { get; set; }

public int DirectionDegree { get; set; }

}

class Program

{

static void Main(string[] args)

{

/* Data from the file C:\ETLFiles\demo.txt for this example:

fileread,1,20,2015-01-01,SE|23

fileread,7,30,2015-02-01,NE|22

fileread,12,40,2015-03-01,SW|24

fileread,3,50,2015-04-01,NE|28

fileread,6,60,2015-05-01,NW|20

*/

foreach (string singleLine in File.ReadLines(@"C:\ETLFiles\demo.txt"))

{

CustomFileExampleModel saveobject = new CustomFileExampleModel();

saveobject.TestNumber = Convert.ToInt16(singleLine.Split(',')[1]);

saveobject.TestDate = Convert.ToDateTime(singleLine.Split(',')[3]);

string[] strArrayExampleFurther = singleLine.Split(',')[4].Split('|');

saveobject.Direction = strArrayExampleFurther[0];

saveobject.DirectionDegree = Convert.ToInt16(strArrayExampleFurther[1]);

/// Output example of the procedure call:

Console.WriteLine("EXECUTE insDirectionalPass {0},'{1}','{2}',{3}", saveobject.TestNumber, saveobject.TestDate, saveobject.Direction, saveobject.DirectionDegree);

}

Console.ReadLine();

}

}

}

If I am building a custom application for ETL, in the least, my solution will have:

- Models project, for modeling the data prior to import to allow for direct inserting of data to appropriate tables and possible cleaning or modeling of data prior to importing data.

- Data layer project, for interactions with the database.

- Functions projects, for reusable functions that are requires, such as validating data (often called the common library).

- Extract project, for connections to external data sources, like files or APIs

- Logging project, for logging errors or other important points during the import.

This means that in one solution, there is at least five projects and we can see that this could be costly as far as time, which is why I wouldn't use a tool like C# for small ETL projects.

In the above example, I only demonstrate how I would create a model to hold the data prior to import - the model would be a separate project with all the models required (there may be multiple models), along with the functions to parse the file's data in a larger solution. Also, in steps where I load data into models, the model would perform the validation - such as checking if a string is null, or if a number is between a range, etc.

C# is one tool used commonly used in environments where large and custom data must be imported, such as specialized health care data or financial data.

Next Steps

- For most SQL Server environments, SSIS offers one of the better tools for ETL since you can find SSIS talent easier, it covers most ETL cases, and incorporates both T-SQL and C#.

- In this tip, I use SSIS to loop through multiple flat files and insert them to an existing table. This is an example of SSIS's flexibility and can be used on flat files with the same data schema.

- For environments that must consider performance, speed, and customization, I would suggest using C# as the ETL tool.

About the author

Tim Smith works as a DBA and developer and also teaches Automating ETL on Udemy.

Tim Smith works as a DBA and developer and also teaches Automating ETL on Udemy.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips