Problem

In a previous article, I ran a SQL script against a Fabric Warehouse 100 times without needing to click ‘Execute’ each time. A WHILE loop could work, but Query Insights treats it as a single execution. While using GO was an option, I wanted a different approach because I’m always trying to expand my skill set. I need a scalable way to run scripts for performance testing.

Solution

I wrote a custom Python script with the pyodbc package. It wasn’t time-consuming to write, and it has a lot of room for growth. In this article, I’ll present the script and walk you through its execution. Who knows, maybe you’ll improve the script in your spare time and post your version in the comments below.

Why Choose Python

Let me clarify that I am not a full-stack developer; however, I worked as a C# and ASP.NET developer years ago, mainly focusing on the database layer. I also had the chance to work with Python on a web application, though I wouldn’t consider myself an expert. With that in mind, let’s explore why I chose Python for this task.

As stated in the problem above, I need to execute a query 100 times without clicking the Execute button 100 times. I envisioned a tool similar to SQLQueryStress or OStress, but simpler. First, I considered the GO command. Next, I turned to the WHILE loop, which does work in a Warehouse; the DMV queryinsights.exec_requests_history sees the loop as one query. I initially considered using the Microsoft Fabric Portal to create something in a notebook, but I’m still not the biggest fan of it. Then I turned to an old friend, Python.

Python is a general-purpose programming language used for various tasks, including data science, scripting, and web development. There’s a tremendous developer-led community surrounding it with packages for nearly any task and third-party tools.

Why choose Python for a task like this? Easy, Python is one of the simplest object-oriented languages, especially for quick scripts, which is exactly what I wanted here. As I tend to do, I kept adding to it until I decided that enough was enough. I didn’t need to recreate SQLQueryStress for a Fabric Warehouse. I’m sure you could have built something in C++ or C#, but it would have taken me longer.

Getting Started

Now that I’ve addressed why I used Python, let’s focus on how to use it. Below are the three primary components I installed. You can substitute any editor if you’re not a fan of Visual Studio Code, but it’s free and more lightweight than Visual Studio, which makes it a fan favorite.

Once you have Python installed and a terminal open (I’m using the one in VS Code), we need to install a module with the pip command. After installing the latest version of Python, the installer should have installed pip. You can check this by running the following command in the terminal.

pip --versionResults:

pip 24.3.1 from C:\Installation\Python_312\Lib\site-packages\pip (python 3.12)With pip installed, next we need to install pyodbc. The documentation refers to pyodbc as an open source Python module that makes accessing ODBC databases simple, and who doesn’t like simple? Microsoft released a new driver called the Python Driver for SQL Server, also known as mssql-python. I didn’t use the new one here because I only learned about it while writing. The command below installs pyodbc.

python -m pip install pyodbcFabric Warehouse

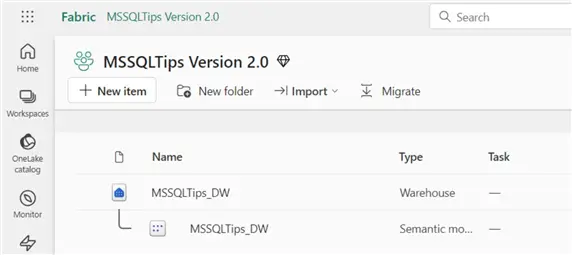

We’ll run the script against a Fabric Warehouse, which is optimized for storing and processing structured and even semi-structured data, such as JSON. Fabric stores the underlying data in the delta-parquet format, similar to a Fabric Lakehouse. Unlike lakehouses, warehouses support multi-table transactions and strongly typed data at the schema level, providing traditional structured tables. Its compute uses the distributed Polaris SQL engine, similar to Azure Synapse.

I’ll assume you already have a Fabric Warehouse; if not, set one up as shown below.

Setting the Stage

The entire script is provided below. Before using it, you need to modify the connection string to your Warehouse. Also, ensure that your SQL files (.sql) are located in the same directory as the .py file.

# Import the required modules

import pyodbc

import os

# Build the connection string

conn_str = (

"Driver={ODBC Driver 18 for SQL Server};"

"Server=tcp:youwarehouseendpoint.datawarehouse.fabric.microsoft.com;";"

"Database=MSSQLTips_DW;"

"Authentication=ActiveDirectoryInteractive;"

"UID=EntraId;"

"Encrypt=yes;"

"TrustServerCertificate=no;"

)

# Connect

cursor = None

try:

conn = pyodbc.connect(conn_str, timeout=30)

cursor = conn.cursor()

except pyodbc.Error as e:

print(f"Database connection error: {e}")

exit(1)

print("Connected. Enter 'q' to quit.\n")

# Get current script directory

script_dir = os.path.dirname(os.path.abspath(__file__))

# The next try block is where most of the magic happens.

try:

while True:

filename = input(".sql file name or 'q' to quit: ").strip()

if filename.lower() == "q":

break

sql_path = os.path.join(script_dir, filename)

if not os.path.isfile(sql_path):

print("File not found. Try again.\n")

continue

with open(sql_path, "r") as f:

query_str = f.read()

# View the query before executing

print("\nQuery Preview: \n" + "-"*60)

print(query_str)

print("-"*60)

# Ensure the user wants to execute the query

confirm = input("Do you want to execute this query (y/n): ").strip().lower()

if confirm != "y":

print("Skipping execution. Enter a different query.\n")

continue

try:

iterations = int(input("How many times to execute? "))

except ValueError:

print("Invalid number.\n")

continue

for i in range(iterations):

try:

cursor.execute(query_str)

cursor.fetchall()

print(f"\rQuery {i+1}/{iterations} complete", end="", flush=True)

except (KeyboardInterrupt, SystemError) as e:

print(f"\n[!] Execution interrupted during iteration {i+1}. Returning to file prompt.\n")

break

except pyodbc.Error as e:

print(f"\nQuery execution failed: {e}")

break

else:

print('\nAll executions complete!\n')

finally:

if cursor:

cursor.close()

if 'conn' in locals():

conn.close()

print("Connection closed. Goodbye.")Connection String

Above, I’m building my connection string and storing it in a variable called conn_str. If desired, you could ask the user for input on the server and database to build the string dynamically. Additionally, you could create an environment file, but I didn’t want to add more than a single file.

conn_str = (

"Driver={ODBC Driver 18 for SQL Server};"

"Server=tcp:youwarehouseendpoint.datawarehouse.fabric.microsoft.com;"

"Database=MSSQLTips_DW;"

"Authentication=ActiveDirectoryInteractive;"

"Encrypt=yes;"

"TrustServerCertificate=no;"

)Below is a screenshot of the final VS Code setup. I have one SQL file, but you can use as many as you want.

Running the Script

After you save the script as a .py file, run it by executing the following command in the terminal.

PS python warehouse_tester.pyNext, the script prompts you for the file name. Ensure you include the .sql file as I’ve illustrated below.

PS C:\code\repos\mssqltips\T-SQL\Python> python warehouse_tester.py

Connected. Enter 'q' to quit.

.sql file name or 'q' to quit: Inline_CASE.sqlThe terminal presents a preview of the script that will run.

Query Preview:

------------------------------------------------------------

SELECT TOP 100000

t1.Id,

t2.Id,

CASE

WHEN t1.RandomNumber1 = 1

AND t2.RandomNumber1 = 2

AND t1.RandomDate1 >= '1980-12-01' THEN

'Important Message 1'

WHEN t1.RandomNumber2 > 90

AND t2.RandomNumber1 > 5

AND t1.RandomDate1 <= '1992-12-31' THEN

'Important Message 2'

WHEN t1.RandomNumber2 = 2

AND t2.RandomNumber1 > 90

AND t1.RandomDate1 <= '1991-01-02' THEN

'Important Message 3'

ELSE

'No Message for you!'

END AS DailyMessage

FROM dbo.BigTable1 t1

INNER JOIN dbo.BigTable2 t2

ON t1.Id = t2.Id

OPTION (LABEL = 'Inline_CASE100');

------------------------------------------------------------

Do you want to execute this query (y/n):Next, indicate the number of times to execute the script and press Enter.

How many times to execute? 100

Query 100/100 completeAfter a few seconds, the execution finishes. We could run another, but I’m going to enter ‘q’ for quit.

.sql file name or 'q' to quit: q

Connection closed. Goodbye.

PS C:\code\repos\mssqltips\T-SQL\Python> If you want to cancel execution, you can use the keyboard interrupt by pressing CTRL+C, which stops any further executions after the current one finishes. Please note that this doesn’t terminate the current session. This is helpful if you entered a large number, such as 100,000. Another way to prevent a high number of executions is to set an upper limit to the iterations variable.

Wrapping Up

My goal was to share a solution for running SQL scripts at scale in a Fabric Warehouse. I hope you can improve what I’ve put together. Feel free to use what I’ve provided as a starting point and build on it. Use the comments to share the improvements you would make; I might even include them myself.

Next Steps

- Hristo Hristov wrote an amazing article titled “Python for Data Professionals,” where he details how you can use Python libraries for data wrangling, analysis, and visualization.

- Are you running into problems connecting Python to your SQL Server? Check out Levi Masonde’s article “Python Connect to SQL Server with Code Examples” to get unstuck.

- Even though I didn’t use the latest Microsoft-released Python driver, Sebastiao Pereira provides step-by-step instructions for getting set up in his article, “Microsoft Python Driver for SQL Server”.