Problem

As the need and use of data grows within any organization, there is a corresponding rising issue for the need of data quality validation. Most organizations have large stores of data but most of it are not managed efficiently in terms of data quality assurances, thus leading to inaccurate insights for the business which in turn leads to distrust in the data.

Organizations have now, more than ever, realized the importance of an efficient data quality process as part of their Business Intelligence and Analytics processes. The issue is, how can they implement data quality for their data? For larger and more data-centric organizations, they might be using pre-built data management and validation tools like Microsoft Purview or other Master Data Management tools like Informatica, Talend, SAP, Talend, and Stibo Systems. But for those organizations that can not commit to subscribing to pre-built options, or they are operating primarily on On-Premises environments, they might want to build one themselves, that’s where this article comes in.

Solution

In this article, I will be describing how to implement data quality validation with SQL in a simple step by step approach that you can adapt to your business data.

In reality, you might already have your data tables and you just need to implement data quality checks. However, to ensure I accommodate for those who don’t have an idea of how this works or those who needs to see sample scenarios on how to implement this capability, I will demonstrate this end-to-end in the following steps.

It is important to note that you can run the SQL scripts I am providing in this post in any T-SQL environment including SQL Server, Azure SQL, or Microsoft Fabric SQL.

STEP 1: Create sample operational tables and insert sample rows (Includes intentional data quality issues)

Here I have created three sample tables as seen in the SQL script below.

-- Create a Products Table

CREATE TABLE dbo.Products (

ProductKey INT NULL,

Product NVARCHAR(200) NULL,

Standard_Cost DECIMAL(18,2) NULL,

Color NVARCHAR(50) NULL,

Subcategory NVARCHAR(100) NULL,

Category NVARCHAR(100) NULL

;

-- Create a Customers Table

CREATE TABLE dbo.Customers (

CustomerID INT NULL,

CustomerName NVARCHAR(200) NULL,

Email NVARCHAR(200) NULL,

Country NVARCHAR(100) NULL

);

-- Create a Orders Table

CREATE TABLE dbo.Orders (

OrderID INT NULL,

CustomerID INT NULL,

ProductKey INT NULL,

OrderDate DATE NULL,

Quantity INT NULL

); For each table, I will insert some sample data, with deliberate errors to use as a demo for data quality later.

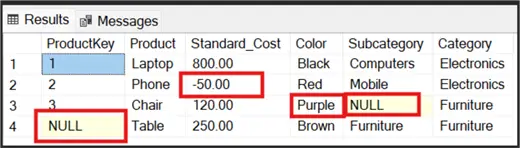

-- Insert sample rows in Products table created

INSERT INTO dbo.Products (ProductKey, Product, Standard_Cost, Color, Subcategory, Category) VALUES

(1, 'Laptop', 800.00, 'Black', 'Computers', 'Electronics'),

(2, 'Phone', -50.00, 'Red', 'Mobile', 'Electronics'),

(3, 'Chair', 120.00, 'Purple', NULL, 'Furniture'),

(NULL, 'Table', 250.00, 'Brown', 'Furniture', 'Furniture');

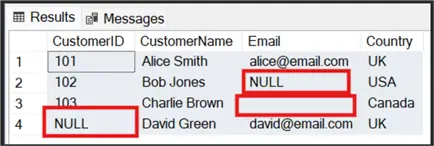

INSERT INTO dbo.Customers (CustomerID, CustomerName, Email, Country) VALUES

(101, 'Alice Smith', 'alice@email.com', 'UK'),

(102, 'Bob Jones', NULL, 'USA'),

(103, 'Charlie Brown', '', 'Canada'),

(NULL, 'David Green', 'david@email.com', 'UK');

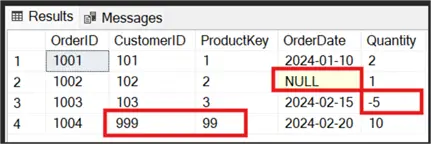

INSERT INTO dbo.Orders (OrderID, CustomerID, ProductKey, OrderDate, Quantity) VALUES

(1001, 101, 1, '2024-01-10', 2),

(1002, 102, 2, NULL, 1),

(1003, 103, 3, '2024-02-15', -5),

(1004, 999, 99, '2024-02-20', 10); For the Products table we have these issues:

- row 2 has a negative Standard Cost -> fail Range

- row 3 has invalid colour + missing subcategory

- row 4 has missing ProductKey -> fail NotNull

For the Customers table we have these issues:

- row 2 has missing email -> fail NotNull

- row 3 has empty email -> treat as present unless you add explicit check

- row 4 has missing CustomerID

For the Orders table we have these issues:

- row 2 has missing OrderDate -> fail NotNull

- row 3 has negative quantity -> fail Range

- row 4 has references missing Customer/Product -> fail Reference

STEP 2: Create metadata tables (rules) (Validation Rules includes: RecordKeyColumn to identify the record in the table)

In this step we will create a metadata table which will hold business validation rules. An example of how to create this is as seen in the SQL script below.

CREATE TABLE dbo.ValidationRules (

RuleID INT PRIMARY KEY,

RuleName NVARCHAR(200) NOT NULL,

TableName NVARCHAR(128) NOT NULL,

ColumnName NVARCHAR(128) NOT NULL,

RecordKeyColumn NVARCHAR(128) NULL,

RuleType NVARCHAR(50) NOT NULL,

RuleValue NVARCHAR(200) NULL,

Severity NVARCHAR(20) NOT NULL DEFAULT('Error'),

IsActive BIT NOT NULL DEFAULT(1)

); From the SQL code above, among the other columns created in the table, I will like to point out three columns:

- RecordKeyColumn : Will represent column to use as RecordID in ValidationResults (e.g. ProductKey),

- RuleType : NotNull | Range | Lookup | Reference

- RuleValue : e.g. “0-99999” or “Red,Blue,Green” or “Customers.CustomerID”

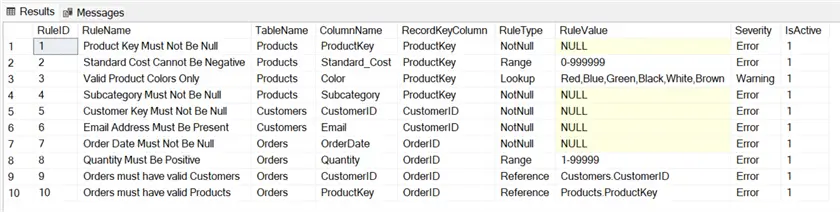

Next, we will need to insert some values into the dbo.ValidationRules table. Since we have three tables to include in our validation engine, we need to ensure the rules are sufficient and applicable to the tables as seen in the SQL script below.

INSERT INTO dbo.ValidationRules (RuleID, RuleName, TableName, ColumnName, RecordKeyColumn, RuleType, RuleValue, Severity, IsActive)

VALUES

(1, 'Product Key Must Not Be Null', 'Products', 'ProductKey', 'ProductKey', 'NotNull', NULL, 'Error', 1),

(2, 'Standard Cost Cannot Be Negative', 'Products', 'Standard_Cost', 'ProductKey', 'Range', '0-999999', 'Error', 1),

(3, 'Valid Product Colors Only', 'Products', 'Color', 'ProductKey', 'Lookup', 'Red,Blue,Green,Black,White,Brown', 'Warning', 1),

(4, 'Subcategory Must Not Be Null', 'Products', 'Subcategory', 'ProductKey', 'NotNull', NULL, 'Error', 1),

(5, 'Customer Key Must Not Be Null', 'Customers', 'CustomerID', 'CustomerID', 'NotNull', NULL, 'Error', 1),

(6, 'Email Address Must Be Present', 'Customers', 'Email', 'CustomerID', 'NotNull', NULL, 'Error', 1),

(7, 'Order Date Must Not Be Null', 'Orders', 'OrderDate', 'OrderID', 'NotNull', NULL, 'Error', 1),

(8, 'Quantity Must Be Positive', 'Orders', 'Quantity', 'OrderID', 'Range', '1-99999', 'Error', 1),

(9, 'Orders must have valid Customers', 'Orders', 'CustomerID', 'OrderID', 'Reference', 'Customers.CustomerID', 'Error', 1),

(10, 'Orders must have valid Products', 'Orders', 'ProductKey', 'OrderID', 'Reference', 'Products.ProductKey', 'Error', 1);The output of the above inserts into the dbo.ValidationRules table is as seen in the image below.

STEP 3: Create Validation Runs and Validation Results tables

To ensure the solution is history-aware and robust, we need to create two additional tables (ValidationRuns & ValidationResults) as seen in the SQL script below. In a nutshell, the ValidationRuns table holds details of the amount of validation runs that have been done, whereas the ValidationResults table holds details of each run failure.

CREATE TABLE dbo.ValidationRuns (

RunID INT IDENTITY(1,1) PRIMARY KEY,

RunDate DATETIME NOT NULL DEFAULT(GETDATE())

);

CREATE TABLE dbo.ValidationResults (

ResultID INT IDENTITY(1,1) PRIMARY KEY,

RunID INT NOT NULL,

RuleID INT NOT NULL,

TableName NVARCHAR(128) NOT NULL,

ColumnName NVARCHAR(128) NOT NULL,

RecordID NVARCHAR(200) NULL,

FailedValue NVARCHAR(200) NULL,

Severity NVARCHAR(20) NULL,

ValidationDate DATETIME NOT NULL DEFAULT(GETDATE())

); STEP 4: Create Table Weights for Enterprise Weighted DQS

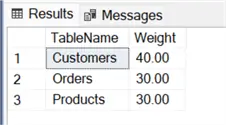

This is an optional step, you might consider adding this step for a more robust Data Validation insight. You should agree on these values with your business, but for the purpose of this post I have added the weights as follows:

- Product Table: 30

- Customers Table: 40

- Orders Table: 30.

The key point to note is that the total weights across all tables must add up to 100 ideally. The SQL script for the TableWeights is as seen in the script below.

CREATE TABLE dbo.TableWeights (

TableName NVARCHAR(128) PRIMARY KEY,

Weight DECIMAL(5,2) NOT NULL

); Next, we need to add the weights values to the table as seen in the script below.

INSERT INTO dbo.TableWeights (TableName, Weight) VALUES

('Products', 30.00),

('Customers', 40.00),

('Orders', 30.00); The output of the dbo.TableWeights table is as seen in the image below.

STEP 5: Create reusable stored procedure

We will now put together all we have been doing from Step 1 to Step 4 by creating a stored procedure that will perform the tasks in the earlier steps automatically based on the schedules of the execution of the stored procedure. The stored procedure will read each validation rule and apply them dynamically.

For the purpose of this demo, I have only created the procedure to support NotNull, Range, Lookup, and Reference types of Validation Rules. There are other types of validation rules which I will describe in another post.

So, in a nutshell, this Stored Procedure will write failures into the dbo.ValidationResults table with a RunID for history purposes. The way I have written this stored procedure is that it will return multiple result sets with details and summaries (this is optional). The SQL script below shows the stored procedure code.

CREATE OR ALTER PROCEDURE dbo.RunValidations

AS

BEGIN

SET NOCOUNT ON;

DECLARE @RunID INT;

-- Create a new run record

INSERT INTO dbo.ValidationRuns DEFAULT VALUES;

SET @RunID = SCOPE_IDENTITY();

DECLARE

@RuleID INT,

@RuleName NVARCHAR(200),

@TableName NVARCHAR(128),

@ColumnName NVARCHAR(128),

@RecordKeyColumn NVARCHAR(128),

@RuleType NVARCHAR(50),

@RuleValue NVARCHAR(200),

@Severity NVARCHAR(20),

@SQL NVARCHAR(MAX),

@MinVal NVARCHAR(50),

@MaxVal NVARCHAR(50),

@RefTable NVARCHAR(128),

@RefColumn NVARCHAR(128),

@InList NVARCHAR(MAX);

DECLARE RuleCursor CURSOR LOCAL FAST_FORWARD FOR

SELECT RuleID, RuleName, TableName, ColumnName, RecordKeyColumn, RuleType, RuleValue, Severity

FROM dbo.ValidationRules

WHERE IsActive = 1

ORDER BY RuleID;

OPEN RuleCursor;

FETCH NEXT FROM RuleCursor INTO @RuleID, @RuleName, @TableName, @ColumnName, @RecordKeyColumn, @RuleType, @RuleValue, @Severity;

WHILE @@FETCH_STATUS = 0

BEGIN

SET @SQL = N'';

-- default RecordKey falls back to validated column if not supplied

IF @RecordKeyColumn IS NULL OR LEN(@RecordKeyColumn) = 0

SET @RecordKeyColumn = @ColumnName;

IF @RuleType = 'NotNull'

BEGIN

SET @SQL = N'

INSERT INTO dbo.ValidationResults (RunID, RuleID, TableName, ColumnName, RecordID, FailedValue, Severity)

SELECT ' + CAST(@RunID AS NVARCHAR(10)) + N', ' + CAST(@RuleID AS NVARCHAR(10)) + N', N''' + @TableName + N''', N''' + @ColumnName + N''',

COALESCE(CAST(' + QUOTENAME(@RecordKeyColumn) + N' AS NVARCHAR(200)), N''N/A''),

N''NULL'', N''' + @Severity + N'''' + N'

FROM ' + QUOTENAME(@TableName) + N'

WHERE ' + QUOTENAME(@ColumnName) + N' IS NULL;';

END

ELSE IF @RuleType = 'Range'

BEGIN

-- parse min-max from RuleValue (format expected: min-max)

SET @MinVal = PARSENAME(REPLACE(@RuleValue, '-', '.'), 2);

SET @MaxVal = PARSENAME(REPLACE(@RuleValue, '-', '.'), 1);

SET @SQL = N'

INSERT INTO dbo.ValidationResults (RunID, RuleID, TableName, ColumnName, RecordID, FailedValue, Severity)

SELECT ' + CAST(@RunID AS NVARCHAR(10)) + N', ' + CAST(@RuleID AS NVARCHAR(10)) + N', N''' + @TableName + N''', N''' + @ColumnName + N''',

COALESCE(CAST(' + QUOTENAME(@RecordKeyColumn) + N' AS NVARCHAR(200)), N''N/A''),

CAST(' + QUOTENAME(@ColumnName) + N' AS NVARCHAR(200)), N''' + @Severity + N'''' + N'

FROM ' + QUOTENAME(@TableName) + N'

WHERE TRY_CAST(' + QUOTENAME(@ColumnName) + N' AS FLOAT) < ' + @MinVal + N' OR TRY_CAST(' + QUOTENAME(@ColumnName) + N' AS FLOAT) > ' + @MaxVal + N';';

END

ELSE IF @RuleType = 'Lookup'

BEGIN

-- Build quoted literal list for IN clause

-- e.g. RuleValue = 'Red,Blue' -> '''Red'',''Blue''' in the dynamic SQL

SET @InList = N'''' + REPLACE(@RuleValue, ',', ''',''') + N'''';

SET @SQL = N'

INSERT INTO dbo.ValidationResults (RunID, RuleID, TableName, ColumnName, RecordID, FailedValue, Severity)

SELECT ' + CAST(@RunID AS NVARCHAR(10)) + N', ' + CAST(@RuleID AS NVARCHAR(10)) + N', N''' + @TableName + N''', N''' + @ColumnName + N''',

COALESCE(CAST(' + QUOTENAME(@RecordKeyColumn) + N' AS NVARCHAR(200)), N''N/A''),

CAST(' + QUOTENAME(@ColumnName) + N' AS NVARCHAR(200)), N''' + @Severity + N'''' + N'

FROM ' + QUOTENAME(@TableName) + N'

WHERE ' + QUOTENAME(@ColumnName) + N' IS NULL OR ' + QUOTENAME(@ColumnName) + N' NOT IN (' + @InList + N');';

END

ELSE IF @RuleType = 'Reference'

BEGIN

-- RuleValue expected as ReferencedTable.ReferencedColumn

SET @RefTable = PARSENAME(@RuleValue, 2);

SET @RefColumn = PARSENAME(@RuleValue, 1);

SET @SQL = N'

INSERT INTO dbo.ValidationResults (RunID, RuleID, TableName, ColumnName, RecordID, FailedValue, Severity)

SELECT ' + CAST(@RunID AS NVARCHAR(10)) + N', ' + CAST(@RuleID AS NVARCHAR(10)) + N', N''' + @TableName + N''', N''' + @ColumnName + N''',

COALESCE(CAST(' + QUOTENAME(@RecordKeyColumn) + N' AS NVARCHAR(200)), N''N/A''),

CAST(' + QUOTENAME(@ColumnName) + N' AS NVARCHAR(200)), N''' + @Severity + N'''' + N'

FROM ' + QUOTENAME(@TableName) + N' T

WHERE ' + QUOTENAME(@ColumnName) + N' IS NOT NULL

AND NOT EXISTS (

SELECT 1 FROM ' + QUOTENAME(@RefTable) + N' R WHERE R.' + QUOTENAME(@RefColumn) + N' = T.' + QUOTENAME(@ColumnName) + N'

);';

END

IF LEN(@SQL) > 0

BEGIN

-- Execute this rule's SQL

EXEC sp_executesql @SQL;

END

FETCH NEXT FROM RuleCursor INTO @RuleID, @RuleName, @TableName, @ColumnName, @RecordKeyColumn, @RuleType, @RuleValue, @Severity;

END

CLOSE RuleCursor;

DEALLOCATE RuleCursor;

-- Summaries for this run: per-rule counts, per-table DQS, weighted DQS

-- 1) Per-rule summary for the run

SELECT

V.RuleID,

V.RuleName,

V.TableName,

V.Severity,

COUNT(*) AS FailedCount

FROM dbo.ValidationResults R

INNER JOIN dbo.ValidationRules V ON R.RuleID = V.RuleID

WHERE R.RunID = @RunID

GROUP BY V.RuleID, V.RuleName, V.TableName, V.Severity

ORDER BY FailedCount DESC;

-- 2) Per-table Data Quality Score (DQS)

-- We will iterate tables discovered in ValidationRules (active)

DECLARE @Tbl NVARCHAR(128), @Total INT, @Failed INT;

CREATE TABLE #TableScores (

TableName NVARCHAR(128) PRIMARY KEY,

FailedRecords INT,

TotalRecords INT,

DataQualityScore DECIMAL(5,2)

);

DECLARE TableCursor CURSOR LOCAL FAST_FORWARD FOR

SELECT DISTINCT TableName FROM dbo.ValidationRules WHERE IsActive = 1;

OPEN TableCursor;

FETCH NEXT FROM TableCursor INTO @Tbl;

WHILE @@FETCH_STATUS = 0

BEGIN

SET @Total = 0; SET @Failed = 0;

-- get total rows for the table dynamically

DECLARE @cntSQL NVARCHAR(MAX) = N'SELECT @cnt = COUNT(*) FROM ' + QUOTENAME(@Tbl);

EXEC sp_executesql @cntSQL, N'@cnt INT OUTPUT', @cnt = @Total OUTPUT;

-- count distinct failed record ids for this run/table

SELECT @Failed = COUNT(DISTINCT RecordID) FROM dbo.ValidationResults WHERE RunID = @RunID AND TableName = @Tbl;

INSERT INTO #TableScores (TableName, FailedRecords, TotalRecords, DataQualityScore)

VALUES (

@Tbl,

ISNULL(@Failed, 0),

ISNULL(@Total, 0),

CASE WHEN ISNULL(@Total, 0) = 0 THEN 100.00 ELSE CAST((1.0 - (CAST(ISNULL(@Failed, 0) AS FLOAT) / CAST(@Total AS FLOAT))) * 100.0 AS DECIMAL(5,2)) END

);

FETCH NEXT FROM TableCursor INTO @Tbl;

END

CLOSE TableCursor;

DEALLOCATE TableCursor;

-- Return per-table scores

SELECT TS.TableName, TS.FailedRecords, TS.TotalRecords, TS.DataQualityScore

FROM #TableScores TS

ORDER BY TS.TableName;

-- Join with table weights to compute weighted score and overall DQS

SELECT

TS.TableName,

TS.DataQualityScore,

TW.Weight,

CAST((TS.DataQualityScore * TW.Weight) / 100.0 AS DECIMAL(6,2)) AS WeightedScore

FROM #TableScores TS

LEFT JOIN dbo.TableWeights TW ON TS.TableName = TW.TableName;

SELECT CAST(SUM((TS.DataQualityScore * ISNULL(TW.Weight, 0)) / 100.0) AS DECIMAL(6,2)) AS OverallDataQualityScore

FROM #TableScores TS

LEFT JOIN dbo.TableWeights TW ON TS.TableName = TW.TableName;

-- Optional: return detailed validation results for this run

SELECT * FROM dbo.ValidationResults WHERE RunID = @RunID ORDER BY TableName, RuleID, ResultID;

END;

GOThe above Store Procedure might be a bit complex, thus you might want to simplify it for clarity. In that case, follow these recommendations below:

- Try to avoid multiple cursors

- Consider the use of INSERT … SELECT wherever possible.

- You can consider to keep only one cursor or outrightly removing by processing rules in a set-based way.

- You could consider simplifying dynamic SQL

- You can try to use only dynamic SQL for table/column substitution.

- Consider keeping the rule templates small and readable.

- You could try to break code into smaller steps

- Consider separating run creation, validation inserts, as well as summary computation into logical blocks. Add comments where possible.

- You might want to consider the use of descriptive variable names

- Try to avoid abbreviations like @tbl instead of @CurrentTable.

- Optionally, consider extracting per-rule SQL templates to a mapping table or simple CASE statement for readability where applicable.

STEP 6: Run the Stored Procedure

This stage represents how the whole process is executed in a business setting. The Stored Procedure would usually be set to run on a schedule (like a SQL Job or a Pipeline execution). Once it runs, the process scans the three tables we created with the data earlier and if any data quality rule we defined is violated, it will flag these in the dbo.ValidationResults table and also record the particular run in the dbo.ValidationRuns table.

The SQL code below is used to execute the Stored Procedure manually for the purpose of this blog post demo.

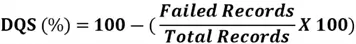

EXEC dbo.RunValidations; The output of this execution is as seen in the image below. The output includes a calculation for Data Quality Score (DQS). This is an optional metric that is useful for downstream reporting. Although you could do this with other reporting tools like Power BI DAX, but I choose to demonstrate how its done here. See the formula for the calculation below:

- Total Records – count of rows in the table.

- Failed Records – distinct rows that failed one or more rules.

- If a row fails multiple rules, its only counted once.

STEP 7: Inspect the results (use these queries)

Again, this is an optional step, but it is necessary to do these to inspect the details in the respective tables since the tables are what will be guiding your organization regarding data quality.

- To check runs:

SELECT * FROM dbo.ValidationRuns ORDER BY RunDate DESC;- To check the latest run id example (get it from ValidationRuns):

SELECT TOP 1 RunID FROM dbo.ValidationRuns ORDER BY RunDate DESC- To get detailed failures for the latest run:

DECLARE @LatestRun INT = (SELECT TOP 1 RunID FROM dbo.ValidationRuns ORDER BY RunDate DESC);

SELECT * FROM dbo.ValidationResults WHERE RunID = @LatestRun ORDER BY TableName, RuleID;Summary

In summary, we have seen in this demo how to build a data validation process for an organization from scratch using SQL. This is still basic since we were looking at a limited amount of data and tables. Where you might need to build this over a larger table sets or datasets, you will need to follow the same steps but additional rules might be required based on the business data.

It will be interesting to hear from you how you have built a data validation process or capability for your organization without out-of-box license based tools. It is also important to note that you will need to provide visibility of the results of your data validation process to the business to ensure its purpose is fulfilled. This can be done by connecting Power BI to the appropriate table, which in this case can easily be the dbo.ValidationResults table.

Next Steps

- Read more about sample SQL statements for data quality rules here.

- Read more about data quality rules from this Microsoft documentation here.

- You might want to read the Microsoft documentation on Overview of data quality in MS Purview Unified Catalog.

- Check out my other posts here.