By: Jeremy Kadlec | Updated: 2024-01-02 | Comments (2) | Related: > Integration Services Best Practices

Problem

Accurate data is imperative for an organization to conduct cost effective decision making, marketing promotions, mailings, database bloat impacting performance, storage and more use cases. Like everything else, change is constant for your data. There is a need to perform data profiling, cleansing and validation during data entry and on a regular basis to not waste resources. Unfortunately, cleansing and validating bad data is difficult with the native SQL Server toolset resulting in poor-quality data. T-SQL, Integration Services, Data Quality Services, PowerShell and .NET all include a framework to validate strings, but significant programming logic is necessary. These technologies will not completely validate, cleanse, match, enrich and de-duplicate data. How do we leverage the SQL Server tool set to have high-quality data in our databases?

Solution

Let’s walk through the SQL Server data quality process using these five steps to uncover the correct data quality management solution:

- SQL Server Data Import Options

- Poor Data Quality in Business is Expensive

- Correcting SQL Server Duplicates

- Resolving Out-of-Date SQL Server Data

- SQL Server Data Verification

SQL Server Data Import Options

SQL Server ships with a few technologies that can assist with improving the quality of your data. It is programming intensive and these technologies lack the verification and validation logic needed to deliver true data quality. These include:

- SQL Server Integration Services

- BIML

- SQL Server Data Quality Services

- T-SQL

- PowerShell

- .NET

- Power BI

SQL Server Professionals and some Data Stewards are familiar with SQL Server Integration Services (SSIS) as a means to perform real-time extraction, transformation and loading (ETL) operations. SSIS is generally the main tool used by SQL Server Professionals to execute ETL processes with interfaces to numerous database platforms, flat files, Excel, etc. SSIS also includes the ability to include T-SQL and .NET scripting logic all in a graphical interface. Although all organizations are different and each project has different requirements, SSIS is generally the tool selected when importing and exporting data via numerous data sources. To help automate the development of SSIS Packages, BIML can also be used to programmatically build SSIS Packages to include the control flow, data flow, connection managers and error handling.

Data Quality Services has shipped with SQL Server in the past. However, with the last few SQL Server releases there have been minimal updates to the products and domains.

The T-SQL language with the INSERT, INSERT…SELECT, BCP, BULK INSERT, OPENROWSET, etc. commands enable SQL Server Developers to perform many of the same tasks in SSIS. The same is true for PowerShell and .NET. There are libraries available that are able to extract, load and transform data, but all are programmatic. At times directly programming in T-SQL, PowerShell or .NET yields higher performing code, but comes at a high cost for development and overall maintenance.

Power BI also has a means to import and transform data within a report for presentation purposes, but this technique is generally beneficial for a single report and does not validate the accuracy of the data set.

The technology teams and data stewards need to work with a familiar tool to perform ETL operations while simultaneously cleansing the data.

Poor Data Quality in Business is Expensive

“Garbage in yields garbage out.” “You Reap What You Sow.” Regardless of the analogy, your decision making is only as good as the quality of your data. By some accounts, 25% of data is out of date 1 year after collected, making data validation a recurring process rather than a one-time event. Unfortunately, verifying data is very difficult with numerous internal and external applications that interact with customers, suppliers and partners. Data does not necessarily enter the organization from a single point of entry and data gets out of sync between systems when customers move, change contact information, make career advancements, have family changes, etc. and all impact the accuracy of your customer data.

Beyond the tangible costs associated with poor data related to marketing promotions, mailings and more, inaccurate data is results in time and budget waste from the significant time to process the error related data and/or promotions never reaching the intended audience. On the technology side, inaccurate data bloats databases impeding performance, extending backup times and maintenance windows which ultimately impacts the operating hours for the organization. Organizations need a means to achieve good data quality with data completeness and data consistency.

Correcting SQL Server Duplicates

When trying to validate data, there is nothing more frustrating than trying to determine if “John Smith” is the same person or if they have been entered into the system 10 different ways. What is being referred to here is not literally string matches where we can write T-SQL code to compare “John Smith” vs. “John Smith”, it is a matter of determining if multiple people are the same person based on various moves, contact information, business rules and more. Often times employees are afraid to delete data records to remove potential duplicate records, but this comes as an expense to the organization when mailings are returned and time is wasted contacting the wrong customer. What is really needed is a means to validate and consolidate duplicate customer records with confidence.

Resolving Out-of-Date SQL Server Data

Change is constant; this is the case with your data as well. Old and stale data can be considered no longer relevant, but is it? Historical customers already familiar with your brand and the quality of your product may have fallen through the cracks based on moves and life changes. If this out-of-date data is the roadblock to re-engaging with these prior customers, cleansing and enhancing the data can have a direct impact on the bottom line for the organization. Turn your data into a goldmine as a means to re-engage and retain your customers.

Organizations need a simple means to regularly validate their data which traditionally is very time consuming and resource intensive. How can organizations make this a competitive advantage?

SQL Server Data Verification

Data is one of the most valuable assets for organizations, but your data needs to be designed, developed and maintained just as a core business product. Organizations need a simple means to validate, cleanse, match and de-duplicate data. One solution that I would like to recommend is Melissa's Data Quality tools for SSIS to address critical data quality issues:

- Melissa Contact Verify

- Melissa Global Verify

- Melissa Matchup

- Melissa Fuzzy Match

- Melissa Personator

- and more…

Learn how Melissa’s data quality, data governance and data integrity solutions address real-world business challenges for data consumers:

| Business Challenge | Melissa Solution |

|---|---|

| Importing Data | Melissa’s solution directly integrates with SSIS which is the tool of choice for SQL Server Business Intelligence Professionals, Developers and DBAs for both on-premises and cloud-based ELT projects. |

| Poor Data Quality | Melissa's Contact Verify and Global Verify are fundamental components to validate name, address, email, phone and more data in the United States and globally for 200+ countries. This enables you to ensure the accuracy of your data directly in SQL Server Integration Services for existing business processes. |

| Correct Duplicate Data | Melissa’s Matchup component is able to confidently de-duplicate data providing a final golden record with a data quality score. |

| Enrich Customer Records | Melissa’s Personator, BusinessCoder, Property and IP Location components can add a myriad data values to records including latitude/longitude coordinates and location intelligence, property characteristics and mortgage details, demographic variables and business firmographic data including SIC codes, sales volume and employee size. |

| Resolve Outdated Data | Beyond the components already mentioned, Melissa’s Fuzzy Match is based on a state-of-the-art matching algorithm to validate and rank your data. |

| Data Verification | With each Melissa component, the final data is accompanied by result codes specifying the actions taken on the field and enables you to pass the updated data between components to have the best final data set. |

| Recurring Data Validation | Melissa offers two options to validate your data on a recurring basis. You can use the default behavior in SSIS with calls out to Melissa’s current data set or quarterly updates are available to use when all data must remain behind the firewall. |

| Low Learning Curve | Drag and Drop Melissa components from the SSIS Toolbox directly into the Data Flow Tasks of existing packages to improve the data quality of your projects. This yields a very low learning curve for SQL Server Professionals enabling them to quickly integrate the Melissa components and demonstrate value to the organization. |

Sample Melissa Solution

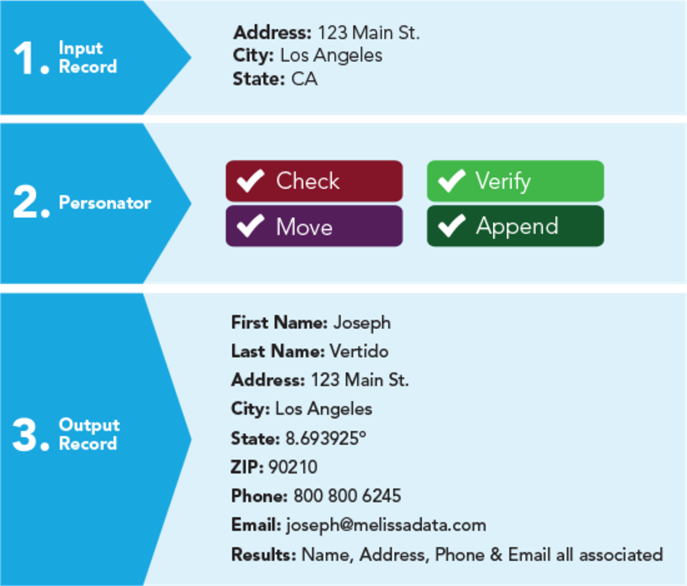

As an example, when working with Melissa’s Personator component for SSIS, your existing data is used as an input. As shown in the image below, you can see a minimal amount of data is available as the input. With integration with the Personator Melissa component the data is checked, verified and appended. The output from the process is a complete record with name, address and contact information. This enables the organization to better utilize the data for marketing, mailing promotions and more.

How do I get started with the Melissa Data Quality Components for SSIS?

- Learn more about all Melissa’s Data Quality Components for SSIS and what they will do to help your organization.

- Download your personal evaluation version of Melissa’s Data Quality Components for SSIS.

- Schedule a personal demo of Melissa’s Data Quality Components for SSIS with a member of the Melissa team and then put the tool through its paces in your environment with your data. See how easy it is to improve the data quality at your organization.

- Communicate how Melissa’s Data Quality Components for SSIS can help

your organization:

- Time savings for data validation, enrichment and cleansing

- Seamless integration into existing SSIS Processes

- Significant functionality out of the box with the ability to customize as needed

- Radically improved data quality, data standardization and data analytics for reporting and decision making

- After working with Melissa’s Data Quality Components for SSIS, measure the tangible and intangible benefits to your reporting processes and determine your next steps to radically improve the decision making in your organization.

Next Steps

- Learn about the value of Melissa’s Data Quality Components for SSIS.

- Get a personal demo of Melissa’s Data Quality Components for SSIS.

- Download Melissa’s Data Quality Components for SSIS.

- Check out these webinars:

MSSQLTips.com Product Editorial sponsored by Melissa makers of Data Quality Components for SSIS.

About the author

Jeremy Kadlec is a Co-Founder, Editor and Author at MSSQLTips.com with more than 300 contributions. He is also the CTO @ Edgewood Solutions and a six-time SQL Server MVP. Jeremy brings 20+ years of SQL Server DBA and Developer experience to the community after earning a bachelor's degree from SSU and master's from UMBC.

Jeremy Kadlec is a Co-Founder, Editor and Author at MSSQLTips.com with more than 300 contributions. He is also the CTO @ Edgewood Solutions and a six-time SQL Server MVP. Jeremy brings 20+ years of SQL Server DBA and Developer experience to the community after earning a bachelor's degree from SSU and master's from UMBC.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2024-01-02