Problem

The widespread availability of GenAI-driven apps is generating interest in their capabilities and value proposition for businesses across many industries. The sheer number of GenAI architectures, platforms, and solution offerings often confuses in-house AI development teams that want to build these solutions. GenAI-driven customer chatbots that let end users interact with company data have become a hot topic in organizational strategic initiatives. Development teams want to understand how to build these custom chatbots on the Azure platform easily and in a way that scales to the enterprise level.

Solution

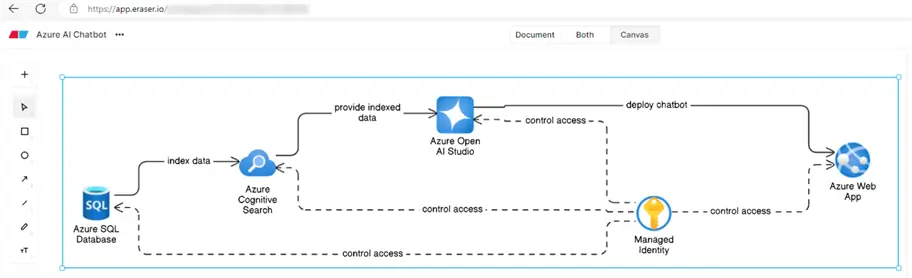

There are several ways to build a chatbot in Azure that lets you interact with your data, whether that data lives in structured databases or in unstructured documents such as PDFs. This tip covers how to build a chatbot using Azure OpenAI Studio, now called Azure AI Foundry. It also uses Azure Cognitive Search, now called Azure AI Search, to index your data stored in an Azure SQL database and, optionally, to vectorize it (generate embeddings). Managed identity handles access management. Finally, the chatbot can be deployed either to a web app or to a custom copilot in Copilot Studio.

The following diagram illustrates this Azure AI Chatbot architecture. Interestingly, this architecture diagram was also created by GenAI, using a tool called Eraser (app.eraser.io), which can create diagrams (ERD, Process Flows, Cloud Architectures, etc.) using natural language prompts.

Here is the prompt used to create the diagram:

“Create an Azure architecture diagram for a chatbot which uses an Azure SQL Database containing source data which is indexed through Azure AI Search (also called Azure Cognitive Search). It is then used as a source in Azure OpenAI Studio (also called Azure AI Foundry) and then deployed to a Web App for customers to chat with. All the authentication is controlled through Managed Identity.”

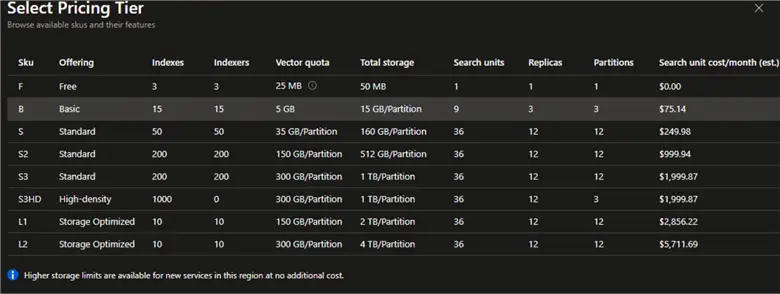

Setup Azure AI Search

Azure AI Search enhances chatbots by providing powerful search functionality that enables fast, efficient information retrieval, natural language processing, and personalized responses. It also supports scalability, advanced query capabilities, and seamless integration with other Azure services, improving user satisfaction and engagement. Several pricing tiers are available. Each subscription can have only one Free tier service; the next tier up, Basic, is sufficient for our proof-of-concept testing.

API Access Control Setting

Ensure that API access control is set to Role-based access control, so that identity and access management is handled through Microsoft Entra ID roles rather than shared API keys, as an enterprise-grade setup requires.
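If you script the search service configuration instead of using the portal, the API access control choice corresponds to properties on the search service resource in the Azure Resource Manager (management) API. The sketch below assumes the `disableLocalAuth` and `authOptions` properties of the `Microsoft.Search/searchServices` resource as I understand them; verify the property names and API version against the current management REST reference before relying on it. The helper name `search_auth_properties` is hypothetical.

```python
import json


def search_auth_properties(mode: str) -> dict:
    """Map the portal's API access control choice to search service properties.

    Assumption: property names follow the Microsoft.Search/searchServices
    management API (disableLocalAuth and authOptions).
    """
    if mode == "rbac":
        # Role-based access control only: requests using API keys are rejected.
        return {"properties": {"disableLocalAuth": True}}
    if mode == "both":
        # Accept either API keys or Microsoft Entra ID bearer tokens.
        return {
            "properties": {
                "disableLocalAuth": False,
                "authOptions": {
                    "aadOrApiKey": {"aadAuthFailureMode": "http401WithBearerChallenge"}
                },
            }
        }
    if mode == "keys":
        # API keys only.
        return {"properties": {"disableLocalAuth": False, "authOptions": {"apiKeyOnly": {}}}}
    raise ValueError(f"unknown access control mode: {mode!r}")


# Request body for a PATCH on the search service resource (not sent here).
print(json.dumps(search_auth_properties("rbac")))
# {"properties": {"disableLocalAuth": true}}
```

With role-based access only, every call to the search service must carry a bearer token, which is what lets managed identities replace keys later in this setup.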

Types of Data Sources for AI Search

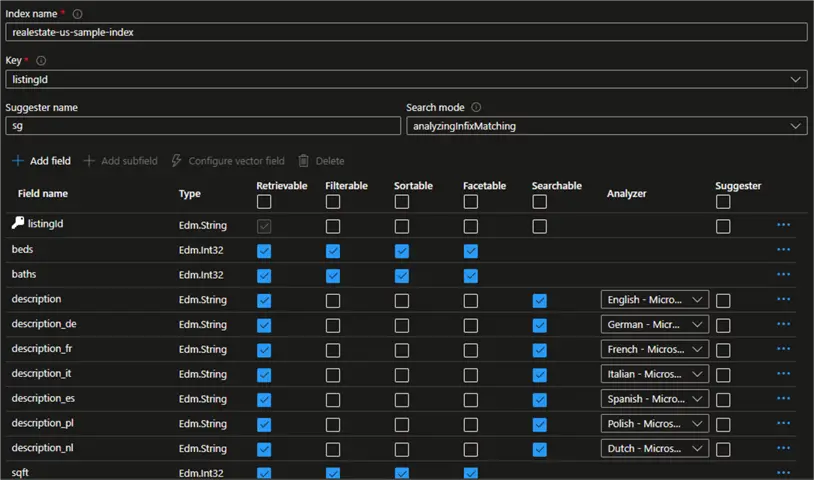

The following list shows the data sources that can be added to Azure AI Search. We will use a sample Azure SQL database as our data source. It contains detailed descriptions of properties located in Washington; each listing includes the type, features, and location of the residence.
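For reference, the same data source can also be defined through the Azure AI Search REST API (`PUT /datasources/{name}`). The sketch below builds the JSON body for a data source of type `azuresql` that authenticates with the search service's managed identity, which uses a `ResourceId`-style connection string instead of a SQL user name and password. The subscription, resource group, server, database, and table values are placeholders, and `build_sql_datasource` is a hypothetical helper. This assumes the search service's managed identity has already been granted read access inside the database (for example, as a contained database user).

```python
import json


def build_sql_datasource(name, subscription_id, resource_group, server, database, table):
    """Return an Azure SQL data source definition for the Azure AI Search REST API.

    Assumption: the search service's system-assigned managed identity can read
    the database, so no credentials are embedded in the connection string.
    """
    resource_id = (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Sql/servers/{server}/"
    )
    return {
        "name": name,
        "type": "azuresql",
        "credentials": {
            # Managed identity connection string: no user ID or password.
            "connectionString": f"Database={database};ResourceId={resource_id};Connection Timeout=30;"
        },
        # The table or view the indexer reads rows from.
        "container": {"name": table},
    }


ds = build_sql_datasource("realestate-ds", "<subscription-id>", "<resource-group>",
                          "<sql-server>", "<database>", "<table-or-view>")
print(json.dumps(ds, indent=2))
```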

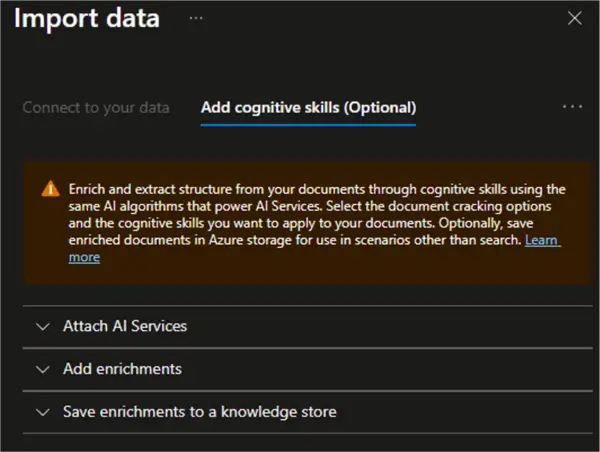

Add Cognitive Skills in Azure AI Search

Adding cognitive skills in Azure AI Search allows you to enrich the data during indexing by applying AI capabilities like language detection, entity recognition, sentiment analysis, and custom skills to enhance the search experience.
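Behind the portal, this enrichment is defined as a skillset. The sketch below builds a minimal skillset body with built-in language detection, entity recognition, and sentiment skills; the `@odata.type` values are the built-in skill types as I understand them from the skillset REST reference. The source path `/document/description` is a hypothetical column name, so substitute the text column from your own table. `build_skillset` is likewise a hypothetical helper.

```python
def build_skillset(name: str, text_field: str) -> dict:
    """Skillset with language detection, location extraction, and sentiment.

    text_field is the enrichment-tree path of the column to analyze,
    e.g. "/document/description" (hypothetical column name).
    """
    # Downstream skills reuse the detected language as an input.
    language_input = {"name": "languageCode", "source": "/document/language"}
    return {
        "name": name,
        "skills": [
            {
                "@odata.type": "#Microsoft.Skills.Text.LanguageDetectionSkill",
                "context": "/document",
                "inputs": [{"name": "text", "source": text_field}],
                "outputs": [{"name": "languageCode", "targetName": "language"}],
            },
            {
                "@odata.type": "#Microsoft.Skills.Text.V3.EntityRecognitionSkill",
                "context": "/document",
                "categories": ["Location"],
                "inputs": [{"name": "text", "source": text_field}, language_input],
                "outputs": [{"name": "locations", "targetName": "locations"}],
            },
            {
                "@odata.type": "#Microsoft.Skills.Text.V3.SentimentSkill",
                "context": "/document",
                "inputs": [{"name": "text", "source": text_field}, language_input],
                "outputs": [{"name": "sentiment", "targetName": "sentiment"}],
            },
        ],
    }


skillset = build_skillset("listings-skillset", "/document/description")
```

Enriched values such as `/document/locations` only land in the index if the indexer also has `outputFieldMappings` that point them at index fields.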

A default index schema is inferred from the data source and provided for you. You can delete the fields you don’t need, and everything is editable at this stage. However, once the index is created, deleting or changing existing fields requires rebuilding the index and re-indexing your documents.
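Because existing field definitions are effectively fixed once the index is built, it can help to check a proposed schema change before applying it. The sketch below is a hypothetical helper that compares two field lists (in the REST API's `fields` format) and reports which changes can be applied in place and which call for a rebuild. It deliberately takes the conservative view described above, treating any change to an existing field as requiring a rebuild even though a few attributes can be updated in place, and it treats adding a new field as safe, since Azure AI Search lets you add fields to an existing index.

```python
def classify_schema_change(old_fields: list[dict], new_fields: list[dict]) -> dict:
    """Compare two index field lists and group the differences.

    Conservative assumption: any removed or modified field forces a rebuild;
    only brand-new fields are applied in place.
    """
    old = {f["name"]: f for f in old_fields}
    new = {f["name"]: f for f in new_fields}
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    modified = sorted(n for n in old.keys() & new.keys() if old[n] != new[n])
    return {
        "in_place": added,
        "needs_rebuild": removed + modified,
        "rebuild_required": bool(removed or modified),
    }


old = [{"name": "id", "type": "Edm.String", "key": True},
       {"name": "description", "type": "Edm.String", "searchable": True}]
new = [{"name": "id", "type": "Edm.String", "key": True},
       {"name": "description", "type": "Edm.String", "searchable": True, "filterable": True},
       {"name": "city", "type": "Edm.String", "filterable": True}]
print(classify_schema_change(old, new))
# {'in_place': ['city'], 'needs_rebuild': ['description'], 'rebuild_required': True}
```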

You can configure a schedule that controls how often the indexed data is refreshed from the source. However, a schedule can’t be configured on samples or on existing data sources without change tracking; to set one up, edit your data source to add change tracking. Available refresh frequencies are once, hourly, daily, or custom (for example, every 30 minutes).
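In REST API terms, change tracking is a `dataChangeDetectionPolicy` on the data source, and the refresh frequency is the indexer's `schedule.interval`, written as an ISO 8601 duration (for example, `PT30M` for every 30 minutes or `P1D` for daily). The sketch below assumes SQL integrated change tracking is already enabled on the database and table. The helper names are hypothetical, and the 5-minute to 24-hour bounds are my understanding of the service limits, so confirm them in the indexer scheduling documentation.

```python
def iso8601_interval(minutes: int) -> str:
    """Convert a refresh interval in minutes to an ISO 8601 duration string."""
    # Assumed service limits: at least 5 minutes, at most 24 hours.
    if not 5 <= minutes <= 1440:
        raise ValueError("interval must be between 5 and 1440 minutes")
    if minutes == 1440:
        return "P1D"
    hours, mins = divmod(minutes, 60)
    return "PT" + (f"{hours}H" if hours else "") + (f"{mins}M" if mins else "")


def with_change_tracking(datasource: dict) -> dict:
    """Return a copy of a data source definition with SQL integrated change tracking."""
    updated = dict(datasource)
    updated["dataChangeDetectionPolicy"] = {
        "@odata.type": "#Microsoft.Azure.Search.SqlIntegratedChangeTrackingPolicy"
    }
    return updated


def build_indexer(name: str, datasource_name: str, index_name: str, minutes: int) -> dict:
    """Indexer definition that refreshes the index on a fixed interval."""
    return {
        "name": name,
        "dataSourceName": datasource_name,
        "targetIndexName": index_name,
        "schedule": {"interval": iso8601_interval(minutes)},
    }


indexer = build_indexer("listings-indexer", "realestate-ds", "listings-index", 30)
```

An hourly schedule is simply `iso8601_interval(60)`, which yields `PT1H`.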

Semantic configurations in Azure AI Search enhance search relevance by using Microsoft’s language understanding models to re-rank search results and provide more accurate and relevant responses. They include features like semantic ranking, captions, and answers, which improve the overall search experience by understanding the context and intent behind queries. Generally, this will yield a better search experience than a standard keyword (lexical) search, which performs fast and flexible query parsing and matching over searchable fields using terms or phrases in any supported language, with or without operators. While this is an optional configuration in AI Search, it is required in Azure OpenAI Studio when you select the semantic search type.
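A semantic configuration tells the ranker which index fields to treat as the title, the main content, and keywords. The sketch below builds the `semantic` section of an index definition and a matching query body for the Search Documents REST API (`POST /indexes/{index}/docs/search`), using property names from the 2023-11-01 REST API as I understand it. The configuration name and the field names (`type`, `description`, `city`) are hypothetical.

```python
def semantic_section(config_name, title_field, content_fields, keyword_fields=()):
    """The 'semantic' block of an index definition (REST API format)."""
    return {
        "configurations": [
            {
                "name": config_name,
                "prioritizedFields": {
                    "titleField": {"fieldName": title_field},
                    "prioritizedContentFields": [{"fieldName": f} for f in content_fields],
                    "prioritizedKeywordsFields": [{"fieldName": f} for f in keyword_fields],
                },
            }
        ]
    }


def semantic_query(text, config_name, top=5):
    """Body for POST /indexes/{index}/docs/search using semantic ranking."""
    return {
        "search": text,
        "queryType": "semantic",
        "semanticConfiguration": config_name,
        "captions": "extractive",  # return highlighted passages from matching documents
        "answers": "extractive",   # request a direct answer when one can be extracted
        "top": top,
    }


index_patch = {"semantic": semantic_section("listings-semantic", "type", ["description"], ["city"])}
query = semantic_query("homes near the beach with a pool", "listings-semantic")
```

A plain keyword (lexical) query is the same request with `queryType` set to `simple` (the default) and no semantic settings.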

Configure Azure OpenAI

Azure OpenAI Studio is a platform for developing and deploying generative AI applications using prebuilt and customizable models, enabling innovation at scale. It integrates with Azure AI Search to improve information retrieval, relevance tuning, and natural language understanding, ensuring accurate and contextually relevant responses.

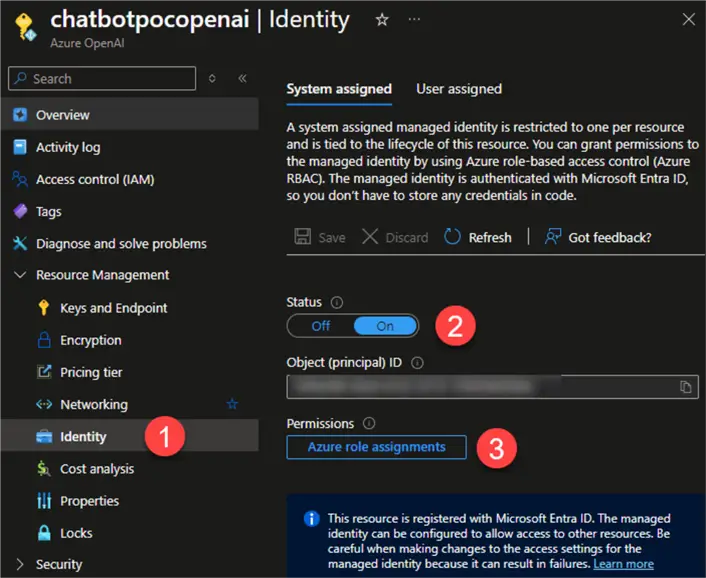

Once your Azure OpenAI resource is up and running, turn on its system-assigned managed identity so that it can authenticate to other Azure resources through role-based access control (RBAC), the recommended configuration for enterprise-grade scalability and deployment.

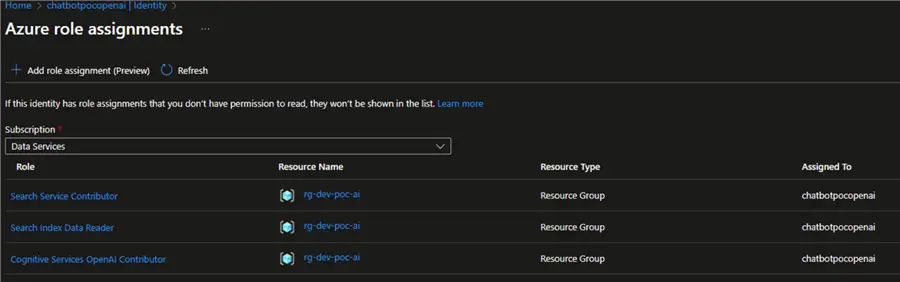

You will also need the following role assignments at the resource group level:

- Search Index Data Reader provides read-only access to search indexes. Users with this role can query and view the data within the search indexes but cannot modify or delete any data.

- Search Service Contributor allows users to manage the search service’s indexes, indexers, and other related resources. It provides permissions to create, update, and delete indexes and indexers, but does not grant full administrative access.

- Cognitive Services OpenAI Contributor grants full access to Azure OpenAI resources, including the ability to create, fine-tune, and deploy models. Users with this role can also upload datasets for fine-tuning and view, query, and filter stored completions data.
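If you prefer to script these role assignments, the Azure CLI command `az role assignment create` accepts a role name and a scope. The Python sketch below only generates the command lines (it does not run them) for the three roles listed above at resource group scope, assuming the assignee is a managed identity (a service principal). The object ID, subscription, and resource group values are placeholders, and `role_assignment_commands` is a hypothetical helper; which principal needs which role depends on your setup, so check the Azure OpenAI On Your Data network and access configuration documentation.

```python
import shlex

# The three roles described above.
ROLES = [
    "Search Index Data Reader",
    "Search Service Contributor",
    "Cognitive Services OpenAI Contributor",
]


def role_assignment_commands(principal_object_id, subscription_id, resource_group,
                             principal_type="ServicePrincipal"):
    """Return one 'az role assignment create' command per role (not executed)."""
    scope = f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
    commands = []
    for role in ROLES:
        args = ["az", "role", "assignment", "create",
                "--assignee-object-id", principal_object_id,
                "--assignee-principal-type", principal_type,
                "--role", role,
                "--scope", scope]
        # shlex.join quotes role names that contain spaces.
        commands.append(shlex.join(args))
    return commands


for cmd in role_assignment_commands("<object-id>", "<subscription-id>", "<resource-group>"):
    print(cmd)
```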

You can find more details regarding network and access configuration for Azure OpenAI on your data in the documentation.

Chat with Your Data

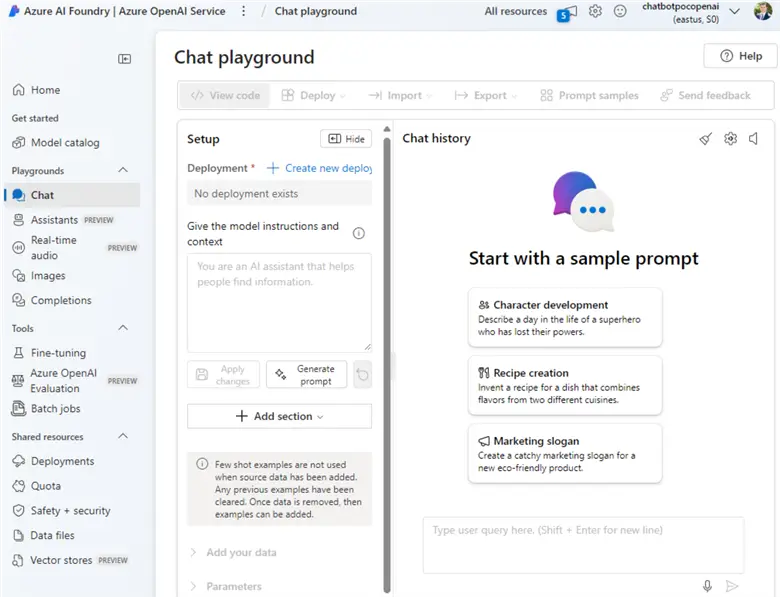

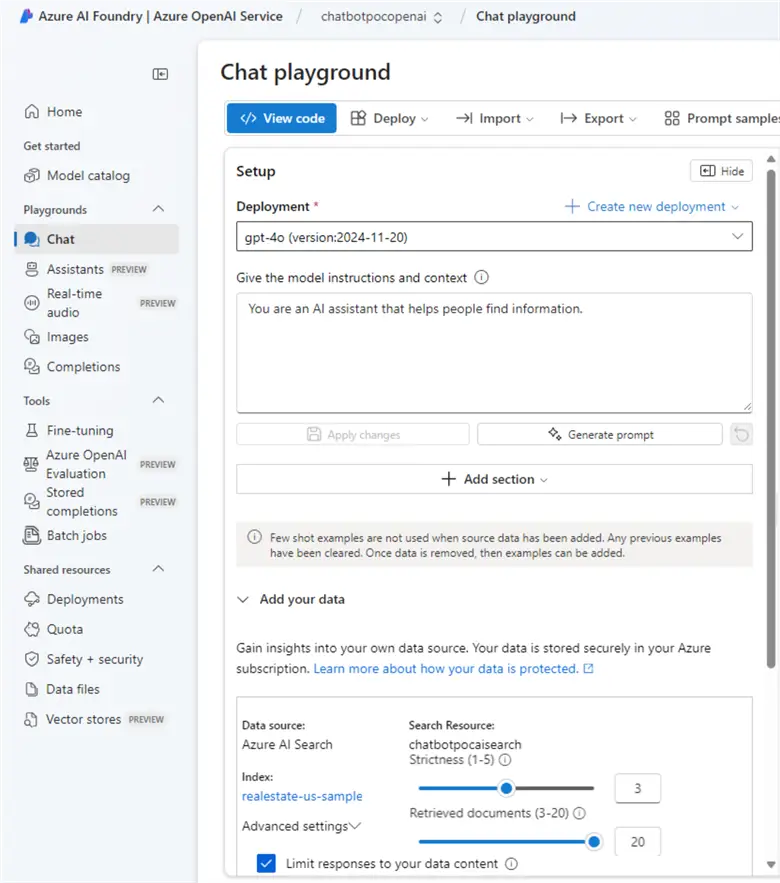

After the Azure portal configurations are complete, launch Azure OpenAI Studio (Azure AI Foundry). Notice the range of capabilities available in its playgrounds, including chat, assistants, real-time audio, images, completions, and more.

You’ll need to create a new deployment; the available options range from base models to fine-tuned models. For this use case, we will select gpt-4o as our chat completion model.

The tokens per minute (TPM) rate limit on a GPT-4o deployment caps the number of tokens the deployment processes per minute, which keeps resource usage predictable and prevents overloading. For this use case, I selected the Global Standard deployment type, so my user principal needs the ‘Cognitive Services OpenAI Contributor’ RBAC role. Follow a similar RBAC assignment process for any other managed deployment type.
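Because the TPM limit is shared by every request to the deployment, a rough budget helps when choosing it. The sketch below is a back-of-the-envelope estimate, not the service's exact rate-limiting algorithm: it assumes each request is charged roughly its prompt tokens (system message, chat history, and retrieved documents) plus the full `max_tokens` response allowance, and it ignores any separate requests-per-minute limit. The function name and the numbers in the example are illustrative only.

```python
def max_requests_per_minute(tpm_limit: int, prompt_tokens: int, max_response_tokens: int) -> int:
    """Rough ceiling on requests per minute under a tokens-per-minute limit.

    Assumption: each request is charged its prompt tokens plus the full
    max_response_tokens allowance.
    """
    per_request = prompt_tokens + max_response_tokens
    if per_request <= 0:
        raise ValueError("tokens per request must be positive")
    return tpm_limit // per_request


# Illustrative numbers: a 30K TPM limit, about 3,000 prompt tokens
# (including retrieved documents), and an 800-token response cap.
print(max_requests_per_minute(30_000, 3_000, 800))  # 7
```

Retrieved documents make the prompt much larger than the user's question alone, so increasing the number of retrieved documents lowers this ceiling.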

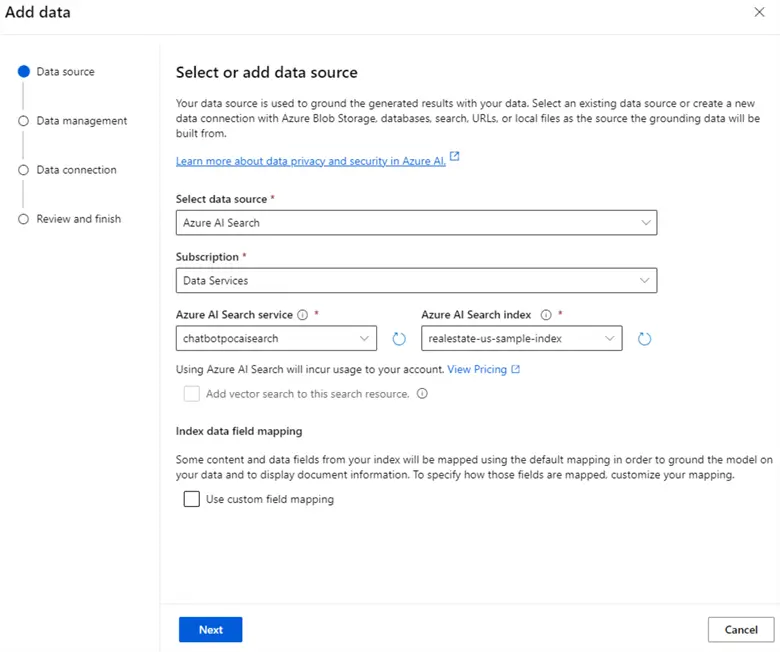

Add Data Sources

Next, add your data source to the chatbot project in Azure OpenAI Studio. Select Azure AI Search along with your subscription, then select the search service and index that you configured in the Azure AI Search resource. Two optional settings are also offered: vector search, which uses vector embeddings to match on meaning rather than exact keywords and can improve the relevance of results, and custom field mapping, which controls which index fields the chatbot treats as content, title, URL, and so on. For now, we will leave these unchecked.

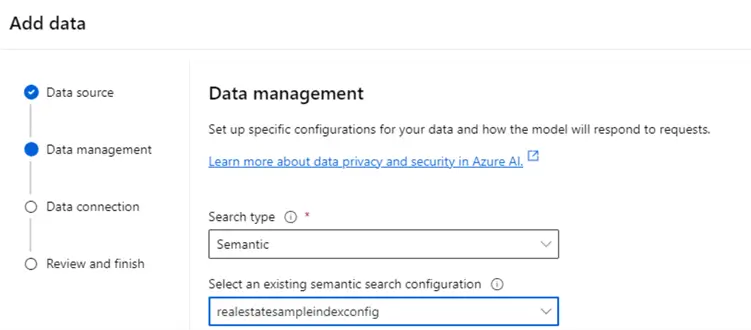

For search type, you’ll be presented with the options of Semantic or Keyword. When selecting ‘Semantic’ search, ensure that you select an existing semantic search configuration that you created in your AI Search resource in the Azure portal.

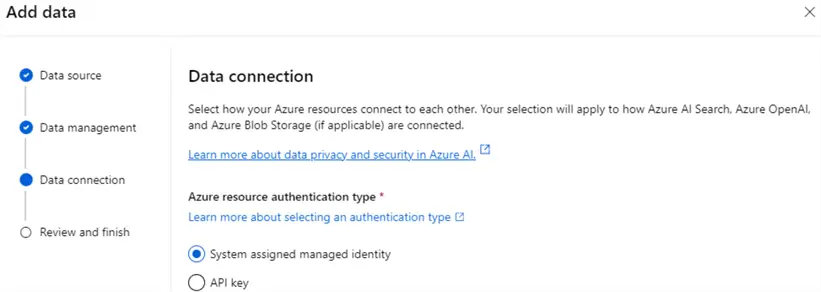

For data connection, choose System assigned managed identity.
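When you export the playground code, these choices appear as a `data_sources` entry in the chat completions request body. The sketch below builds that entry for an Azure AI Search source with the system-assigned managed identity; the field names follow the Azure OpenAI On Your Data request schema (API version 2024-02-01 or later) as I understand it. The endpoint, index, and semantic configuration names are placeholders, and `azure_search_source` is a hypothetical helper.

```python
def azure_search_source(endpoint, index_name, semantic_config=None):
    """Build one 'data_sources' entry for Azure OpenAI On Your Data.

    Uses semantic search when a semantic configuration name is supplied,
    otherwise keyword search ("simple").
    """
    params = {
        "endpoint": endpoint,
        "index_name": index_name,
        # No keys: Azure OpenAI calls Azure AI Search with its own managed identity.
        "authentication": {"type": "system_assigned_managed_identity"},
        "query_type": "semantic" if semantic_config else "simple",
    }
    if semantic_config:
        params["semantic_configuration"] = semantic_config
    return {"type": "azure_search", "parameters": params}


source = azure_search_source("https://<search-service>.search.windows.net",
                             "<index-name>", "<semantic-config>")
```

In this schema, the portal's "Keyword" search type corresponds to `query_type` set to `simple`.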

Give Model Prompt Instructions

You can give the model prompt instructions and context as needed. You can also export your code in several languages, including JSON, Python, C#, curl, Java, Go, and JavaScript, which is useful if you prefer to customize the chatbot further in an IDE of your choice (e.g., VS Code). Once your data is added, you can limit responses to your data content; otherwise, the LLM may also draw on its training data when applicable.

You can also adjust the strictness and retrieved documents settings. Strictness determines how aggressively the system filters out irrelevant documents based on their similarity scores. Retrieved documents sets how many of the top-scoring chunks fetched from the connected data source are passed to the model to answer user queries.
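In the exported request, the "limit responses to your data" option, strictness, and retrieved documents settings correspond, as I understand the On Your Data schema, to the `in_scope`, `strictness`, and `top_n_documents` parameters of the Azure AI Search data source. The sketch below applies them to an existing `data_sources` entry without mutating it; the function name and example values are hypothetical, and the 1 to 5 strictness range matches the setting's documented scale.

```python
def with_retrieval_settings(source: dict, in_scope=True, strictness=3, top_n_documents=5) -> dict:
    """Return a copy of an Azure AI Search data source entry with retrieval settings.

    in_scope: answer only from retrieved documents when True.
    strictness: 1 (lenient) to 5 (strict) filtering of low-similarity chunks.
    top_n_documents: how many retrieved chunks are passed to the model.
    """
    if not 1 <= strictness <= 5:
        raise ValueError("strictness must be between 1 and 5")
    if top_n_documents < 1:
        raise ValueError("top_n_documents must be positive")
    params = dict(source["parameters"])
    params.update(in_scope=in_scope, strictness=strictness, top_n_documents=top_n_documents)
    return {**source, "parameters": params}


base = {"type": "azure_search", "parameters": {"endpoint": "<endpoint>", "index_name": "<index>"}}
tuned = with_retrieval_settings(base, strictness=4, top_n_documents=10)
```

Higher strictness discards more loosely matching chunks, which reduces off-topic answers but can also leave the model with nothing to cite, so it is worth testing a couple of values against real questions.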

You can also adjust the following parameters, as needed. These settings help customize the chatbot’s behavior to suit your needs.

- Past Messages Included to maintain conversation context.

- Temperature for response randomness.

- Max Response Tokens for response length.

- Top P for response diversity.

- Frequency Penalty to reduce repetition.

- Presence Penalty to encourage new ideas.
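These playground settings can be translated into a chat completions request body as sketched below. "Past messages included" is not sent as a field of its own; it controls how many earlier turns go into `messages`, so the helper trims the history itself. The ranges checked (temperature 0 to 2, top_p 0 to 1, penalties -2 to 2) are the documented bounds for these parameters, while the function name and default values are illustrative assumptions.

```python
def build_chat_body(system_prompt, history, user_message, past_messages=10,
                    temperature=0.7, max_tokens=800, top_p=0.95,
                    frequency_penalty=0.0, presence_penalty=0.0):
    """Assemble a chat completions body from playground-style settings."""
    if not 0 <= temperature <= 2:
        raise ValueError("temperature must be between 0 and 2")
    if not 0 <= top_p <= 1:
        raise ValueError("top_p must be between 0 and 1")
    for name, value in (("frequency_penalty", frequency_penalty),
                        ("presence_penalty", presence_penalty)):
        if not -2 <= value <= 2:
            raise ValueError(f"{name} must be between -2 and 2")
    # Keep only the most recent turns (history[-0:] would keep everything).
    recent = history[-past_messages:] if past_messages > 0 else []
    messages = [{"role": "system", "content": system_prompt}, *recent,
                {"role": "user", "content": user_message}]
    return {
        "messages": messages,
        "temperature": temperature,
        "max_tokens": max_tokens,
        "top_p": top_p,
        "frequency_penalty": frequency_penalty,
        "presence_penalty": presence_penalty,
    }


body = build_chat_body("You answer questions about property listings.",
                       history=[], user_message="Which homes have a pool?",
                       temperature=0.2)
```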

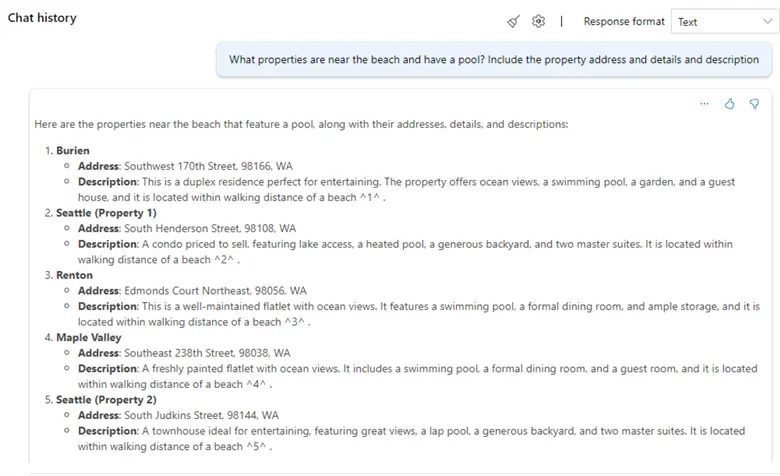

Now that all the hard setup work is done, it’s time to play in the chat playground. Let’s ask the chatbot about properties that are near the beach and have a pool. The answer is generated from listings retrieved from the search index at query time, so you are chatting directly with your data. The chatbot can be customized further via code in your IDE and deployed as a web app, as a Teams app, or to Copilot Studio.
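Outside the playground, the same question can be sent to the deployment's chat completions REST endpoint with a Microsoft Entra ID bearer token. The sketch below uses only the Python standard library to build (not send) that request and to pull the answer and citations out of a parsed response. It assumes API version `2024-02-01`, a token already obtained for the `https://cognitiveservices.azure.com/.default` scope (for example, with the `azure-identity` package), and the `message.context.citations` response shape used by On Your Data. The endpoint, deployment, and function names are placeholders.

```python
import json
import urllib.request

API_VERSION = "2024-02-01"  # assumption: an On Your Data GA version with this schema


def build_request(endpoint, deployment, token, body):
    """Build a POST request to the chat completions endpoint (not sent here)."""
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={API_VERSION}")
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {token}"},
        method="POST",
    )


def answer_and_citations(response: dict):
    """Extract the reply text and (title, content) pairs for each citation."""
    message = response["choices"][0]["message"]
    citations = message.get("context", {}).get("citations", [])
    return message["content"], [(c.get("title"), c.get("content")) for c in citations]


body = {
    "messages": [{"role": "user",
                  "content": "Which properties are near the beach and have a pool?"}],
    # Add the azure_search data source entry from your exported playground code.
    "data_sources": [],
}
req = build_request("https://<resource>.openai.azure.com", "<deployment>", "<token>", body)
# with urllib.request.urlopen(req) as resp:
#     reply, sources = answer_and_citations(json.load(resp))
```

Returning the citations alongside the reply makes it easy for a custom front end to show which listings an answer came from.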

The chat capabilities can be further enhanced for speech in various languages.

Next Steps

- Explore additional use cases around Azure SQL indexer – Azure AI Search to build a custom chatbot with your data in Azure SQL DB.

- Read about the Azure OpenAI Service – Pricing.

- Understand Data, privacy, and security for Azure OpenAI Service – Azure AI services.

- Read more about Using your data with Azure OpenAI Service – Azure OpenAI.

- Check out these additional resources: