By: Siddharth Mehta

Overview

Considering our business case, the first requirement that we have figured out is to create an online transaction system that would store sales and orders related data. Discussion on the front-end of the solution is beyond the scope of this tutorial, so we would be focusing on developing the back-end data model. This data model would become the primary data source for the sales analytics that we intend to derive. In this chapter we will look at considerations for analyzing a data source to derive the intended analytics.

Explanation

Whether a new data source is being developed or data is required to be extracted from an existing transactional or non-transactional data sources, it requires careful considerations for extracting data. We would be developing the OLTP model for our business case in the next chapter. Before that we need to have some background of a few key considerations for analyzing the data sources prior to data modeling and the data extraction strategy.

- Nature of data - Data can be flattened, continuous, discrete, numerical, textual, etc. The nature of the data is an important factor to consider for data modeling as well as data extraction. It provides key inputs while designing the data model as well as data extraction strategy.

- Nature of data model - The data model can be of different types depending upon the domain of the data. For example, Hierarchical, Entity-Attribute-Value (EAV), relational, dimensional, network, etc. The nature of the data model provides clarity on the topology of the data in the data model.

- Volume of data - Volume of data and the growth rate of the same helps to estimate the scale of data, which is an important input while developing the data extraction design.

- Frequency of data modification - Frequency of changes in the data helps to estimate the read versus writes against the data. This is an important input to consider while planning for the performance of the data model.

- Delta detection capability - The very first challenge one faces for data extraction is the source system's ability to detect changes in the data at any given point in time, also known as delta in BI terminology. If the source system does not have this capability, then one would have to setup additional snapshots of data to enable change detection. As a last option one would have to always extract the entire data set, which in large scale databases is not a feasible option at all. So if one has the opportunity of designing the data model, one should always capture auditing information in the data model to enable easy detection of changes in data. An easy way of implementing this is by including fields like CreatedBy, ModifiedBy, CreatedDateTime, LastModifiedDataTime, etc.

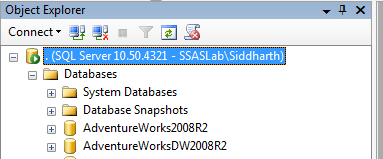

Considering the business scenario at hand, we would need to design a data model which would act as the source system for the next layers of the solution. For all the exercises going forward, we will use the AdventureWorks database as it has the all the data models as well as test data that we would be needed for our exercises. You can download the databases from here. Install the OLTP and DW databases on your machine where you are developing the rest of the exercises. You can install any version 2008 R2 / 2012 as more or less they have the same schema.

Once installed, it should be able to access these databases in

SQL Server Management Studio (SSMS) as shown below.

Additional Information

- Data modeling is a vast subject. Consider reading this article about data modeling to get more educated on the data modeling process. This would help to quickly grasp the data modeling process in the next chapter.

- Check out these tips on SQL Server Data Modeling.