By: Haroon Ashraf | Comments (5) | Related: 1 | 2 | More > Integration Services Development

Problem

As a Data Warehouse or SQL BI (Business Intelligence) developer how I can make sure that my SQL Server Integration Services (SSIS) packages use Azure UTC time to log events rather than my local system time? My packages are built locally, but run against an Azure SQL Database.

Solution

The solution is to use the built-in UTC time function available in an Integration Services Project to avoid time tracking issues particularly during test runs or logging events when you are using your local dev machine which follows GMT and your staging database is Azure SQL Database which follows UTC.

The solution is divided into two parts where the first part of this tip series is fully focused on basic data warehouse concepts related to this tip (since without this conceptual knowledge the beginners may not be able to comfortably understand this tip) and preparing the environment to create and run Integration Services Project SSIS Package alongside source and Data Warehouse database setups which need to be fully functional in order to replicate the real time scenario of using built-in UTC time which is covered in the second part series.

SQL Server Data Warehouse Basic Concepts

Let's first go through some basics of a data warehouse and the need for the built-in UTC time function provided by Integration Services Project with the help of a real-world scenario.

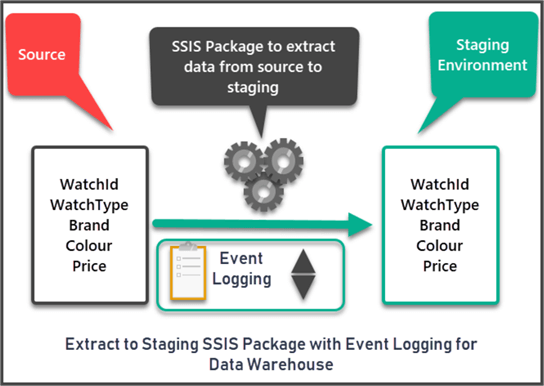

SSIS Package for Staging Extract (Requirement)

There is a business requirement to write an SSIS package for the data warehouse which runs a staging extract from the source system to your staging environment (database) alongside logging this activity.

What is Staging Extract?

A staging extract is typically one of the first steps to load the data from source into the data warehouse database where it can be staged to be processed further.

For example, if you have table called Book to store all the books of the library then your staging environment is going to be a data warehouse or staging database which also contains the Book table and it is normally one-to-one mapping of source columns with destination columns with a few exceptions.

Staging Extract Executions?

A staging extract can run through an SSIS Packages which copies the data from the data source into the destination which is either a staging database or staging schema in a data warehouse database.

For example, if you have a source database named Watches then there must a staging environment in the form a staging database WatchesStaging or a staging schema in a Data Warehouse database such as Staging.WatchOrder, Staging.WatchType, etc.

How do you create a Staging Extract?

As mentioned earlier, the staging extract can be created by creating an SSIS Package inside an Integration Services Project to copy data from source (database) to staging (database).

What is Data Warehouse logging?

Logging generally means a method to record the progress of a process in the Data Warehouse and it is really helpful in many ways including:

- Tracing an error

- Investigating an issue

- Replicating a problem

What is logging for the Staging Extract?

Logging in the staging extract of a Data Warehouse helps us to track the results of an SSIS Package run such as how many rows have been successfully transferred from the source to destination or what time the package started.

Logging is a very essential part of Data Warehouse ETL architecture since it does auditing for your Data Warehouse data workflows including staging extracts, however, the details of logging are beyond the scope of this tip.

Types of Logging Strategy

There are two types of logging strategies in the context of Data Warehouse:

- Custom Logging

- Out-of-the-box logging

This tip is mainly related to a custom logging strategy which works well with a Data Warehouse.

Prerequisites

Let's discuss the prerequisites of the tip visualizing a real-world scenario.

Azure Account Setup

This tip assumes that you have an Azure account to create an Azure SQL Databases or create your free Azure account if you would like to follow the steps and accept the terms and conditions of the Azure free account.

Prerequisites for SSIS Packages used in a Data Warehouse

One of the prerequisites of this tip is to be have some basic experience creating and running SSIS Packages particularly used in Data Warehouse extracts or similar processing.

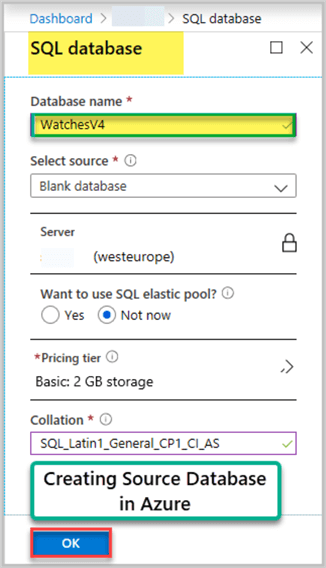

Azure SQL Database as Source (WatchesV4)

This tip assumes that you have an Azure SQL Database as the source database.

Please sign into your Azure portal and create a basic tier source database called WatchesV4:

Connect to the source database (created above) via SQL Server Management Studio (SSMS) or Azure Portal (using Query editor Preview) and run the following setup script to create and populate the tables:

-- (1) Create WatchesV4 database through Azure Portal

-- (2) Connect to WatchesV4 database via SSMS or Azure Portal

-- (3) Creating Watch table

CREATE TABLE [dbo].[Watch]

(

[WatchId] INT NOT NULL IDENTITY(1,1),

[WatchType] VARCHAR(40),

[Brand] VARCHAR(40),

[Colour] VARCHAR(20),

[Price] DECIMAL(10,2)

CONSTRAINT [PK_WatchType] PRIMARY KEY (WatchId),

);

-- (4) Populating Watch table

SET IDENTITY_INSERT dbo.Watch ON

INSERT INTO [dbo].[Watch] ([WatchId], [WatchType], [Brand], [Colour], [Price]) VALUES (1, N'Digital', N'Casio', N'Black', CAST(150.00 AS Decimal(10, 2)))

INSERT INTO [dbo].[Watch] ([WatchId], [WatchType], [Brand], [Colour], [Price]) VALUES (2, N'Analogue', N'Casio', N'Blue', CAST(200.00 AS Decimal(10, 2)))

INSERT INTO [dbo].[Watch] ([WatchId], [WatchType], [Brand], [Colour], [Price]) VALUES (3, N'Smart', N'Polar', N'Yellow', CAST(300.00 AS Decimal(10, 2)))

INSERT INTO [dbo].[Watch] ([WatchId], [WatchType], [Brand], [Colour], [Price]) VALUES (4, N'Sports', N'Timex', N'Black', CAST(150.00 AS Decimal(10, 2)))

INSERT INTO [dbo].[Watch] ([WatchId], [WatchType], [Brand], [Colour], [Price]) VALUES (5, N'Digital', N'Seiko', N'Brown', CAST(185.00 AS Decimal(10, 2)))

SET IDENTITY_INSERT dbo.Watch OFF

-- (5) Creating WatchOrder table

CREATE TABLE [dbo].[WatchOrder]

(

[WatchOrderId] INT NOT NULL IDENTITY(1,1),

[WatchId] INT NOT NULL,

[Quantity] INT NOT NULL,

[OrderDate] DATETIME2 NOT NULL,

[Price] DECIMAL(10,2) NOT NULL,

CONSTRAINT [PK_WatchOrder] PRIMARY KEY ([WatchOrderId]),

CONSTRAINT [FK_WatchOrder_ToTable] FOREIGN KEY (WatchId) REFERENCES [Watch]([WatchId]),

)

-- (6) Populating WatchOrder table

SET IDENTITY_INSERT [dbo].[WatchOrder] ON

INSERT INTO [dbo].[WatchOrder] ([WatchOrderId], [WatchId], [Quantity], [OrderDate], [Price]) VALUES (1, 1, 1, N'2019-01-10 00:00:00', CAST(150.00 AS Decimal(10, 2)))

INSERT INTO [dbo].[WatchOrder] ([WatchOrderId], [WatchId], [Quantity], [OrderDate], [Price]) VALUES (3, 1, 1, N'2019-01-20 00:00:00', CAST(150.00 AS Decimal(10, 2)))

INSERT INTO [dbo].[WatchOrder] ([WatchOrderId], [WatchId], [Quantity], [OrderDate], [Price]) VALUES (4, 2, 1, N'2019-02-03 00:00:00', CAST(200.00 AS Decimal(10, 2)))

INSERT INTO [dbo].[WatchOrder] ([WatchOrderId], [WatchId], [Quantity], [OrderDate], [Price]) VALUES (5, 2, 2, N'2019-02-15 00:00:00', CAST(400.00 AS Decimal(10, 2)))

INSERT INTO [dbo].[WatchOrder] ([WatchOrderId], [WatchId], [Quantity], [OrderDate], [Price]) VALUES (6, 4, 2, N'2019-03-01 00:00:00', CAST(300.00 AS Decimal(10, 2)))

SET IDENTITY_INSERT [dbo].[WatchOrder] OFF

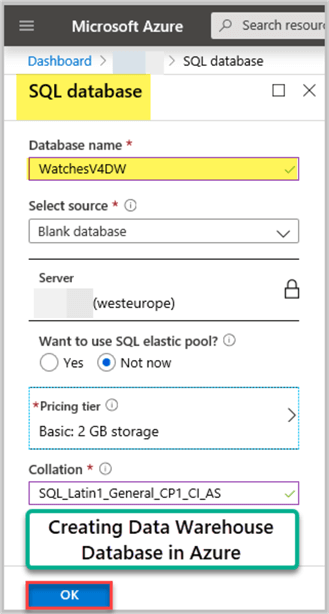

Azure Staging Database (WatchesV4DW)

This tip assumes that you have an Azure SQL Database for the staging environment.

Create Azure SQL Database WatchesV4DW using the Azure Portal:

Please run the following setup script after connecting to the newly created Azure SQL Database (WatchesV4DW) via SSMS or Azure Portal Query editor preview:

-- (1) Create WatchesV4DW database through Azure Portal -- (2) Connect to WatchesV4DW database via SSMS or Azure Portal -- (3) Creating Staging Schema CREATE Schema [Staging] Authorization DBO; GO -- (3) Creating Watch table in Staging environment CREATE TABLE [Staging].[Watch] ( [WatchId] INT NOT NULL IDENTITY(1,1), [WatchType] VARCHAR(40), [Brand] VARCHAR(40), [Colour] VARCHAR(20), [Price] DECIMAL(10,2) );

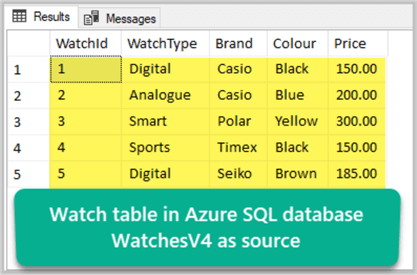

Data Check

Please connect to your source database WatchesV4 via SSMS and run the following query to check the data which has been inserted through the setup script earlier:

-- Connect to Azure SQL Database WatchesV4 via SSMS or Azure Portal -- View Watch table records SELECT w.WatchId, w.WatchType, w.Brand, w.Colour, w.Price FROM dbo.Watch w

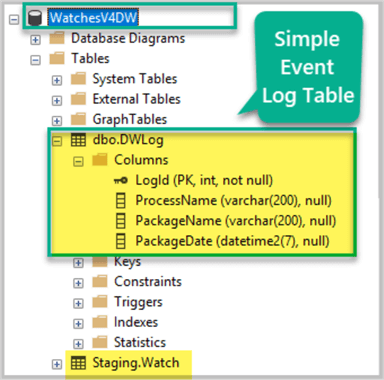

Simple Event Logging Table

We also need to create a table in the Data Warehouse database which is going to save \ log the events that take place during Data Warehouse processing including running the SSIS Packages to extract data from the source to staging environment.

Please create a small table DWLog to log data warehouse events in EvolviV4DWas follows:

-- (1) Connect to WatchesV4DW Azure SQL database via SSMS or Azure Portal -- (2) Create simple logging events table CREATE TABLE DWLog ( LogId INT IDENTITY(1,1), ProcessName VARCHAR(200), PackageName VARCHAR(200), PackageDate DATETIME2, CONSTRAINT [PK_DWLog] PRIMARY KEY (LogId), )

The table once created can be seen as follows:

Create SQL Login and User for databases

To create and run SSIS Packages you either have to supply Windows credentials or SQL database user credentials in a typical Integration Services Project development scenario.

Please create a new login DW from the master database on Azure SQL Server resource and add DW-User for both databases with access.

-- User master database on Azure CREATE LOGIN [DW-User] WITH PASSWORD='AJKD123*'

Then create DW-User giving read and write access to both databases by using the following script:

-- (1) Connect to WatchesV4 source database via SSMS or Azure Portal -- (2) Creating [DW-User] user for [DW-User] Login CREATE USER [DW-User] FOR LOGIN [DW-User] -- (3) Giving full access (to be only used for demo purposes because of the risks with full access) to the user EXEC sp_addrolemember 'db_owner', 'DW-User'; -- (4) Connect to WatchesV4DW destination database via SSMS or Azure Portal -- (5) Creating [DW-User] user for [DW-User] Login CREATE USER [DW-User] FOR LOGIN [DW-User] -- (6) Giving full access (to be only used for demo purposes because of the risks with full access) to the user EXEC sp_addrolemember 'db_owner', 'DW-User';

Setup Staging Extracts for Event Logging

Let's now create an SSIS Package which is going to extract data from the source (WatchesV4) to the destination (WatchesV4DW).

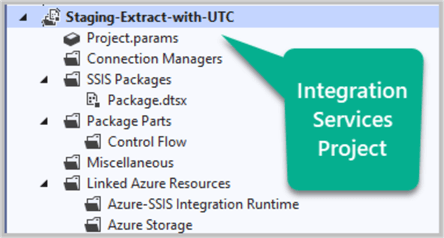

Create Integration Services Project

Open Visual Studio (we are using VS 2019 in this tip) and create a new Integration Services Project called Staging-Extract-with-UTC under the Solution as follows:

Rename Package

Please rename the Package.dtsx as staging-extract-watch.dtsx under SSIS Packages folder.

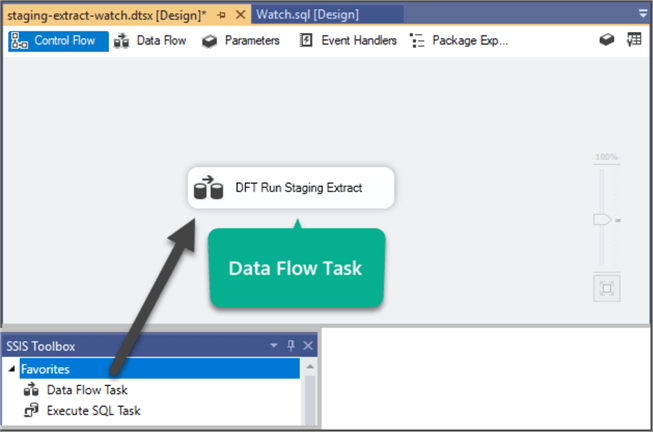

Create Data Flow Task

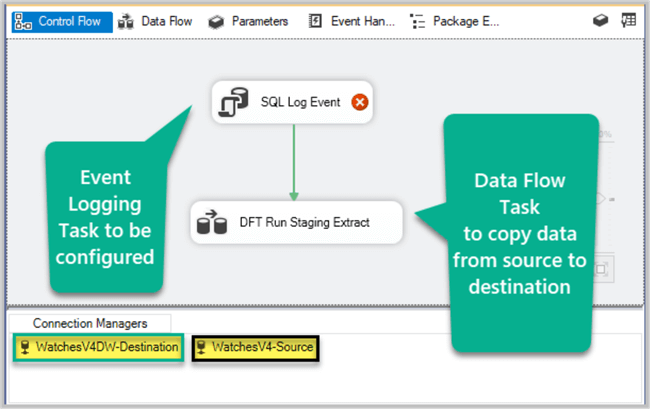

Drag and drop a Data Flow Task from the SSIS Toolbox onto the Control Flow designer and rename it as DFT Run Staging Extract:

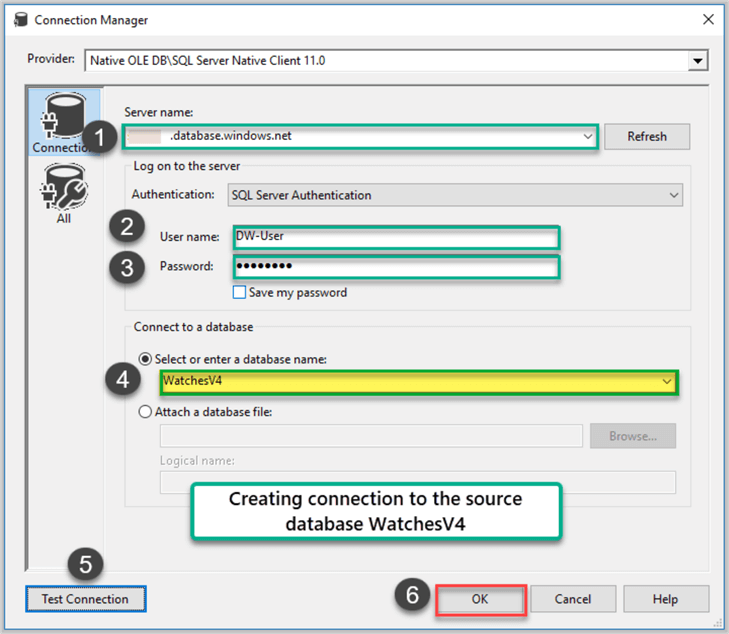

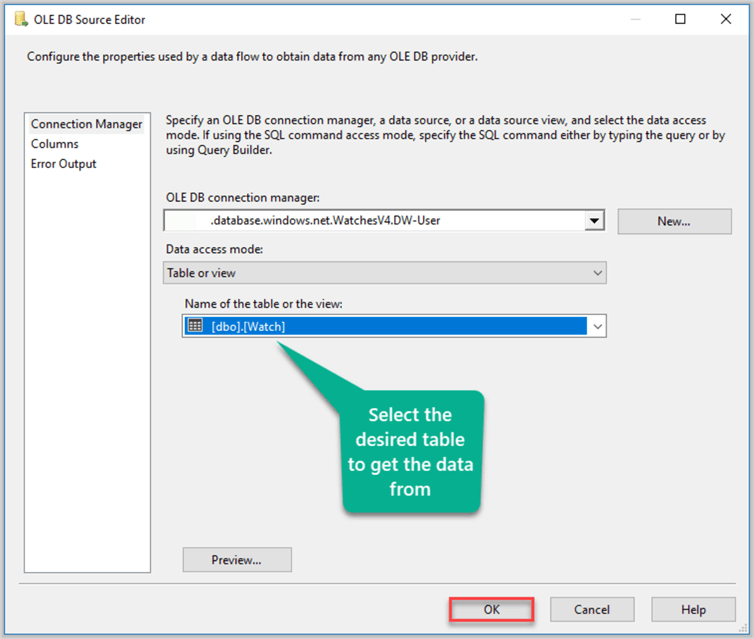

Configure OLE DB Source to point to WatchesV4

Double click the data flow task DFT Run Staging Extract to open Data Flow Task designer.

Create an OLE DB Source component and name it OLE SRC Watch and then double click it to point to the source database by using the SQL user DW-User and the password (AJKD123*) we created earlier:

Select the desired table Watch and click OK:

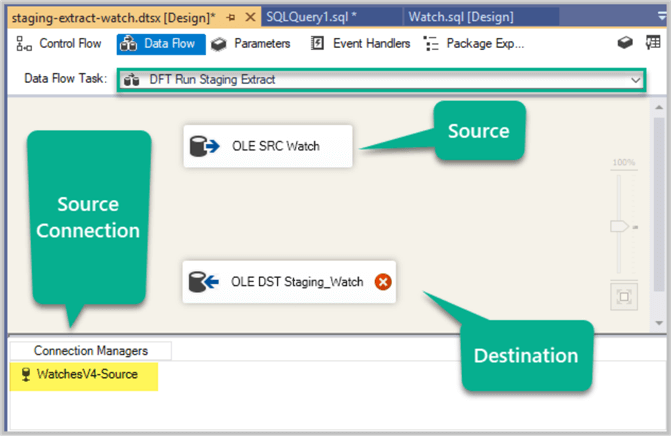

Configure OLE DB Destination to point to Staging.Watch table

Drag an OLE DB Destination from the SSIS Toolbox and drop it onto the Data Flow Task designer surface and name it OLE DST Staging_Watch:

Join source with destination using the data flow path.

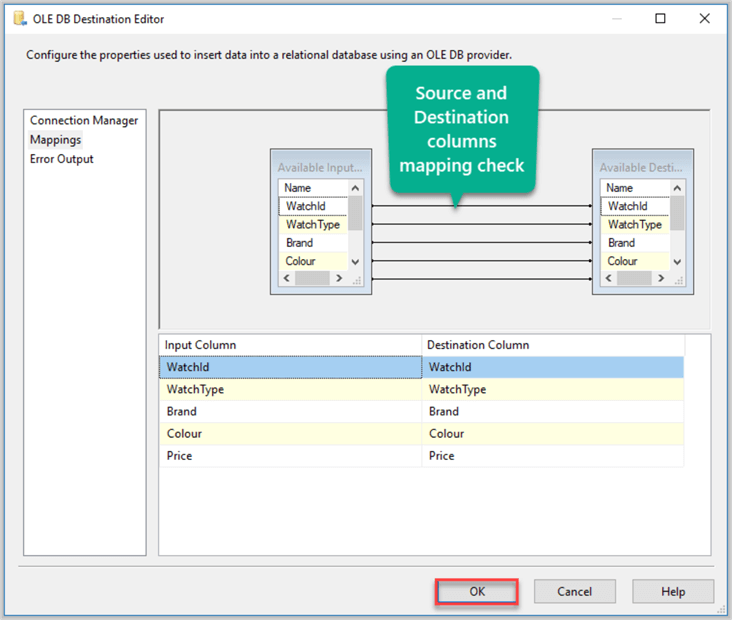

Now configure the OLE DB Destination to point to Staging.Watch in the Data Warehouse WatchesV4DW by creating a destination connection as WatchesV4DW-Destination and then checking columns mappings:

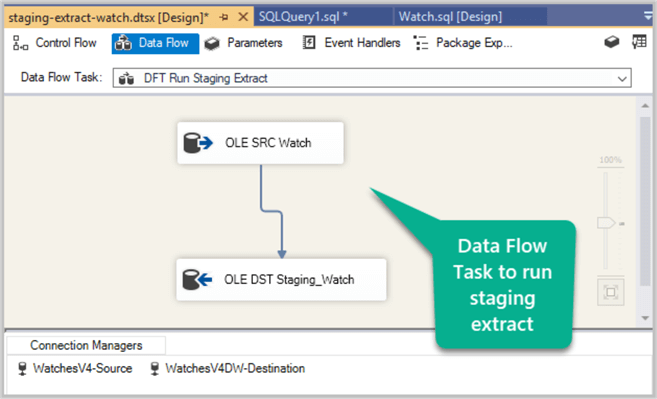

The Data Flow Task now looks as follows:

Setup Simple Event Logging

Once the package to extract data to the staging environment has been setup the next big step is to use an approach to record these events so that they can be traced, if needed.

This can be done by setting up an event logging strategy. We are setting up a very simple event logging strategy to prepare the environment.

Simple Event Logging Logic

We are going to setup an event logging method so that the following information about the package can be stored:

- Process Name

- Package Name

- Package Date (when it ran)

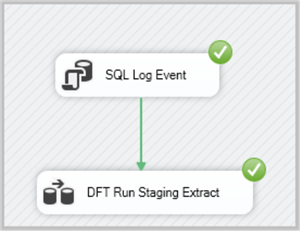

Add Execute SQL Task before Data Flow Task

Now go back to the Control Flow window and please complete the following steps:

- Add Execute SQL Task from SSIS Toolbox just before Data Flow Task

- Name the newly added Execute SQL Task as SQL Log Event

- Join SQL Log Event success path to Data Flow Task DFT Run Staging Extract

Add SQL Log Logic

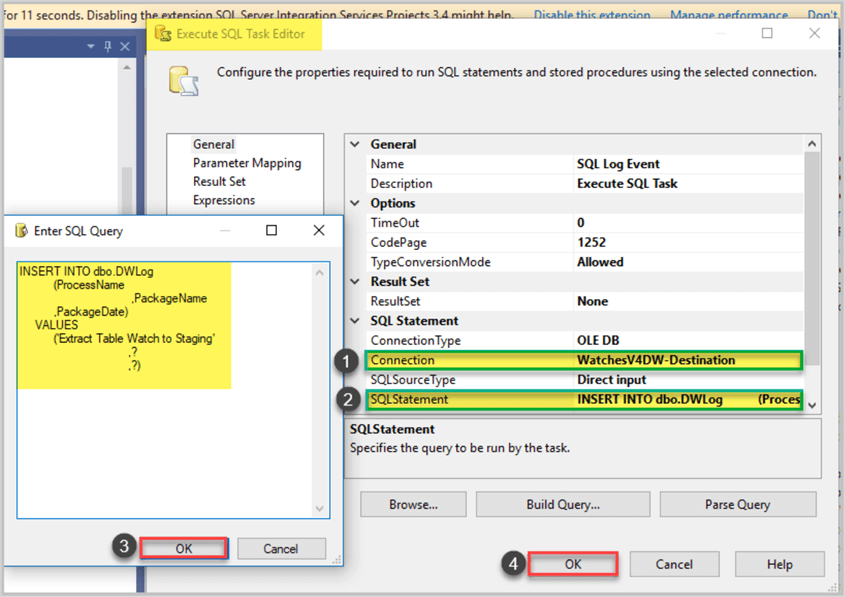

Double click SQL Log task and setup the following values in the editor:

Connection: WatchesV4DW-Destination

Next write the following code for the SQL Statement:

INSERT INTO dbo.DWLog

(ProcessName

,PackageName

,PackageDate)

VALUES

('Extract Table Watch to Staging'

,?

,?)

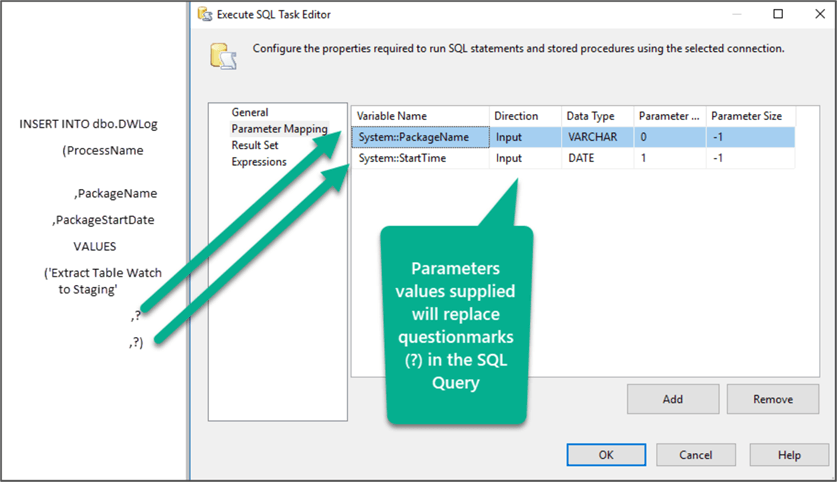

Next, click Parameter Mapping from the left side navigation bar of the SQL Task Editor to add parameter values to map to the SQL Query where (question marks) are used. Please remember the question marks (?) in the SQL query will be replaced with these supplied parameters values when the package runs.

The Parameter Mapping is as follows:

Test Setup

We are now ready to test run the setup.

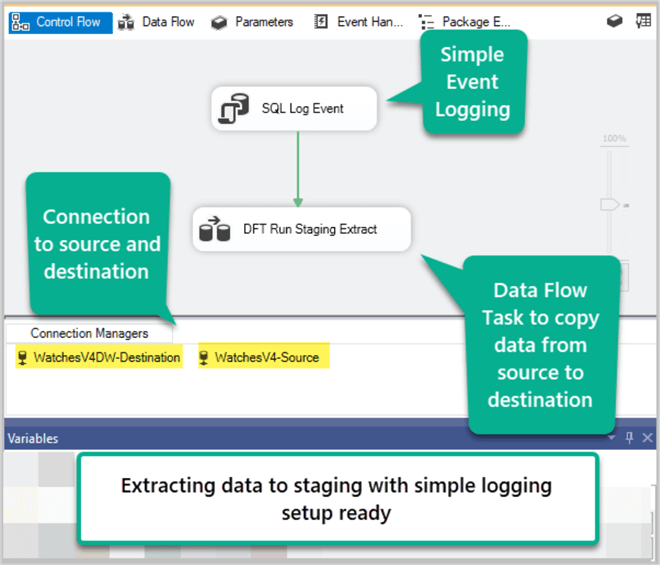

View Setup

Have a quick look at the package now it should be ready to run as follows:

Log Table Check

Please run the following script against the Data Warehouse database (destination):

-- (1) Connect to WatchesV4DW database via SSMS or Azure Portal -- (2) View Log table before running staging package with simple logging SELECT l.LogId, l.ProcessName,l.PackageName,l.PackageDate from dbo.dwlog l

The output is as follows:

Test Run Setup

Right click on Package name and click Execute Package to run the package:

The package has run successfully!

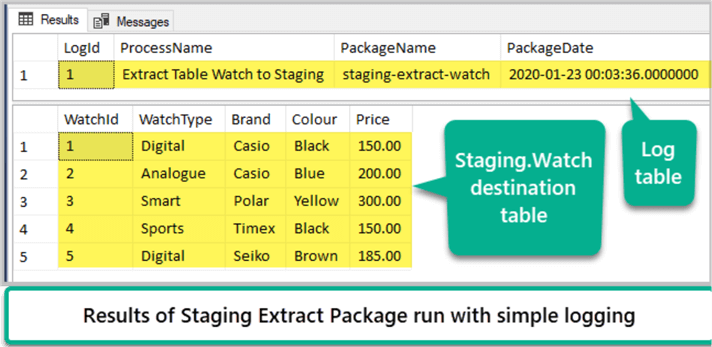

Data Check

Now quickly check both destination and log tables by running the following script:

-- (1) Connect to WatchesV4DW Azure SQL Database via SSMS or Azure Portal -- (2) View Log table after extract to staging package with simple logging ran SELECT d.LogId ,d.ProcessName ,d.PackageName ,d.PackageDate FROM dbo.DWLog d -- (3) View destination table after extract to staging package with simple logging ran SELECT w.WatchId ,w.WatchType ,w.Brand ,w.Colour ,w.Price FROM Staging.Watch w

The output is as follows:

Congratulations, the setup has been prepared and test run successfully alongside simple event logging!

Please stay in touch with MSSQLTips.com since the next part of this tip is on its way soon where we are going to understand and implement the importance of built-in UTC Time function in real world Data Warehouse logging scenarios to avoid incorrect time differences.

Next Steps

- Please try to create WatchOrder staging extract package with simple logging and see if you are able to test run it successfully using the above tip

- Please try to improve log table by adding rows and see if you can log the number of copied rows from source to destination with the help of the above tip

- Please try to add another logical step which resets the staging table before each new package runs to comply with Data Warehouse standard practices

About the author

Haroon Ashraf's interests are Database-Centric Architectures and his expertise includes development, testing, implementation and migration along with Database Life Cycle Management (DLM).

Haroon Ashraf's interests are Database-Centric Architectures and his expertise includes development, testing, implementation and migration along with Database Life Cycle Management (DLM).This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips