By: Tim Ford | Comments (3) | Related: > Fragmentation and Index Maintenance

Problem

I needed to provide information to one of our more-difficult vendors that we were updating our index statistics. We could not get our performance issue escalated past their level 1 support because they were adamant in stating that our performance issues related to out-of-date statistics. I was planning on simply sending them the results of a query against one of the many Dynamic Management Views (DMVs) that ship with SQL Server to give them this information. Statistics update date is stored as metadata on the SQL instance, but after a great deal of research against the system tables and DMVs I realized that this information is not readily exposed to the DBA via any other method than the STATS_DATE() function or the SQL Server Management Studio graphical user interface (GUI). I was left looking for a method to include some general index metadata, along with the date the statistics were last updated to this vendor so we could begin to look into the root cause of my performance problems.

Solution

A little bit about statistics before I begin. Statistics, when discussing them in the context of Microsoft SQL Server, is the information that is collected about how data is distributed in the tables and indexes of a database. The Query Optimizer then uses these statistics to determine what it thinks is the best-performing process for executing a query. There is a database option, AUTO_UPDATE_STATISTICS that is recommended to always be set to ON (TRUE). In order to enable this setting a simple ALTER DATABASE command is executed against the database in question:

ALTER DATABASE Northwind SET AUTO_UPDATE_STATISTICS ON

It is then left up to the database engine on when to update the statistics for any and all tables and indexes for the database. Do not confuse "automatic" for "perfect" however. There will be times when you may observe that database activity patterns may circumvent the updating of statistics when appropriate. Automatic statistics updating occurs when a threshold is reached in the actual data of the index or underlying table. The calculation for when statistics are updated automatically is as follows:

- When data is initially added to an empty table

- The table had > 500 records when statistics were last collected and the lead column of the statistics object has now increased by 500 records since that collection date

- The table had < 500 records when statistics were last collected and the lead column of the statistics object has now increased by 500 records + 20% of the row count from the previous statistics collection date

Using these three criteria as a guide for statistics updating will foster timely updates in situations where data is inserted into moderate or smaller tables on a frequent basis, particularly for fields that are the leading column for a given statistic. However, you may experience statistics falling out-of-date when updating records (row count criteria not affected) or when inserting records into large tables. For example, if you have a table in your database that had 1,000,000 rows the last time the statistics were updated statistics would not be automatically updated again until the row count of the table increased by 500 + (1,000,000 * .20) or 200,500 records. By then, assuredly, your statistics would be out-of-date. Statistics can be manually updated, even when the AUTO_UPDATE_STATISTICS database option is ON. For example, statistics will be updated when rebuilding an index to resolve fragmentation by default. Statistics can also be forced to update when reorganizing a fragmented index as well.

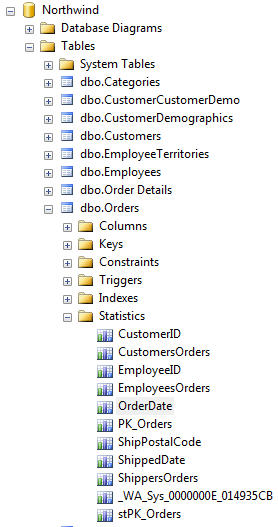

Now that we've briefly reviewed what statistics are and how they are automatically updated we can proceed to take a look at the two methods available for determining the last time the statistics on a given index were last updated. The first method consists of using the SQL Server Management Studio's GUI to look at the properties of a selected statistic. In order to utilize this method you need to expand the Databases node in the Object Explorer. Then expand the specific database and table you wish to review. You'll notice another node under the table called "Statistics". This node contains a record for each statistic associated with the selected table.

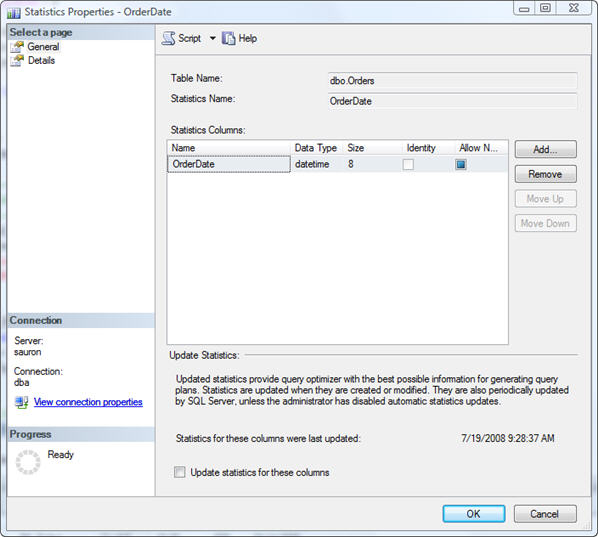

For the purpose of this example I'm going to right-click on the OrderDate statistic and select "Properties"

You'll then see that the information pertaining to the last time the statistics were updated are displayed towards the bottom of this dialog box. The downside with this approach is the one-by-one nature of collecting data. This is a tedious process, and one that does not provide a simple method of compiling data for review with all other statistics on a table. You would need to manually enter this information, a row at a time, into a spreadsheet or email document.

The other method available is the use of the STATS_DATE() system function. This function accepts two arguments: table_id, which is the object_id of a table in the current database, and index_id, which is the index_id of an index on the table provided as the table_id parameter:

STATS_DATE ( table_id , index_id )

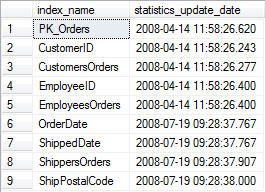

The STATS_DATE function returns a datetime value which is the last time the statistics for that specific index were updated. When I run this function against the Northwinds.dbo.Orders table the syntax and results would look something like this:

USE Northwind;

GO

SELECT name AS index_name,

STATS_DATE(OBJECT_ID, index_id) AS statistics_update_date

FROM sys.indexes

WHERE OBJECT_ID = OBJECT_ID('dbo.Orders');

GO

So, in the first case we can only look at index metadata one index at-a-time, in the second example, we are able to collect more-limited information about all indexes on a given table. Now it's time to look at the best of both situations: returning a wide scope of metadata against all indexes on a table and doing so in such a manner that it can be supplied to other interested parties. To do so I'll utilize a combination of the STATS_DATE() function we just discussed along with a query against one of the most important Dynamic Management Functions associated with SQL - probably the most important DMF related to indexes. The sys.dm_db_index_physical_stats DMF provides an abundance of information about the physical state of your indexes. I will not get into the details of sys.dm_db_index_physical_stats in this tip.

Let's look at the code:

CREATE PROCEDURE [dbo].[usp_Detail_Index_Stats] @table_name sysname

AS

----------------------------------------------------------------------------------

-- ******VARIABLE DECLARATIONS******

----------------------------------------------------------------------------------

DECLARE @IndexTable TABLE

(

[Database] sysname, [Table] sysname, [Index Name] sysname NULL, index_id smallint,

[object_id] INT, [Index Type] VARCHAR(20), [Alloc Unit Type] VARCHAR(20),

[Avg Frag %] decimal(5,2), [Row Ct] bigint, [Stats Update Dt] datetime

)

DECLARE @dbid smallint --Database id for current database

DECLARE @objectid INT --Object id for table being analyzed

DECLARE @indexid INT --Index id for the target index for the STATS_DATE() function

----------------------------------------------------------------------------------

-- ******VARIABLE ASSIGNMENTS******

----------------------------------------------------------------------------------

SELECT @dbid = DB_ID(DB_NAME())

SELECT @objectid = OBJECT_ID(@table_name)

IF @objectid IS NULL

BEGIN

PRINT 'Table not found'

RETURN

END

----------------------------------------------------------------------------------

-- ******Load @IndexTable with Index Metadata******

----------------------------------------------------------------------------------

INSERT INTO @IndexTable

(

[Database], [Table], [Index Name], index_id, [object_id],

[Index Type], [Alloc Unit Type], [Avg Frag %], [Row Ct]

)

SELECT

DB_NAME() AS "Database",

@table_name AS "Table",

SI.NAME AS "Index Name",

IPS.index_id, IPS.OBJECT_ID, --These fields included for joins only

IPS.index_type_desc, --Heap, Non-clustered, or Clustered

IPS.alloc_unit_type_desc, --In-row data or BLOB data

CAST(IPS.avg_fragmentation_in_percent AS decimal(5,2)),

IPS.record_count

FROM sys.dm_db_index_physical_stats (@dbid, @objectid, NULL, NULL, 'sampled') IPS

LEFT JOIN sys.sysindexes SI ON IPS.OBJECT_ID = SI.id AND IPS.index_id = SI.indid

WHERE IPS.index_id <> 0

----------------------------------------------------------------------------------

-- ******ADD STATISTICS INFORMATION******

----------------------------------------------------------------------------------

DECLARE curIndex_ID CURSOR FOR

SELECT I.index_id

FROM @IndexTable I

ORDER BY I.index_id

OPEN curIndex_ID

FETCH NEXT FROM curIndex_ID INTO @indexid

WHILE @@FETCH_STATUS = 0

BEGIN

UPDATE @IndexTable

SET [Stats Update Dt] = STATS_DATE(@objectid, @indexid)

WHERE [object_id] = @objectid AND [index_id] = @indexid

FETCH NEXT FROM curIndex_ID INTO @indexid

END

CLOSE curIndex_ID

DEALLOCATE curIndex_ID

----------------------------------------------------------------------------------

-- ******RETURN RESULTS******

----------------------------------------------------------------------------------

SELECT I.[Database], I.[Table], I.[Index Name], I.[Index Type],

I.[Avg Frag %], I.[Row Ct],

CONVERT(VARCHAR, I.[Stats Update Dt], 110) AS "Stats Dt"

FROM @IndexTable I

ORDER BY I.[Index Type], I.[index_id]

The stored procedure accepts a table name for the only parameter. You must run the stored procedure directly in the database being analyzed. You can not create and run the stored procedure in master since the nature of the underlying system tables require you use the current database for information pertaining to database objects and statistics. The stored procedure requires the creation of a table variable to store the results, and three local variables to reconcile the id values of the current database, table object, and each index. After variable declaration and assignment, the results of a call against sys.dm_db_index_physical_stats for the current database and table (for the purpose of returning index metadata) is joined to the sys.sysindexes system table (to allow reconciling an index id value to the name of the index). A cursor is then created to loop through each index on the table in order to update the @IndexTable table variable with the results of the STATS_DATE() function for the particular index. Finally the results are formatted for presentation and returned via the final SELECT statement. You may note that I collected fields from the sys.dm_db_index_physical_stats DMF that I did not return to the end user. Some may call that unnecessary overhead, but I've included these key fields (index_id and object_id) to allow this stored procedure to be integrated with other metadata stored procedures for my DBA arsenal of tools. The [Alloc Unit Type] field was omitted only for the sake of presentation on this web page.

The syntax for calling the stored procedure is simple: EXEC dbo.usp_Detail_Index_Stats 'tablename'

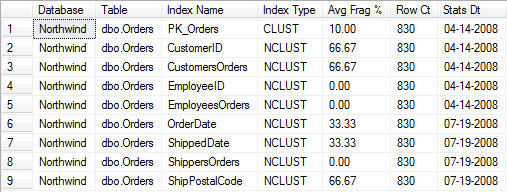

Executing this stored procedure against my instance of the Orders table in the Northwind database shows that some of my indexes have not been updated in quite some time; probably due to low row activity and lack of fragmentation.

EXEC dbo.usp_Detail_Index_Stats 'Orders'

The end results of this stored procedure provide you with a very simple, yet insightful view of some key metadata items for this table's indexes which are just a few that are available to the DBA via the powerful sys.dm_db_index_physical_stats Dynamic Management Function along with the date that the statistics were last updated.

Next Steps

- Review related tips about database statistics and sys.dm_db_index_physical_stats on MSSQLTips.com

- Create this stored procedure in your test environment and review the state of your statistics. You may be surprised if you rely solely on SQL Server to automatically manage your statistics update process.

- Stay tuned for more tips on statistics and mining information from the Dynamic Management Views and Dynamic Management Functions.

About the author

Tim Ford is a Senior Database Administrator with MindBody.

Tim Ford is a Senior Database Administrator with MindBody.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips