By: Atif Shehzad | Comments (7) | Related: More > DBA Best Practices

Problem

ALTER table operations may be required to meet changing logical or operational demands. Once a table is populated with data, then any change to the table structure requires additional concerns in terms of data protection, smooth operation of the system and size of the table. ALTER table operations may be carried out both through T-SQL or SSMS designers. Most of the ALTER TABLE operations if performed using SSMS causes a drop and recreation of the table. This may lead to performance degradation or dead locks. It is therefore recommended to carry on ALTER table tasks through T-SQL except some rare operations that require table recreation even through T-SQL.

In coming lines we will go through performing ALTER table operations through T-SQL and also we will go through those tasks that are not directly supported through T-SQL. These involve table drop and recreation even when using T-SQL, so it is more convenient to carry out these using the SSMS table designer.

Solution

ALTER table operations may have very far reaching effect on your system. So as part of best practices always take time to examine the object dependencies and also consider the data which may be affected by ALTER table operations. The following is based on SQL 2005 and 2008. Older versions of SQL Server may handle things a little differently.

There are few operations that when implemented through SSMS, would cause the table to be dropped and recreated. The same operations through T-SQL would not drop and re-create the table. To avoid the overhead of the table drop and recreation, such operations should be performed through T-SQL. These include:

- Changing ALLOW NULL setting of existing column

- RESEEDING IDENTITY PROPERTY for a column

- Changing data type of any column

These changes can be made using T-SQL or SMSS without causing a recreation of the table.

- Add a new column to the end of an existing table

- Modify the name of existing column

Still there are some operations that involve table drop and recreation both through SSMS or T-SQL. So in these cases using SSMS designer would become more appropriate. Following are those scenarios

- Insert new column other than at the end of the table or changing order of existing columns

- Alter Increment parameter for IDENTITY value

- Removing IDENTITY PROPERTY for a column

To test these changes let us first create a table in AdventureWorks.

--Script #1: Create table for ALTER table operations USE [AdventureWorks] GO CREATE TABLE [Employees] (EmpID SMALLINT IDENTITY (1,1) PRIMARY KEY NOT NULL, Name VARCHAR(50) NULL, Department VARCHAR(50) NULL) GO

Now let us go through carrying on these operations through T-SQL. At this stage you may note the creation time of the above table through following script.

--Script #2: Note creation time for table USE [AdventureWorks] GO SELECT name AS TableName, create_date AS CreationTime FROM sys.objects WHERE name = 'Employees' GO

You may verify the fact later that whenever we would implement the above mentioned changes through T-SQL the creation time of the object would not change. It means that object has not been dropped and recreated. While if same changes were implemented through SSMS designer then creation time would change to time of operation through designer.

In coming sections we will go through the use of T-SQL for the above mentioned tasks. To verify any change you may use system stored procedure sp_help when required.

Add new column in existing table

At any stage we may be required to add a new column in an existing table. This task may be a performance threat if done through the SSMS designer if the column is not added at the end of the table. Inefficiency depends upon amount of data in table. To fulfill the requirement through T-SQL the following ALTER TABLE statement may be used.

--Script #3: ALTER table to add new column USE [AdventureWorks] GO ALTER TABLE [Employees] ADD LName VARCHAR(50) NULL GO

Modify name of existing column

To make compatible with new changes, names of existing columns may need to be changed. In our case, it seems suitable to modify the column <Name> to <FName>.

We would use T-SQL as follows to rename a column.

--Script #4: Rename column USE [AdventureWorks] GO EXEC sp_rename 'Employees.Name', 'FName','COLUMN' GO

Column has been renamed to match the addition of LName column.

This could also be done using SSMS without the impact of having to recreate the table.

Changing ALLOW NULL setting of any column

It is a good practice to explicitly allow or prohibit NULL values. In our case we would prohibit NULL for FName. The following ALTER table statement would be used for that.

--Script #5: Prohibit NULL values USE [AdventureWorks] GO ALTER TABLE [Employees] ALTER COLUMN FName VARCHAR(50) NOT NULL GO

Re-Seeding IDENTITY PROPERTY for a column

SEED of an identity column is the starting point for identity values in a table. In some scenarios it may be required to interrupt the automated sequence by allotting a new SEED value. To avoid the overhead of table drop and recreation, we would reseed the EmpID to 100 through T-SQL.

--Script #6: RESEED identity value USE [AdventureWorks] GO DBCC CHECKIDENT (Employees, RESEED, 100) GO

Instead of the normal sequential flow, as a result of the above script, identity values for new records would start from 100 now. While re-seeding identity values make sure that there is no chance of identity value duplication. For example if you have 150 records in the table with identity values 1 to 150. And you re-seeding identity value to 100 as in above script. In this scenario your very first insert statement would generate a violation of the PRIMARY KEY constraint and the statement would be terminated. The reason is that identity value 101 is present in the table and your statement again tried to insert the same value.

Changing data type of any column

Before changing the data type of a column make sure that data would not be compromised while converting to new data type. This task may be performed by using the following T-SQL command. In our case we would change data type VARCHAR(50) to NVARCHAR(50).

--Script #7: Change data type of a column USE [AdventureWorks] GO ALTER TABLE [Employees] ALTER COLUMN Department NVARCHAR(50) NOT NULL GO

Insert new column at other than default position (at end of columns) or changing order of existing columns

T-SQL would not provide you any extension related to the order of the columns. Through T-SQL you can not create new columns at a position other than at end of previous columns. Similarly you can not change the position of any previously created columns. You would have to use SSMS designer for this purpose or create a new table with the new column and migrate the data from the old table to the table. In either approach table recreation would need to take place.

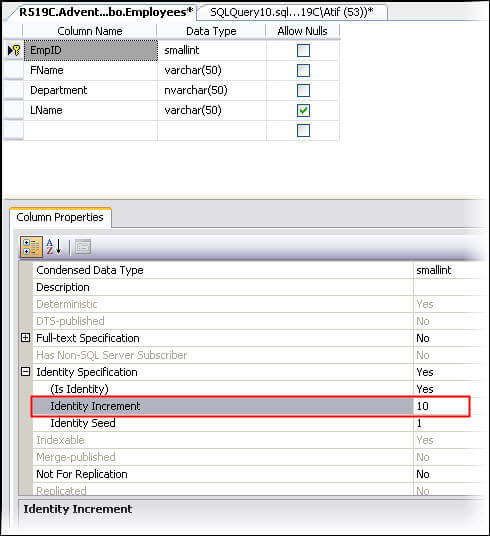

Alter Increment parameter for IDENTITY value

Again this facility is not accessible through T-SQL directly. You would have to use SSMS designer for altering incremental value of IDENTITY column.

- Right click on table in SSMS and go to Design.

- Go to IDENTITY specification in designer and give required value for identity increment

Now each new IDENTITY value would have difference of 10 from the last value.

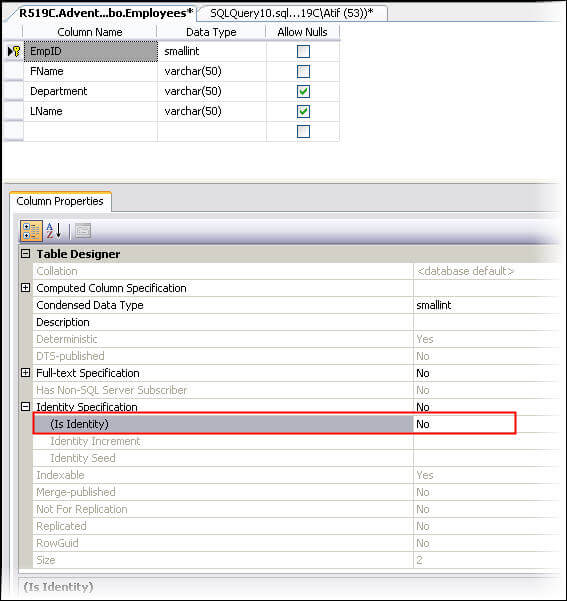

Removing IDENTITY PROPERTY for a column

Removing IDENTITY property is not directly supported through T-SQL. You have to make use of SSMS designer. Go to table design and set (Is Identity) value to No. Upon saving the changes the table would be dropped and recreated.

Now table is without an identity column. Do not forget to make appropriate changes in your INSERT code for this table.

You may find other cases where table drop and recreation needs to take place in SSMS designer. If you are using SSMS 2008 then configure it to not make changes that require table drop and re-creation. So that such changes through designer may only be made after proper analysis.

Next Steps

- Also modify the dependent objects and code to work with the changed table.

- For making changes through SSMS designer, analyze the size of the table and its impact.

- Click here to read about sp_rename system stored procedure

- Click here to read about sp_help system stored procedure

About the author

Atif Shehzad is a passionate SQL Server DBA, technical reviewer and article author.

Atif Shehzad is a passionate SQL Server DBA, technical reviewer and article author.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips