By: Daniel Farina | Comments (8) | Related: > In Memory OLTP

Problem

Achieving mission critical performance is key for most businesses, but sometimes due to storage costs you just can't afford it. Traditional databases were designed assuming that memory resources were expensive and were optimized for disk I/O. Nowadays memory is much cheaper and a working set can be stored entirely in memory. In this tip we look at a new solution in SQL Server 2014 to create memory optimized tables.

Solution

The upcoming version of SQL Server 2014 will provide us a new feature called In-Memory OLTP (codenamed Hekaton). In this tip I will tell you about Memory-Optimized Tables.

Memory-Optimized Tables allow us to store an entire table in the system memory. At this point you may say "Wait! This is not new, DBCC PINTABLE was amongst us for a long time", but this didn't work the same way.

Initially, DBCC PINTABLE does not store the entire table in memory it only stores the read pages. Also, this feature was discontinued since SQL Server 2005 as stated in this Transact-SQL reference.

What is Hekaton?

Basically Hekaton is a new database engine, integrated into SQL Server, optimized for memory resident data and OLTP workloads. With Hekaton, the entire table is stored in memory and stored procedures that only references memory optimized tables can be compiled into machine code with no user interaction just by adding the "WITH NATIVE_COMPILATION" clause in the stored procedure creation. It adjusts completely to the ACID (Atomicity, Consistency, Isolation and Durability) properties.

Hekaton has the following features:

- Algorithms that are optimized for accessing memory-resident data.

- Optimistic concurrency control that eliminates logical locks via automatic SNAPSHOT ISOLATION with multi-versioning.

- Lock free and latch free structures to access all data.

Now you may be asking yourself the following question: Do I get the same performance as with a table in a filegroup on a SSD RAID or even a RAM Drive? The answer is NO. Even if the entire database could be stored in system memory, performance won't be the same. Keep in mind that SQL Server code is optimized for disk storage, and this requires a complex buffer pool where a page must be protected by latching before it can be accessed. In a disk based table, rows are addressed using PAGE ID and ROW OFFSET. By contrast, on Hekaton rows are addressed by a type of B-Tree called Bw-Tree (http://research.microsoft.com/pubs/170384/bw-tree.pdf).

Creating a Memory Optimized File Group for SQL Server

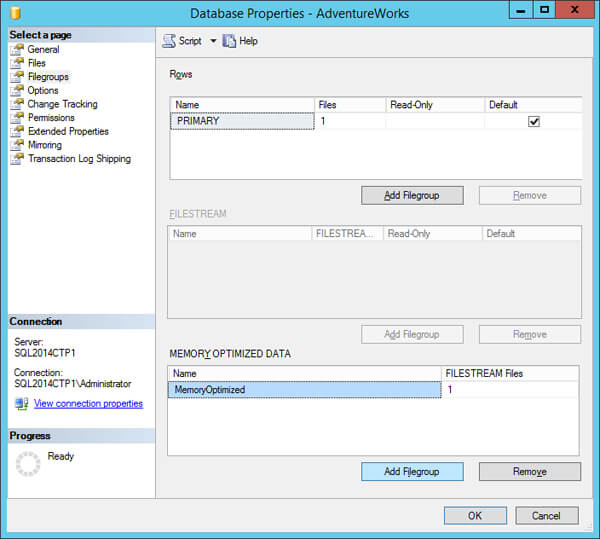

First, we must create a MEMORY_OPTIMIZED_DATA filegroup. which is much like a FILESTREAM filegroup. I am using a copy of AdventureWorks for this tip. Keep in mind that only one MEMORY_OPTIMIZED_DATA filegroup is allowed per database. You can create this filegroup with the GUI as follows.

Step 1

Step 2

Also you can use the following SQL code.

USE [master] GO ALTER DATABASE [AdventureWorks] ADD FILEGROUP [MemoryOptimized] CONTAINS MEMORY_OPTIMIZED_DATA GO ALTER DATABASE [AdventureWorks] ADD FILE ( NAME = N'Hekaton', FILENAME = N'C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA\Hekaton' ) TO FILEGROUP [MemoryOptimized] GO

Memory Optimized Filegroup Properties

A memory optimized filegroup contains one or more containers and each container has one or more files. There are three types of files.

- Root File

-

A root file contains metadata including the data and delta file pairs for a complete checkpoint taken for memory-optimized tables.

- Data File

-

A data file contains data rows that were inserted into memory-optimized tables. The rows are appended to the data file in the transaction log order making data access sequential. This increases IO throughput compared to random IO. Data files have a maximum size of 128MB. Beyond this point a new data file is created. Also, a new data file is created when a manual checkpoint is performed.

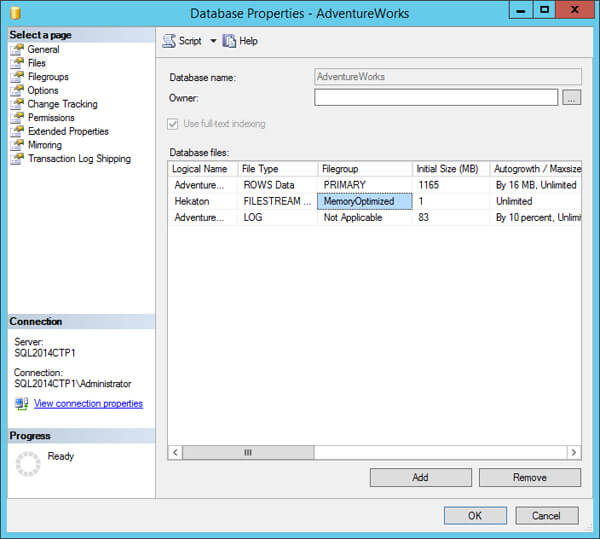

The rows are stored with a header that contains the row timestamp to implement versioning and N index pointers for row chaining, where N is the number of indexes defined on the table. See the image below.

The indexes are not stored in the data files; instead they are recreated at startup.

- Delta File

-

A delta file contains data rows that were deleted. For each deleted row, it inserts minimal information, the ID of deleted rows and the insert / delete transaction ID. The Delta files are stored in transactional log order, so the file can be accessed sequentially.

A note of interest is the way Hekaton handles updates. As I mentioned before, in order to avoid latches and locks, Hekaton handles multiple versions of the data with a timestamp. So, to achieve this, updates are managed as a DELETE followed by an INSERT. This implies that the engine does not need to seek the data file to update the information. Instead, it appends the updated data at the end of the data file.

Storage considerations for Memory Optimized Tables

As data and checkpoint files use streaming IO for data persistence, the limiting factor for this files is the sequential IO speed instead of latency or random access speed. To optimize data storage for database startup times, you can add multiple containers to the memory-optimized data filegroup, each on a different disk. This way SQL Server will read all of the containers in parallel.

When rows are deleted, data files still contain the inactive rows. In order to consolidate data and delta file pairs, Hekaton runs a merge process in the background.

All this processing will degrade file system performance due to fragmentation.

Memory-Optimized tables write to the SQL Server transaction log just like disk based tables. The only difference is that there are no log records for physical structure modifications, indexing or UNDO information. So, if your system has high transaction rates, you may consider using a SSD disk or a PCI-E card for the transaction log.

Table creation for a Memory Optimized Table

The CREATE TABLE statement for Memory-Optimized tables is the same as the statement for standard tables with the addition of the MEMORY_OPTIMIZED = ON table option. Also, you can specify if the data will persist after a server restart by setting the DURABILITY table option to SCHEMA_AND_DATA, the default option, or to SCHEMA_ONLY.

The SCHEMA_AND_DATA options guarantees durability of schema and data, like a disk based table, but does not store index data, as I previously explained, indexes are built on server startup. By contrast, the SCHEMA_ONLY option only stores schema definition of the table and indexes, much like a tempDB table.

You may be asking what is the difference between a tempDB table and a Memory-Optimized Table with SCHEMA_ONLY duration. First, a tempDB table does not keep its schema definition upon server restart, and second and most important, there is the fact that a tempDB table is subject to checkpoints and also generates log entries of table and index operations into the log files. On the other hand, a Memory-Optimized Table with SCHEMA_ONLY duration is unaffected by the checkpoint process and does not store any log information.

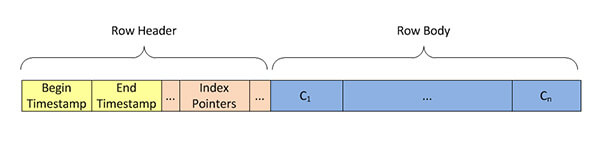

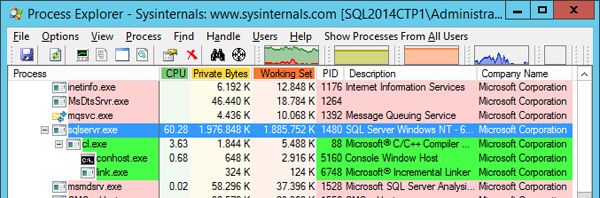

DLL Generation

Every time you create a Memory-Optimized table or a natively compile a stored procedure, which I will explain in an upcoming tip, SQL Server launches a child process that compiles the table structure or the stored procedure into a Windows DLL as shown on the image below.

The compiled DLL's are stored in "..\MSSQL\DATA\xtp\<DB_ID>\", where <DB_ID> is the ID of the database in which the object belongs and the name of the DLL has the following format: "xtp_<t|p>_<DB_ID>_<OBJECT_ID>.dll".

| Tag | Description |

|---|---|

<t|p> |

t = Table; p = Stored Procedure |

|

<DB_ID> |

The ID of the database in which the object belongs to |

|

<OBJECT_ID> |

The ID of the object from sys.objects |

Limitations for Memory Optimized Tables

To date, with SQL Server 2014 CTP1, there are some limitations for Memory-Optimized tables which I will list below.

- ALTER TABLE, sp_rename, alter bucket_count, and adding and removing an index outside of the CREATE TABLE statement is not supported for memory-optimized tables.

- The IDENTITY (for table types only) and PRIMARY KEY constraints are supported.

- UNIQUE, CHECK, and FOREIGN KEY constraints are not supported.

- CREATE INDEX, ALTER INDEX and DROP INDEX statements are not supported for HASH indexes.

- Memory-optimized tables do not support range indexes (in CTP-1), DDL triggers, DML triggers, or constraints (except for primary key).

For more information check MSDN - SQL Server Support for In-Memory OLTP.

Example of a Memory Optimized Table for SQL Server

For this example, I have used the AdventureWorks database and specifically the Sales.CurrencyRate table. This table, as the name suggests, stores currency rates for different currencies. It contains the Average exchange rate for the day and the day's final exchange rate. So, in a production environment, this table would be subject to several queries. The exchange rate for a currency can vary several times a day, so this table would cause a lot of updates. Furthermore, for every sale, this table will be accessed to check the currency rates to determine the sale amount. Consequently, this table is a good candidate for memory optimization.

What I am going to do in this tip is to split the Sales.CurrencyRate table into two. One called Sales.HistoricCurrencyRate to store historical data, and other called Sales.DailyCurrencyRate to keep in memory currency rates of the actual day and then create a view to merge the two tables.

1. Add Memory-Optimized FILEGROUP to AdventureWorks

USE [master] GO ALTER DATABASE [AdventureWorks] ADD FILEGROUP [MemoryOptimized] CONTAINS MEMORY_OPTIMIZED_DATA GO ALTER DATABASE [AdventureWorks] ADD FILE ( NAME = N'Hekaton', FILENAME = N'C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA\Hekaton' ) TO FILEGROUP [MemoryOptimized] GO

2. CREATE TABLE Sales.DailyCurrencyRate

Here is the Sales.DailyCurrencyRate creation script.

USE AdventureWorks GO SET QUOTED_IDENTIFIER ON GO CREATE TABLE Sales.DailyCurrencyRate( CurrencyRateID int NOT NULL, CurrencyRateDate datetime NOT NULL, FromCurrencyCode nchar(3) COLLATE Latin1_General_100_BIN2 NOT NULL, ToCurrencyCode nchar(3) COLLATE Latin1_General_100_BIN2 NOT NULL, AverageRate money NOT NULL, EndOfDayRate money NOT NULL, ModifiedDate datetime NOT NULL, CONSTRAINT PK_DailyCurrencyRate_CurrencyRateID PRIMARY KEY NONCLUSTERED HASH (CurrencyRateID) WITH (BUCKET_COUNT = 1024), INDEX AK_DailyCurrencyRate_CurrencyRateDate_FromCurrencyCode_ToCurrencyCode HASH (CurrencyRateDate, FromCurrencyCode, ToCurrencyCode) WITH (BUCKET_COUNT = 2048) ) WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA) GO

In this script you may notice the introduction of HASH INDEXES which are supported only on memory optimized tables. In an upcoming tip I will explain this topic and give you some samples, but for now you can read the available documentation on the TechNet library about the Guidelines for Using Indexes on Memory-Optimized Tables.

Also you will see that this table has no IDENTITY column. That is a limitation of Memory-Optimized tables, but we can solve this with the creation of a SEQUENCE as you will see further down.

Note the COLLATE Latin1_General_100_BIN2 clause. Hekaton tables only allow BIN2 collation on all character columns that participate in indexes. Also, natively compiled stored procedures only support BIN2 collations for comparisons, sorting and aggregate functions.

3. CREATE TABLE HistoricCurrencyRate

Now we create the Sales.HistoricCurrencyRate table with the same definition of Sales.CurrencyRate table but without the identity column. That is because new records will be inserted into Sales.DailyCurrencyRate table.

USE AdventureWorks;

GO

SET QUOTED_IDENTIFIER ON;

GO

CREATE TABLE Sales.HistoricCurrencyRate(

CurrencyRateID INT NOT NULL,

CurrencyRateDate DATETIME NOT NULL,

FromCurrencyCode NCHAR(3) NOT NULL,

ToCurrencyCode NCHAR(3) NOT NULL,

AverageRate MONEY NOT NULL,

EndOfDayRate MONEY NOT NULL,

ModifiedDate DATETIME NOT NULL DEFAULT (getdate()),

CONSTRAINT FK_HistoricCurrencyRate_Currency_FromCurrencyCode FOREIGN KEY

(FromCurrencyCode)

REFERENCES Sales.Currency(CurrencyCode),

CONSTRAINT FK_HistoricCurrencyRate_Currency_ToCurrencyCode FOREIGN KEY

(ToCurrencyCode)

REFERENCES Sales.Currency(CurrencyCode),

CONSTRAINT PK_HistoricCurrencyRate_CurrencyRateID PRIMARY KEY

CLUSTERED

(CurrencyRateID ASC)

WITH ( PAD_INDEX = OFF , FILLFACTOR = 100 , IGNORE_DUP_KEY = OFF , STATISTICS_NORECOMPUTE = OFF , ALLOW_ROW_LOCKS = ON , ALLOW_PAGE_LOCKS = ON , DATA_COMPRESSION = NONE )

ON [PRIMARY])

CREATE UNIQUE NONCLUSTERED INDEX AK_HistoricCurrencyRate_CurrencyRateDate_FromCurrencyCode_ToCurrencyCode

ON Sales.HistoricCurrencyRate(

CurrencyRateDate,

FromCurrencyCode,

ToCurrencyCode)

WITH ( PAD_INDEX = OFF , FILLFACTOR = 100 , IGNORE_DUP_KEY = OFF , STATISTICS_NORECOMPUTE = OFF , ALLOW_ROW_LOCKS = ON , ALLOW_PAGE_LOCKS = ON , DATA_COMPRESSION = NONE ) ON [PRIMARY];

GO

4. INSERT INTO Sales.HistoricCurrencyRate

At this time, we are ready to move the data of Sales.CurrencyRate into Sales.HistoricCurrencyRate.

USE AdventureWorks GO INSERT INTO Sales.HistoricCurrencyRate SELECT CurrencyRateID, CurrencyRateDate, FromCurrencyCode, ToCurrencyCode, AverageRate, EndOfDayRate, ModifiedDate from Sales.CurrencyRate

5. DROP TABLE CurrencyRate

In order to drop the Sales.CurrencyRate table we must first drop the constraint of the Sales.SalesOrderHeader table.

USE AdventureWorks GO ALTER TABLE Sales.SalesOrderHeader DROP CONSTRAINT FK_SalesOrderHeader_CurrencyRate_CurrencyRateID DROP TABLE Sales.CurrencyRate

6. CREATE VIEW Sales.CurrencyRate

After that, we can create the Sales.CurrencyRate view.

USE AdventureWorks GO IF object_id(N'Sales.CurrencyRate', 'V') IS NOT NULL DROP VIEW Sales.CurrencyRate GO CREATE VIEW Sales.CurrencyRate AS SELECT D.CurrencyRateID, D.CurrencyRateDate, D.FromCurrencyCode COLLATE SQL_Latin1_General_CP1_CI_AS AS FromCurrencyCode, D.ToCurrencyCode COLLATE SQL_Latin1_General_CP1_CI_AS AS ToCurrencyCode, D.AverageRate, D.EndOfDayRate, D.ModifiedDate FROM Sales.DailyCurrencyRate D WITH (SNAPSHOT) UNION ALL SELECT H.CurrencyRateID, H.CurrencyRateDate, H.FromCurrencyCode, H.ToCurrencyCode, H.AverageRate, H.EndOfDayRate, H.ModifiedDate FROM Sales.HistoricCurrencyRate H GO

Notice the WITH (SNAPSHOT) hint. This is because accessing memory optimized tables using the READ COMMITTED isolation level is supported only for autocommit transactions. So we must provide a supported isolation level for the memory optimized table using a table hint.

7. CREATE SEQUENCE Sales.DailyCurrencyRate_CurrencyRateID_Seq

To solve Hekaton's lack of support for IDENTITY columns, we must create a SEQUENCE as follows.

USE [AdventureWorks]

GO

DECLARE @StartValue INT

DECLARE @SqlStmt VARCHAR(255)

SELECT @StartValue = MAX (CurrencyRateID) +1 FROM

sales.HistoricCurrencyRate

SET @SqlStmt = N'CREATE SEQUENCE Sales.DailyCurrencyRate_CurrencyRateID_Seq

AS [int]

START WITH ' +CONVERT (NVARCHAR(50), @StartValue )

+ 'INCREMENT BY 1'

EXEC (@SqlStmt)

GO

8. CREATE TRIGGER Sales.TR_CurrencyRate_Insert

After that, we create an INSTEAD OF TRIGGER to handle the inserts.

USE [AdventureWorks]

GO

SET QUOTED_IDENTIFIER ON

GO

CREATE TRIGGER Sales.TR_CurrencyRate_Insert

ON Sales.CurrencyRate

INSTEAD OF INSERT

AS

BEGIN

SET NOCOUNT ON;

INSERT INTO sales.DailyCurrencyRate

( CurrencyRateID ,

CurrencyRateDate ,

FromCurrencyCode ,

ToCurrencyCode ,

AverageRate ,

EndOfDayRate ,

ModifiedDate

)

SELECT NEXT VALUE FOR Sales.DailyCurrencyRate_CurrencyRateID_Seq,

CurrencyRateDate ,

FromCurrencyCode ,

ToCurrencyCode ,

AverageRate ,

EndOfDayRate ,

ModifiedDate

FROM INSERTED

END

GO

9. Insert test data

I have made a simple script to insert data into Sales.CurrencyRate with a CTE. You can create your own if you like.

USE AdventureWorks

GO

DECLARE @start DATETIME

DECLARE @end DATETIME

SET @start = '20130903'

SET @end = '20130904'

; WITH CTE AS (

SELECT @start AS I

UNION ALL

SELECT I +1 FROM CTE

WHERE I +1 <= @end

)

INSERT INTO Sales.CurrencyRate (CurrencyRateDate, FromCurrencyCode, ToCurrencyCode,

AverageRate, EndOfDayRate, ModifiedDate)

SELECT

I AS CurrencyRateDate,

'USD' AS FromCurrencyCode,

C.CurrencyCode AS ToCurrencyCode,

RAND()*SQUARE (CONVERT(TINYINT,HASHBYTES('SHA1', C.CurrencyCode))) AS AverageRate,

RAND()*SQUARE (CONVERT(TINYINT,HASHBYTES('MD5', C.CurrencyCode))) AS EndOfDayRate,

I AS ModifiedDate

FROM CTE

CROSS APPLY Sales.Currency C

ORDER BY I, CurrencyCode

OPTION (MAXRECURSION 4000);

At this point the Memory Optimized Table is setup and ready to be used for your database transactions.

Next Steps

Stay tuned for additional tips about SQL Server 2014. In the meantime, take some time to review these tips:

- Read more about SQL Server 2012 Sequence Numbers.

- Read more about FILESTREAM http://www.mssqltips.com/sql-server-tip-category/92/filestream/.

- Read my previous tip about Physical fragmentation to see how to use Sysinternals Contig tool to consolidate hard disk free space http://www.mssqltips.com/sqlservertip/3008/solving-sql-server-database-physical-file-fragmentation/.

- Learn about transaction isolation levels: Demonstrations of Transaction Isolation Levels in SQL Server.

- Review this tip about SNAPSHOT ISOLATION: Snapshot Isolation in SQL Server 2005

- Check out constraints tip category.

- Execute SQL Server SELECT command without locking

- Read this tip to understand SQL Server Indexing: Understanding SQL Server Indexing .

- Take a look at these other tips on indexing.

- Review INSTEAD OF triggers usage with this tip: Using INSTEAD OF triggers in SQL Server for DML operations.

- Learn how to generate test data with Visual Studio 2010: http://www.mssqltips.com/sqlservertip/2190/generating-sql-server-test-data-with-visual-studio-2010/.

- See how you can create your own test data: Populating a SQL Server Test Database with Random Data.

- If you want to create your own test data, probably you would need this: SQL Server Function to return a range of dates.

- Review recursive CTE's here: Recursive Queries using Common Table Expressions (CTE) in SQL Server

- Enrich your knowledge about views.

- If you don't understand the "Sales." In the object names, please review SQL Server Four part naming.

- If you are looking for more information about CROSS APPLY, This is the right tip: SQL Server CROSS APPLY and OUTER APPLY.

- See this tip about how to use different collations: Case Sensitive Search on a Case Insensitive SQL Server.

- Also, this tip will give you more information about table collation: Create SQL Server temporary tables with the correct collation.

- If you are curious about DBCC PINTABLE read this: http://www.mssqltips.com/sqlservertip/1317/keeping-data-available-in-the-sql-server-data-cache-with-pintable/

About the author

Daniel Farina was born in Buenos Aires, Argentina. Self-educated, since childhood he showed a passion for learning.

Daniel Farina was born in Buenos Aires, Argentina. Self-educated, since childhood he showed a passion for learning.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips