By: Derek Colley

Problem

Recently, I noticed this intriguing message in one of my SQL Server 2008 R2 error logs:

Warning: Failure to calculate super-latch promotion threshold.

This was new to me. I was aware of the notion and some of the mechanics of locking and latches, particularly with reference to diagnosing blocks and being aware of the different behaviors, i.e. the difference between a shared and an exclusive lock. However, as I began researching this error message I was drawn further and further into the detail of latching and locking, and started learning about their inner workings. I eventually found an explanation for this error message that, while not completely satisfactory, did lead me to diagnose wider-reaching I/O issues on the affected instance and led me to a better understanding of latching in general.

Solution

This article is a whistle-stop tour of my exploration of latches; their different types, their purposes, why they are required and where they fit into the SQL database engine, in the hope it will be interesting and useful to you. I will not cover locks so much except in reference and comparison, since there are already some excellent articles available - see Tim Ford (http://www.mssqltips.com/sqlservertip/1968/understanding-sql-server-locking/), Greg Robidoux (http://www.mssqltips.com/sqlservertip/1036/finding-and-troubleshooting-sql-server-deadlocks/) and on Technet (http://technet.microsoft.com/en-us/library/aa213039(v=sql.80).aspx) that give a more comprehensive level of detail than this article can cover.

What is a latch?

Latches are often confused with locks, as their purposes are similar but not the same. A latch can be defined as an object that ensures data integrity on other objects in SQL Server memory, particularly pages. Latches are a logical construct that ensures controlled access to a resource, and isolation when required, for pages in use. In contrast to locks, latches are an internal SQL Server mechanism that isn't exposed outside the SQLOS. They come in many different types but can be split into roughly two classes: buffer latches and non-buffer latches.

The Buffer Cache

To understand buffer latches, one must first understand the purpose of the buffer cache. The buffer cache (also known as the buffer pool) is an area of memory used for holding data pages. The buffer cache contains many buffers, each of which is 8192 bytes (8KB) in size. This is the area into which a data page is read from disk and, if the page is modified, from which the changes are written back to disk. Pages age out of the cache when they have not been referenced recently (a least-recently-used policy rather than strict first-in-first-out), or the cache can be cleared by using CHECKPOINT; DBCC DROPCLEANBUFFERS; (CHECKPOINT writes all dirty pages to disk, and DBCC DROPCLEANBUFFERS then removes the clean pages from the cache). Each buffer in the buffer cache may contain a copy of a data page which has been read from disk.

The buffer manager is responsible for managing the components of the buffer cache: the hash tables (containing the bucketed collections of pointers to each buffer in specific memory locations); the buffer array (containing the buffers) and the pages written to each buffer. It is the interface between the database engine and the in-memory pages and the mechanism for storing and retrieving data from this area of memory.

Buffer latches are placed on pages as and when they are accessed from the buffer cache. Unlike locks, they do not last for the duration of a transaction, and they are released when no longer required. One transaction can have many latches, and the latches can be placed for a variety of reasons. One of the best-known classes of buffer latches is reflected in the PAGEIOLATCH_* waits, where the engine is waiting for an I/O operation to complete before the latch can be released. This is rightly often interpreted as indicative of I/O contention, and shows up (like all buffer latch waits) in the DMV sys.dm_os_wait_stats, and also as a wait type in the last_wait_type column of sys.dm_exec_requests.
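As a minimal sketch of the point above, the following query lists requests that are currently waiting on, or last waited on, a buffer latch. It uses only documented columns of sys.dm_exec_requests; the LIKE pattern is my own shorthand for matching both PAGELATCH_* and PAGEIOLATCH_* wait types.

```sql
-- Requests whose current or most recent wait was a buffer latch wait.
-- [_] escapes the underscore so it is matched literally by LIKE.
SELECT r.session_id,
       r.status,
       r.wait_type,                 -- NULL if the request is not waiting right now
       r.last_wait_type,
       r.wait_time AS wait_time_ms, -- duration of the current wait, in milliseconds
       r.wait_resource
FROM sys.dm_exec_requests AS r
WHERE r.wait_type LIKE 'PAGE%LATCH[_]%'
   OR r.last_wait_type LIKE 'PAGE%LATCH[_]%';
```

On an idle instance this will usually return no rows; it is most useful when run repeatedly while a workload is active.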

You can find information on the buffers that are in the cache in the DMV sys.dm_os_buffer_descriptors. This contains one row for each data page in the buffer cache. The structure of this DMV exposes some useful information, such as the database, file and page id for each page, the number of rows on the page, the amount of free space on the page and whether or not a page is dirty. Interestingly, to retrieve a certain page from memory the buffer manager hashes the page's identity and uses the hashed value to find the bucket that holds the pointer to that page's buffer. This enables the manager to search a small subset of entries rather than the full range of values, in much the same way that an index lets a query read a subset of the table data.
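To make the DMV a little more concrete, here is a sketch that summarizes the buffer cache per database. The column aliases are my own; the source columns (database_id, is_modified, free_space_in_bytes) are the ones described above.

```sql
-- One row per database: how many 8KB pages it has in the buffer cache,
-- how many of those are dirty, and how much free space the cached pages carry.
SELECT DB_NAME(bd.database_id)                               AS database_name,
       COUNT(*)                                              AS cached_pages,
       COUNT(*) * 8 / 1024                                   AS cached_mb,
       SUM(CASE WHEN bd.is_modified = 1 THEN 1 ELSE 0 END)   AS dirty_pages,
       SUM(CAST(bd.free_space_in_bytes AS BIGINT)) / 1048576 AS free_space_mb
FROM sys.dm_os_buffer_descriptors AS bd
GROUP BY bd.database_id
ORDER BY cached_pages DESC;
```

Bear in mind that this DMV has one row per cached page, so on an instance with a large buffer pool the query itself can take a while to run.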

Latch Classes and Latch Modes

Buffer latches fall into the BUFFER class, whereas non-buffer latches can fall into many different classes depending on their function. You can find a fairly comprehensive list of these by issuing the following query:

SELECT latch_class FROM sys.dm_os_latch_stats

The BUFFER class is the most commonly used, and if you query the waiting_requests_count column in the DMV you'll probably find most classes have 0 waiting requests, with the notable exception of BUFFER (555 waiting requests on my installation of SQL Server 2012, which is idle at the moment). As already mentioned, each latch class has latches that perform specific jobs - DBCC_CHECK_TABLE_INIT, to take a random example, will have latches that are invoked when a table is marked for the DBCC CHECKTABLE operation.
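A slightly extended version of the query above, as a sketch, returns only the latch classes that have actually waited, along with their total and average wait times. The avg_wait_ms alias is my own; the other columns come straight from the DMV.

```sql
-- Latch classes with at least one wait, busiest first.
-- NULLIF guards against division by zero.
SELECT ls.latch_class,
       ls.waiting_requests_count,
       ls.wait_time_ms,
       ls.max_wait_time_ms,
       ls.wait_time_ms * 1.0 / NULLIF(ls.waiting_requests_count, 0) AS avg_wait_ms
FROM sys.dm_os_latch_stats AS ls
WHERE ls.waiting_requests_count > 0
ORDER BY ls.wait_time_ms DESC;
```

The figures are cumulative since the instance last started (or since the statistics were last cleared), so compare two readings taken some time apart rather than relying on a single snapshot.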

Latch modes are best described as the state, or intention, of a latch. Like locks, they can be shared or exclusive, but there are a few other modes too - Bolton, Langford et al. in 'Professional SQL Server 2008 Internals and Troubleshooting' (Wiley Publishing, 2010, pp. 258-259) give a comprehensive list, which I have simplified below:

- NULL latch (NL): Not used

- KEEP latch (KP): Has two purposes: to keep a page in the buffer cache while another latch is placed upon it, and to maintain reference counts.

- SHARED latch (SH): Taken out when a request to read the data page is received.

- UPDATE latch (UP): Milder than an EX latch, this allows reads but no writes on the page while being updated.

- EXCLUSIVE latch (EX): Severe latch that allows no access to the page while being written. Only one per page can be held.

- DESTROY latch (DT): Used to destroy a buffer and evict it from the cache (returning the page to the free list).

Latches also don't adhere to a strict priority order over each other - by and large, it's a first-in-first-out system, where a latch will have to wait for another latch to be released before being allowed for a particular page. Keep latches are exempt from this and will take priority over every other latch except destroy latches. Also when a latch is released, sometimes other compatible latches will be taken out simultaneously (i.e. multiple shared latches) from anywhere in the latch queue.

Latch Waits

BUFFER class latches are always reported as PAGELATCH_* waits and are exposed in the DMV sys.dm_os_wait_stats, with I/O-specific latches reported as PAGEIOLATCH_* waits. You can view the number of page latch waits over time by using the 'Page latch waits' performance counter available in perfmon. By taking a sample of readings over time, you can see where and when there are large numbers of waits, and by taking regular readings of sys.dm_os_latch_stats you can determine how long each wait is, on average, or as a total. Peaking latch waits can occur for many reasons - for example, a job might start at a particular time that updates a small range of pages frequently (e.g. some 'CurrentValues' table), or you might be experiencing poor performance from a SAN with a write-heavy load from the application (this was my personal diagnosis of the I/O problem alluded to at the start of this article).
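As a rough sketch of how these waits can be read from sys.dm_os_wait_stats, the query below returns every page latch and page I/O latch wait type with its count, total and average wait time. The avg_wait_ms alias is my own addition.

```sql
-- PAGELATCH_* (in-memory page contention) and PAGEIOLATCH_* (waiting on I/O)
-- wait types, ordered by total time spent waiting.
SELECT ws.wait_type,
       ws.waiting_tasks_count,
       ws.wait_time_ms,
       ws.max_wait_time_ms,
       ws.wait_time_ms * 1.0 / NULLIF(ws.waiting_tasks_count, 0) AS avg_wait_ms
FROM sys.dm_os_wait_stats AS ws
WHERE ws.wait_type LIKE 'PAGELATCH[_]%'
   OR ws.wait_type LIKE 'PAGEIOLATCH[_]%'
ORDER BY ws.wait_time_ms DESC;
```

As with the latch statistics, these values accumulate from instance start-up, so capturing the output at regular intervals and comparing successive readings gives a truer picture of when the waits occur.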

We can demonstrate this point with a simple experiment. On your development instance, execute the following T-SQL code to create a sample table which we will use to simulate write-heavy load on a small range of pages:

SET NOCOUNT ON;
CREATE TABLE dbo.BigWrites ( DateTimeNow DATETIME );
INSERT INTO dbo.BigWrites (DateTimeNow) SELECT GETDATE();
-- Runs until the query is cancelled
WHILE 1=1
BEGIN
    UPDATE dbo.BigWrites SET DateTimeNow = GETDATE();
END

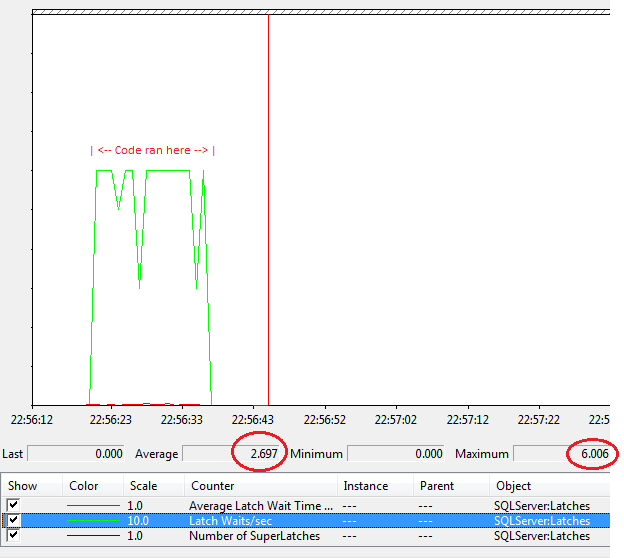

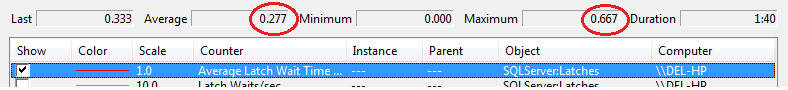

On my idle SQL Server installation (2012 RTM, on dual-core 2.4GHz, 6GB RAM, Windows 7 Pro) this generated up to 6 latch waits per second (EX latches) while queuing UPDATEs tried to access the same page. The average page latch wait time went from 0 to over 200ms! The screenshots above illustrate this.

Dealing with Latch Contention - Automatic Parallelism using Superlatches

SQL Server, luckily, has a mechanism to deal with buffer latch contention and this is the promotion of latches into 'superlatches' (also, confusingly, called sublatches). This promotion causes the latch to become merely a pointer to an array of latches - in other words, sub-latches are created, each of which can be accessed from a different CPU scheduler (in a NUMA architecture) to enable parallelism on the page where allowed (i.e. with SH latches). Thus, a request to the superlatch can be SH mode while the superlatch can contain multiple sublatches in different modes (grant order as explained above still applies).
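If you want to see whether superlatches are in play on an instance, one approach is to read the counters of the Latches performance object through sys.dm_os_performance_counters. This is only a sketch: it lists every counter in that object rather than assuming exact counter names, although on the versions I am aware of the object includes counters for the number of superlatches and for superlatch promotions and demotions.

```sql
-- All counters in the Latches performance object.
-- The object is named SQLServer:Latches on a default instance and
-- MSSQL$<instance>:Latches on a named instance, hence the leading wildcard.
SELECT RTRIM(pc.object_name)  AS object_name,
       RTRIM(pc.counter_name) AS counter_name,
       pc.cntr_value
FROM sys.dm_os_performance_counters AS pc
WHERE pc.object_name LIKE '%:Latches%'
ORDER BY pc.counter_name;
```

Note that the per-second counters are exposed here as cumulative raw values, so a rate has to be worked out from the difference between two readings.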

This also goes some way to explaining the message I received in the SQL Server error log:

'Warning: Failure to calculate super-latch promotion threshold.'

This is a warning from the SQL Server engine that it was not able to calculate the threshold at which a particular latch is promoted from an ordinary latch to a superlatch. This means contention (latch queuing, as demonstrated) could not be eased by the parallelism of latch processing, which meant no relief from the evident I/O pressures. Further investigation into the logs and some testing using my scripts corroborated apparent I/O waits as the culprit. However, the database engine was misinterpreting network latency between the VM and the SAN as waits on the storage layer, since the network connectivity between the VM and the SAN in the VMware layer is all but invisible to SQL Server.

The question remains: why couldn't the threshold be calculated? Some reading online suggests that this issue is unique to SQL Server 2008 R2 SP2, which happened to be the version of SQL Server on which I'd observed this behavior, and the message appears to be rare, if not non-existent, on other versions.

Dealing With Latch Contention - Strategies You Can Use

So what are the exact causes of latch contention? As mentioned, they can vary. Sometimes the blame can be laid at the door of the application issuing the calling queries, as in my example above of perpetual updates to the same page. This is particularly true of a certain Microsoft monitoring product, which had better remain unnamed. It pounds the collection tables in its operations database (which hold data prior to rollup and warehousing) with writes, then throws in automated reporting against the same tables on top of this. I personally observed 125 million rows generated in less than three weeks for simple gathering and storage of perfmon counter data, and an almost perpetual string of blocks, locks and latch waits. In this case, the solution was to calm down the application and scale back the abuse of the database.

Another cause can be an excess of CPU cores used by SQL Server, since competing latches may be acquired by competing schedulers, especially if you have configured for high concurrency. You may get better performance by adjusting the affinity mask to use a subset of the cores you have available (although if you have 8 or fewer, this is unlikely to be a major factor).
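Before changing anything, it helps to know how many schedulers SQL Server is actually using and what the current affinity settings are. The following read-only sketch reports both; it changes no configuration.

```sql
-- Number of schedulers available for running user requests.
SELECT COUNT(*) AS online_schedulers
FROM sys.dm_os_schedulers
WHERE status = 'VISIBLE ONLINE';

-- Current affinity settings; a value of 0 means SQL Server manages affinity itself.
SELECT c.name,
       c.value,
       c.value_in_use
FROM sys.configurations AS c
WHERE c.name IN ('affinity mask', 'affinity64 mask');
```

Any change to affinity is best tested on a development instance first, since it affects every workload on the server and not just the one suffering latch contention.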

A further cause can be poor design of your database - you may wish to split out data located on 'hot pages', which are accessed frequently and which you might identify as the causes of your latch contention. For example, if you have a currency table with a data page containing 100 rows, of which 1 is updated per transaction and you have a transaction rate of 200/sec, you could see page latch queues of 100 or more. If each page latch wait costs just 5ms before clearing, an update at the back of a 100-deep queue waits 100 x 5ms = 500ms, a full half-second delay. In this case, splitting out the currency rows into different tables might prove more performant (if less normalized and logically structured).
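One way to look for hot pages, sketched below, is to group the tasks currently waiting on page latches by the resource they are waiting for. For page latch waits the resource_description column takes the form database_id:file_id:page_id; the column aliases are my own.

```sql
-- Pages with tasks currently queued behind a page latch, most contended first.
SELECT wt.resource_description,          -- database_id:file_id:page_id
       wt.wait_type,
       COUNT(*)                 AS waiting_tasks,
       MAX(wt.wait_duration_ms) AS max_wait_ms
FROM sys.dm_os_waiting_tasks AS wt
WHERE wt.wait_type LIKE 'PAGELATCH[_]%'
GROUP BY wt.resource_description, wt.wait_type
ORDER BY waiting_tasks DESC;
```

This is a point-in-time view, so it needs to be sampled repeatedly under load. A page that keeps reappearing at the top of the list is a good candidate for the kind of redesign described above.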

Next Steps

There is a quite brilliant paper on latches with detailed examples and much more information 'Diagnosing and Resolving Latch Contention on SQL Server' (Microsoft Corporation, 2011) written by the SQLCAT team at Microsoft: http://www.microsoft.com/en-gb/download/details.aspx?id=26665

I cannot recommend enough the book 'Professional SQL Server 2008 Internals and Troubleshooting' by Christian Bolton, Justin Langford, Brent Ozar, James Rowland-Jones and Steven Wort (Wiley Publishing, 2010, ISBN 978-0-470-48428-9). It has a long and detailed section on latches and goes into superlatches in more detail than I have room for here.

'SQL Server 2008 Internals' by Kalen Delaney, Paul Randal, Kimberly Tripp, Conor Cunningham, Adam Machanic and Ben Nevarez (Microsoft Press, 2009) is light on information about latches but has a wealth of information on locks and the buffer cache.

If you really want to drill down into the exact methods (down to the function call names) used by SQLOS for I/O operations then read 'How It Works: Bob Dorr's SQL Server I/O Presentation' by Bob Dorr, a CSS SQL Server engineer, available at http://blogs.msdn.com/b/psssql/archive/2010/03/24/how-it-works-bob-dorr-s-sql-server-i-o-presentation.aspx. It's a little dated, having been originally written for SQL Server 7.0, but the author assures us it is relevant up to at least SQL Server 2008.

As mentioned earlier, this website also has many tips on locking and latches including contributions from Tim Ford, Greg Robidoux and others - Tim and Greg's links are below. Search the MSSQLTips site to find many more.

http://www.mssqltips.com/sqlservertip/1968/understanding-sql-server-locking/

http://www.mssqltips.com/sqlservertip/1036/finding-and-troubleshooting-sql-server-deadlocks/

About the author

Dr. Derek Colley is a UK-based DBA and BI Developer with more than a decade of experience working with SQL Server, Oracle and MySQL.

This author pledges the content of this article is based on professional experience and not AI generated.