By: Edwin Sarmiento | Comments (2) | Related: > PowerShell

Problem

I want to shrink the SQL Server transaction log file to reclaim disk space and reduce the number of virtual log files (VLFs) after I have scheduled regular transaction log backups. I checked the log file free space and ran DBCC SQLPERF (LOGSPACE) to verify that I have more than 90% free space. However, I still can't reclaim all the free space inside the SQL Server transaction log file. How do I know why I can't shrink my log file and what is the minimum possible size for me to shrink it?

Solution

In a previous tip on How to determine SQL Server database transaction log usage, we have seen how we can use the undocumented command DBCC LOGINFO to get information about the virtual log files (VLFs) in our SQL Server databases. The tip also tells us that, if we are to check the rows with Status column value of 2, we can figure out which VLFs are in use (also called Active) or not (also called Inactive.) Also, in a previous tip on How to shrink the transaction log file in SQL Server, we've seen how we can take a log backup to truncate the log and mark the VLFs inactive so we can go ahead and shrink the log file. We can reclaim space from inactive VLFs when we shrink the database transaction log file.

However, the one thing that I tell SQL Server DBAs is that taking a log backup, switching to SIMPLE recovery model or even detaching and re-attaching the database back will not guarantee that you will be able to shrink the SQL Server transaction back to the smallest possible size. We need to understand why it is up to that size in the first place. One reason is when the transaction log file initial size is set to some value higher than the smallest possible size. For example, you will not be able to shrink a log file to 1MB if the initial size is set to 1GB.

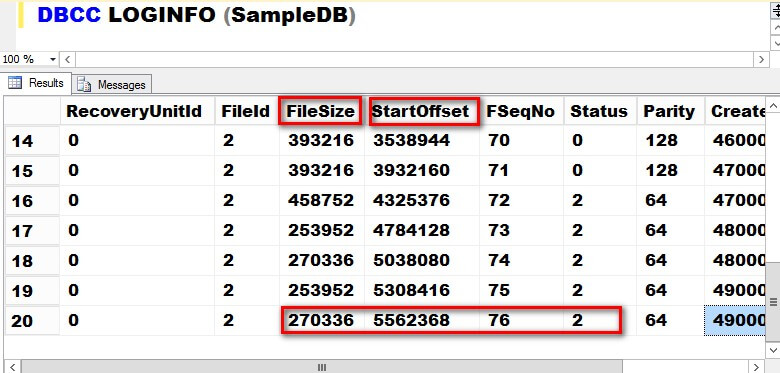

But a not-so-obvious reason is because an active VLF is in between ranges of inactive VLFs as shown in the screenshot below.

Note that row #6, with Status column value of 2, is between rows with Status column value of 0. This could report having a free space in the transaction log of more than 90% but still prevent you from reclaiming the free space in the log file. To determine the minimum possible size that we can shrink the log file, we need to find the last active VLF. Now, imagine having to search thru thousands of VLFs to find the last active VLF. Fortunately, we can use Windows PowerShell to achieve this task. We can use the script provided for in the tip on Check SQL Server Virtual Log Files Using PowerShell.

We'll start by loading the appropriate .NET assemblies used by SMO as defined in this previous tip.

[System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SqlServer.SMO")

Next, we'll create an instance of the Server class to represent the SQL Server instance that you will be connecting to, passing the SQL Server instance name. I will be assigning the results to a variable named $serverInstance so we can access the different properties and methods of the Server class.

$serverInstance = New-Object ("Microsoft.SqlServer.Management.Smo.Server") "InstanceName"

We will only focus on a specific database for this purpose, but you can apply the process to all the other databases in your SQL Server instance. Let's create a PowerShell variable to assign a Database object that we are interested in. For this example, I will be using a sample database named SampleDB.

$db = $serverInstance.Databases["SampleDB"]

Similar to the previous tip, we will use the ExecuteWithResults method of the Database class to execute the DBCC LOGINFO command in the context of the selected database. The results of this method is a .NET object type called a DataSet which is commonly used in ADO.NET. A DataSet is an in-memory cache of data with a collection of DataTable objects. Think of it as an in-memory version of a database with different tables, columns, relationships, etc. If there are multiple tables in the resultset, we can access each table using an index in an array. However, since the results of the DBCC LOGINFO command is a single resultset that mimics a table, think of the results of the ExecuteWithResults method as an in-memory table. The PowerShell code to accomplish all of these tasks is shown below.

$db.ExecuteWithResults("DBCC LOGINFO").Tables[0]

Up to this point, we've simply used DBCC LOGINFO with PowerShell to retrieve the VLFs in the log file. This is where an understanding of the transaction log is important. The StartOffset column can lead us into what we are looking for. This column represents the start of the VLF (in bytes) from the beginning of the transaction log file. Now, because of the sequential nature of the log file, the StartOffset column is in increasing value with size depending on the autogrowth increment of the log file. The size (in bytes) of the VLF is determined by the FileSize column.

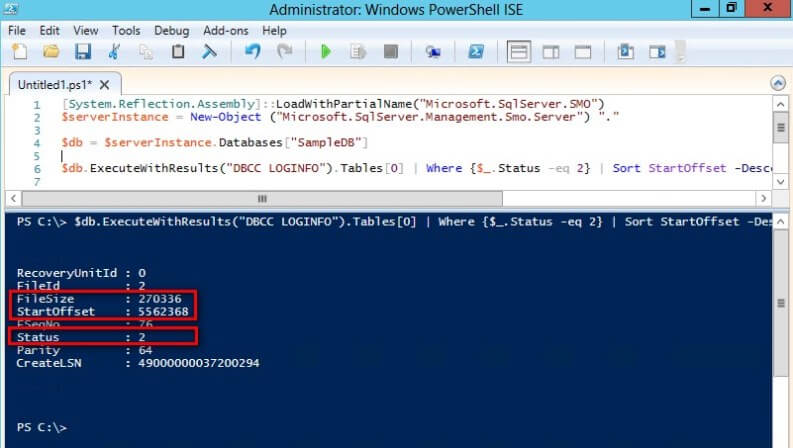

To determine the minimum possible size to shrink the log file, we simply have to find the last active VLF (Status column value of 2,) get the StartOffset column value and add the FileSize column value. Let's use all of these information to write our PowerShell code. Let's also use the pipeline (|) to filter, sort and list the results returned by the ExecuteWithResults method. I will just add some code to the previous one to demonstrate how this can be achieved - all in a single line of code.

$db.ExecuteWithResults("DBCC LOGINFO").Tables[0] | Where-Object {$_.Status -eq 2} | Sort-Object StartOffset -Descending | Select-Object -First 1

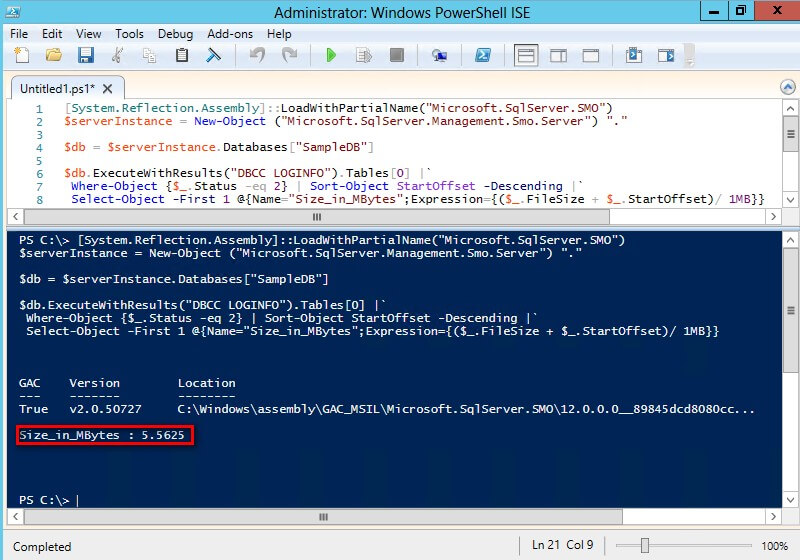

We can be fancy with the results, go all out and use Windows PowerShell to perform simple calculations on the object properties by adding the StartOffset column and the FileSize column using script blocks. I used 1MB for this example for byte conversion since I am working with a relatively small log file. But for large log files, you can use either 1MB or 1GB.

$db.ExecuteWithResults("DBCC LOGINFO").Tables[0] | Where-Object {$_.Status -eq 2} | Sort-Object StartOffset -Descending | Select-Object -First 1 @{Name="Size_in_MBytes";Expression={($_.FileSize + $_.StartOffset)/ 1MB}}

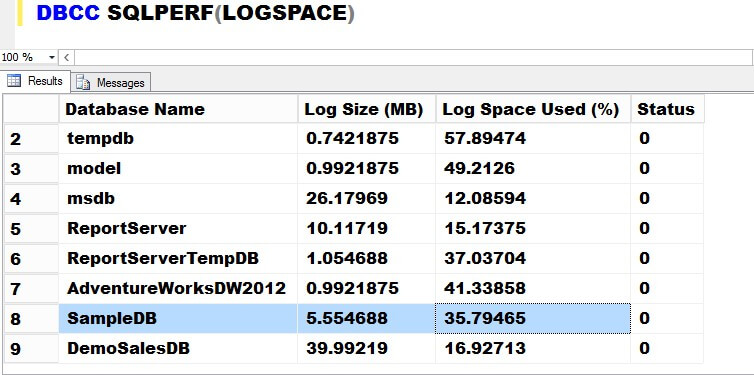

This tells me that, even though my log file usage is only at 35.79% from running DBCC SQLPERF(LOGSPACE), the minimum possible size to shrink the log file is only up to 5.56 MB.

Next Steps

- Review the following tips

- Tutorial on Introduction to the SQL Server Transaction Log

- Other PowerShell-related tips.

About the author

Edwin M Sarmiento is a Microsoft SQL Server MVP and Microsoft Certified Master from Ottawa, Canada specializing in high availability, disaster recovery and system infrastructures.

Edwin M Sarmiento is a Microsoft SQL Server MVP and Microsoft Certified Master from Ottawa, Canada specializing in high availability, disaster recovery and system infrastructures.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips