By: Jeffrey Yao | Comments (6) | Related: > SQL Server 2016

Problem

To load csv files with just T-SQL is often a headache depending on the contents and the format of the csv files. In fact, according to MSDN, SQL Server does not officially support csv file bulk load operations.

"Comma-separated value (CSV) files are not supported by SQL Server bulk-import operations. However, in some cases you can use a CSV file as the data file for a bulk import of data into SQL Server."

There are two challenges:

- If a csv file is separated by, say a comma, and in some fields, say [Address] there are commas, then we cannot load the file unless we replace the field separator from a comma to another character, such as a vertical bar |.

- We need to manually create tables first before loading. When we continue to receive csv files with different number of fields, this can be tedious work.

Is there anyway that we can do to make csv file loading more efficient and pleasant?

Solution

With SQL Server 2016 R service, we can easily solve the two challenges mentioned above as R is very good at processing csv files due to its data analysis nature.

So here is the case scenario, followed up with the algorithm.

Case Description

In a folder, we have multiple csv files and we want to load each csv file into its corresponding staging table in a SQL Server instance. We do not care about data types for these staging tables, so all staging tables just have their columns set to varchar(300) data type. We also assume each csv file has its first line as the header line.

Here are the requirements for the file loading:

- The only input to the loading process is the folder name where all the csv files are located.

- Each staging table will be named after the csv file, for example, a csv file is name "employee salary.csv", the staging table will then be [csv].[employee salary], with [csv] as the schema.

- The column names of each staging table are the same as the csv field names contained in the header line .

Process Algorithm

To meet the requirements, we need to follow these steps:

- Find all .csv files under the specified folder, and save the full path name of each file in a table variable, let's call it @tblFile.

- Loop through @tblFile, read each file's first line with R to get the csv fields, save all the field names into a table variable, @tblColumn.

- Loop through @tblColumn to construct a dynamic SQL statement to create a table for each file, and then execute the dynamic statement.

- Loop through @tblFile to construct a dynamic SQL statement to read the csv file with R and insert the results into the corresponding table created in step 3.

Test Environment Setup

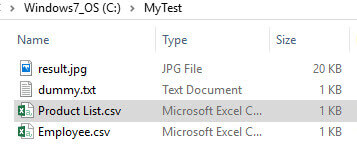

Assume we have a folder c:\MyTest\, which contains two .csv files and another two are just "noise" files to further test our solution's robustness.

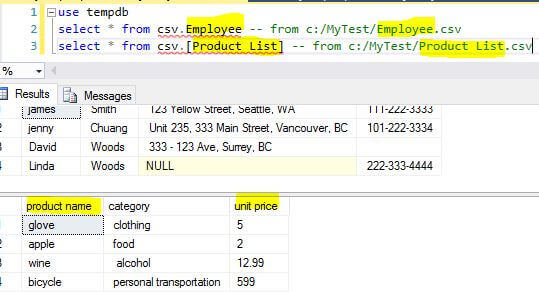

[Product List.csv] has the following contents:

"product name", category, "unit price" glove, clothing, 5.0 apple, food, 2.0 wine, alcohol, 12.99 bicycle, "personal transportation", 599.0

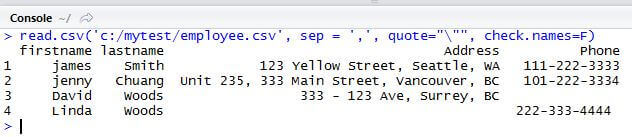

[Employee.csv] has the following contents:

firstname, lastname, Address, Phone james, Smith, "123 Yellow Street, Seattle, WA", 111-222-3333 jenny, Chuang, "Unit 235, 333 Main Street, Vancouver, BC", 101-222-3334 David, Woods, "333 - 123 Ave, Surrey, BC", Linda, Woods,, 222-333-4444

R Code

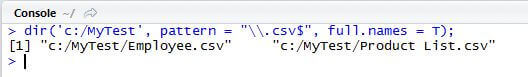

As per the requirements, we will first look at how to read files in a folder with R. If you have RStudio Desktop IDE installed, just run the following:

dir('c:/MyTest', pattern = "\\.csv$", full.names = T);

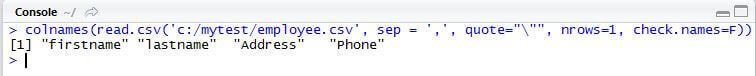

Now, if we want to find the field names of a csv file, in our example, c:/test/employee.csv, we can do this in R as follows:

colnames(read.csv('c:/mytest/employee.csv', sep = ',', quote="\"", nrows=1, check.names=F))

To read a csv file in R, in our example, c:/test/employee.csv, it can be done as follows:

read.csv('c:/mytest/employee.csv', sep = ',', quote="\"", check.names=F)

T-SQL Code

Now we will embed the above R code into the T-SQL code and make the whole logic work as per our requirements.

Please read the comments in the script together with the algorithm description.

-- function: given a folder, read all csv files in the folder and put them into staging tables

-- : the staging tables will be created automatically using file names without suffix

-- : each csv file will have a corresponding staging table under schema [csv]

-- : the columns will all be varchar (or nvarchar) type, and created dynamically

-- let's first crate a schema, called [csv]

use TempDB;

if schema_id('csv') is null

exec ('create schema csv');

/*

If you want to drop all tables with [csv] schema, you can do this way

declare @sqlcmd varchar(max) ='';

select @sqlcmd += 'drop table [csv].[' + name + '];' + char(13) + char(10)

from sys.tables

where schema_id = schema_id('csv')

print @sqlcmd;

exec (@sqlcmd)

*/

-- in @folder, we specify the folder where csv files are located

declare @folder varchar(256) = 'c:/MyTest/'; -- we use / to replace -- define two table variables for storing file names and the csv fields

declare @tmpFile table (fullname varchar(256) )

declare @tmpColumn table (filename varchar(256), colname varchar(256));

declare @fullname nvarchar(256);

-- first read file full names in the @folder

insert into @tmpFile (fullname) -- populate the table variable

EXECUTE [sys].[sp_execute_external_script] @language = N'R',

@script = N'file_list <- as.data.frame(dir(path=filefolder, pattern = "\\.csv$", full.name=T))' -- \\.csv$ means file with suffix as .csv

, @output_data_1_name = N'file_list'

, @params = N'@filepath varchar(256)'

, @filefolder = @folder

--with result sets (([FilePath] varchar(256))) -- "insert execute" cannot allow "with result sets" clause

-- read field names from each csv file

declare curF cursor for

select fullname

from @tmpFile

open curF;

fetch next from curF into @fullname;

while @@FETCH_STATUS = 0

begin

insert into @tmpColumn (colname)

EXECUTE [sys].[sp_execute_external_script] @language = N'R'

, @script = N'col_list <- as.data.frame(colnames(read.csv(filepath, sep=",", quote="\"", nrows=1, check.names=F)))'

, @output_data_1_name = N'col_list'

, @params = N'@filepath varchar(256)'

, @filepath = @fullname;

update @tmpColumn set [filename] = ltrim(rtrim(@fullname))

from @tmpColumn

where [filename] is null;

fetch next from curF into @fullname;

end

close curF;

deallocate curF;

-- now let's create staging tables

-- each staging table is named after the csv file name but without .csv suffix

-- assuming we have [csv] schema

declare @sqlcmd varchar(max), @crlf char(2)=char(0x0d)+char(0x0a);

declare @tblname varchar(255);

declare curFile cursor for

select ltrim(rtrim(fullname)) from @tmpFile

open curFile;

fetch next from curFile into @fullname;

while @@FETCH_STATUS = 0

begin

set @tblname = '[csv].[' + substring(@fullname, len(@fullname) - charindex('/',reverse(@fullname))+2, charindex('/',reverse(@fullname))-5 ) +']';

set @sqlcmd = 'create table ' + @tblname + '(';

select @sqlcmd = @sqlcmd + '[' + colname + '] varchar(300),' + @crlf

from @tmpColumn

where [filename] = @fullname

set @sqlcmd = substring(@sqlcmd, 1, len(@sqlcmd)-3) + ');'

set @sqlcmd = 'if object_id(''' +@tblname + ''', ''U'') is not null' + @crlf + 'drop table ' +@tblname + @crlf + @sqlcmd;

-- print @sqlcmd;

exec(@sqlcmd)

fetch next from curFile into @fullname;

end

close curFile;

deallocate curFile;

-- now we can start to pull the data from the files

-- we will loop through @tmpFile,

-- for each file, we will compose an "insert .. exec" sql statement for the corresponding staging table

declare curFile cursor for

select ltrim(rtrim(fullname)) from @tmpFile

open curFile;

fetch next from curFile into @fullname;

while @@FETCH_STATUS = 0

begin

set @tblname = '[csv].[' + substring(@fullname, len(@fullname) - charindex('/',reverse(@fullname))+2, charindex('/',reverse(@fullname))-5 ) +']';

set @sqlcmd = 'insert into ' + @tblname;

set @sqlcmd = @sqlcmd + '

EXECUTE [sys].[sp_execute_external_script] @language = N''R''

, @script = N''result <- read.csv(filepath, sep=",", quote="\"")''

, @output_data_1_name = N''result''

, @params = N''@filepath varchar(256)''

, @filepath =''' + @fullname + '''' + @crlf;

--print @sqlcmd;

exec(@sqlcmd)

fetch next from curFile into @fullname;

end

close curFile;

deallocate curFile;

/*

-- we can check the tables created

select schema_name(schema_id) as [schema], name from sys.tables

where schema_id = schema_id('csv');

*/

After running the T-SQL code in a SSMS window, we can verify that two new staging tables were created and populated as shown below:

Pay attention to the following naming convention:

- The table name is the same as the csv file name without the suffix.

- The table column names are the same as the field names of the corresponding csv file.

Summary

Importing complex CSV files usually is tedious and labor intensive if using T-SQL directly, but with R integration in SQL Server 2016 this work becomes a breeze as demonstrated in the tip.

I consider R integration probably the most valuable new feature in SQL Server 2016 and it will change lots of traditional ways we usually do our work. For example, we previously rely on CLR functions for complex math or financial functions, now with R we can simply use many excellent R packages to do the same work, saving DBAs quite a bit of overhead managing those CLR objects.

Next Steps

Please read the following articles to learn more about R and think about whether you can use R to solve tasks at hand.

- SQL Server 2016 R Services: Executing R code in SQL Server

- SQL Server 2016 R Services: Guide for Client Configuration

- R tutorial

- In-database Advanced Analytics with R in SQL Server 2016

If you are interested in further expanding this tip, here is homework:

- For a csv file with [N] fields, if I only want to import the first M (M <=N) fields, how can I do it?

About the author

Jeffrey Yao is a senior SQL Server consultant, striving to automate DBA work as much as possible to have more time for family, life and more automation.

Jeffrey Yao is a senior SQL Server consultant, striving to automate DBA work as much as possible to have more time for family, life and more automation.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips