By: Tim Smith

Problem

We've been troubleshooting an application setup involving multiple databases, and the process has taken more time than we initially expected. Some of the problems we faced were missing dependencies in the database and application, uncertainty about how the final project should look, and gaps in understanding how applications interacted with the database. What are some best practices to avoid this in the future?

Solution

In this tip, we'll begin by looking at the end state of an application that uses a SQL Server database, from the view of the back-end. We are excluding the middleware and front-end in this tip. Troubleshooting can consume over half of the development time, and if we don't prepare a validation roadmap, it may consume more. One pushback I see against writing validation queries or scripts is "it takes some time." This is true, but when I compare environments that use validation scripts to environments that don't, the former save 80-90% more time through a significant reduction in troubleshooting. Creating validation scripts prepares us for the inevitable troubleshooting that occurs when creating applications.

Save Queries for SQL Server Objects

What SQL Server objects does our application require? From tables to procedures to SQL jobs, we can run queries that return the list of objects we find, or we can keep a list of required objects and validate that these exist (seen in the below query). Whether we run a query that returns all the objects we find (and cross-check what's missing ourselves), or maintain a script that identifies what's missing, both require some effort. When a unit test or other business validation process is run and fails, these scripts should tell us quickly if any object is missing. Using an example of an application that requires 5 tables, 3 procedures and 2 SQL Jobs, we'll use a query that returns what doesn't exist:

DECLARE @Objects TABLE(
    RequiredObject VARCHAR(1000)
)

INSERT INTO @Objects
VALUES
    ('Table tbOne')
    , ('Table tbTwo')
    , ('Table tbThree')
    , ('Table tbFour')
    , ('Table tbFive')
    , ('Procedure stpOne')
    , ('Procedure stpTwo')
    , ('Procedure stpThree')
    , ('Job jbOne')
    , ('Job jbTwo')
---- Add new objects here using ,('') syntax

---- Return every required object that was not found
SELECT RequiredObject
FROM @Objects
WHERE RequiredObject NOT IN (
    SELECT 'Table ' + name AS FoundObject
    FROM sys.tables
    UNION ALL
    SELECT 'Procedure ' + name AS FoundObject
    FROM sys.procedures
    UNION ALL
    ---- The prefix needs its trailing space to match the 'Job jbOne' style entries above
    SELECT 'Job ' + name AS FoundObject
    FROM msdb.dbo.sysjobs
)

If our application requires other objects, such as triggers or views, we would include these in our validation step. As an alternative, we could simply query what's present. This query returns the table, procedure and job names, but it does not tell us which of them are required, so we would then need to compare the results against what is necessary:

SELECT 'Table ' + name AS FoundObject FROM sys.tables
UNION ALL
SELECT 'Procedure ' + name AS FoundObject FROM sys.procedures
UNION ALL
SELECT 'Job ' + name AS FoundObject FROM msdb.dbo.sysjobs

The first example might take more time to create and to update as changes occur, but at troubleshooting time, we quickly identify a missing object or requirement. Both will function when troubleshooting, but how much further you go with validation may save significant time. Depending on your application architecture, you may be able to extract the objects used on the back-end from metadata and, if possible, use this for querying the database. Also, if you use source control for all database objects (including SQL Jobs, SSIS packages, etc.) and you set up source control for a database per application, you can take the objects you find in source control and compare them to what is present. However, if multiple applications use one database, using source control as a "map" may not help, especially in situations where different teams are responsible for different applications using that database.
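
The same missing-object check can cover other object types, such as the views and triggers mentioned above. The following is a minimal sketch rather than a complete script: vwOne and trOne are hypothetical names chosen for illustration, and it assumes the objects live in the current database.

```sql
-- Sketch: extend the required-object check to views and triggers.
-- vwOne and trOne are hypothetical names used only for illustration.
DECLARE @Objects TABLE (
    RequiredObject VARCHAR(1000)
);

INSERT INTO @Objects
VALUES ('Table tbOne')
    , ('View vwOne')
    , ('Trigger trOne');

-- Return each required object that is absent from the current database
SELECT RequiredObject
FROM @Objects
WHERE RequiredObject NOT IN (
    SELECT 'Table ' + name FROM sys.tables
    UNION ALL
    SELECT 'View ' + name FROM sys.views
    UNION ALL
    SELECT 'Trigger ' + name FROM sys.triggers
);
```

Each new object type only needs one more prefix and one more catalog view in the UNION ALL, so the list of required objects stays the single place to update.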

I recommend this approach per application. Since databases can be used by multiple applications, each application would have a map of the objects it uses. For example, if we had databases of natural gas pricing information (1), weather information (2), and trading details for the natural gas market (3), we would want each application that uses those databases, or a combination of them, to have its own map. Here is how this may look in this example:

- If the customer billing application only used the natural gas pricing information database, it would have a map for that database.

- If the commercial hedging application used the natural gas pricing information database, the weather information database, and the trading details database, it would have a map for each of those databases.

- If the sales application used the natural gas pricing information database and the weather information database, it would have a map for each of those databases.

In this example, we have 3 applications and a total of 6 maps or outlines for validating what database objects are used.
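
One way to keep track of which maps exist is a small table with one row per application and database pair. This is only a sketch of the idea: the application and database names below are hypothetical labels for the three applications and three databases in the example, not names from a real system.

```sql
-- Sketch: one row per (application, database) map from the example above.
-- All names are hypothetical labels used only for illustration.
DECLARE @ApplicationMaps TABLE (
    ApplicationName VARCHAR(100),
    DatabaseName VARCHAR(128)
);

INSERT INTO @ApplicationMaps (ApplicationName, DatabaseName)
VALUES ('CustomerBilling', 'NaturalGasPricing')
    , ('CommercialHedging', 'NaturalGasPricing')
    , ('CommercialHedging', 'Weather')
    , ('CommercialHedging', 'TradingDetails')
    , ('Sales', 'NaturalGasPricing')
    , ('Sales', 'Weather');

-- Number of maps each application maintains
SELECT ApplicationName, COUNT(*) AS MapCount
FROM @ApplicationMaps
GROUP BY ApplicationName;

-- Applications whose maps should be re-validated if the weather database changes
SELECT ApplicationName
FROM @ApplicationMaps
WHERE DatabaseName = 'Weather';
```

The first query returns one row per application with counts of 1, 3 and 2, which add up to the 6 maps described above. The second shows how the same table answers "who is affected?" when a shared database changes.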

Save Queries for Custom Database Information

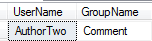

Suppose that we have an application that requires values from a configuration table or a custom application table, like a list of users outside of standard Windows or SQL users. Because some of the custom information is within a table, we want to take our validation querying a step further by querying these tables to ensure that we see the values we expect. How many tables could be involved? As many tables as required by the application. In the below example, we look at a blog_users table and join it with a blog_groups table, which tells us the users and their groups. In the below select query, we validate an AuthorTwo and a CuratorOne user, and we see that one is missing (CuratorOne) and one is in the wrong group (AuthorTwo).

CREATE TABLE blog_groups (
    GroupId SMALLINT IDENTITY(1,1) PRIMARY KEY,
    GroupName VARCHAR(25),
    AuthorizationId SMALLINT
)

CREATE TABLE blog_users (
    UserId INT IDENTITY(1,1) PRIMARY KEY,
    UserName VARCHAR(150),
    BlogGroupId SMALLINT FOREIGN KEY REFERENCES blog_groups (GroupId)
)

INSERT INTO blog_groups (GroupName,AuthorizationId)
VALUES ('Publish',1)
    , ('Comment',2)

INSERT INTO blog_users (UserName,BlogGroupId)
VALUES ('AuthorOne',1)
    , ('AuthorTwo',2)

---- We want to verify AuthorTwo has Publish group and CuratorOne exists:
SELECT
    t.UserName
    , tt.GroupName
FROM blog_users t
    INNER JOIN blog_groups tt ON t.BlogGroupId = tt.GroupId
WHERE t.UserName IN ('AuthorTwo','CuratorOne')

---- Clean up the example tables
DROP TABLE blog_users
DROP TABLE blog_groups

In this custom example, we can either explicitly search for what we expect, or we can run a query, get all the results back and compare them with what we expect. Since we use this custom setup, we would have a script to validate it. We should create validation queries for any custom database configuration information we use. Like validating SQL Server objects, this also helps us begin with the end in mind: what does the final product look like when we query information we're using in our application?
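
The explicit approach can be sketched as a query that returns only the problems. This is a minimal, self-contained illustration: table variables stand in for blog_users and blog_groups with the same sample rows, and the @Expected table is a hypothetical helper. Because the example only asks that CuratorOne exists, a NULL expected group is assumed to mean "any group is acceptable."

```sql
-- Sketch: compare expected users and groups against what is stored.
-- Table variables stand in for blog_users and blog_groups so this runs on its own.
DECLARE @blog_groups TABLE (GroupId SMALLINT PRIMARY KEY, GroupName VARCHAR(25));
DECLARE @blog_users TABLE (UserName VARCHAR(150), BlogGroupId SMALLINT);
-- Hypothetical helper: GroupName NULL means only the user's existence is checked
DECLARE @Expected TABLE (UserName VARCHAR(150), GroupName VARCHAR(25) NULL);

INSERT INTO @blog_groups VALUES (1, 'Publish'), (2, 'Comment');
INSERT INTO @blog_users VALUES ('AuthorOne', 1), ('AuthorTwo', 2);
INSERT INTO @Expected VALUES ('AuthorTwo', 'Publish'), ('CuratorOne', NULL);

-- Return one row per expectation that is not met
SELECT UserName, Problem
FROM (
    SELECT e.UserName
        , CASE
            WHEN u.UserName IS NULL THEN 'Missing user'
            WHEN e.GroupName IS NOT NULL
                AND (g.GroupName IS NULL OR g.GroupName <> e.GroupName)
                THEN 'Wrong group'
          END AS Problem
    FROM @Expected e
        LEFT JOIN @blog_users u ON u.UserName = e.UserName
        LEFT JOIN @blog_groups g ON g.GroupId = u.BlogGroupId
) AS checks
WHERE Problem IS NOT NULL;
```

With the sample rows, this returns AuthorTwo as 'Wrong group' and CuratorOne as 'Missing user'. The LEFT JOIN matters here: an INNER JOIN silently drops the missing user, so we would have to notice the absence ourselves.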

When should we use custom queries?

- Any time custom data is required for an application or process (e.g., ETL) to function. An example is a custom user and role table where a missing role or missing user will result in an application error.

- Any time an application or process (e.g., ETL) uses a table for a feature within the application. For example, when an application loads a menu list from a database.

- Any time an application or process (e.g., ETL) uses a table for configuration information; in some cases, the application won't even start when these values are missing.
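
For the configuration case, a validation query might confirm that every required key exists and has a value. The sketch below assumes a simple key and value layout: @app_config, @RequiredKeys and the key names are hypothetical stand-ins, and a real configuration table may be shaped differently.

```sql
-- Sketch: confirm required configuration keys exist and are not empty.
-- @app_config and the key names are hypothetical stand-ins for a real table.
DECLARE @app_config TABLE (
    ConfigKey VARCHAR(100) PRIMARY KEY,
    ConfigValue VARCHAR(500) NULL
);
DECLARE @RequiredKeys TABLE (ConfigKey VARCHAR(100) PRIMARY KEY);

INSERT INTO @app_config VALUES ('ImportPath', 'D:\Import'), ('BatchSize', '');
INSERT INTO @RequiredKeys VALUES ('ImportPath'), ('BatchSize'), ('SmtpServer');

-- Return each required key that is missing or has no usable value
SELECT r.ConfigKey
    , CASE
        WHEN c.ConfigKey IS NULL THEN 'Missing key'
        ELSE 'Empty value'
      END AS Problem
FROM @RequiredKeys r
    LEFT JOIN @app_config c ON c.ConfigKey = r.ConfigKey
WHERE c.ConfigKey IS NULL
    OR c.ConfigValue IS NULL
    OR LTRIM(RTRIM(c.ConfigValue)) = '';
```

With the sample rows, BatchSize is reported as 'Empty value' and SmtpServer as 'Missing key'. An empty result means the configuration passes this check.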

Even when we consider the downside of updating queries as changes occur, which takes time, we'll still save a significant amount of time when troubleshooting a failure. By writing queries for custom architecture, we think about how our application or process uses the database or databases, and we may re-think our design or realize flaws in it. In addition, if an error occurs and we haven't written these queries, we'll have to write them at troubleshooting time anyway. Finally, the other advantage is that we make hand-offs easier: whether people move within our company or to other companies, these queries reduce the learning time of new developers.

In both of these steps, we use queries to outline how our application functions on the back-end. I've seen numerous situations where troubleshooting took hours or days simply because the team didn't take five minutes to write one query that would have prevented the issue - a missing record or object. Troubleshooting can consume more time than development, and these outlines free up time for development by reducing the time spent troubleshooting.

Document an Overview of the Database and Application

Whether written or video, with the latter offering the ability to show examples visually, document an overview of the application. The documentation can provide an overview of what the application does, what architecture is required, and how each piece of the application interacts. For instance, if user data comes from ServerOrigin to ServerDestination through an SSIS package that uses configurations from a table, document this information. This helps people quickly determine what may have failed based on what's missing. For example, user data missing on ServerDestination indicates a possible issue with the SSIS package or the objects it requires.

In the past, I understood pushback on documentation because written documentation can take significant work. However, video documentation is fast and visual, and it no longer requires huge amounts of space. MP4s are very lightweight and detailed. Most application overviews can be recorded within 10-15 minutes, while potentially saving hundreds of hours of troubleshooting. Some of the advantages:

- Documentation of complex architecture can be done in minutes, not hours.

- New developers see what pieces are involved in applications, ETL, database architecture; a video can actually point to the actual SSIS packages, application classes, etc.

- As more Millennials (born 1980 - 1995) and Gen Z workers (born 1996 - 2011) enter the workforce, they expect this. They tend to use YouTube as a search engine over Google, so video documentation is more familiar to them.

- Video documentation reduces meetings and solves many communication problems, since people see the overview.

With video documentation, I would raise some caution on three points: perfectionism, purpose and indexing. Since software changes, videos must be quick and to the point, and making them look and feel perfect wastes time because the application will change. If we record a 10 minute overview video and two years later must record another 10 minute video, we've still only spent 20 minutes in 2 years - a fraction of what written documentation would have cost. However, if we spend time trying to make a video perfect, we end up doing work that doesn't generate results. Keep it simple and to the point. In addition, an overview video should be kept separate from a troubleshooting video. Troubleshooting videos should cover a specific topic, such as "When I get this error, what do I do?" When developers are troubleshooting, they don't want every detail; they want to know how to solve the problem. When developers want to see how the architecture is constructed, they want the overview. These should be separate videos.

Finally, indexing videos can be a challenge, but this is a challenge with any documentation: "where can we find these?" I suggest organizing videos by specific topic, which makes indexing easier.

Next Steps

- Since applications and database architecture can have dependencies, I would suggest keeping a list of requirements that can be used as a checklist, ordered by what must be created first (or organized by what can be created independently).

- In troubleshooting applications and database architecture, about half of the issues are caused by missing configuration values, missing data flows or data objects, or missing files or other objects related to the application. Queries that map out all the requirements for the back-end not only save time during troubleshooting, but also help developers validate that the required objects and data are built.
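
As a starting point for the ordered checklist, SQL Server's catalog views can list which objects reference which. The sketch below is one possible way to gather this for the current database, not a complete dependency solver: it uses sys.sql_expression_dependencies and sys.foreign_keys, and it will not see dependencies that live outside the database, such as SSIS packages or application code.

```sql
-- Sketch: list which objects reference which, to help order a build checklist.
-- Covers by-name references tracked by SQL Server in the current database.
SELECT OBJECT_SCHEMA_NAME(d.referencing_id) AS ReferencingSchema
    , OBJECT_NAME(d.referencing_id) AS ReferencingObject
    , d.referenced_schema_name AS ReferencedSchema
    , d.referenced_entity_name AS ReferencedObject
FROM sys.sql_expression_dependencies d
WHERE d.referencing_class = 1 -- 1 = object or column
ORDER BY ReferencingObject, ReferencedObject;

-- Foreign keys: the referenced table must exist before the referencing table
SELECT OBJECT_NAME(fk.parent_object_id) AS ReferencingTable
    , OBJECT_NAME(fk.referenced_object_id) AS ReferencedTable
    , fk.name AS ForeignKeyName
FROM sys.foreign_keys fk
ORDER BY ReferencedTable, ReferencingTable;
```

Objects that never appear in a "referencing" column can be created independently. Everything else should come after the objects it references.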

About the author

Tim Smith works as a DBA and developer and also teaches Automating ETL on Udemy.

This author pledges the content of this article is based on professional experience and not AI generated.
