By: John Miner | Updated: 2024-02-29 | Comments | Related: > PostgreSQL

Problem

Microsoft supports two platform-as-a-service (PaaS) offerings for PostgreSQL—the single database and flexible server versions. The first offering is slated to be retired in March 2025. The second offering is what Microsoft is proposing for long-term use. As a developer, how can we deploy and configure the flexible server offering for PostgreSQL?

Solution

Today, I will cover how to manually deploy and configure the Azure SQL Database for PostgreSQL through the Azure portal. Arm Templates, Azure CLI, and Terraform scripts can be used to automate this process. Usually, mature organizations that use agile also use continuous integration and continuous deployment (CI/CD) to make either server or database changes.

Business Problem

Our manager has asked us to investigate the performance and pricing of Azure Database for PostgreSQL (PaaS) versus installing PostgreSQL on an Azure Virtual Machine (IaaS). The first task is to deploy the service in the Azure Cloud. The second task is to configure the service for remote connections. The third task is to execute our benchmark program to get execution timings. Finally, a database without a job scheduler is not that useful. How can we schedule a job within the flexible server offering of PostgreSQL?

Azure Offerings

If we search the marketplace for PostgreSQL, we can see that a single server and flexible server are offered.

The Single Server's documentation reveals that it is deprecated as a service. Therefore, this service should not be deployed when creating new infrastructure. Additionally, it is recommended that the Single Server be migrated to the Flexible Server offering in the near future.

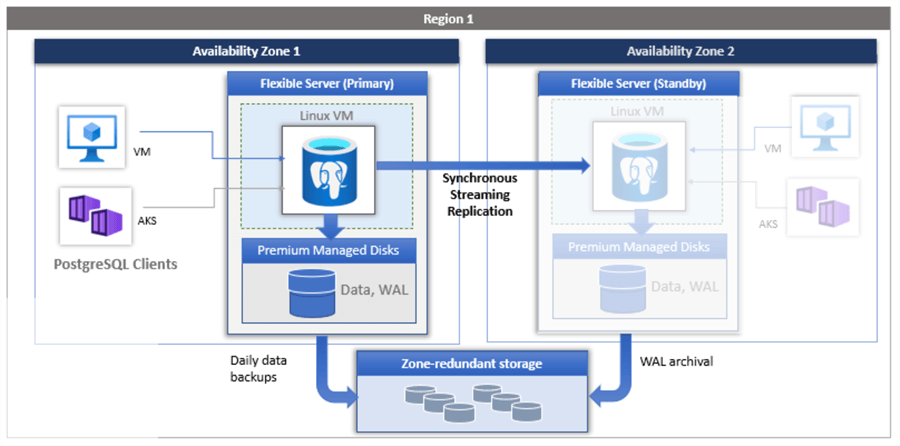

The architectural diagram below depicts the high availability setup of PostgreSQL using production tiers of the offering. The Azure Kubernetes Service (AKS) is used to deploy a Linux virtual machine with PostgreSQL. The premium managed disks are remote to the container image. These disks store both the data and log files. Backups are stored on low-cost Azure storage. High availability is achieved by replicating the changes from the primary to the secondary server, which is in standby mode. A fail-over condition promotes the secondary server to primary.

Azure Portal

Today, we will deploy and configure Azure SQL Database for PostgreSQL. Find the flexible server offering in the marketplace and kick off the deployment process by clicking the create button.

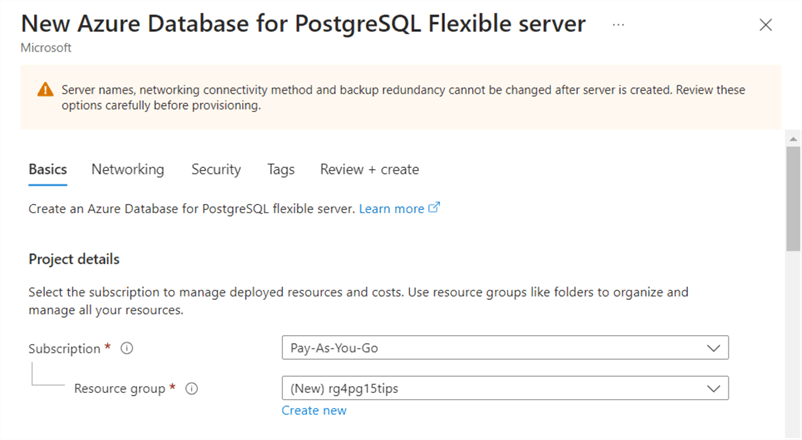

Every deployment in Azure requires a resource group. A resource group is just a container with one or more services. The nice thing about resource groups is that deleting a group removes all services within the container. The name of my resource group is rg4pg15tips.

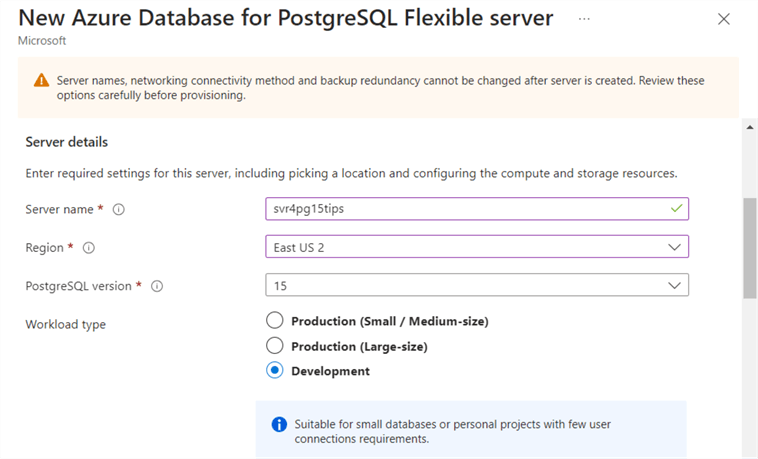

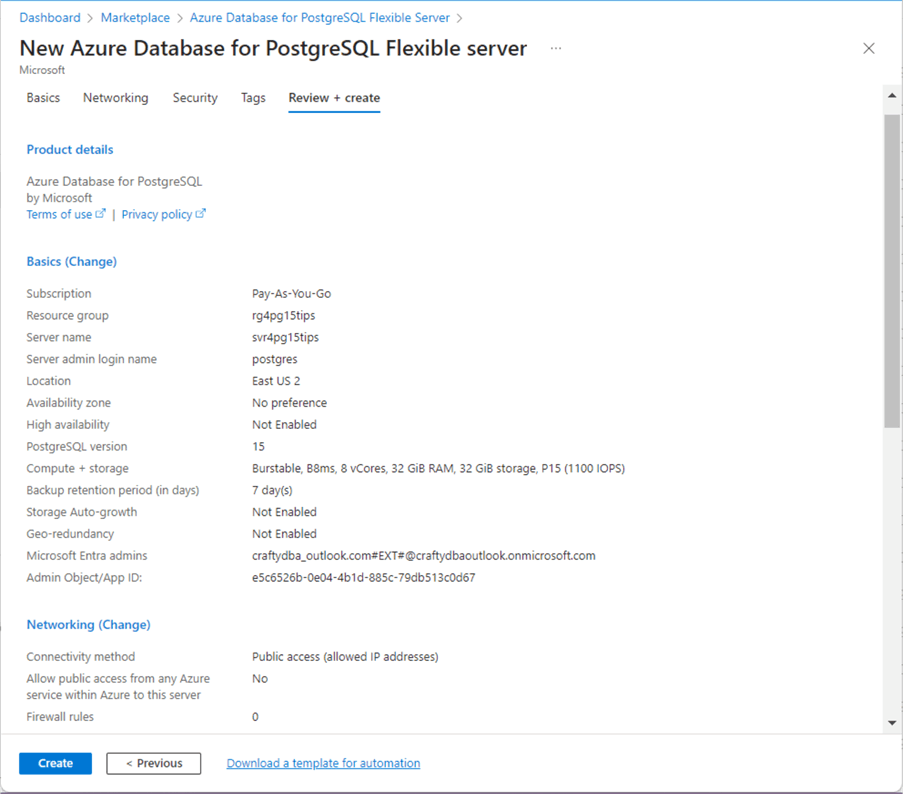

The following screenshots show the choices available on the basics screen of the flexible server deployment. Four more additional pieces of information are needed for the server details. We must choose a server name, pick a region to deploy the service, select the database engine version, and decide the workload type. I suggest we deploy the database server to the same region as the resource group.

My choices for this screen include:

- Server name: svr4pg15tips

- Region name: East US 2

- Database version: 15

- Workload type: Development.

On the next screen, we will show how the workload type corresponds to the computing tier.

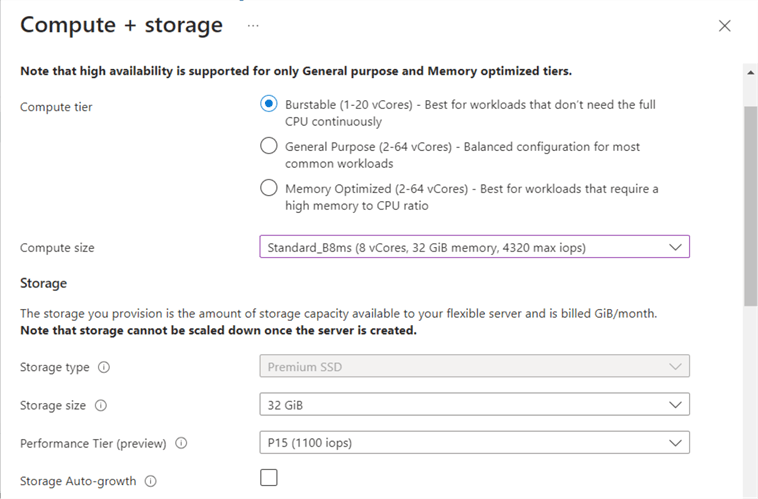

A development server is considered a burstable tier. A small or medium workload is a general-purpose tier. A large workload is a memory optimized tier. Once we pick the computing tier, we can choose the size of the virtual machine as well as the configuration of the premium disks. Note: The IOPS for each component are listed below.

Now that we have chosen our computing tier and storage size, we can talk about redundancy and backups.

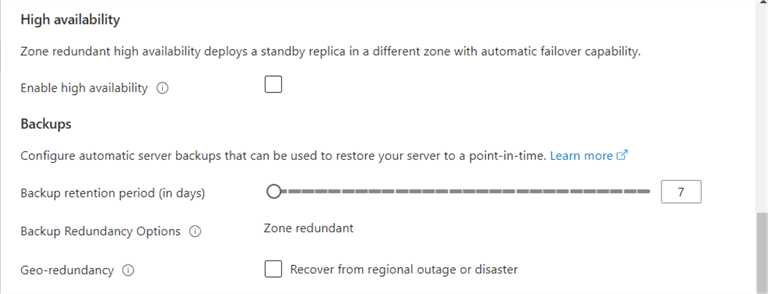

Other basic options are high availability, backup retention, and geo-redundancy. Some options are not available for development workloads.

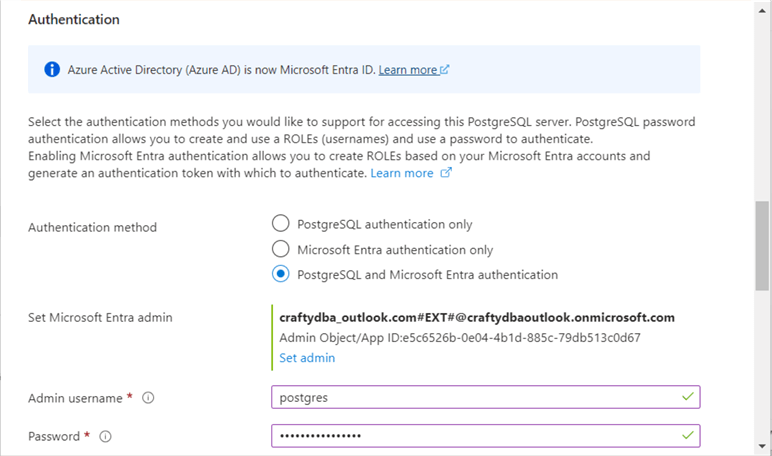

It is not surprising that the flexible server offers a mixed authentication mode. Not only can we specify an Active Directory Administrator ([email protected]), but we can also create a standard super user named postgres.

The estimated monthly cost is displayed to the far right on the basics screen. Note that the virtual machine's computing power accounts for the lion's share of the total cost. To save money, companies should create an automation job to start and stop the service for non-production environments. Keeping the lower environments up for only 8 hours a day can reduce the computing cost from $397.12 to $132.37 monthly.

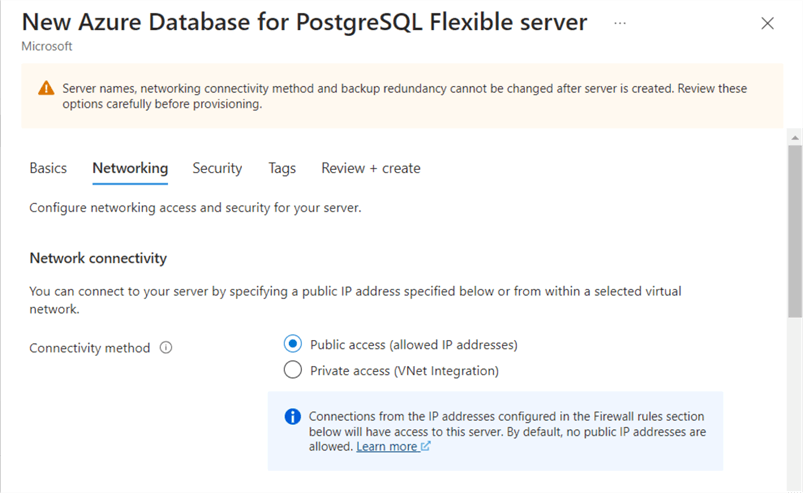

There are two ways to set up networking for the flexible server. The first way involves public internet access and firewall rules. The second way requires virtual network (V-NET) injection. For companies with sensitive data, investigate setting up a V-NET with a jump server.

Today, we are going to use the public access connectivity method.

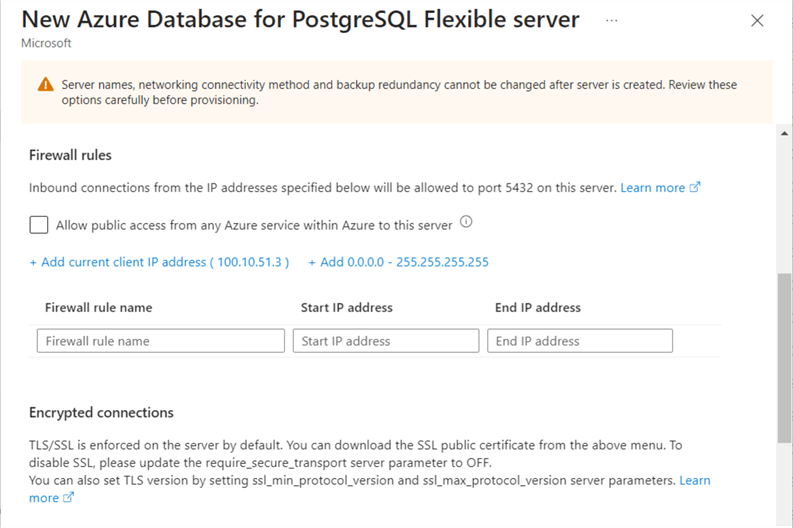

The firewall rules screen is shown below. It is very similar to what we have done with the Azure SQL database. I leave it as a task for you to enter your remote machine information later. Additionally, there is a check box to allow all Azure Services, such as Azure Data Factory, access to this database server.

The bottom of the screen discusses how SSL is required for remote connections. I found that the client does not check the certificate by default. However, you can download the root certificate using this information: Encrypted connectivity using Transport Layer Security in Azure Database for PostgreSQL - Flexible Server. Make sure you set the sslmode and sslrootcert parameters in pgAdmin to enforce an SSL connection.

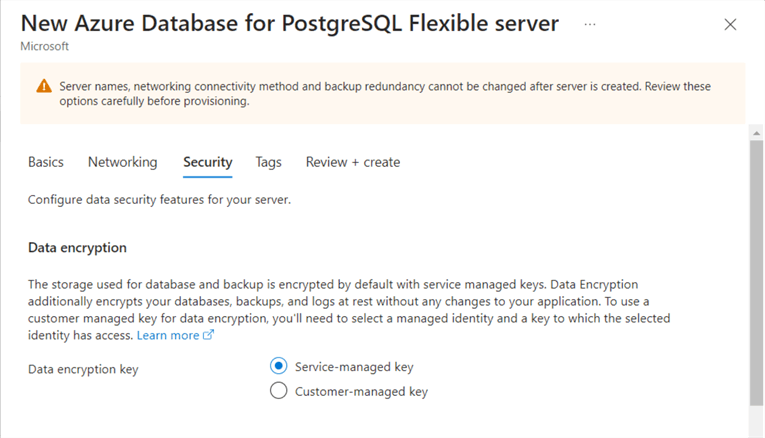

Some companies require the data and backup files to be encrypted with a user-defined key. Since this is only a development server, I will use a service-managed key.

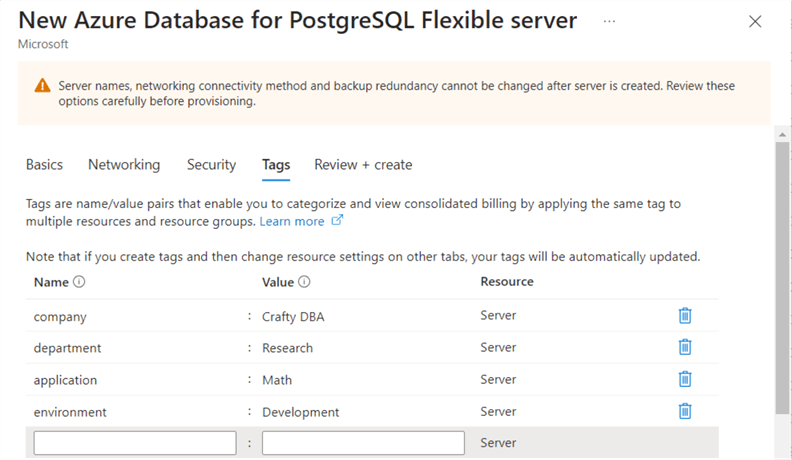

Using tags is very important for organizations that want to charge back OPEX costs incurred by the IT department to the business lines. See the image below for sample information entered for this deployment.

Most services in Azure have a settings review screen before you deploy them, and this one is no different.

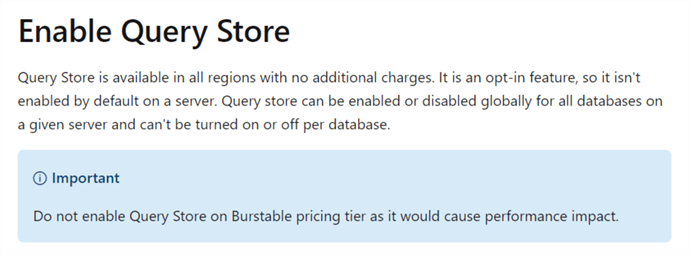

I hoped to showcase the query store in this article, but a non-development tier is required (see the image below). Instead, I will discuss how to configure the collection of query statistics in the future for both IaaS and PaaS offerings.

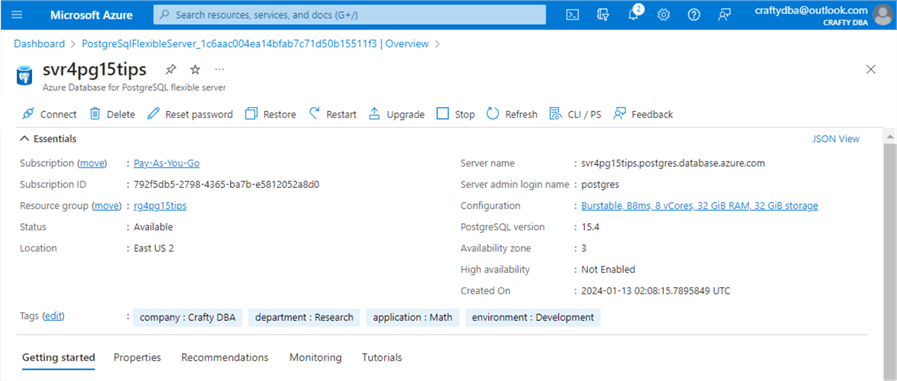

The image below shows that the service was successfully deployed to Azure. Note the server's name. We will need this information to set up the pgAdmin tool on our Azure Virtual Machine.

Remote Clients

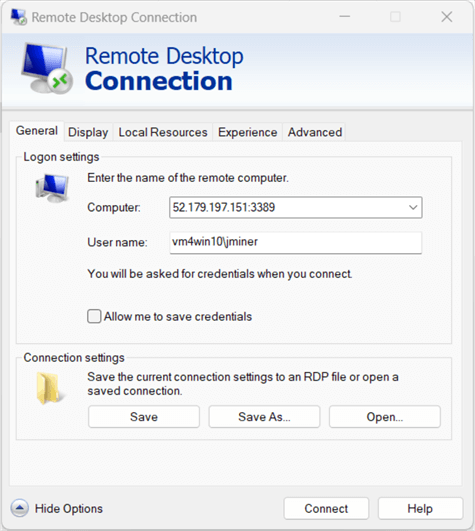

A database server without a remote client is like a ship stuck in a harbor- it cannot service the world. The screenshot below shows the IP address of the Azure Virtual Machine named vm4win10.

Go back to the firewall screen for the PostgreSQL flexible service and add this IP address now.

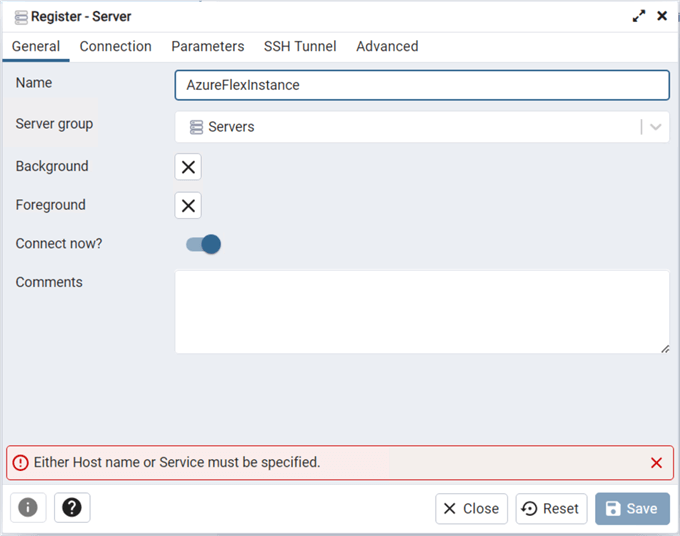

After using the remote desktop protocol to access the remote virtual machine, I want to configure the pgAdmin tool to access this new server. Right-click to start the server registration. On the general screen, we need to give a name to the server. In our case, I choose to name the connection "AzureFlexInstance".

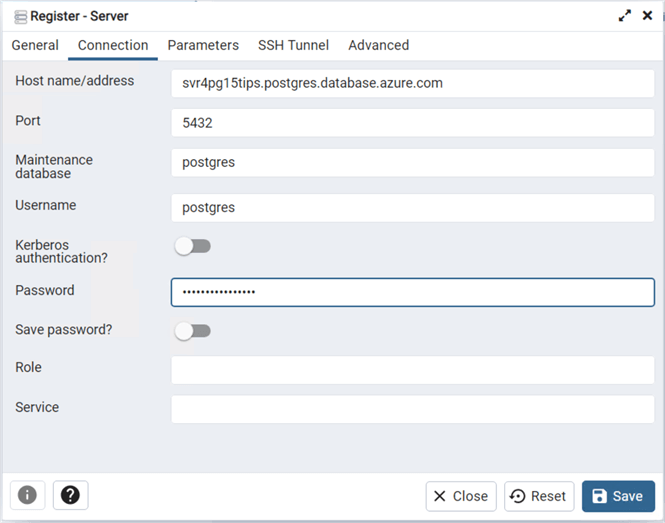

Since the service does not have a dedicated IP address, we need to supply the fully qualified name of Azure Database for PostgreSQL. The default port for PostgreSQL is 5432, the maintenance database is postgres, and the super user is postgres.

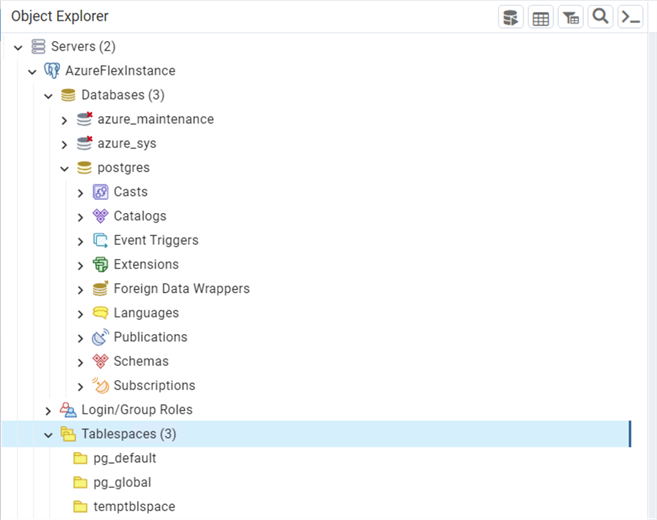

The image below shows a successful connection to the Azure service. Note: There are two Azure-specific databases:

- azure_maintenance: Used by Azure to provide managed services.

- azure_sys: Used by query store to collect information.

Both databases should not be modified since they are used by the Azure Service.

Now that we have deployed and configured Azure SQL Database for PostgreSQL flexible server, we can deploy our benchmark database.

Install Benchmark Database

My prior article, Convert a Database Schema from SQL Server to PostgreSQL, covered the steps necessary to migrate our math database from SQL Server to PostgreSQL. We will manually deploy that script to Azure SQL Database for PostgreSQL with minor changes. First, we do not have any control over tablespaces since the disks are managed by the service. The tablespace code was removed from the PaaS script.

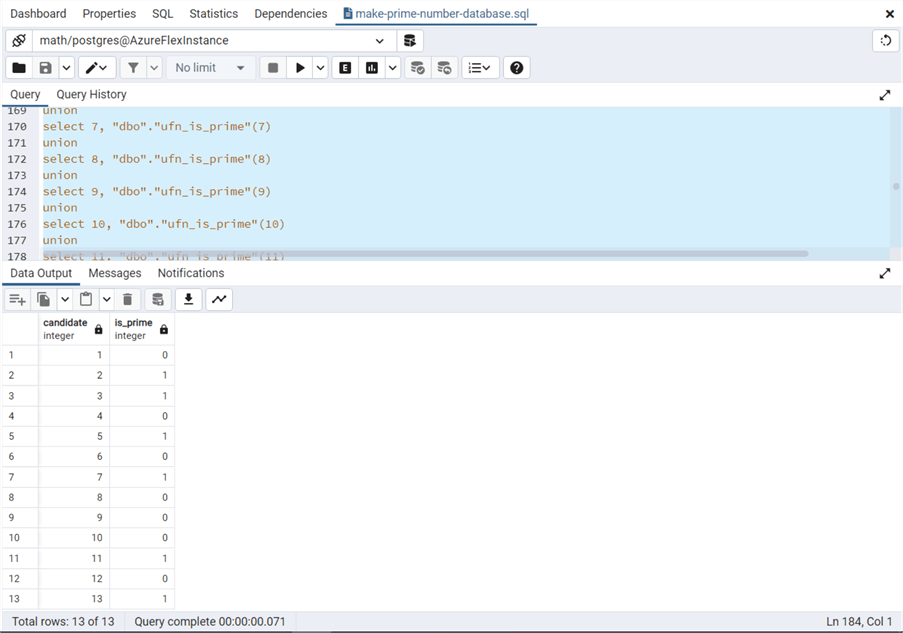

The image below shows a sample call to the ufn_is_prime function. The call determines which of the numbers from 1 to 13 are prime.

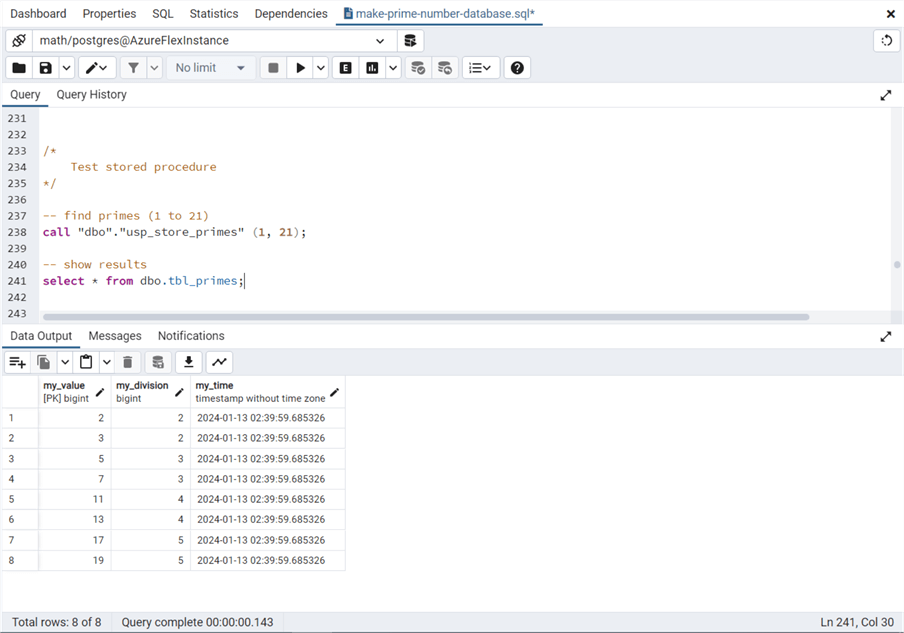

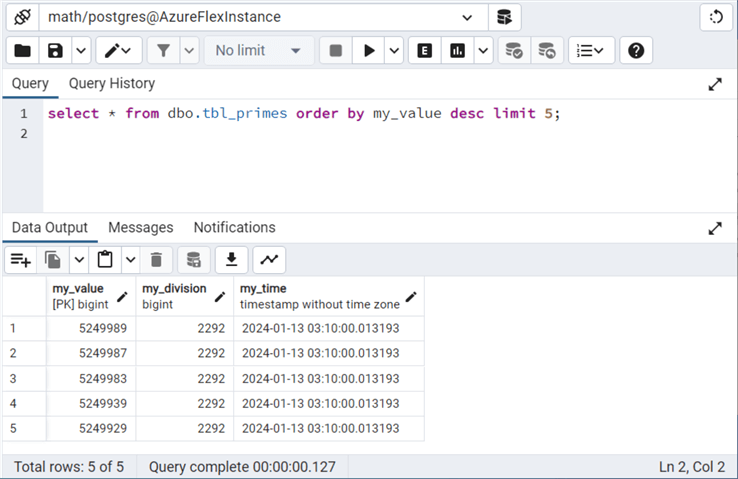

The image below shows a sample call to the usp_store_primes function. It searches the numbers 1 to 21 for prime numbers. A simple SELECT statement shows eight numbers were stored in the table named tbl_primes.

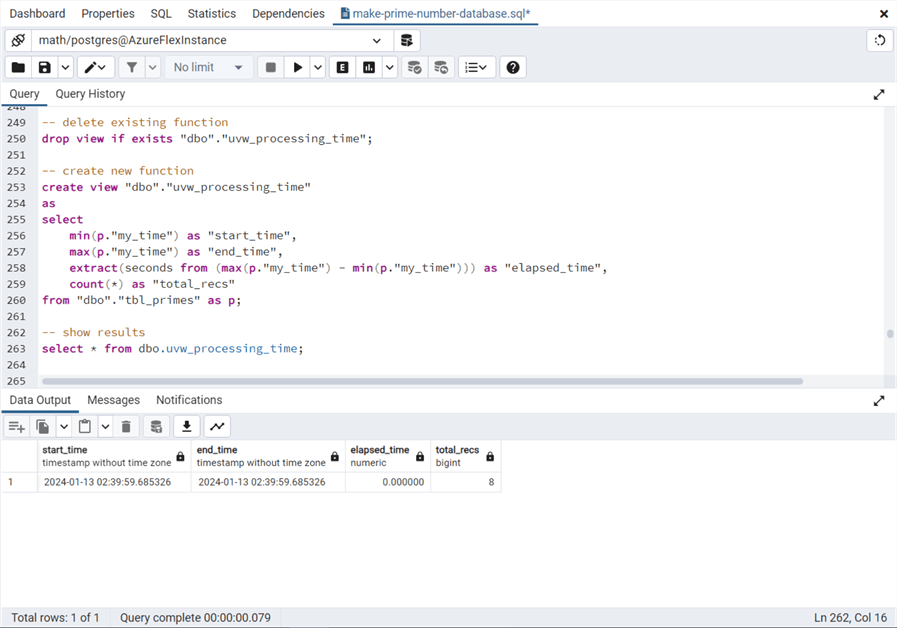

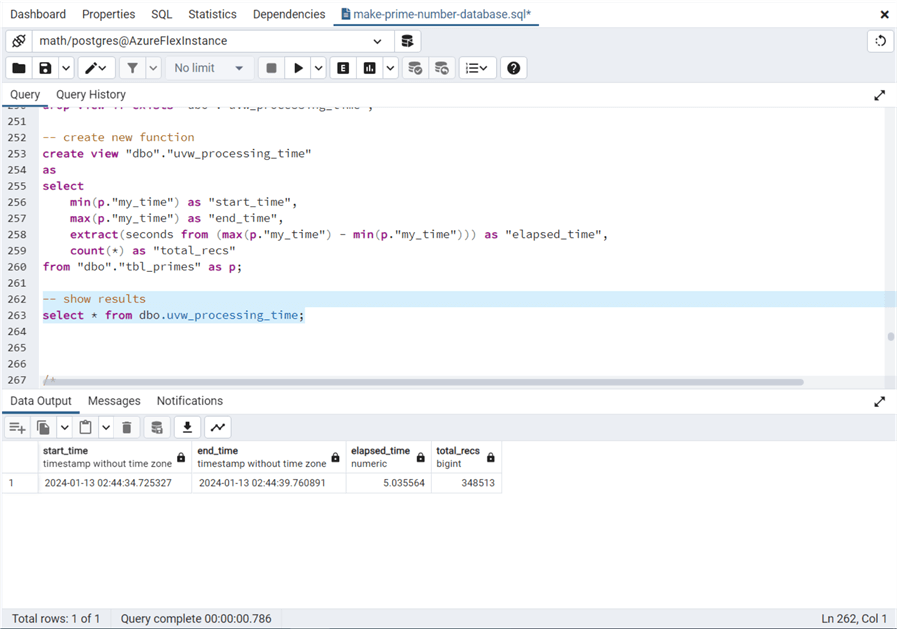

The view named uvw_processing_time is shown below. The SELECT statement that uses the view indicates that eight prime numbers were found quickly.

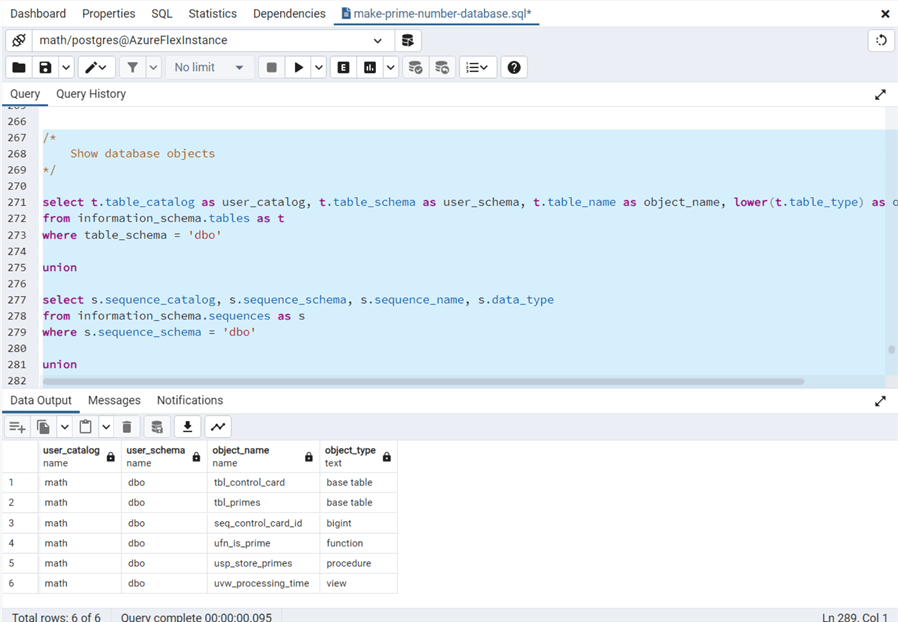

The information schema is available for users to query the PostgreSQL catalog for database objects. The image below shows six objects making up the math database schema.

Now, we are ready to run the benchmark test.

Execute Benchmark Test

Since the tables already contain some data, run the following statements to reset the environment for a benchmark test. It truncates the two tables, resets the sequence, and enters the first record into the control card table.

/*

Reset benchmark test

*/

truncate table "dbo"."tbl_control_card";

truncate table "dbo"."tbl_primes";

alter sequence dbo.seq_control_card_id restart with 1;

insert into "dbo"."tbl_control_card" values (default, 'Starting Point', default);

The run command file executes the call-psql command file 20 times in parallel. The asynchronous programs calculate the prime numbers from 1 to 5 million. A simple query of the view shows that the total execution time is around 5 seconds.

This is faster than the IaaS deployment completed in the past. At the end of this article, I will compare costs and timings for both offerings.

Job Scheduler

My prior article on deploying and configuring a PostgreSQL database on a Windows operating system used the pgAgent service. However, we do not have access to configure the AKS container. How do we schedule jobs within this service?

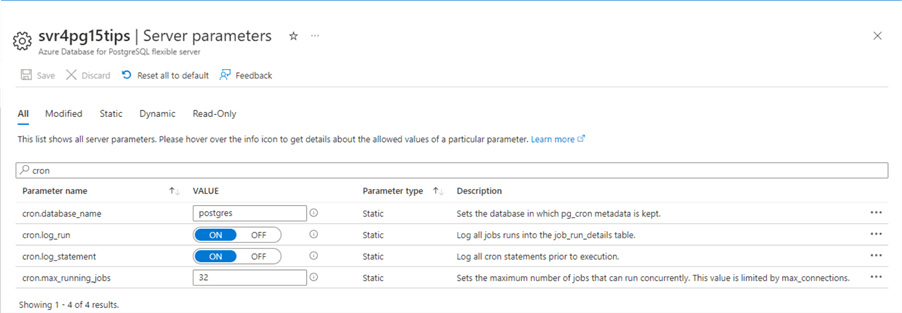

Do not fret. The server parameters section of the Azure Portal shows the default configuration of the Azure SQL Database for PostgreSQL flexible server. The Microsoft product team has already configured the pg_cron extension on the service. See the GitHub repository for details.

The following table shows the objects available in the cron schema:

| Object Type | Schema Name | Object Name | Description |

|---|---|---|---|

| Table | cron | job | A list of jobs for the flexible server. |

| Table | cron | job_run_details | Details about executed jobs |

| Function | cron | schedule_in_database | Create a new job for a user database. |

| Function | cron | schedule | Create a new job for postgres database. |

| Function | cron | unschedule | Drop an existing job. |

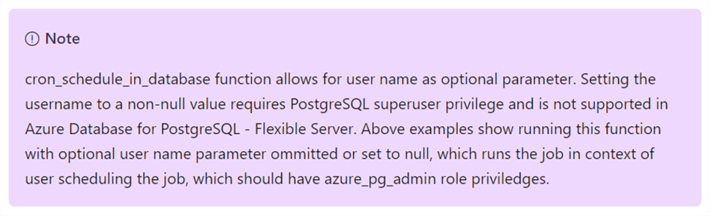

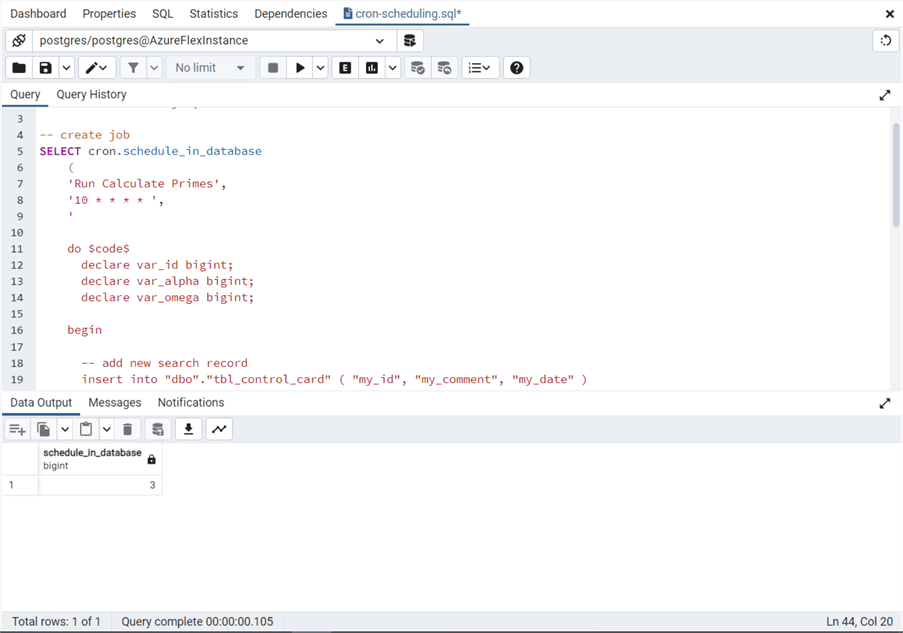

I am going to skip the cron.schedule function since the cron. schedule_in_database allows the execution of code on any database. Note: This function is not in the GitHub fork. The image below shows a job to calculate the next primes given a 250K search block from the control card.

The following is a list of parameters that can be passed to the function:

- job name – What is the name of the job

- job schedule – This is a cron style mask used to describe the schedule

- command code – A text block containing the code to execute

- database name – Which database to execute the SQL code on

- database user – This is not supported by flexible server

- enable job – This is a boolean flag.

The snippet below was taken from Microsoft documentation. It is best to set the user name to NULL.

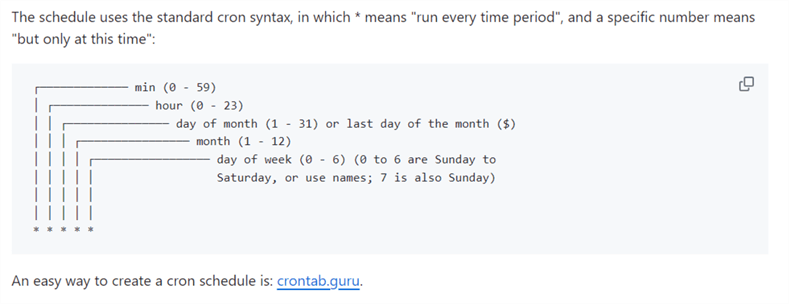

The screenshot was taken from the GitHub repository for pg_cron. I like using crontab.guru to create my schedules.

First, I had to name the statement block ($$) for the code to work. Also, you need to escape single quotes in your code since it exists inside a text block. The return value of the function call is an index (primary key) to the job named "Run Calculate Primes".

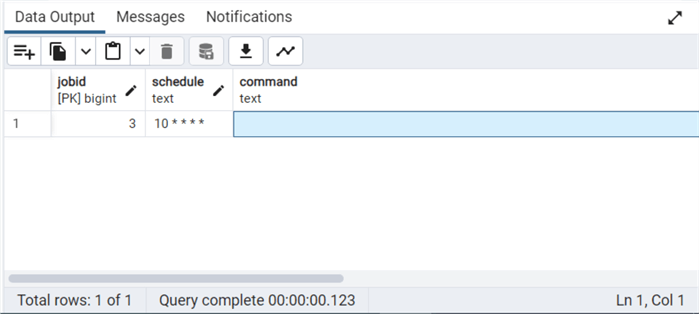

The output below was obtained by querying the cron.jobs table. The command text is multiline, and one must highlight the text block to view the data. This is the third job shown by the jobid; the other two jobs have been dropped.

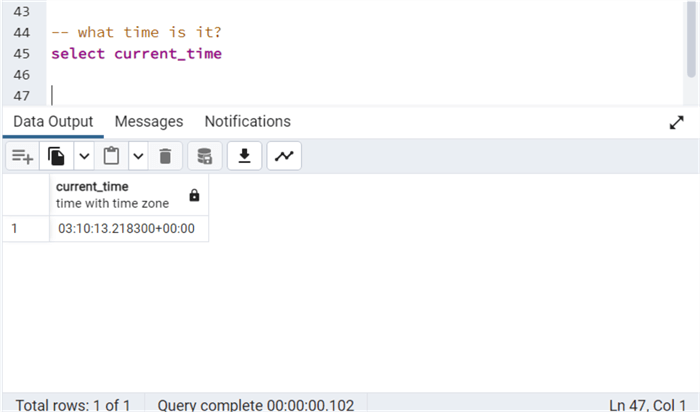

The above job is scheduled to run every hour at 10 minutes past the hour. The current time has just passed the scheduled time.

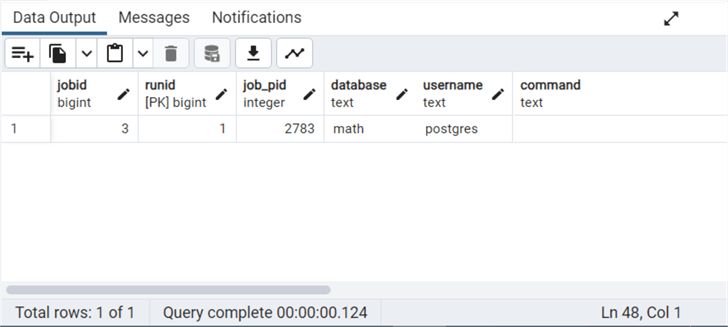

The cron.job_run_details table shows that this is the first run since runid is equal to 1.

Remember, we manually ran a test that calculated the prime numbers from 1 to 5 million. This new scheduled job execution will calculate prime numbers between 5.00 M and 5.25 M. Please see the details below. Each time the job executes, the next 250 K numbers are searched for prime numbers.

Use the cron.unscheduled function to remove the job if you want.

Saving Money

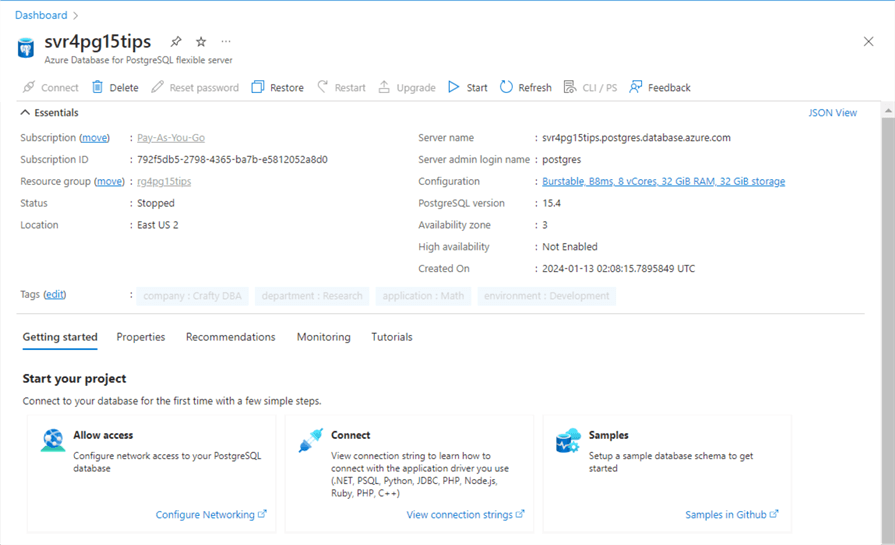

One nice feature of the Azure SQL Database for PostgreSQL is that the service can be stopped. In a stopped state, we only pay for storage, the local SSD (database files), and the remote SSD (backup files). This can be a considerable savings. The image below shows our svr4pg15tips server in a stopped state.

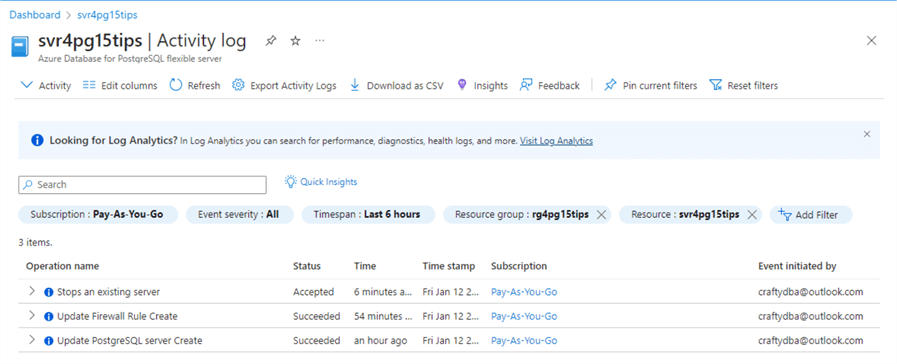

Auditing what happens to the database service is a business requirement for many organizations. If we look at the activity log in the Azure Portal, we can see the following actions have been executed on the service: create server, update firewall rule, and stop server.

Service Comparison

The main difference between the two service configurations (IaaS vs. PaaS) is the operating system on which the database engine is installed. One is on a Windows operating system, and the other is on a Linux operating system.

| Test No | Description | Configuration | OS | Compute | Cores | Time |

|---|---|---|---|---|---|---|

| 1 | IaaS – Virtual Machine | POSTGRES 15.5 | Windows Server 2022 | 8 V-CPUs, 32 GB memory | 8 | 8 |

| 2 | PaaS – AKS Container | POSTGRES 15.4 | Linux – Ubuntu 18.04 | 8 V-CPUs, 32 GB memory | 8 | 5 |

Another key difference is the speed of the storage device. For the IaaS deployment, we are limited to 500 IOPS but have a large 127 GB disk. On the other hand, the PaaS offering has a small 32 GB disk with 1100 IOPS.

| Test No | Description | Storage | IOPS | Time |

|---|---|---|---|---|

| 1 | IaaS – Virtual Machine | 127 GB | 500 | 8 |

| 2 | PaaS – Virtual Machine | 32 GB | 1100 | 5 |

The last table compares the overall cost of the two different deployments.

| Test No | Description | Type | IOPS | Monthly Cost |

|---|---|---|---|---|

| 1 | IaaS – Virtual Machine | Compute | DS8 v5 | $328.50 |

| Storage | 128 GB | $17.92 | ||

| 2 | PaaS – AKS Container | Compute | B8 MS | $397.12 |

| Storage | 32 GB | $3.68 | ||

| Performance | IOPS | $25.76 |

The IaaS deployment assumes that you are renting a Windows operating system license. To reduce costs further, we could install PostgreSQL on a Linux server, which is open-source and has no licensing charges. The same virtual machine using Linux costs only $280.32, a savings of $48.18 per month. We could use those savings to increase the storage tier's performance. Also, the B8 MS-sized Linux Virtual Machine costs only $243.09, which is even more savings.

To recap, the first deployment type (IaaS) costs $346.42, and the second deployment type (PaaS) costs $426.56. The benchmark test showed a 37.5 % decrease in overall execution time, and I am pretty sure the OS and IOPS were responsible for the speedup.

Summary

Today's article focused on deploying Azure SQL Database for PostgreSQL, the flexible server offering. Note: The single server offer is deprecated and will be terminated in March 2025. The Azure Portal allows a system designer to enter settings for the following topics: basic settings, network settings, security settings, and tagging information.

The flexible server offering has three performance tiers: development (burstable), small to medium workloads (general purpose), and large workloads (memory optimized). The ability to adjust the IOPS for storage at a cost is a new feature currently in preview. In the past, the larger storage size equated to the higher IOPS.

The math database, which is used for benchmarking, was deployed to the IaaS deployment. We found that the total execution time of the test decreased by 37.5%. It is an educated guess that the Linux Operating System and Higher Disk IOPS caused this increase in performance. The pg_cron extension is automatically installed on the flexible server offering. Unlike the pgAgent extension, the setup of this job scheduler is relatively easy.

I hope you enjoyed reading the article. Enclosed is the zip file with all the code covered in this article.

Next Steps

- How to use temporary tables in PostgreSQL

- Using materialized views with large datasets in PostgreSQL

- Different ways to create and call functions in PostgreSQL.

- Main differences between functions and procedures in PostgreSQL.

- How to perform PostgreSQL database CI/CD with Liquid Base

- Audit and/or prevent unwanted actions in PostgreSQL.

- Implementing full-text search with PostgreSQL

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2024-02-29