By: Koen Verbeeck

Overview

Now we know how to create database objects, it’s time to get some data into Snowflake. In this part of the tutorial, we’ll take a look into the COPY INTO statement, which is able to load data quickly and efficiently into a table. The COPY INTO statement can read various file formats, such as CSV, XML, JSON, AVRO, ORC and Parquet.

Create a Stage

The COPY INTO statement will read data from files stored inside a cloud location. This location can be an internal stage of table or an external stage, such as Azure Blob Storage. Let’s create a stage pointing to a blob container. In the top menu, choose databases.

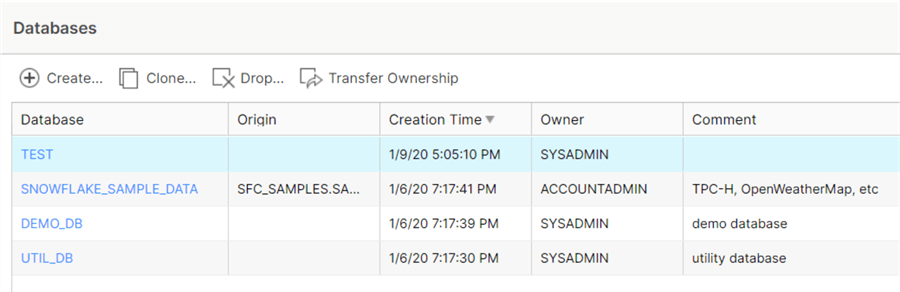

In the list of databases, choose the database for which you want to create a stage.

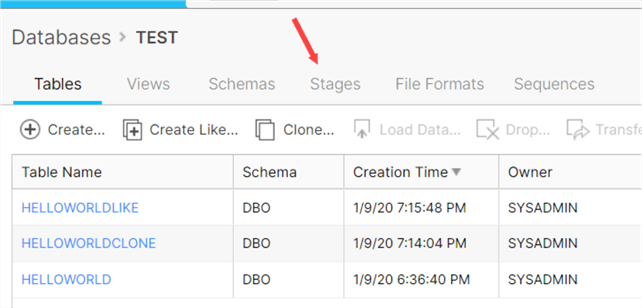

Next, click on the Stages tab.

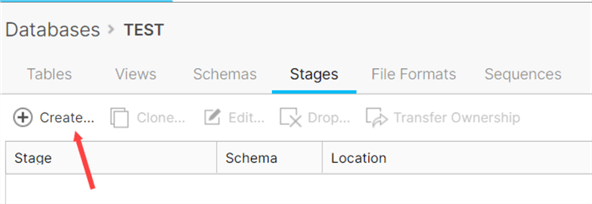

Click on Create… to create a new stage.

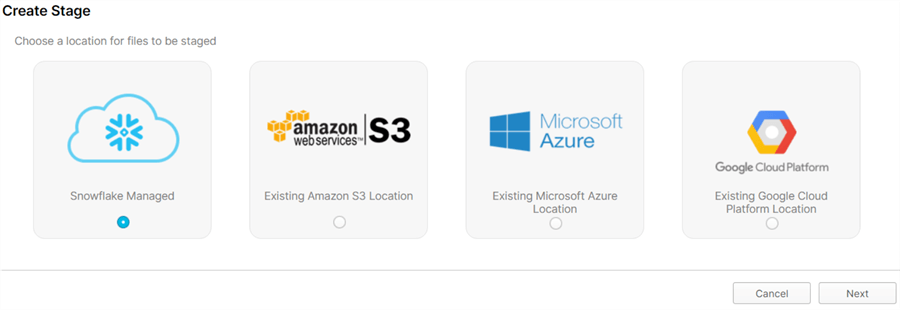

From the dialog, choose Microsoft Azure as the staging location:

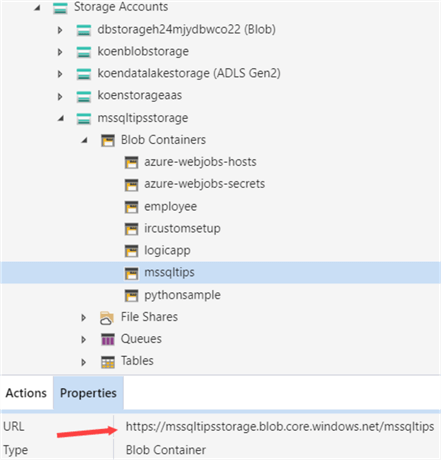

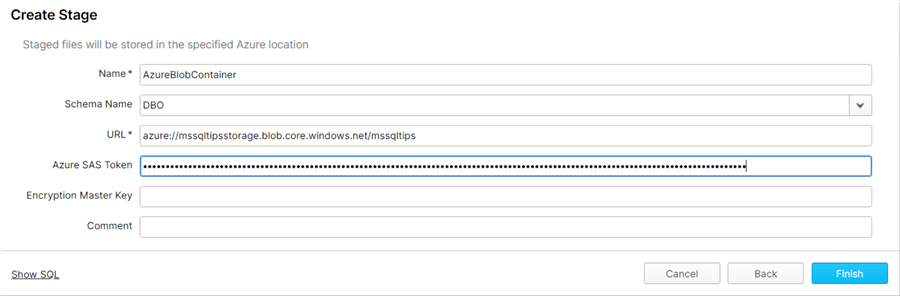

To create the stage, we need the URL of the container and a SAS token. Both can be retrieved using Azure Storage Explorer. When selecting the blob container, you can see the URL in the properties:

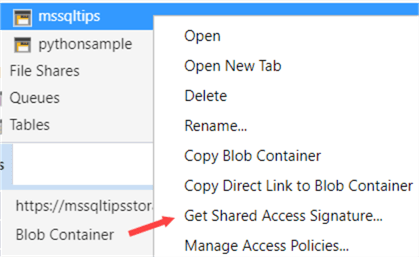

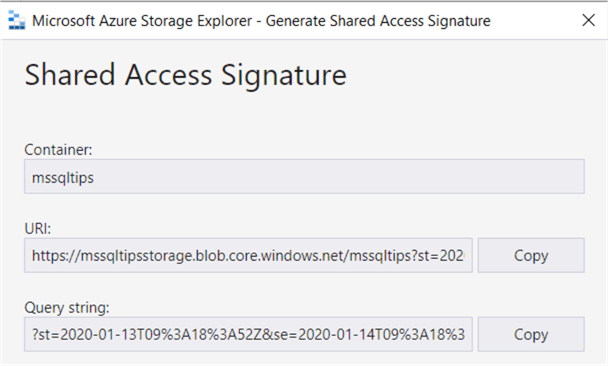

Right-click on the container and select Get Shared Access Signature.

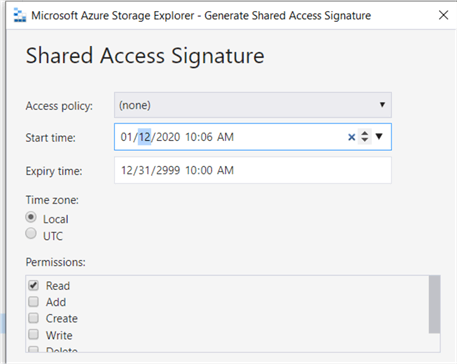

Select a date for into the future and choose the appropriate permissions:

The SAS token is the Query string. Copy it and paste it into the Snowflake dialog.

If you want to see the DDL needed to create the stage using SQL, click on the Show SQL link at the bottom.

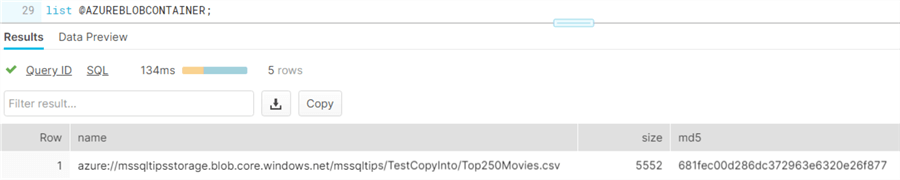

Once the stage is created, you can quickly list all files in it using the LIST command:

Load Data with COPY INTO

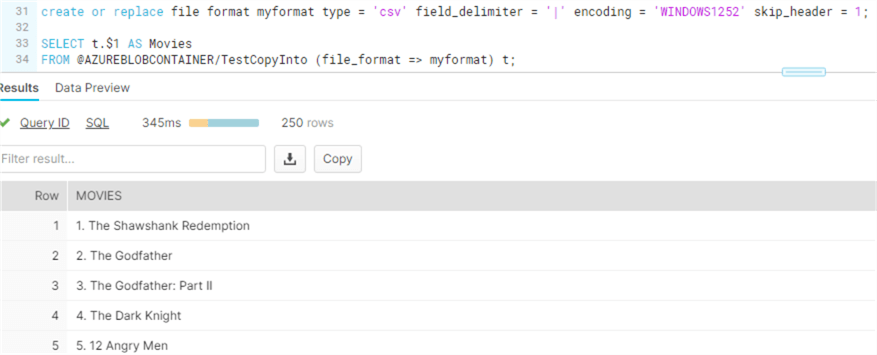

In this example, we’ll load a simple csv file from the stage we created in the previous paragraph. We can verify the data by using a SELECT statement:

The file format is needed to specify the configuration of the csv file, so Snowflake knows how to read it. Notice we didn’t specify a file name, but only a directory (TestCopyInto). This means Snowflake will try to read any file it finds in the directory and its subdirectories. The COPY INTO statement becomes:

COPY INTO dbo.Movies FROM @AZUREBLOBCONTAINER/TestCopyInto file_format = (format_name = myformat) pattern='.*[.]csv';

Keep in mind the destination table has to already exists before running COPY INTO. If everything went successful, you’ll see a line for each file load, together with the number of rows parsed and how many errors were encountered.

The COPY INTO is a powerful and flexible statement. It can also read compressed files (such as gzip compression). To get optimal performance, you can split very large files into files of about 100MB compressed. The COPY into statement will load these parallel into the destination table.

Alternatives

Are there other options to get data into Snowflake? Let’s take a look at some alternatives:

- Any existing ETL tool should be able to write to Snowflake. There are ODBC and JDBC connectors available. Keep in mind Snowflake is a data warehouse solution, not an OLTP database. If the ETL tool writes data row by row, it’s going to be extremely slow. Batches are preferred, or maybe staging the data in gzipped flat files and then loading them into Snowflake using the COPY INTO statement.

- There are also .NET and Python libraries available for working with Snowflake. There’s even a Kafka connector.

- You can use the Snowflake command line tool for uploading data to a stage.

- The Snowpipe feature allows you to load streaming data (in the form of micro-batches) into Snowflake.

- Although not (yet) officially supported in Azure Data Factory, you can load data into Azure Blob Storage using ADF, then run a COPY INTO statement to pull the data into the table. Check out the tip Create an Azure Function to execute SQL on a Snowflake Database - Part 2 to learn more about executing SQL statements for Snowflake from ADF. Official support should come soon.

Additional Information

- To learn more about SAS tokens in Azure blob storage, check out the documentation.

- The COPY INTO statement has many optional parameters and can handle multiple file formats. Check out the docs to see what’s possible.

- You can use COPY INTO also in the opposite direction: to dump data from a table into a stage.

- You can combine the COPY INTO statement with a SELECT statement (using the $ sign to access individual columns as shown earlier). This can be useful when you want a different column order, leave out columns or if you want to apply some transformations such as checking for white space or null values.