By: Koen Verbeeck

Overview

Writing SELECT statements is exactly the same in Snowflake as in any other database. In fact, if you have been writing T-SQL for some time you’ll have no problems adjusting to the SQL dialect of Snowflake, since they overlap greatly. In this part of the tutorial, I use the StackOverflow sample database, which can be downloaded as XML files. The advantage is the size of the database is big enough to run some heavy queries and the data itself is realistic. If you’re interested, there are back-ups specific for SQL Server.

Querying Data

Let’s start with this query:

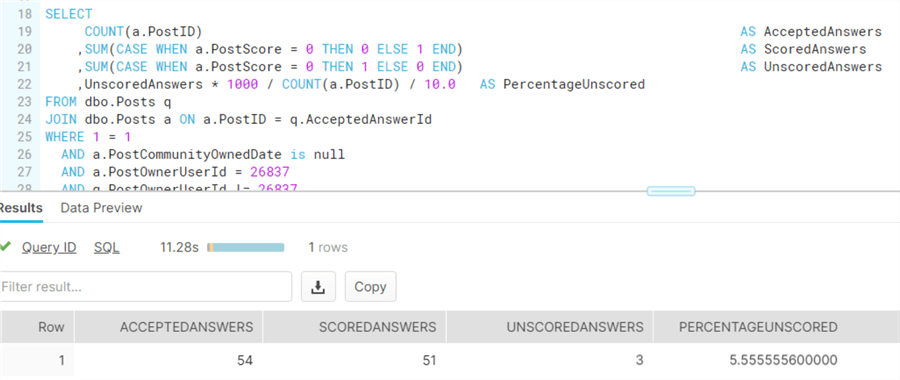

SELECT COUNT(a.PostID) AS AcceptedAnswers ,SUM(CASE WHEN a.PostScore = 0 THEN 0 ELSE 1 END) AS ScoredAnswers ,SUM(CASE WHEN a.PostScore = 0 THEN 1 ELSE 0 END) AS UnscoredAnswers ,UnscoredAnswers * 1000 / COUNT(a.PostID) / 10.0 AS PercentageUnscored FROM dbo.Posts q JOIN dbo.Posts a ON a.PostID = q.AcceptedAnswerId WHERE 1 = 1 AND a.PostCommunityOwnedDate is null AND a.PostOwnerUserId = 26837 AND q.PostOwnerUserId != 26837 AND a.PostTypeId = 2 -- answer ;

The Posts table holds about 40 million rows and is 20GB in size. On a extra small warehouse (make sure to read the part of the tutorial about warehouses) this query runs for about 8 to 11 seconds.

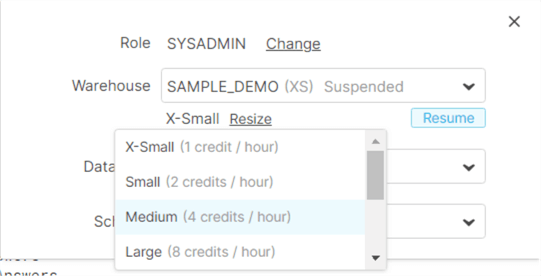

Now let’s scale the warehouse up to size medium.

Scaling the warehouse takes a couple of seconds. Now, before we run our query again, we need to disable caching, which can be done with the following statement:

ALTER SESSION SET USE_CACHED_RESULT = FALSE;

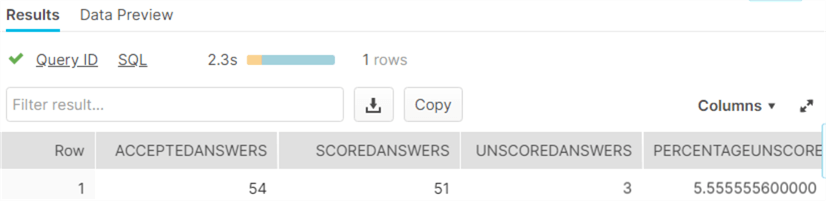

When we run the same query again, we can see the results are returned significantly faster:

This brings us the one of the main advantages of Snowflake: scaling warehouses is super-fast and transparent for the end user. A bigger warehouse will finish queries faster than smaller warehouses most of the time. The reverse correlation between warehouse size and query duration is not 100% linear, but generally you can expect that if you double the warehouse size, the execution time is roughly halved. Of course, this stops at some point in time, there will always be some bottleneck, like I/O for example, that cannot be mitigated by just throwing more processing power at it.

The great part of having a faster query because of a bigger warehouse is that you pay about the same amount of money. Suppose you have a long running query of 10 minutes on a small warehouse (2 credits per hour). This means you pay 10/60 * 2 credits or 1/3 of a credit. Suppose you run the same query on a medium warehouse (4 credits per hour) and the query finishes in 5 minutes. The calculation now becomes: 5/60 * 4 credits, which is again 1/3 of a credit!

It makes sense to test out how big of a warehouse you’ll use to run your data pipelines. You can scale up and your queries go faster, meaning your ETL window gets smaller for the same amount of money. At some point this trend will stop and you have found your optimal warehouse size. Keep in mind you pay for the amount of time a warehouse is running, not for the number of queries, so take advantage of parallelism as much as you can.

Performance Tuning

Performance is great out of the box, but if a query is still slow, you do not have many options unfortunately. There are no indexes you can create on a table. Your options are:

- Scale up the warehouse. If it doesn’t help, you need to resort to one of the other options.

- Re-write your query. Maybe you did an inefficient join or perhaps you can use window functions to speed things up.

- Split up the query in multiple parts and store each intermediate result in a (temporary) table. This is sometimes the only option if the query plan becomes too complex for Snowflake to handle.

- You can also change the clustering of a table. Typically used for larger tables (over 1 TB) but it might also help for smaller tables. This option should be your last resort.

Additional Information

- If you don’t have the Stack Overflow database, you can write your own query on the provided sample databases in Snowflake. The tables in the TPCH_SF10000 schema in the Snowflake_Sample_Database database are up to 1.7TB in size, so you can use those for performance testing.