By: Maria Zakourdaev | Updated: 2022-06-16 | Comments | Related: > Other Database Platforms

Problem

"MemoryError: Unable to allocate …" is the last thing that you want to see during data loading into Pandas Dataframe. I get this error here and there and my first reaction usually is "I need a bigger machine with more memory!". But I will show you in this tip how to avoid unnecessary expenses.

Solution

Python has become a very popular tool for data exploration, processing, and analysis. One of the most useful packages to work with data is Pandas. Pandas stand for "Python Data Analysis Library". Pandas library is a great tool if we want to read, visualize, or aggregate small datasets in memory by using objects, called Dataframes. Dataframes are very similar to the database table. Here you can read more on Pandas Dataframes.

When we are using the Pandas library on top of the Spark cluster engine, we are not able to utilize all cluster nodes. We only have access to the Spark driver node. Here you can read more on Spark cluster architecture. If the data file is big, we might get an out of memory error. Pandas Dataframes are being processed totally in memory; their size is limited to the driver node memory. Pandas Dataframes usually work very fast if there is enough memory and scalability is not an issue. Therefore, we are limited in the amount of data that we can work with.

Reproduce Out of Memory Error

Let me reproduce the memory errors.

I will load the New York yellow taxi trip data. This is one of the most popular test datasets available online. Recently, all data files have been converted into parquet format. I will first use old CSV files available in the backup location: 'https://s3.amazonaws.com/nyc-tlc/csv_backup/yellow_tripdata_<year>-<month>.csv'. We will compare to parquet files later and will discuss the parquet format benefits.

I want to see how many months of data I can load into pandas Dataframe using Synapse Analytics Spark cluster of Memory Optimized nodes (4vCores and 32GB each). This cluster can scale from 3 to 10 nodes but, as I have already mentioned, we will not be able to utilize the Spark cluster scalability feature. In this link, you will find all Synapse Spark Pool configurations available.

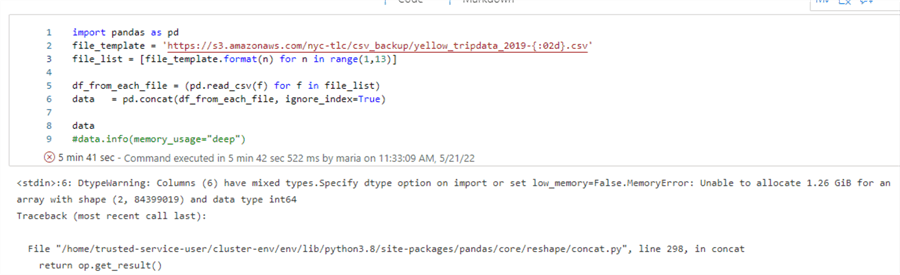

If I want to load a full year, 12 months of data, I will get an "Out Of Memory" error:

MemoryError: Unable to allocate 3.39 GiB for an array with shape (8, 56842912) and data type float64

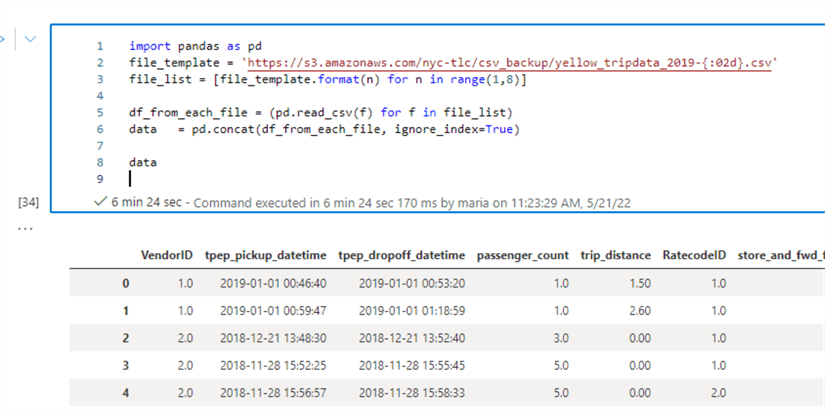

First, I will try to load the first 7 months of 2019, the below script will print out all of the generated file locations:

Loading files in a loop, concatenating the result into a single result set using Pandas read_csv function:

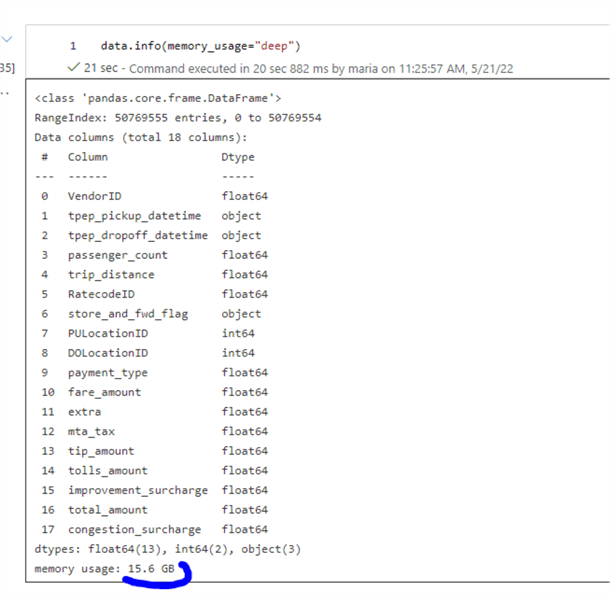

Great, I have enough memory and have loaded 7 months of data into a single Pandas Dataframe. Let me check how much memory the Dataframe uses: 15.6 GB , more than 50 million rows.

My goal was to load 12 months, a full year of data, the issues is that this goes over 20GB of data and causes an out of memory error as shown below.

This error occurs on the Spark Driver, which is a JAVA process that executes our code. The driver is only supposed to be an orchestrator and use less memory that executors.

Here is the script I used to load the data.

file_template = 'https://s3.amazonaws.com/nyc-tlc/csv_backup/yellow_tripdata_2019-{:02d}.csv'

file_list = [file_template.format(n) for n in range(1,9)]

file_list

import pandas as pd

df_from_each_file = (pd.read_csv(f) for f in file_list)

data = pd.concat(df_from_each_file, ignore_index=True)

data.head()

data.info(memory_usage="deep")

Let's discuss some ways to work around the memory issues.

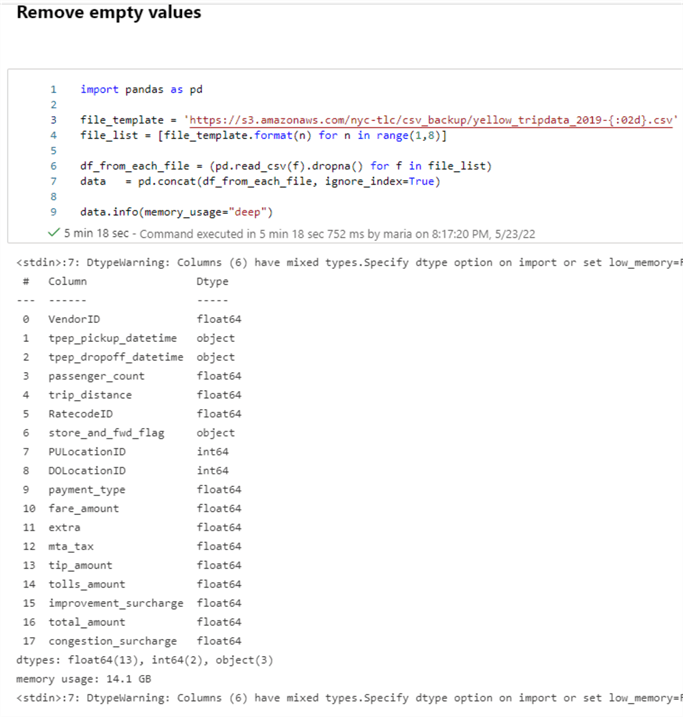

Tip 1. Remove empty rows or missing values

There are sometimes empty values (also referred as NA) and if we remove them, we will use less memory. We can use the function dropna().

After loading the same 7 months of data and removing the empty values, my dataset is now 14.1GB – already a bit less than the original 15.6GB.

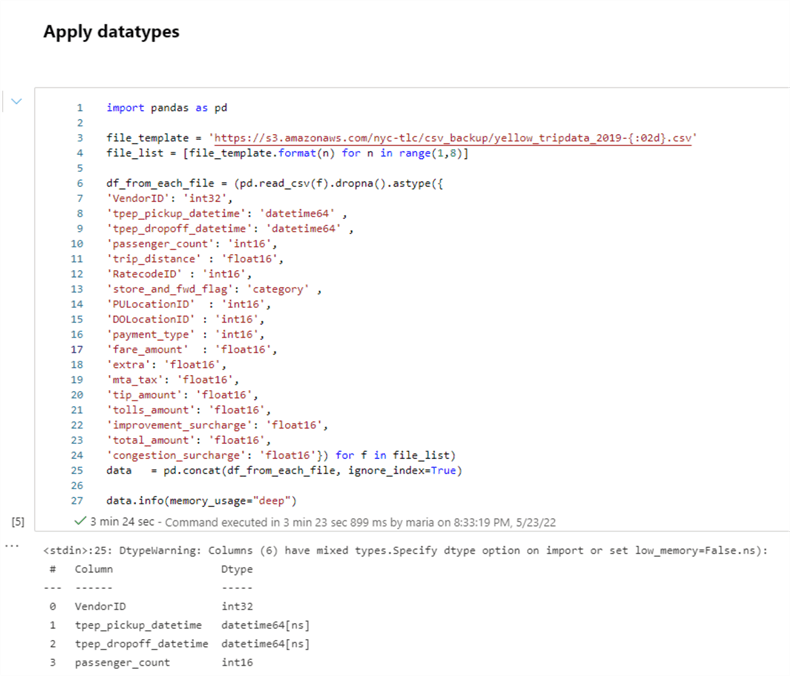

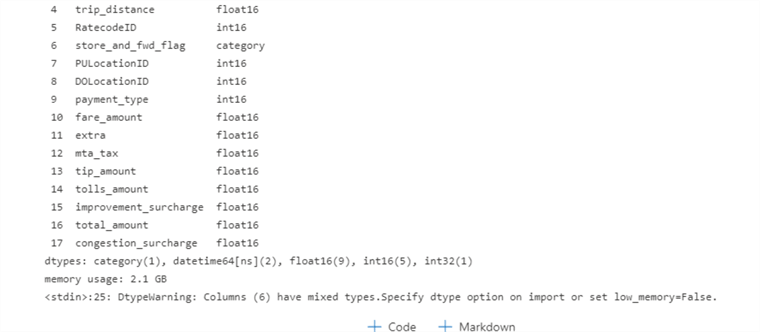

Tip 2. Providing dataset Data types

Guessing data types is a very time and memory-demanding process. We can reduce loading time and Dataframe memory usage by providing column data types and using smaller data types. Smaller data types take less memory.

See below the main data types that we can use to lower memory usage as well as use unsigned subtypes if there are no negative values.

| Data type | Memory usage | Range |

|---|---|---|

| int8 / uint8 | 1 byte | -128/127 or 0/255 |

| bool | 1 byte | true or false |

| float16 / int16 / uint16 | 2 bytes | -32768 and 32767 / Half precision float / 0/65535 |

| float32 / int32 / uint32 | 4 bytes | -2147483648 and 2147483647 / Single precision float / 0 to 4294967295 |

| float64 / int64 / uint64 | 8 bytes | -9223372036854775808 to 9223372036854775807 / Double precision float / 0 to 18446744073709551615 |

| object | Will vary | Stored text or columns with mixed data types |

| category | Will vary | For string values, we could use a category type that can be used for columns with a finite number of possible values |

| datetime64 | 8 bytes | Date and time data |

I will convert datatypes in every chunk of data before merging the chunks.

My dataset is now only 2.1GB! And it took much less time to load it: 3 min vs 5 min

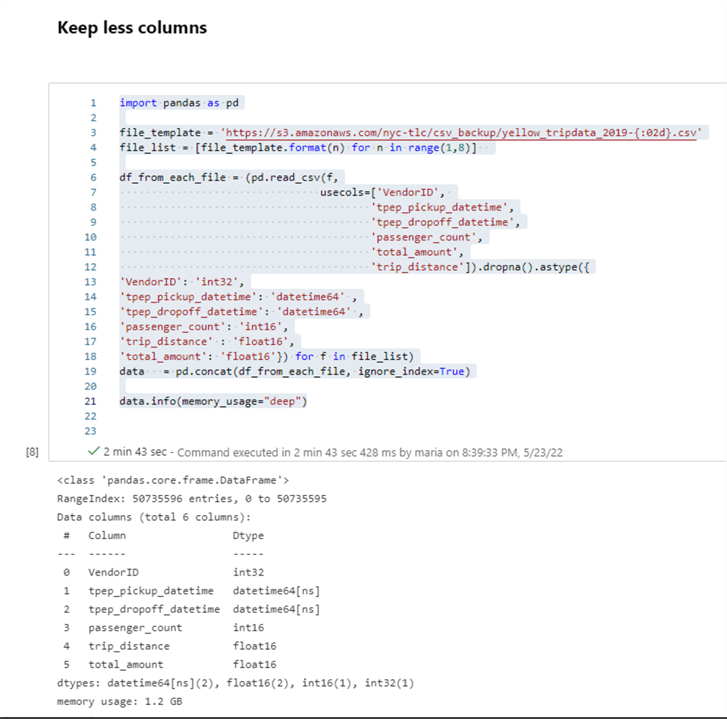

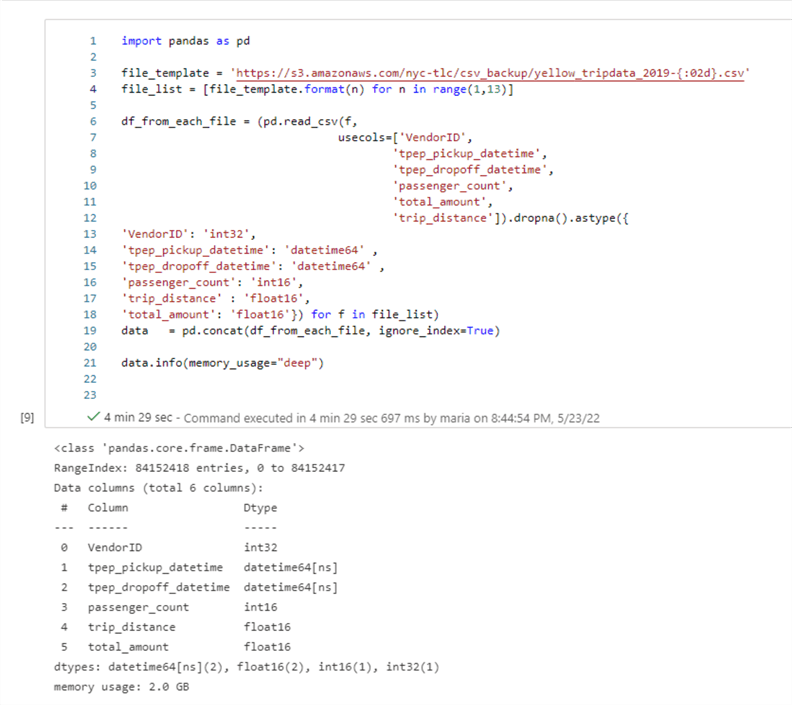

Tip 3. Limiting the number of dataset columns

To use even less memory, we can load only part of columns into the Dataframe.

In this example I will combine all 3 methods mentioned above, limiting a number of columns, providing datatypes, and dropping missing values, my 7-month dataset is now only 1.2 GB:

Now I can load 12 months of data without getting an out of memory error and it's using only 2 GB of memory!

Tip 4. Use parquet data type instead of csv

As I have mentioned above, the Yellow Taxi dataset is now available in the parquet format. Parquet format is easier to work with because it has the columns metadata which helps python to define proper datatypes. Parquet files organize data in columns while CSV files organize data in rows. Columnar storage allows much better compression so Parquet data files need less storage, 1 TB of CSV files can be converted into 100GB of parquet files – which can be a huge money saver when cloud storage is used. This also means that scanning parquet files are much faster than CSV files – easier to skip unneeded columns quickly and aggregations will run faster.

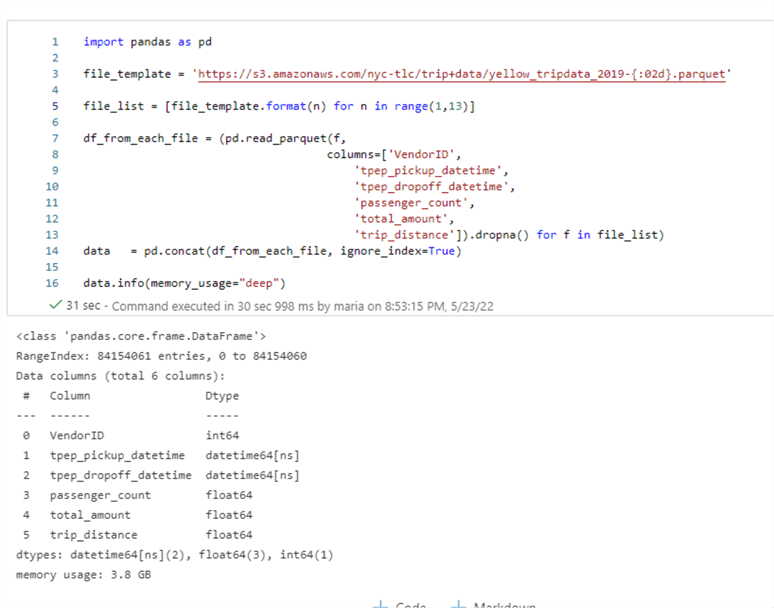

Let's look how fast I can load 12 months of data stored in parquet files.

Loaded 84 million rows in 30 sec vs 4 min loading from CSV format.

Should you have more tips on how to reduce the memory footprint of the Pandas datasets, drop a comment below.

Next Steps

- Check out these related items:

About the author

Maria Zakourdaev has been working with SQL Server for more than 20 years. She is also managing other database technologies such as MySQL, PostgreSQL, Redis, RedShift, CouchBase and ElasticSearch.

Maria Zakourdaev has been working with SQL Server for more than 20 years. She is also managing other database technologies such as MySQL, PostgreSQL, Redis, RedShift, CouchBase and ElasticSearch.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-06-16