By: Koen Verbeeck

Problem

You want to incorporate transactions in your SQL Server Integration Services (SSIS) packages. For example, you insert data into a table with a data flow, and after the data flow has finished, an update statement is issued against the same table. If this update statement fails, you want to roll back the data inserted by the data flow. Normally you can enable the built-in transaction model of SSIS, which relies on the Distributed Transaction Coordinator (MSDTC), but for certain reasons you cannot use MSDTC in your environment. How can you still enable transactions in your SSIS package? Check out this tip to learn more.

Solution

Luckily the solution is pretty straightforward: for every task using a database connection, we can use database transactions. Let's find out how to make this work.

Sample SSIS package

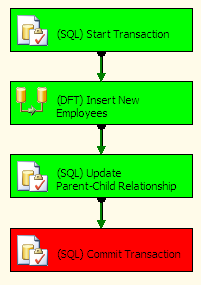

As an example for this tip, we have a very basic SSIS package. It reads employee data from a source and stores the new employees in the employee dimension.

After the data flow has finished, the Execute SQL Task issues an update statement against the dimension that updates the parent-child relationship. In other words, it sets the foreign key of each child row so that it points to the primary key of the parent row. The same update statement also handles employees whose manager has changed.
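The update described above could look roughly like the following sketch. The table and column names (`dbo.DimEmployee`, `EmployeeKey`, `ParentEmployeeKey`, `EmployeeCode`, `ManagerCode`) are assumptions made for illustration; the actual dimension in the package may be modeled differently.

```sql
-- Hypothetical names: dbo.DimEmployee, EmployeeKey, ParentEmployeeKey,
-- EmployeeCode and ManagerCode are assumptions, not taken from the package.
UPDATE child
SET    ParentEmployeeKey = parent.EmployeeKey      -- child row points to its manager's key
FROM   dbo.DimEmployee AS child
JOIN   dbo.DimEmployee AS parent
       ON parent.EmployeeCode = child.ManagerCode  -- look up the manager by business code
WHERE  child.ParentEmployeeKey IS NULL             -- new employees without a parent key yet
   OR  child.ParentEmployeeKey <> parent.EmployeeKey;  -- employees whose manager changed
```

Because of the inner join, rows without a manager code are left untouched in this sketch.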

The requirement is that when the update statement fails, the inserts from the data flow are rolled back. Since we cannot use MSDTC, we need an alternative.

Using SQL Server Database Transactions

If the tasks that need transactions all use the same connection manager, we can leverage database transactions to achieve our requirement. Let's start by putting an Execute SQL Task before the data flow and connecting the two with a Success precedence constraint (the green arrow). This new task uses the same connection manager as the data flow and the Execute SQL Task that runs the update. It contains one simple SQL statement:

BEGIN TRANSACTION;

This statement starts an explicit transaction on the current connection. After the Execute SQL Task that runs the update statement, we add another Execute SQL Task and again connect the two with a Success precedence constraint. This task also uses the same connection manager as the other tasks. It has the following SQL statement:

COMMIT TRANSACTION;

This statement will commit the explicit transaction on the current connection if the Execute SQL Task with the update statement has finished successfully.

But what if the update statement fails? In that case, the SSIS package will fail because an error occurred and it will stop running. Any remaining open connections will be closed when the SSIS package is shut down. The SQL Server database engine will roll back the transaction when the connection is closed. If you prefer to have more control over when the transaction is rolled back, another Execute SQL Task can be added to the control flow. This task uses a Failure precedence constraint (the red arrow). This task explicitly rolls back the transaction with the following statement:

ROLLBACK TRANSACTION;

This approach is more robust and it allows you to add some possible clean-up code.
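Before wiring the tasks together, the commit and rollback logic can be rehearsed in a single session, for example in SSMS. The sketch below is not the package itself: a temporary table `#DimEmployee` (a hypothetical stand-in) replaces the dimension, an INSERT stands in for the data flow, and THROW simulates a failing update. It assumes SQL Server 2012 or later, since THROW is not available in earlier versions.

```sql
-- Rehearsal in one session; #DimEmployee and its columns are hypothetical stand-ins.
CREATE TABLE #DimEmployee
(
    EmployeeKey       INT IDENTITY(1,1) PRIMARY KEY,
    EmployeeCode      VARCHAR(10) NOT NULL,
    ManagerCode       VARCHAR(10) NULL,
    ParentEmployeeKey INT NULL
);

BEGIN TRY
    BEGIN TRANSACTION;                 -- what the first Execute SQL Task sends

    -- Stands in for the data flow that inserts new employees.
    INSERT INTO #DimEmployee (EmployeeCode, ManagerCode)
    VALUES ('E1', NULL), ('E2', 'E1');

    -- Stands in for the update task.
    UPDATE child
    SET    ParentEmployeeKey = parent.EmployeeKey
    FROM   #DimEmployee AS child
    JOIN   #DimEmployee AS parent ON parent.EmployeeCode = child.ManagerCode;

    -- Simulated failure of the update step; remove this line to test the success path.
    THROW 50001, 'Simulated failure in the update step.', 1;

    COMMIT TRANSACTION;                -- only reached when nothing failed
END TRY
BEGIN CATCH
    IF @@TRANCOUNT > 0
        ROLLBACK TRANSACTION;          -- what the task on the Failure path sends
    -- Clean-up or logging statements could be added here.
END CATCH;

SELECT COUNT(*) AS RowsAfterRun FROM #DimEmployee;

DROP TABLE #DimEmployee;
```

With the THROW in place, the final SELECT should report zero rows, because the inserted rows are rolled back together with the update. The `IF @@TRANCOUNT > 0` guard is a defensive choice that avoids a second error when no transaction is open at the time the rollback runs.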

SQL Server Integration Services Connection Manager

However, when we run the package, the following error is returned by SSIS:

Executing the query "COMMIT TRAN;" failed with the following error: "The COMMIT TRANSACTION request has no corresponding BEGIN TRANSACTION."

This is caused by the fact that the connection manager actually doesn't create one single connection. When running the package, multiple connections are created for the different tasks. You can look at the connection manager as if it is a little factory, creating connections for all the tasks. This means that when SSIS reaches the final Execute SQL Task, the connection from the first Execute SQL Task - the one that starts the transaction - is already closed. Hence, SQL Server doesn't find the corresponding BEGIN TRAN, so it returns an error.

How can we solve this issue? The connection manager has a very useful property called RetainSameConnection. By default this property is set to false. When we change it to true, we tell the connection manager to create only a single connection which will be shared amongst the different tasks.
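One way to check that the setting took effect is to temporarily run a small diagnostic query in the Execute SQL Tasks and log its result. This is a sketch and not part of the original package: with RetainSameConnection set to true, every task that runs after the BEGIN TRANSACTION would be expected to report one open transaction.

```sql
-- Diagnostic only; remove it once the package behaves as expected.
SELECT @@SPID      AS SessionId,         -- session id of the connection this task received
       @@TRANCOUNT AS OpenTransactions;  -- 0 means this connection has no open transaction
```

The session id alone may not be conclusive, since a pooled connection can be reused under the same id. The transaction count is the more telling value.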

The package will now complete successfully. If an error occurs, everything is rolled back.
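After a failed run, it can be reassuring to confirm that no transaction was left open on the server. The query below is a sketch based on the dynamic management views of SQL Server; it assumes you have the VIEW SERVER STATE permission, and which sessions show up depends on what else is running on the instance.

```sql
-- Lists user sessions that currently hold an open transaction.
-- Requires VIEW SERVER STATE permission (an assumption about your rights on the server).
SELECT s.session_id,
       s.login_name,
       s.program_name,
       t.transaction_id,
       a.transaction_begin_time
FROM   sys.dm_tran_session_transactions AS t
JOIN   sys.dm_exec_sessions             AS s ON s.session_id     = t.session_id
JOIN   sys.dm_tran_active_transactions  AS a ON a.transaction_id = t.transaction_id
WHERE  s.is_user_process = 1;
```

If the package has finished and its connection is closed, no row for that session should remain in the result.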

Next Steps

- Try implementing this tip in your own packages. Try to provoke an error and check if everything is rolled back as you expect.

- If you're interested in parent-child relationships: Parent-Child Dimensions

- For more information about database transactions and how SQL Server handles them: Transactions (Database Engine)

- Read this tip about implementing transactions in SSIS using MSDTC: How To Use Transactions in SQL Server Integration Services SSIS

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

This author pledges the content of this article is based on professional experience and not AI generated.