By: Fikrat Azizov

Problem

Have you ever wondered how many statistics objects there are in a database? Unlike other objects, statistics are often created and managed by SQL Server automatically, and unless database performance starts to crawl or statistics update jobs exceed maintenance windows, we probably don't think much about them.

Some DBAs prefer to let SQL Server handle statistics updates (by keeping the 'auto update statistics' option on), while others prefer to update them manually or to combine automatic and manual updates. Regardless of the approach you choose, as your database grows, statistics maintenance will require more time and resources, and at some point you'll face questions like: "Are all my statistics useful? Which statistics are used frequently? Which ones could possibly be dropped?"

In this tip I'll describe different approaches to maintain statistics and show how you can use the data from your servers for intelligent statistics updates.

Solution

The Importance of SQL Server Statistics

Statistics are one of the most important factors that impact SQL Server performance. The topic is well covered on the web, and I've provided links to some articles at the end of this tip.

For those of you who are not familiar with statistics, here are some key points that will help with understanding this topic:

- SQL Server statistics contain statistical information about the distribution of data in your tables and are always specific to certain columns. The SQL Server Query Optimizer uses this information when creating execution plans to arrive at optimal performance.

- There are two types of statistics: index statistics and column statistics. Index statistics are created automatically when we create indexes, and they cover the columns of that index. Column statistics can be created manually, or automatically if the AUTO_CREATE_STATISTICS option of your database is turned on. This setting is on by default, which allows SQL Server to create useful statistics when required, and it's recommended to keep it on.

- Statistics can be updated manually (by running scripts or maintenance plans) or automatically, if the AUTO_UPDATE_STATISTICS setting of your database is turned on (it is on by default).

- If the AUTO_UPDATE_STATISTICS property of a database is turned on, statistics are updated when certain conditions are met, such as the number of data changes since the last update exceeding a threshold.

- Statistics updates can be done on either the full or a partial data set. For a partial update (sampling), you can specify the percentage of rows to sample. If the sampling parameter is not specified, SQL Server by default chooses a full scan for small tables and sampling for large tables.

- If a full statistics update is not feasible for certain tables (say, due to size constraints), it's preferable to use as high a sampling rate as possible when updating statistics. This helps ensure that statistics reflect an accurate, up-to-date distribution of your data and leads to better performance. I've seen cases where SQL Server created sub-optimal execution plans because certain statistics had been only partially updated (sampled).
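The settings and statistics types listed above can be checked from the catalog views. Here is a minimal sketch, assuming it is run in the database you want to inspect. It classifies statistics with the auto_created and user_created flags of sys.stats and treats everything else as index statistics, which is my assumption rather than an official definition.

```sql
-- Check the automatic statistics settings of the current database.
SELECT name,
       is_auto_create_stats_on,
       is_auto_update_stats_on
FROM sys.databases
WHERE database_id = DB_ID();

-- Count statistics objects on user tables by how they were created.
SELECT t.stats_type,
       COUNT(*) AS stats_count
FROM sys.stats AS s
CROSS APPLY (SELECT CASE
                        WHEN s.auto_created = 1 THEN 'Column statistics (auto-created)'
                        WHEN s.user_created = 1 THEN 'Column statistics (user-created)'
                        ELSE 'Index statistics'   -- assumption: neither flag set
                    END AS stats_type) AS t
WHERE OBJECTPROPERTY(s.object_id, 'IsUserTable') = 1
GROUP BY t.stats_type
ORDER BY stats_count DESC;
```

The second result set gives a quick answer to the "how many statistics objects are there?" question from the beginning of this tip.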

SQL Server Statistics Update Challenges

As mentioned above, there are a few approaches for updating statistics and unfortunately each one has its challenges.

Here are some of these challenges:

Automatic Update SQL Server Statistics

With the AUTO_UPDATE_STATISTICS setting on, statistics are updated when:

- A table with 500 rows or fewer has had 500 or more modifications since the last update

- A table with more than 500 rows has had modifications equal to 500 rows + 20% of the table size since the last update

This logic may work well for relatively small tables; however, a large table may need a very large number of changes before its statistics are marked for update. As you can see from this article, with SQL Server 2008 R2 SP1 and later versions you can lower this threshold by enabling Trace Flag 2371. This helps query plans use more accurate statistics and perform better. However, because statistics updates require large scans of the underlying tables, they may consume significant server resources and impact TempDB in some cases. Take a look at this article for more details about the process running in the background which may be impacting performance.
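To see how far each statistic is from the default threshold described above, you could compare the modification counter with the computed threshold. This is a sketch, assuming SQL Server 2008 R2 SP2 or SQL Server 2012 SP1 and later (where sys.dm_db_stats_properties is available) and the default behavior without Trace Flag 2371. The pct_of_threshold column is just an illustrative name.

```sql
-- Compare modifications since the last update with the default auto-update threshold.
SELECT OBJECT_SCHEMA_NAME(s.object_id) AS table_schema,
       OBJECT_NAME(s.object_id)        AS table_name,
       s.name                          AS stats_name,
       sp.rows,
       sp.modification_counter,
       th.default_threshold,
       CAST(100.0 * sp.modification_counter / th.default_threshold AS decimal(18, 1)) AS pct_of_threshold
FROM sys.stats AS s
CROSS APPLY sys.dm_db_stats_properties(s.object_id, s.stats_id) AS sp
CROSS APPLY (SELECT CASE
                        WHEN sp.rows <= 500 THEN 500
                        ELSE CAST(500 + 0.20 * sp.rows AS bigint)   -- 500 rows + 20% of the table
                    END AS default_threshold) AS th
WHERE OBJECTPROPERTY(s.object_id, 'IsUserTable') = 1
  AND sp.rows IS NOT NULL
ORDER BY sp.rows DESC;

-- To lower the threshold for large tables, the trace flag can be enabled globally.
-- Test on a non-production server first.
-- DBCC TRACEON (2371, -1);
```

For a table with 10 million rows the default threshold works out to 2,000,500 modifications, which shows why large tables can run on stale statistics for a long time.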

Manual Update

With this approach you have more control over the timing, sampling and intensity of statistics updates. However, you'd be restricted to the maintenance window, because running intensive statistics updates may seriously impact business applications. As your tables grow, some compromises need to be made to fit into the maintenance window, such as:

- Reducing the sampling, either by specifying a low sampling rate or by skipping the sampling parameter and letting SQL Server choose the rate. The problem with this approach is that statistics built from a low sample may not reflect the data distribution accurately, so the Query Optimizer may create sub-optimal execution plans, as I've mentioned above.

- Updating statistics for a limited number of tables in each session. There are a few popular criteria for choosing the best candidates: some DBAs use the STATS_DATE() function to get the last update date/time for each statistic, while others prefer the number of modifications to the underlying table columns since the last statistics update. You can get this number from the modification_counter column of the sys.dm_db_stats_properties dynamic management function, available in SQL Server 2008 R2 SP2, SQL Server 2012 SP1 and later.
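Both selection criteria can be combined in one query. The sketch below picks the most heavily modified statistics and builds an UPDATE STATISTICS command for each one. The @MinModifications and @SamplePercent values and the TOP (20) limit are hypothetical and should be tuned to your workload and maintenance window. The commands are only generated, not executed.

```sql
DECLARE @MinModifications bigint = 1000;  -- hypothetical cut-off
DECLARE @SamplePercent    int    = 50;    -- hypothetical sampling rate

SELECT TOP (20)
       OBJECT_SCHEMA_NAME(s.object_id)     AS table_schema,
       OBJECT_NAME(s.object_id)            AS table_name,
       s.name                              AS stats_name,
       STATS_DATE(s.object_id, s.stats_id) AS last_updated,
       sp.modification_counter,
       N'UPDATE STATISTICS '
         + QUOTENAME(OBJECT_SCHEMA_NAME(s.object_id)) + N'.'
         + QUOTENAME(OBJECT_NAME(s.object_id)) + N' '
         + QUOTENAME(s.name)
         + N' WITH SAMPLE ' + CAST(@SamplePercent AS nvarchar(3)) + N' PERCENT;' AS update_command
FROM sys.stats AS s
CROSS APPLY sys.dm_db_stats_properties(s.object_id, s.stats_id) AS sp
WHERE OBJECTPROPERTY(s.object_id, 'IsUserTable') = 1
  AND sp.modification_counter >= @MinModifications
ORDER BY sp.modification_counter DESC;
```

Replacing the SAMPLE clause with FULLSCAN in the generated command gives a full update for the tables where that fits into the window.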

There's no simple answer for the problems I've described above and these challenges grow as your database grows.

Are all my SQL Server statistics really useful?

The short answer is no, as I mentioned above. When the AUTO_CREATE_STATISTICS option is on, SQL Server creates statistics whenever it thinks they would benefit a query. Over time your database will accumulate many obsolete statistics, created long ago for single-use queries or for queries which are no longer in use. Unfortunately, it's not easy to find the usage frequency of existing statistics. So, some people suggest dropping all column statistics from time to time and letting SQL Server create new ones; see this article for more info. Although this seems to be a reasonable approach, some DBAs would hesitate to drop all statistics in a production environment, as this can temporarily impact query performance. So, the approach described below is based on real knowledge collected from your server.
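Before dropping anything, it helps to see what has accumulated. As a minimal sketch, the query below lists the auto-created column statistics on user tables in the current database and builds a DROP STATISTICS command for each one. The commands are produced for review only and nothing is executed.

```sql
-- List auto-created column statistics and generate (but do not run) DROP commands.
SELECT OBJECT_SCHEMA_NAME(s.object_id)     AS table_schema,
       OBJECT_NAME(s.object_id)            AS table_name,
       s.name                              AS stats_name,
       STATS_DATE(s.object_id, s.stats_id) AS last_updated,
       N'DROP STATISTICS '
         + QUOTENAME(OBJECT_SCHEMA_NAME(s.object_id)) + N'.'
         + QUOTENAME(OBJECT_NAME(s.object_id)) + N'.'
         + QUOTENAME(s.name) + N';'        AS drop_command
FROM sys.stats AS s
WHERE s.auto_created = 1
  AND OBJECTPROPERTY(s.object_id, 'IsUserTable') = 1
ORDER BY table_schema, table_name, stats_name;
```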

How to identify useful SQL Server statistics?

With SQL Server 2012 and later versions you can use Trace Flag 8666 to obtain some debugging information from the SQL Server plan cache, which includes the names of the statistics used within each execution plan; see this article for more information. So, if we collect execution plans for some period, we can get the usage frequency of statistics and make intelligent decisions on cleaning up obsolete statistics or maintaining useful ones.

In this previous tip, I discussed a simple query collection tool which could be used for statistics analysis purposes. Here is the link to download the stored procedure discussed below.

Here are the steps required to achieve this:

The stored procedure CollectQueryStats includes statistics information in the collected execution plans if the @vCollectStatsInfo parameter is set to 'True'. In that case it enables Trace Flag 8666 for the duration of the procedure, but only after checking that the server is running SQL Server 2012 or later. Please note that this flag is undocumented; I've used it with SQL Server 2012 and 2014 and didn't have any problems. Run the procedure with this setting to collect query statistics from your production server for a certain period.

Transfer the PerfStats database to a non-production server and enable the XML indexes by executing the following script on that server:

USE [PerfStats]
GO
ALTER INDEX [PXML_QueryPlans] ON [dbo].[QueryPlans] REBUILD
ALTER INDEX [IXML_QueryPlans_Path] ON [dbo].[QueryPlans] REBUILD
ALTER INDEX [IXML_QueryPlans_Value] ON [dbo].[QueryPlans] REBUILD
GO

Execute the query below to get the total number of times each statistic was used:

USE [PerfStats]
GO
;WITH XMLNAMESPACES
    (DEFAULT 'http://schemas.microsoft.com/sqlserver/2004/07/showplan')
, GetStatsCTE AS (
    -- One row per plan and statistic referenced in the Trace Flag 8666 debug info
    SELECT DISTINCT
        cp.SqlHandle,
        cp.PlanHash,
        cp.DbName,
        schnds.value('(@FieldValue)[1]', 'varchar(128)') AS Schema_Name,
        tblnds.value('(@FieldValue)[1]', 'varchar(128)') AS Table_Name,
        obj.value('(@FieldValue)[1]', 'varchar(128)') AS Stats_Name,
        cp.ExecutionCount
    FROM vQueryStats_Plans AS cp
    CROSS APPLY cp.queryplan.nodes('//Recompile/Field[@FieldName="wszTable"]') AS StatNodes(tblnds)
    CROSS APPLY tblnds.nodes('../Field[@FieldName="wszSchema"]') AS SchNodes(schnds)
    CROSS APPLY tblnds.nodes('../ModTrackingInfo') AS TblNodes(stnds)
    CROSS APPLY stnds.nodes('./Field[@FieldName="wszStatName"]') AS vidx(obj)
    -- Ignore very short queries
    WHERE MinElapsedTime_Msec > 50
)
-- Sum plan executions per statistic, excluding system objects
SELECT DbName, Schema_Name, Table_Name, Stats_Name, SUM(ExecutionCount) AS TotalScanCount
FROM GetStatsCTE
WHERE Schema_Name <> 'sys'
GROUP BY DbName, Schema_Name, Table_Name, Stats_Name

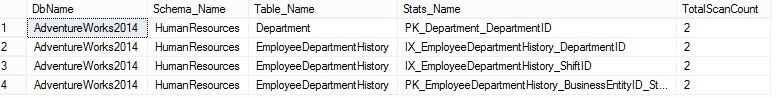

The image above shows what my results look like; from here we can see which statistics are being used and how often.
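The usage list can then be compared with the statistics that actually exist, to find candidates for cleanup. The sketch below rests on several assumptions: the output of the usage query has been copied into a staging table (here a hypothetical temp table named #UsedStats), the query runs in the source database on the server where the statistics live, and the names captured from the plans match the catalog names exactly. A statistic that is missing from the list was not seen during the collection period, which does not prove that it is never used.

```sql
-- Hypothetical staging table holding the output of the usage query.
CREATE TABLE #UsedStats (
    DbName         nvarchar(128) COLLATE DATABASE_DEFAULT NOT NULL,
    [Schema_Name]  nvarchar(128) COLLATE DATABASE_DEFAULT NOT NULL,
    Table_Name     nvarchar(128) COLLATE DATABASE_DEFAULT NOT NULL,
    Stats_Name     nvarchar(128) COLLATE DATABASE_DEFAULT NOT NULL,
    TotalScanCount bigint NOT NULL
);

-- Load the collected usage rows into #UsedStats here (for example, from an export file).

-- Auto-created statistics that never appeared in the collected plans.
SELECT OBJECT_SCHEMA_NAME(s.object_id)     AS table_schema,
       OBJECT_NAME(s.object_id)            AS table_name,
       s.name                              AS stats_name,
       STATS_DATE(s.object_id, s.stats_id) AS last_updated
FROM sys.stats AS s
LEFT JOIN #UsedStats AS u
       ON u.DbName        = DB_NAME()
      AND u.[Schema_Name] = OBJECT_SCHEMA_NAME(s.object_id)
      AND u.Table_Name    = OBJECT_NAME(s.object_id)
      AND u.Stats_Name    = s.name
WHERE s.auto_created = 1
  AND OBJECTPROPERTY(s.object_id, 'IsUserTable') = 1
  AND u.Stats_Name IS NULL
ORDER BY table_schema, table_name, stats_name;

DROP TABLE #UsedStats;
```

The longer the collection period, the more confidence you can have that the statistics returned by this query are really obsolete.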

Next Steps

- Read more about statistics in these articles:

- Read about statistics related performance problems in these articles:

About the author

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.

This author pledges the content of this article is based on professional experience and not AI generated.
