By: Simon Liew | Comments (2) | Related: > Compression

Problem

This tip introduces the new built-in COMPRESS and DECOMPRESS functions in SQL Server 2016.

Solution

Data compression was first introduced in SQL Server 2008 to allow compression of data inside a database. Row and page compression can be configured at the table, index, indexed view, or partition level. However, row and page compression does not work well on binary large objects (BLOB) such as text documents, images, and videos.

Starting in SQL Server 2016, two built-in functions for compression and decompression were introduced and available on any edition of SQL Server 2016.

- COMPRESS - Compresses binary or text using the GZIP algorithm. The result of the compression is a byte array of type varbinary(max).

- DECOMPRESS - Decompress binary using GZIP algorithm. The result of the compression is a byte array of type varbinary(max).

Use Case Example

A customer uses FILESTREAM and compresses archive files on the client (ZIP) using a third party compression utility before upload. The compression is an additional step prior to upload and prone to error. The data retrieval process involves manual decompression of the files. When the customer upgraded to SQL Server 2016, they modified their data upload and retrieval process to leverage the COMPRESS and DECOMPRESS function instead. This was a simple change, yet tremendously simplified the ETL process and support.

SQL Server Compress and Decompress Demonstration

The steps below demonstrate the process as mentioned in the use case above. Assuming FILESTREAM is already enabled on the SQL Server instance, the command below will create a database and a table for storing FILESTREAM data.

CREATE DATABASE [CompressTest]

CONTAINMENT = NONE

ON PRIMARY

( NAME = N'CompressTest', FILENAME = N'D:\SQLDATA\MSSQL13.MSSQLSERVER\MSSQL\DATA\CompressTest.mdf',

SIZE = 8192KB , FILEGROWTH = 65536KB ),

FILEGROUP [FSG] CONTAINS FILESTREAM

( NAME = N'FSG', FILENAME = N'D:\SQLDATA\MSSQL13.MSSQLSERVER\MSSQL\DATA\FSG' )

LOG ON

( NAME = N'CompressTest_log', FILENAME = N'E:\SQLDATA\MSSQL13.MSSQLSERVER\MSSQL\Log\CompressTest_log.ldf' ,

SIZE = 8192KB , FILEGROWTH = 65536KB )

GO

CREATE TABLE CompressTest.dbo.Records

(

[Id] [uniqueidentifier] ROWGUIDCOL NOT NULL UNIQUE DEFAULT NEWSEQUENTIALID(),

[Descr] VARCHAR(100),

[Files] VARBINARY(MAX) FILESTREAM NULL

)

GO

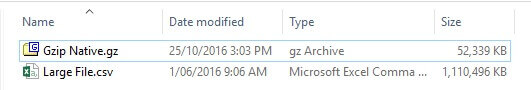

We will be working with two sample files: "Large File.csv" is a flat file in CSV format and "Gzip Native.gz" is a compressed file of "Large File.csv" using the GZIP utility manually.

SQL Server COMPRESS Function

The commands below will load the same file "Large File.csv" twice. The first load of the file will be as is and the second load of the file will use the COMPRESS function. "Gzip Native.gz" file will be uploaded once as it is.

DECLARE @NonCompressedFile VARBINARY(MAX) DECLARE @CompressedFile VARBINARY(MAX) DECLARE @GzipCompressedFile VARBINARY(MAX) SELECT @NonCompressedFile = CAST(bulkcolumn AS VARBINARY(MAX)) FROM OPENROWSET(BULK '\\filesrv\CompressionTest\Large File.csv', SINGLE_BLOB) AS x SELECT @CompressedFile = COMPRESS(CAST(bulkcolumn AS VARBINARY(MAX))) FROM OPENROWSET(BULK '\\filesrv\CompressionTest\Large File.csv', SINGLE_BLOB) AS x SELECT @GzipCompressedFile = CAST(bulkcolumn AS VARBINARY(MAX)) FROM OPENROWSET(BULK '\\filesrv\CompressionTest\Gzip Native.gz', SINGLE_BLOB) AS x INSERT INTO CompressTest.dbo.Records (Descr, [Files]) SELECT 'Non Compressed File', @NonCompressedFile INSERT INTO CompressTest.dbo.Records (Descr, [Files]) SELECT 'Compressed File', @CompressedFile INSERT INTO CompressTest.dbo.Records (Descr, [Files]) SELECT 'Gzip Native Compressed File', @GzipCompressedFile

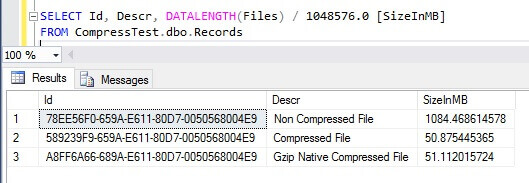

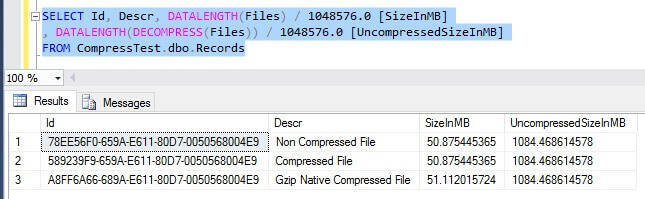

Checking the loaded file sizes, the compressed "Large File.csv" file size is 50MB and the file size is very close to the "Gzip Native.gz". The uncompressed file size "Large File.csv" remains the same at around 1.1GB.

SELECT Id, Descr, DATALENGTH(Files) / 1048576.0 [SizeInMB] FROM CompressTest.dbo.Records

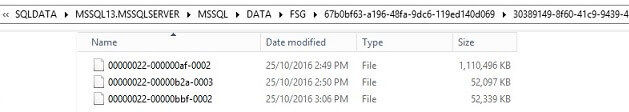

The file size retrieved using the DATALENGTH function corresponds to the actual physical size on disk.

SQL Server DECOMPRESS Function

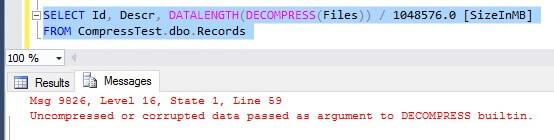

The DECOMPRESS function will produce an error if it tries to decompress data that is not compressed using the GZIP algorithm. In the example, the Non Compressed File row will produce this error.

SELECT Id, Descr, DATALENGTH(DECOMPRESS(Files)) / 1048576.0 [SizeInMB] FROM CompressTest.dbo.Records

We can UPDATE uncompressed data using the COMPRESS function as follows.

UPDATE CompressTest.dbo.Records SET Files = COMPRESS(Files) WHERE Descr = 'Non Compressed File'

The DECOMPRESS command will work on data which is compressed using the COMPRESS function or data which was manually compressed using the GZIP utility. Now that all three rows are compressed, we can decompress and send the files back to client using the DECOMPRESS function.

SELECT Id, Descr, DATALENGTH(Files) / 1048576.0 [SizeInMB], DATALENGTH(DECOMPRESS(Files)) / 1048576.0 [UncompressedSizeInMB] FROM CompressTest.dbo.Records

Summary

In versions prior to SQL Server 2016, FILESTREAM can store data on a compressed NTFS drive or the files can be pre-compressed (zipped) before uploading to save disk space. If your organization is using the latter method, SQL Server 2016 now offers an alternative to compress and decompress data in the SELECT query itself using the COMPRESS and DECOMPRESS functions.

This tip uses FILESTREAM in the example to store the data, but the compression and decompression functions can also be used to store the data in the SQL Server database itself.

For columns with numeric or fixed-length character data types, ROW or PAGE compression would still be the preferred method because of its simplicity to implement and not requiring any code changes.

With any design involving compression, deciding what to compress is important. Some data patterns are not suitable for compression such as compressing PDFs which will not yield a good compression ratio and the solution would waste CPU cycles.

Next Steps

About the author

Simon Liew is an independent SQL Server Consultant in Sydney, Australia. He is a Microsoft Certified Master for SQL Server 2008 and holds a Master’s Degree in Distributed Computing.

Simon Liew is an independent SQL Server Consultant in Sydney, Australia. He is a Microsoft Certified Master for SQL Server 2008 and holds a Master’s Degree in Distributed Computing.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips