By: Simon Liew | Comments (4) | Related: > Transactions

Problem

I noticed that when some SQL Server queries run a lot of transaction log space is utilized. Can you explain how the SQL Server transaction log gets used and why it doesn't always free up space after issuing transaction log backups.

Solution

The nature of the SQL Server transaction log utilization is circular. This means when logging reaches the end of the transaction log file and assuming the log records at the beginning of the transaction log have been truncated, it then wraps around to the beginning of the transaction log and starts overwriting what was there before. Log truncation (or log clearing) refers to the mechanism that marks an active Virtual Log File (VLF) as inactive, so that the VLF can be reused.

If there is a single transaction that takes a long time to complete, then log truncation will be delayed. As a consequence of delayed log truncation, the transaction log would need more space to accommodate the single long running transaction as well as all other transactions that occur during this time period. Even with frequent LOG BACKUPs for databases in FULL or BULK-LOGGED recovery model, or setting the database to SIMPLE recovery model will not allow the log to be truncated.

In the scenario indicated in the problem statement, the SQL Server transaction log file space used would be a lot higher when log truncation is delayed. So, optimizing the data modification query does have benefits in terms of transaction log file space usage.

There are other factors that can delay log truncation. This tip provides a contrived example to demonstrate the impact of a long running transaction and delayed log truncation.

Test Environment

All Transact-SQL in this tip was executed using SQL Server 2016 Enterprise Edition Service Pack 1.

Step 1 Create test database and data

A database is created with data file size of 1GB and an initial transaction log file size of 64MB. Transaction log auto-growth is set to 64MB.

A newly created database using the FULL recovery model will behave like the SIMPLE recovery model (typically referred to as pseudo-simple) until a full backup is taken. Hence, the script performs a full database backup immediately after the creation of the database to set the database to FULL recovery model. This is followed by creation of two tables and insertion of 50K rows into both tables.

USE [master] GO -- Make sure all folders exists prior to executing this script CREATE DATABASE [TLogTruncate] CONTAINMENT = NONE ON PRIMARY ( NAME = N'TLogTruncate', FILENAME = N'D:\SQLDATA\MSSQL13.MSSQLSERVER\MSSQL\DATA\TLogTruncate.mdf' , SIZE = 1024000KB , FILEGROWTH = 65536KB ) LOG ON ( NAME = N'TLogTruncate_log', FILENAME = N'E:\SQLDATA\MSSQL13.MSSQLSERVER\MSSQL\Log\TLogTruncate_log.ldf' , SIZE = 65536KB , FILEGROWTH = 65536KB ) GO ALTER DATABASE [TLogTruncate] SET RECOVERY FULL WITH NO_WAIT GO BACKUP DATABASE [TLogTruncate] TO DISK = 'F:\SQLDATA\TLogTruncate.BAK' WITH COMPRESSION GO USE [TLogTruncate] GO CREATE TABLE dbo.HeavyDutyTab1 (ID uniqueidentifier DEFAULT NEWID() , randomDate datetime DEFAULT GETDATE() - ((21 * 365) + RAND()* (39 * 365)) , FixedCol CHAR(10) DEFAULT 'Test Data1' CONSTRAINT PK_HeavyDutyTab1_ID PRIMARY KEY CLUSTERED(ID) ) GO CREATE TABLE dbo.HeavyDutyTab2 (ID uniqueidentifier DEFAULT NEWID() , randomDate datetime DEFAULT GETDATE() - ((21 * 365) + RAND()* (39 * 365)) , FixedCol CHAR(10) DEFAULT 'Test Data2' CONSTRAINT PK_HeavyDutyTab2_ID PRIMARY KEY CLUSTERED(ID) ) GO SET NOCOUNT ON; INSERT INTO dbo.HeavyDutyTab1 DEFAULT VALUES GO 50000 INSERT INTO dbo.HeavyDutyTab2 DEFAULT VALUES GO 50000

Step 2 Determine log used space

In this tip, we need to have the ability to determine the transaction log used space at a point in time. Here are three commonly known methods to obtain size or percentage of the log file currently in-use to store transaction log information:

- DBCC SQLPERF(LOGSPACE)

- Dynamic Management View (DMV) sys.dm_os_performance_counters

- Windows Performance Monitor (SQLServer:Database > Percent Log Used)

For simplicity, this tip will query DMV sys.dm_os_performance_counters, so that we can join to DMV sys.databases to obtain log_reuse_wait_desc.

SELECT instance_name

, [Data File(s) Size (KB)] * 1.0 / 1024 [Data File(s) Size (MB)]

, [Log File(s) Size (KB)] * 1.0 / 1024 [Log File(s) Size (MB)]

, [Log File(s) Used Size (KB)] * 1.0 / 1024 [Log File(s) Used Size (MB)]

, [Percent Log Used]

, log_reuse_wait_desc

FROM ( SELECT os.counter_name, os.instance_name, os.cntr_value

, db.log_reuse_wait_desc

FROM sys.dm_os_performance_counters os

JOIN sys.databases db ON os.instance_name = db.name

WHERE os.counter_name IN

(

'Data File(s) Size (KB)'

, 'Log File(s) Size (KB)'

, 'Log File(s) Used Size (KB)'

, 'Percent Log Used'

)

AND os.instance_name = 'TLogTruncate'

) as SourceTable

PIVOT (

MAX(cntr_value) FOR counter_name IN

([Data File(s) Size (KB)]

, [Log File(s) Size (KB)]

, [Log File(s) Used Size (KB)]

, [Percent Log Used])) as PivotTable

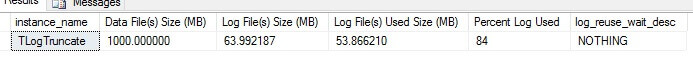

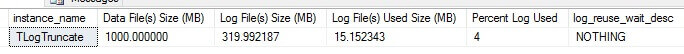

Currently, the database transaction log file size is 64MB and the log used size is 54MB.

Step 3 Transaction Log backup

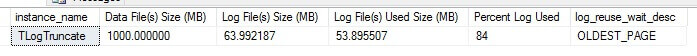

After taking the first transaction log backup, log_reuse_wait_desc shows OLDEST_PAGE. Log_reuse_wait_desc of OLDEST_PAGE occurs when indirect checkpoints are being used. It indicates that the oldest page of the database is older than the checkpoint LSN.

BACKUP LOG [TLogTruncate] TO DISK = 'F:\SQLDATA\log1.trn'

Step 4 - Normal log truncation

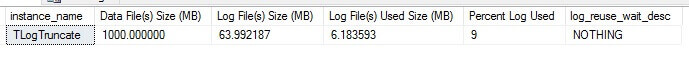

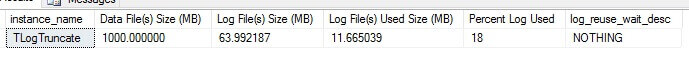

SQL Server log truncation does not reduce the actual physical size of the transaction log file. The physical transaction log file size remains at 64MB, but now the log file size used is only 6MB after log truncation.

BACKUP LOG [TLogTruncate] TO DISK = 'F:\SQLDATA\log2.trn'

Step 5 Generating log records

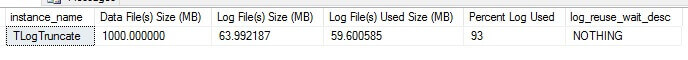

An additional 50K rows will be inserted into both tables. The INSERT query will push the log file used size to 59MB.

SET NOCOUNT ON; INSERT INTO [TLogTruncate].dbo.HeavyDutyTab1 DEFAULT VALUES GO 50000 INSERT INTO [TLogTruncate].dbo.HeavyDutyTab2 DEFAULT VALUES GO 50000

Step 6 Simulate long running transaction

To simulate a long running transaction, launch a new query window. Insert one row into table HeavyDutyTab1 using an explicit transaction and leave the explicit transaction uncommitted.

Whether a single tiny transaction or a long running INSERT / UPDATE / DELETE query, the transaction will remain open until the implicit or explicit transaction is complete and committed.

SET NOCOUNT ON; BEGIN TRAN INSERT INTO [TLogTruncate].dbo.HeavyDutyTab1 DEFAULT VALUES GO -- COMMIT

Step 7 Subsequent log backups

Two log backups are taken. The first log backup will have OLDEST_PAGE in log_reuse_wait_desc column and the next transaction log backup shows log file used size is 11MB after log truncation.

BACKUP LOG [TLogTruncate] TO DISK = 'F:\SQLDATA\log3.trn' BACKUP LOG [TLogTruncate] TO DISK = 'F:\SQLDATA\log4.trn'

Step 8 - Resume workload

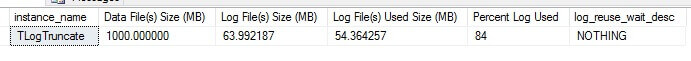

In a new query window, 80K new rows are inserted into table HeavyDutyTab2. The log file used size is 54MB. No abnormality so far.

SET NOCOUNT ON; INSERT INTO [TLogTruncate].dbo.HeavyDutyTab2 DEFAULT VALUES GO 80000

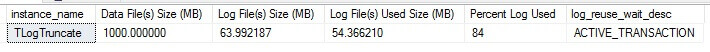

Step 9 Delayed log truncation

The next log backup is taken. Now the log_resuse_wait_desc value has changed to ACTIVE_TRANSACTION. ACTIVE_TRANSACTION indicates an active transaction typically a long-running transaction that is holding all subsequent VLFs active. It might or might not be an issue, but as long as this transaction is active and its VLF is marked as active, all subsequent VLFs cannot be made inactive even if it contains log records which are not related to this active transaction.

BACKUP LOG [TLogTruncate] TO DISK = 'F:\SQLDATA\log5.trn'

Step 10 - On-going workload

To simulate an on-going workload, rows are inserted into table HeavyDutyTab2 in a loop. The active transaction is on table HeavyDutyTab1, so this workload is working on a completely separate table.

SET NOCOUNT ON; WHILE 1 = 1 INSERT INTO [TLogTruncate].dbo.HeavyDutyTab2 DEFAULT VALUES

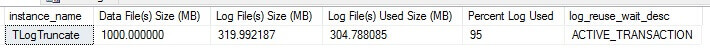

Step 11 - Transaction log keeps growing

A log backup is taken in the background every 1 minute. After few minutes, observe the physical transaction log file auto-growth has kicked-in 5 times and the transaction log size is now 320MB. The log file used size also keeps growing and the log_reuse_wait_desc is still ACTIVE_TRANSACTION.

In this scenario, log truncation is delayed due to the uncommitted transaction. The transaction log will keep growing as long as the transaction remains active. Once the physical transaction log size hits the drive maximum space capacity and is unable to auto-grow any further, an error message will be returned to client and logged in SQL Server error log "The transaction log for database 'xx' is full due to 'ACTIVE_TRANSACTION'".

Step 12 - Transaction commits and log truncated

Finally, the explicit transaction in step 6 is issued with a COMMIT to commit the active transaction. Log backups are still occurring on the background every 1 minute. The log file used size is now 15MB indicating the transaction log has now been truncated and the inactive portion of the log file has been marked to be reused. From the original transaction log size of 64MB, the physical transaction log size has auto-grown to 320MB.

Typically, you would want to leave the physical transaction log size as it is if this is the size required for your workload in correlation to your log backup process.

Summary

Under the SQL Server full recovery model or bulk-logged recovery model, the inactive part of the log cannot be truncated until all its log records have been captured in a log backup.

An active transaction delays log truncation in all recovery models. The same steps can be repeated to simulate the behavior on databases in the SIMPLE recovery model with the updated instructions below:

- Change the database recovery model to SIMPLE at Step 6

- Log backup is not required after Step 6

Next Steps

- Learn about

About the author

Simon Liew is an independent SQL Server Consultant in Sydney, Australia. He is a Microsoft Certified Master for SQL Server 2008 and holds a Masters Degree in Distributed Computing.

Simon Liew is an independent SQL Server Consultant in Sydney, Australia. He is a Microsoft Certified Master for SQL Server 2008 and holds a Masters Degree in Distributed Computing.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips