By: Tim Smith | Comments | Related: > Replication

Problem

We have several lower-level SQL Server environments where our developers and junior DBAs have access to make changes to how our environment is configured. We have found that sometimes we see changes made to replication in this environment that is incorrect. Rather than limit the access, we'd like to identify a way of catching this problem since we suspect it may be only one or two developers. Is there a way to identify when changes to replication have happened, or drill into a specific time of when a change is made?

Solution

SQL Server Replication can be set up to make tracking changes easier, such as using objects like stored procedures to transmit data. However, it's possible to create replication using direct insert, delete and update scripts that are then removed after replication, which would add a challenge to tracking changes. In this tip we'll look at some examples to track both, depending on how replication is set up and I will warn that more overhead will be required in the monitoring script creation than when using procedure to transmit data.

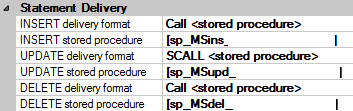

One way to set up replication is to use to objects to pass the insert, update or delete statements (the statement delivery method - see below this). When we click on the properties of an article, we can choose how we want the data delivered. The default of this delivery with stored procedure is the naming convention of sp_MS. If we change our naming convention, then we need to audit what we've changed and we'll want to make sure that we choose a naming convention that is intuitive. I've seen several replication issues caused by poor naming convention. When a stored procedure for replication is created or changed, this will be reflected in the metadata on the destination server and database where the stored procedures reside:

SELECT name , SCHEMA_NAME(schema_id) , create_date , modify_date FROM sys.procedures WHERE name LIKE 'sp_MS%'

This name filter assumes that we're using the default naming convention. This will tell us when a stored procedure was either created or modified. In addition, we can also check the metadata of the actual replication objects, such as the table create and modify date:

SELECT name , create_date , SCHEMA_NAME(schema_id) , modify_date FROM sys.tables WHERE name = 'OurReplicatedTable'

If changes are made to replication and we're using stored procedures to deliver data, we'll be able to identify using both the stored procedure objects and (or) the table objects if a change happened. As an example of this in practice, I've used the metadata of both tables and procedures to identify when replication was completely removed and re-created, which is a solution popular among DBAs, even though this might be a questionable practice if no one can identify what caused the original problem. In addition, I've also been able to identify when a destination table was moved to a new schema, even though the procedure still pointed at a default schema for the destination table (like dbo). If we're in a situation where we also want to track any removals, we will want to store this information with a timestamp and we can deduce from the stored data what object is missing and when this object was removed.

/*

---- On the remote server, create:

CREATE TABLE tblReplicationMetadata(

TableName VARCHAR(100),

SchemaName VARCHAR(50),

CreateDate DATETIME,

ModifyDate DATETIME,

TrackDate DATETIME DEFAULT GETDATE()

)

CREATE CLUSTERED COLUMNSTORE INDEX CCI_tblReplicationMetadata ON tblReplicationMetadata

*/

---- On the remote server, run periodically:

INSERT INTO tblReplicationMetadata

SELECT

name

, SCHEMA_NAME(schema_id)

, create_date

, modify_date

, GETDATE()

FROM [Remote_DestinationServer].[DestinationDatabase].sys.tables

WHERE name IN ('OurFirstTable','OurSecondTable','OurThirdTable')

In the above code snippet, we're tracking three tables on a remote server and saving the table name, schema name, and dates it was created and modified along with a timestamp of when this was saved. In environments where many objects change, this allows DBAs to quickly drill into what changed, when, and how this can be stopped or prevented in the future. If I'm seeing changes multiple times a day, I will track this more frequently - like every 15 minutes in a job schedule, or if I seldom see this, I'll reduce the tracking time.

In some cases, replication might be set up with snapshot files using direct insert, update or delete statements on a reset. These files are created, used and removed later in a clean up job, though this may be done quickly after all data are transmitted.

For this set up, we will use the PowerShell code below to track the last write date of all files and folders within a folder.

<#

CREATE TABLE tblReplicationFiles(

ReplicationFileName VARCHAR(500),

FileWriteTime DATETIME,

TrackTime DATETIME DEFAULT GETDATE()

)

CREATE CLUSTERED COLUMNSTORE INDEX CCI_tblReplicationFiles ON tblReplicationFiles

#>

$replicationfilelocation = ""

$folderAll = Get-ChildItem $replicationfilelocation -recurse

foreach ($item in $folderAll)

{

$saveitemname = ($item.FullName).Replace("'","")

$save = "INSERT INTO tblReplicationFiles VALUES ('$saveitemname','" + $item.LastWriteTime + "',GETDATE())"

Invoke-Sqlcmd -ServerInstance "OURINSTANCE\OURSERVER" -Database "OurDatabase" -Query $save

}

This job or task schedule should run faster than old files and folders are cleaned up, which may mean more frequent or less frequent run times. This will be pointed where snapshots are created and stored and will catch restarts that involve snapshots. By tracking the created files, I can determine the time in which something may have changed and by using the file names, I can derive what might have changed, or check the metadata on the object - such as checking the create date and modify date if the file indicates that table OurTable was added or changed. This information can also be extracted from the MSdistribution_history table on the distribution database by looking at the columns comments and time, provided that this information is retained.

In both examples where I store data in a table for logging purposes, I've chosen to use the clustered columnstore index since I occasionally look at the logging data and it's more efficient for the data to be stored effectively than allow for faster query performance. In an example involving one million replication files on the latter script, I had a compression ratio of 0.0016 MB (clustered columnstore index) to 32.04 MB (clustered index). This isn't always the guarantee ratio, but in my own view, I see few - if any - advantages of creating a table built for queries when I rarely look at the table and really need the data compressed.

Next Steps

- This tip look at different ways to track possible changes with replication. If saving information, make sure to timestamp the information, so that you can precisely identify when a change happened. In addition, while there are other ways to track this information, these approaches use the metadata provided by the system so that the overhead is reduce in environments where replication may be intense.

- The main purpose of tracking this information whether directly from the metadata or from what we're storing from metadata is to quickly identify what and when an object is changing or when replication as a whole has changed. Once we know this information, we can take action to either stop or prevent it from occurring.

- Check out other Replication Tips

About the author

Tim Smith works as a DBA and developer and also teaches Automating ETL on Udemy.

Tim Smith works as a DBA and developer and also teaches Automating ETL on Udemy.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips