By: Dan Quinones | Comments (7) | Related: > Clustering

Problem

The traditional configuration of a letter assigned for each volume on a Windows Server imposes limitations on our SQL Server cluster design. The primary constraint is the twenty-six letters in the alphabet limiting us to that number of drives that our server can use. This limits the number of instances we can fit onto a cluster as well as limits the ability to distribute the workload across disks for a particular instance or database. What alternative configuration can we utilize to overcome the restrictions placed on our design by the limited number of letters left over for our SQL install? What additional benefits can we achieve with another configuration?

Solution

The use of Mount Points allows us to overcome the challenges discussed above. In addition to overcoming the available letter limitation, utilizing mount points also allows for greater flexibility, reduced administrative overhead, higher utilization of hardware investments and even cost-savings. You may be asking, what is a mount point? A mount point is a mounted volume within another folder or volume. Essentially a mount point is a disk, usually a SAN LUN, that is mapped and ends up being a folder within a folder. In Author Edwin Samiento's tip Configuring Volume Mountpoints on a SQL Server 2008 Failover Cluster running on Windows Server 2008 he outlines the steps to creating and configuring mount points. In this tip we will further explore the advantages of using mount points and how it may benefit you. In this tip we will discuss the reasons and benefits for using mount points.

Overcoming Drive Letter Limitations

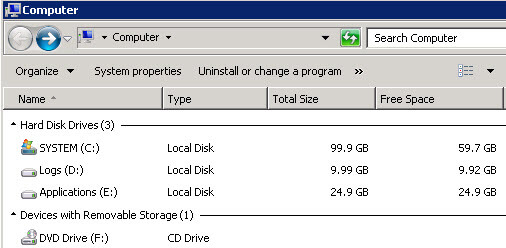

Let's look at how mount points can help overcome the limitations on the number of available letters remaining. Most organizations have standard conventions defining items such as disk layout on their windows server builds. Typically a Windows server has a system drive (C:), a DVD/CD drive (we'll call this F:) and in some cases dedicated local drives for log and/or application files. It would not be too far-fetched to assume that an organization that is willing to spend the money and time in deploying a high availability solution such as a SQL cluster would employ such standard conventions. This leaves us with twenty-two available letters for a configuration such as the one described. Then consider how often you use A: or B: as volumes on your Windows servers. Most of us think of 3.5" disks when seeing A:. Although we rarely see A: or B: used in the real world, it is generally a best practice to not use them and keep them reserved. This brings our pool of available letters down to twenty.

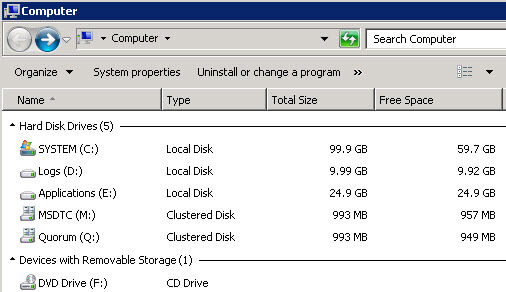

Once we setup our Cluster and then the SQL server cluster prerequisite shared Distributed Transaction Coordinator, we have two more letters used up, quickly taking our pool of available letters down to eighteen.

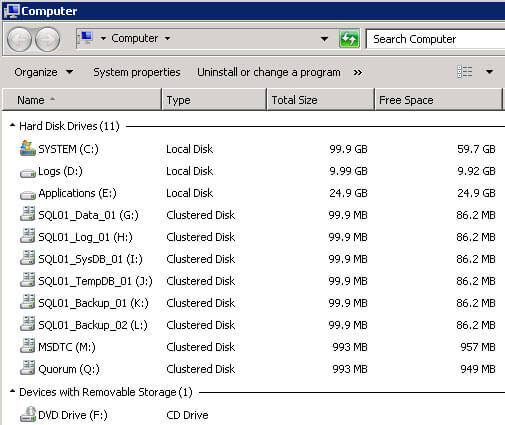

Eighteen drive letters may sound like a lot but anyone with experience using dedicated LUNs for the various SQL database and log files will realize that number can quickly be exceeded with even one or two instances. In this example we see how quickly we can consume letters with only a single instance:

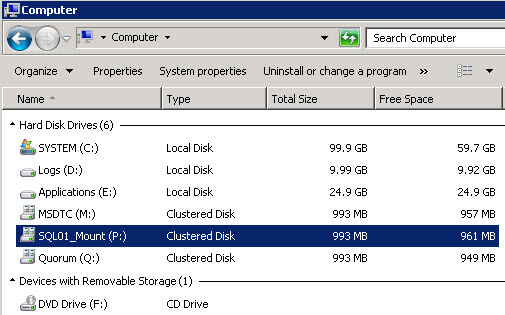

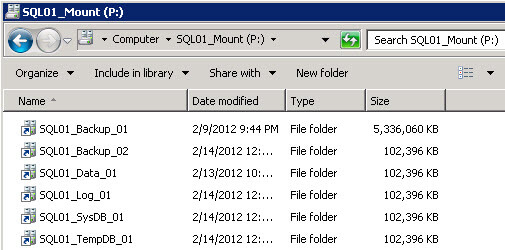

With only a single instance installed we have used up roughly half of the available drive letters. We are already becoming constrained if we want to add additional luns for filegroups or install a second or third instance on the cluster. Utilizing a mount point the same SQL configuration above could be configured utilizing a single letter - the mount point host volume.

The contents of the mount point are the same physical disk LUNs as above without using the additional drive letters.

Per MSDN's Maximum Capacity Specifications for SQL Server SQL Server supports 25 instances on a failover cluster. Let your hardware constraints limit the maximum number of instances you can install onto your SQL clusters, not the limited number of letters left to you from the alphabet.

Flexibility

When using mounted volumes you have increased options available for your use. If you want to add additional volumes to spread filegroups across you can easily do this. All you would have to do is add a new volume under the existing mount letter. Another scenario would be if you want to try a different RAID configuration on some of the volumes as part of performance tuning. You can easily have different RAID implementations configured under the same mount. Storage tiering also can be utilized easily, in the above example we see a Backup_01 and 02, the 01 volume may be comprised of smaller, higher speed disks that could be used to complete a backup within a limited backup window. The 02 volume could then be a slower, larger volume that would archive the backup from 01 for long(er) term archiving. You can even have storage presented from different storage platforms (as long as they are on the same storage network that the cluster is attached to). These are a few examples of how utilizing a mount point can offer additional flexibility in your SQL solution design.

Reduced Administrative Overhead

In conjunction with the increased flexibility discussed above reduced administrative workload can be achieved as a result of the additional flexibility. Additionally the complexity surrounding managing multiple san volumes between 2 or more cluster nodes is reduced with the use of a single root mount volume dedicated to each SQL instance. In our example we know that all our volumes associated to the SQL01 instance are located under the P:\ drive. Gone are the days of keeping track of which drive letters are in use on which cluster nodes. I have personally seen issues where a drive letter was available on one node but when a cluster group using that letter was failed over the letter was in use and that volume would fail to come online, causing a outage situation. There may be a small increase in complexity during the initial setup but the simplicity that follows helps reduce your administration workload in many ways.

Increased Hardware Utilization

As discussed during the drive letter limitation section the number of instances that can be installed on a single cluster can be increased significantly. Theoretically, without the limitation on the number of letters available to use, we can install up to the product maximum twenty-five instances on a cluster. This can be scaled even higher to fifty instances on a stand-alone server, where mount points can also be used. Theoretically, without the limitation on the number of letters available to use, we can install up to the hardware resource limitations or the maximum number of instances, whichever comes first.

We also can achieve greater utilization through the use of "Active-Active" clustering. Active-Active works in a configuration where instead of allowing the second, traditionally passive, node in a cluster to remain idle, a SQL instance or instances can be run on both nodes concurrently. This allow us to take advantage of the otherwise idle resources on that second node. This is also easier to manage with mount points as described in the reduced administrative overhead section above.

Cost Savings

Along with the increased hardware utilization come the inherent cost-savings of having to deploy less hardware to support more instances. The extent of the cost-savings does not end with having to buy less hardware. A significant cost of any SQL Server solution comes from the SQL Server licensing. As we know SQL Server is licensed based on physical hardware, so the licensing cost would be the same for a server regardless if you have 1 instance or 10 instances on it. The savings that can be realized from both hardware and license savings can be significant. Similar to the way virtualization has taken numerous less utilized servers and consolidated them onto common hardware as virtual machines, We are effectively consolidating our SQL physical server footprint and reducing costs as a result.

Simple cost-savings analysis

The following is just an example of how savings can be achieved through utilizing a greater number of instances on a single cluster. The example uses an estimated cost of $25,000 for a SQL license, and assumes we are only purchasing one license per physical cluster we need.

Traditional Cluster Deployments:

| # of Instances | # of Servers | Total # of Servers | # of SQL Licenses: | Total # of licenses | Total Licensing Costs |

|---|---|---|---|---|---|

| Instance 1 | 2 - Cluster 1 | 2 | 1 License | 1 | $25,000 |

| Instance 2 | 2 - Cluster 1 | 2 | 1 License | 1 | $25,000 |

| Instance 3 | 2 - Cluster 2 | 4 | 2 Licenses | 2 | $50,000 |

| Instance 4 | 2 - Cluster 2 | 4 | 2 Licenses | 2 | $50,000 |

| Instance 5 | 2 - Cluster 3 | 6 | 3 Licenses | 3 | $75,000 |

Consolidated Cluster Deployments using multiple instances with mount points:

| # of Instances | # of Servers | Total # of Servers | # of SQL Licenses: | Total # of licenses | Total Licensing Costs |

|---|---|---|---|---|---|

| Instance 1 | 2 - Cluster 1 | 1 | 1 License | 1 | $25,000 |

| Instance 2 | 2 - Cluster 1 | 1 | 1 License | 1 | $25,000 |

| Instance 3 | 2 - Cluster 1 | 1 | 1 License | 1 | $25,000 |

| Instance 4 | 2 - Cluster 1 | 1 | 1 License | 1 | $25,000 |

| Instance 5 | 2 - Cluster 1 | 1 | 1 License | 1 | $25,000 |

In the above example we were saved on 4 physical servers (the 2 additional clusters needed to support instances 3-5) as well as $50,000 in SQL licensing. Again this is an example only, use this as a starting point for your own cost-analysis.

Caveats/Known Issues

With all of the benefits listed above, I am obligated to point out some of the caveats that should be considered. One thing to take into careful consideration is the amount of instances on a single cluster. The old adage "don't put all your eggs in one basket" applies here. With the relative simplicity that instances can be stacked onto a cluster make sure to properly plan for adequate resources in the event that one of the cluster nodes were to fail. Highly consolidated clusters running many instances would be best suited for development and test environments where hardware failure would not have as significant an impact as it would in the event numerous instances were to go down in production.

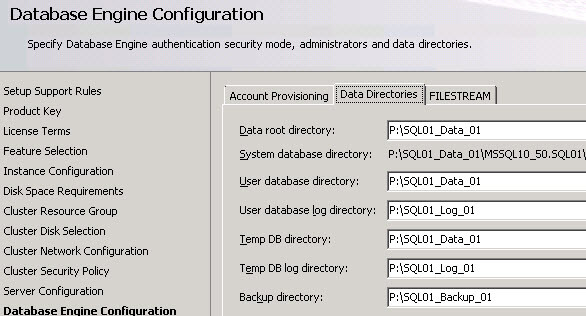

An issue that I have seen comes in the form of installation errors when attempting to specify the mount point folder itself as a data or log directory during SQL install. KB Article 2216461 states that "SQL Server does not support installing to the root of the Volume Mount point because the permissions are not propagated to the Mount Point since Windows does not let you replace permissions on the root of a volume. To prevent problems during new installations and applications of updates to SQL Server, create a folder on the root of the mount point and install to that folder. At this point, permissions are set correctly.

This would cause installation to fail:

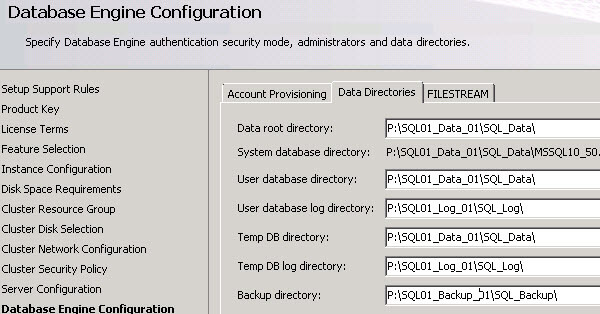

This would be the correct configuration, creating a folder under each mounted volume to specify as your data or log directory during install.

Next Steps

- Review the tip Configuring Volume Mountpoints on a SQL Server 2008 Failover Cluster running on Windows Server 2008.

- Analyze your environment and determine if any of the benefits noted in this tip could help your organization.

- Review Microsoft KB Article SQL Server 2008 setup fails to install on a Windows Server 2008-based cluster mount point.

- Review Maximum Capacity Specifications for SQL Server to familiarize yourself with SQL Server 2008 capacity figures.

- For further information on SQL Server licensing look at A Guide to Assessing SQL Server Licensing - Microsoft.

About the author

Dan Quinones is an Architect/Systems Engineer with over 11 years of experience specializing in Microsoft Server and Database technologies.

Dan Quinones is an Architect/Systems Engineer with over 11 years of experience specializing in Microsoft Server and Database technologies.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips