By: John Miner | Comments (4) | Related: > Compression

Problem

Large object (LOB) data types are stored in special pages in a SQL Server database, see this books online article for details on pages and extents. These pages can not be compressed to save space, so how can we reduce the disk space footprint for our table that stores images?

Solution

Microsoft SQL Server 2016 was released to manufacturing on June 1, 2016, see this SQL Server Team BLOG for the full details. This product release contains a new function called COMPRESS() that solves this business problem. You can read this MSDN entry for full details on the function. Microsoft has adopted a cloud first release strategy and therefore it is not surprising that this function is already available for Azure SQL Database.

Business Problem

This article assumes you know how to create a Azure SQL Server and Azure SQL Database. If you are not familiar with these tasks, please see my recent article on that goes over these basic steps. You should be familiar with PowerShell since we will be leveraging this language to automate our Azure deployment.

We will be continuing the NASA theme with this article. The

Hubble

Telescope was launched into low order space in 1990 and still remains in operation

today. Many stunning images have been taken from the telescope. Today, we will be

looking at the angel, cat eye, crab, lion and veil nebulas. Our task is

to load five image files into a Azure SQL Database using the

PowerShell ISE. The stretch goal is to save database space using the new

COMPRESS() function.

Azure Objects

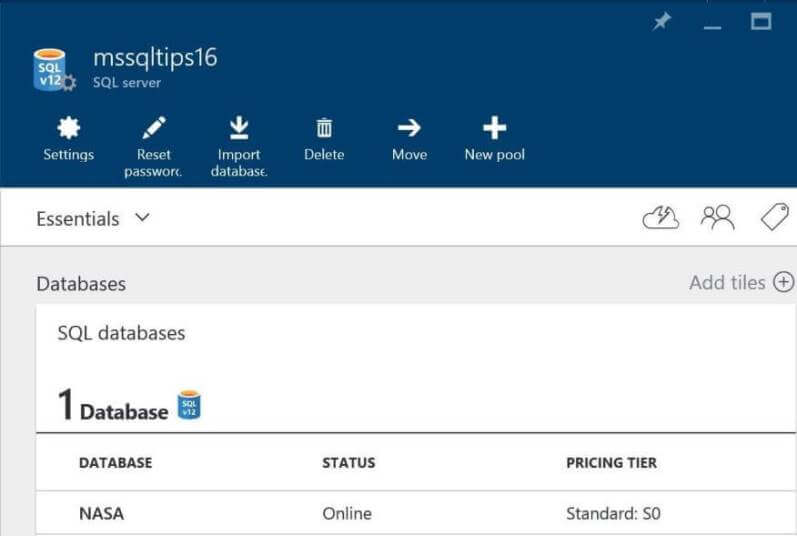

Our first task is to re-use the Azure SQL Server that I created with my Visual Studio Enterprise subscription. The server is named mssqltips2016 and the administrator login name is jminer.

Our second task is to create

a Azure SQL database named NASA that resides in a resource group called rg4nasa

that is located in the US EAST data center. I choose the SO Standard

as the database size. This gives us 10 database transaction units to perform data

processing.

Our third task is to create an Azure Firewall rule named MyLaptop

that will allow my computer to talk to the Azure SQL Server. This is a critical

step; otherwise, we will not be able to connect to the server.

Execute Non Query

Before we can actually do work, we need to build some custom PowerShell (PS) functions. The .Net Library is wide open to the PS language to leverage. We are going to use the SqlClient library to communicate with our Azure SQL Database.

The Exec-NonQuery-SqlDb custom function takes a connection string and TSQL query as input. The query can be any DELETE, INSERT, UPDATE or DDL statement that does not return a record set. We will use this function heavily to create database objects and manipulate data.

When designing functions, it is good practice

to use the CmdLetBinding function with strongly typed parameters.

#

# Name: Exec-NonQuery-SqlDb

# Purpose: Execute a DELETE, INSERT, UPDATE or DDL statement.

#

function Exec-NonQuery-SqlDb {

[CmdletBinding()]

param(

[Parameter(Mandatory = $true)]

[String] $ConnStr,

[Parameter(Mandatory = $true)]

[string] $SqlQry

)

# Create & open connection object

$Conn = New-Object -TypeName System.Data.SqlClient.SqlConnection

$Conn.ConnectionString = $ConnStr

$Conn.Open()

# Create command object

$Cmd = $Conn.CreateCommand()

$Cmd.CommandTimeout = 300

$Cmd.CommandText = $SqlQry

# Execute query with no return sets

$Result = $Cmd.ExecuteNonQuery()

# Close the session

$Conn.Close()

# Return the result

return $Result

}

Get Data Set

The developer must be able to query the database to see what objects exist and

what data is stored. The Get-DataSet-SqlDb custom function takes a connection

string and TSQL query as input and returns the output record set that can be view

in the PowerShell ISE.

#

# Name: Get-DataSet-SqlDb

# Purpose: Retrieve data from SELECT query.

#

function Get-DataSet-SqlDb {

[CmdletBinding()]

param(

[Parameter(Mandatory = $true)]

[String] $ConnStr,

[Parameter(Mandatory = $true)]

[string] $SqlQry,

[Parameter(Mandatory=$false)]

[ValidateSet("DataSet", "DataTable", "DataRow")]

[string]$As="DataRow"

)

# Create connection object

$Conn = New-Object -TypeName System.Data.SqlClient.SqlConnection

$Conn.ConnectionString = $ConnStr

# Create command

$Cmd = $Conn.CreateCommand()

$Cmd.CommandText = $SqlQry

# Create adapter & dataset objects

$Adapter = New-Object -TypeName System.Data.SqlClient.SqlDataAdapter $Cmd

$DataSet = New-Object -TypeName System.Data.DataSet

# Fill dataset and return as result

$Adapter.Fill($DataSet)

# Close the session

$Conn.Close()

# Return the result

Switch ($As)

{

'DataSet' { Write-Output ($DataSet) }

'DataTable' { Write-Output ($DataSet.Tables) }

'DataRow' { Write-Output ($DataSet.Tables[0]) }

}

}

Create Database

To solve our business problem, we want to create and load the Azure SQL database each time we run our PowerShell script. I am going to create data files that contain TSQL statements for each step.

Instead of specifying the full path for these TSQL scripts, we can use the Set-Location cmdlet in PowerShell to set our working directory. See step 1 in the PowerShell snippet below.

To drop and create a new database, we need to be working in the master database. The connection string below has detailed information on the Azure SQL Server, default database, administrator account and password. The Get-Content cmdlet can be used to read up the TSQL script in text format. Be default, data is broken into a string array at every line break. We need to specify a delimiter that will not be found so that the file is read in as one big string.

Last but not least, we call the Exec-NonQuery-SqlDb cmdlet to create a new database named NASA.

Please see step 2 that creates the database.

#

# Step 1 - Set path for scripts & images

#

# Set the path

Set-Location "C:\MSSQLTIPS\MINER2016\ARTICLE-2016-03-COMPRESS"

#

# Step 2 - Create database

#

# Set connection string

[string]$ConnStr = 'Server=mssqltips16.database.windows.net;Database=master; `

Uid=JMINER;Pwd=MS#tips$2016;'

# Grab the script

[string]$SqlQry = Get-Content -Delimiter "`n" "create-database.sql"

# Run the script

Exec-NonQuery-SqlDb -ConnStr $ConnStr -SqlQry $SqlQry

Listed below is the contents of the create-database.sql

data file. Please note the GO batch statement has been removed from the TSQL

code. This identifier is only valid in the SSMS tools.

/*

Create a new database

*/

-- Delete existing database

IF EXISTS (SELECT name FROM sys.databases WHERE name = N'NASA')

DROP DATABASE NASA;

-- Create new database

CREATE DATABASE NASA

(

MAXSIZE = 2GB,

EDITION = 'STANDARD',

SERVICE_OBJECTIVE = 'S0'

);

Create Tables

I am going to create a table named [DBO].[HUBBLE_TELESCOPE] that will contain the final results of our work. The table name [DBO].[TEMP_IMAGE] will contained the temporarily staged images. The PowerShell Snippet below is almost the same as above. However; we changed the default database to NASA.

Please see step 3 below that creates the tables.

#

# Step 3 - Create tables

#

# Set connection string

[string]$ConnStr = 'Server=mssqltips16.database.windows.net;Database=NASA; `

Uid=JMINER;Pwd=MS#tips$2016;'

# Grab the script

[string]$SqlQry = Get-Content -Delimiter "`n" "create-tables.sql"

# Run the script

Exec-NonQuery-SqlDb -ConnStr $ConnStr -SqlQry $SqlQry

Listed below is the contents of the create-tables.sql

data file.

/*

Create a table to hold hubble pictures

*/

-- Delete existing table

IF EXISTS (SELECT * FROM sys.objects

WHERE object_id = OBJECT_ID(N'[DBO].[HUBBLE_TELESCOPE]') AND type in (N'U'))

DROP TABLE [DBO].[HUBBLE_TELESCOPE];

-- Add new table

CREATE TABLE [DBO].[HUBBLE_TELESCOPE]

(

[MY_ID] [SMALLINT] IDENTITY (1, 1) NOT NULL,

[MY_TITLE] [VARCHAR] (64) NULL,

[MY_FILE] [VARCHAR] (512) NULL,

[MY_IMAGE] [VARBINARY] (MAX) NULL

);

/*

Create a table to hold single picture

*/

-- Delete existing table

IF EXISTS (SELECT * FROM sys.objects

WHERE object_id = OBJECT_ID(N'[DBO].[TEMP_IMAGE]') AND type in (N'U'))

DROP TABLE [DBO].[TEMP_IMAGE];

-- Add new table

CREATE TABLE [DBO].[TEMP_IMAGE]

(

[MY_ID] [SMALLINT],

[MY_IMAGE] [VARBINARY] (MAX) NULL

);

Insert Non-Binary Data

The table named [DBO].[HUBBLE_TELESCOPE] contains integer and text data in addition to the binary images. This non-binary data needs to be loaded before processing the image files. Key columns in the table are [MY_FILE] which is the full name of each image file and [MY_ID] which is the surrogate key for each row of data.

Please see Step 4 below that inserts data.

#

# Step 4 - Insert non-binary data

#

# Set connection string

[string]$ConnStr = 'Server=mssqltips16.database.windows.net;Database=NASA; `

Uid=JMINER;Pwd=MS#tips$2016;'

# Grab the script

[string]$SqlQry = Get-Content -Delimiter "`n" "insert-nonbinary-data.sql"

# Run the script

Exec-NonQuery-SqlDb -ConnStr $ConnStr -SqlQry $SqlQry

Listed below is the contents of the insert-nonbinary-data.sql

data file.

/*

Add non-binary data to table

*/

-- Clear the table

TRUNCATE TABLE [DBO].[HUBBLE_TELESCOPE]

-- Image 1

INSERT INTO [DBO].[HUBBLE_TELESCOPE] ([MY_TITLE], [MY_FILE]) VALUES

('angel nebula', 'nasa-hubble-telescope-angel-nebula.bmp');

-- Image 2

INSERT INTO [DBO].[HUBBLE_TELESCOPE] ([MY_TITLE], [MY_FILE]) VALUES

('cats eye nebula', 'nasa-hubble-telescope-cats-eye-nebula.bmp');

-- Image 2

INSERT INTO [DBO].[HUBBLE_TELESCOPE] ([MY_TITLE], [MY_FILE]) VALUES

('crab nebula', 'nasa-hubble-telescope-crab-nebula.bmp');

-- Image 3

INSERT INTO [DBO].[HUBBLE_TELESCOPE] ([MY_TITLE], [MY_FILE]) VALUES

('lion nebula', 'nasa-hubble-telescope-lion-nebula.bmp');

-- Image 4

INSERT INTO [DBO].[HUBBLE_TELESCOPE] ([MY_TITLE], [MY_FILE]) VALUES

('veil nebula', 'nasa-hubble-telescope-veil-nebula.bmp');

Listing Non-Binary Data

So far, we have been blindly running PowerShell snippets without looking at the results. Let's SELECT all the data from the table named [DBO].[HUBBLE_TELESCOPE]. We will finally be calling the Get-DataSet-SqlDb cmdlet we defined above.

Please see Step 5 below that lists our five records.

#

# Step 5 - Show the table data

#

# Set connection string

[string]$ConnStr = 'Server=mssqltips16.database.windows.net;Database=NASA; `

Uid=JMINER;Pwd=MS#tips$2016;'

# Make TSQL stmt

[string]$SqlQry = "SELECT * FROM [DBO].[HUBBLE_TELESCOPE];"

# Show the data

Clear-Host

Get-DataSet-SqlDb -ConnStr $ConnStr -SqlQry $SqlQry

The output from the PowerShell snippet is listed below. It is important to note

that two result sets are being returned. The number of rows and the actual table

data represented as an object.

Insert Image Into Temp Table

The two custom PowerShell functions that were written before are generic in nature. They will work with any database.

However, to INSERT binary data to our [DBO].[TEMP_IMAGE] table will have to be very specific. The function below takes the connection string, row id and image data as input. It uses a parameterized query so that the binary data does not need to be passed in a string format. Parameters allow the developer to specify strongly typed data.

Please see the Insert-2-Temp-Table-SqlDb cmdlet below.

# Name: Insert-2-Temp-Table-SqlDb

# Purpose: Code very specific to images.

#

function Insert-2-Temp-Table-SqlDb {

[CmdletBinding()]

param(

[Parameter(Mandatory = $true)]

[String] $ConnStr,

[Parameter(Mandatory = $true)]

[int32] $MyId,

[Parameter(Mandatory = $true)]

[byte[]] $MyImage

)

# Create & open connection object

$Conn = New-Object -TypeName System.Data.SqlClient.SqlConnection

$Conn.ConnectionString = $ConnStr

$Conn.Open()

# Create command object

$Cmd = $Conn.CreateCommand()

$Cmd.CommandTimeout = 300

# TSQL & Parameters

$Cmd.CommandText = "INSERT DBO.TEMP_IMAGE VALUES (@MY_ID, @MY_IMAGE)"

$Cmd.Parameters.Add("@MY_ID", [System.Data.SqlDbType]"SMALLINT")

$Cmd.Parameters["@MY_ID"].Value = $MyId

$Cmd.Parameters.Add("@MY_IMAGE", [System.Data.SqlDbType]"VARBINARY", $MyImage.Length)

$Cmd.Parameters["@MY_IMAGE"].Value = $MyImage

# Execute action

$Result = $Cmd.ExecuteNonQuery()

# Close the connection

$Conn.Close()

# Return the result

return $Result

}

Hubble Images

The images below are bit maps located in the DATA directory. I found the images

doing a BING search for nebula pictures taken by the Hubble Telescope. If you have

not had the chance to look at these images, check out the NASA/ESA

webpage with

the top 100 hubble pictures.

Inserting Binary Data

Again, we are going to SELECT data from the [DBO].[HUBBLE_TELESCOPE] table. The data is sent down the pipeline to the Select-Object cmdlet so that only the MY_ID and MY_FILE columns are returned. There is a blank row in the result set. I am assuming it is the count. The Where-Object cmdlet is inserted on the end of the pipeline to remove this empty row. The final result is stored in the variable named $List.

A foreach loop is used to pick a row at a time and save the information into the $Item variable. We need to format the input parameters before calling our new custom cmdlet named Insert-2-Temp-Table-SqlDb.

The Get-Content cmdlet has an option to read in a whole file as a byte array. Last but not least, we are going to pipe the output from our call to Out-Null. This action tosses away the details about each parameter object that is created. In short, this data is not needed.

Please see step 6 below

that loads each image by row id into a temporary staging table.

#

# Step 6 - Load each image into temp table

#

# Set connection string

[string]$ConnStr = 'Server=mssqltips16.database.windows.net;Database=NASA; `

Uid=JMINER;Pwd=MS#tips$2016;'

# Grab the script

[string]$SqlQry = "SELECT * FROM [DBO].[HUBBLE_TELESCOPE];"

# Remove empty row (count), grab id & file name list

$List = Get-DataSet-SqlDb -ConnStr $ConnStr -SqlQry $SqlQry | `

Select-Object MY_ID, MY_FILE | Where-Object { $_.MY_ID -gt 0 }

# For each file, load the image to temp table

foreach ($Item in $List)

{

$MyId = $Item.MY_ID

$Path = (Get-Location).Path + '\data\' + $Item.MY_FILE

[byte[]]$MyImage = Get-Content $Path -Encoding byte

Insert-2-Temp-Table-SqlDb -ConnStr $ConnStr -MyId $MyId -MyImage $MyImage | Out-Null

}

Listing Binary Data

Again, we have been blindly running PowerShell snippets without looking at the results. Let's call the Get-DataSet-SqlDb cmdlet to list all the data in the table named [DBO].[TEMP_IMAGE].

Please see Step 7 below that lists our five records.

#

# Step 7 - Show the table data

#

# Set connection string

[string]$ConnStr = 'Server=mssqltips16.database.windows.net;Database=NASA; `

Uid=JMINER;Pwd=MS#tips$2016;'

# Make TSQL stmt

[string]$SqlQry = "SELECT [MY_ID], LEN([MY_IMAGE]) AS MY_BYTES, [MY_IMAGE] `

FROM [DBO].[TEMP_IMAGE];"

# Show the data

Clear-Host

Get-DataSet-SqlDb -ConnStr $ConnStr -SqlQry $SqlQry

The output from the PowerShell snippet is listed below. We can see that each

image is around 200K bytes in size. When displaying data returned from the Write-Output

cmdlet, the format is changed. In SQL Server, we have a hex pattern but what is

displayed in the PowerShell ISE is an array of integers.

Before Adding Images

At this point, we can solve the business problem with one more call to Exec-NonQuery-SqlDb to update the final table from the temp table using the new COMPRESS function applied to the VARBINARY field. I am going to break out SQL Server Management Studio since I want to show you what is internally going on with the table.

The ALTER TABLE statement with the REBUILD PARTITION clause can be used to rebuild our final table with different compression levels (NONE, ROW, PAGE). Like I said at the beginning of the article, the database engine does not apply the compression to the LOB pages. I will leave testing this fact for you to try.

I am going to show three different ways that we can look at what is going on with the data in our table.

- The sp_spaceused will return the total number of data and index pages as well as bytes used and bytes reserved.

- We can return the size of the MY_IMAGE column using the LEN function.

- The sys.dm_db_index_physical_stats dynamic

management function returns detailed storage information.

-- Adjust table compression (NONE, ROW, PAGE)

ALTER TABLE [dbo].[HUBBLE_TELESCOPE]

REBUILD PARTITION = ALL

WITH (DATA_COMPRESSION = NONE);

-- Set image field to null

UPDATE A

SET A.MY_IMAGE = NULL

FROM [DBO].[HUBBLE_TELESCOPE] A;

-- Get table space used

EXEC sp_spaceused '[dbo].[HUBBLE_TELESCOPE]';

-- Show image field size

SELECT [MY_TITLE], LEN([MY_IMAGE]) MY_BYTES

FROM [dbo].[HUBBLE_TELESCOPE];

-- Physical pages used

SELECT

database_id,

hobt_id,

index_type_desc,

alloc_unit_type_desc,

record_count,

avg_record_size_in_bytes

FROM sys.dm_db_index_physical_stats

(DB_ID(N'NASA'), OBJECT_ID(N'[dbo].[HUBBLE_TELESCOPE]'), NULL, NULL , 'DETAILED');

The output below is from running the above TSQL in SSMS connected to our Azure

SQL database. Basically we have 5 records stored in one data page. All information

is stored IN_ROW_DATA pages.

Adding Uncompressed Images

The TSQL statement below will update the final table with uncompressed images from the temp table.

-- Update final table w/ uncompressed images

UPDATE

A

SET

A.MY_IMAGE = B.MY_IMAGE

FROM

[DBO].[HUBBLE_TELESCOPE] A JOIN

[DBO].[TEMP_IMAGE] B ON A.MY_ID = B.MY_ID;

The output below shows the LOB_DATA pages are now being used. About 1.2 MB of storage is being used. The total number of bytes being used by the image column is 1,135,170.

Adding Compressed Images

The TSQL statement below will update the final table with compressed images from the temp table. If we add a Step 8 to the PowerShell script calling the Exec-NonQuery-SqlDb cmdlet with this TSQL, we will have a complete solution. I'll leave this exercise for you to complete.

-- Update final table w/ compressed images

UPDATE

A

SET

A.MY_IMAGE = COMPRESS(B.MY_IMAGE)

FROM

[DBO].[HUBBLE_TELESCOPE] A JOIN

[DBO].[TEMP_IMAGE] B ON A.MY_ID = B.MY_ID;

The output below shows the LOB_DATA pages are being used again. However, the number and size of pages are smaller. A little over 860 KB of storage is being used. The total number of bytes being used by the image column is 780,146. If we calculate percent change, we are now saving 31.27 percent on storage size.

Why use PowerShell?

If I was coding for an on-premises solution to load binary images into a SQL Server, I would use the OPENROWSET function with the SINGLE_BLOB parameter and the CODEPAGE equal to RAW. If you look at the books on line article, this functionality is not supported in Azure SQL Database. The bcp utility program was built to load rows of data quickly into storage, not to load a single binary file. Therefore, this utility can not help us.

This problem could have been solved with SSIS, but we are moving away from the tools that are handy for the DBA.

One more reason why I introduced the SqlClient library

is the fact that Azure Automation can be done with

RunBooks that are coded in PowerShell.

Summary

The new COMPRESS() function can be used to save database space for columns that contain Large object (LOB) data types.

Today, we tried compressing images and ended up with about 35 to 40 percent in savings on storage space. Just like any compress technique, the g-zip algorithm works better on some data types than others. For instance, a VARCHAR(MAX) column that contains the text of "War and Peace" will probably have a higher compression ratio.

In addition to this new function, we were introduced to some custom cmdlets that can be used to access and manipulate data in SQL Server. If you change the namespace from SqlClient to OleDb, you will now be able to play with any type of database that you have a Ole DB driver for.

In conclusion, next time we will talk about saving

our compressed images from our database to a local file system using the DECOMPRESS()

function.

Next Steps

- Check out these other compression related tips.

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips