By: Jeffrey Yao | Comments (1) | Related: More > Professional Development Career

Problem

To be a DBA, one has to be stress and pressure proof to deal with daily multiple tasks, flooding alerts, on-call standbys or some midnight wake-up calls. Without some principles, one can easily get drown in troubled water. As a DBA for many years, do you have any thoughts about how to be a happy DBA?

Solution

There are tons of DBA best practices articles / blogs online about how to be good DBAs, all we need is to google them out. But almost all of these best practices focus on some technical or communication skills.

What I try to outline in this tip are some high-level rules about how to practice as a DBA, these rules have helped me in reducing the work fatigue/stress as a DBA, and I hope they will be useful to my fellow DBAs.

Rule 1 – An alert not handled is an alert not needed

In all fairness, DBA work can be stressful and tiresome. To make our career life enjoyable, we need to reduce all unnecessary distractions in our work, even if the distraction is just a few seconds long.

Here is a real case, I have seen many alerts set up to monitor various aspects of SQL Server systems, but not all alerts need to be handled immediately. For example, a scheduled job (run every 30 min) failed during the weekend, and thus sent alert upon each failure. Since nobody is required to take care of it in weekend as per business rule, we are flooded with the alerts. The consequence is that this type of alerts may cause alert fatigue and lead you to miss a critical alert that needs immediate attention. For those no-need-to-respond alerts, at the minimum, we still need to spend a few seconds to scan and then delete.

All alerts should be managed in a way that we can customize their frequency, recipient and silence mode. For example, if an alert is not addressed after [X] times, the recipient will include higher level staff or if an alert occurs in the weekend, and we know such alert is not of primary concern, the alert can be muted and thus not sent, while the failures can be reported in a summary report on Monday morning.

The point is: life is already busy, one second saved is one second free.

Rule 2 – A fail-safe way is the right way

DBAs usually set up various maintenance and monitoring jobs according to business requirements. But often, all we do is to set up a notification when the job fails. This may be ok most of the time, but it would be better if we could put some thought into the design of the job to consider what mechanism should we invoke once the job fails, a few points can be:

- Can we let the job retry after a failure, if so, retry how many times before we give up?

- Can we ignore the current step and continue and later do a retry on this failed step individually? For example, I am doing a log backup of 10 databases in sequence, if for whatever reason, for database [D5], the log backup fails, I should be able to mark [D5] as log backup not done, and continue for the other databases, such as [D6], [D7], until [D10], and later via another job to do the log backup for [D5] again.

- If the job fails, can I notify a central monitoring process and let the monitoring process add an extra schedule to the job to run [X] minutes later? Or the central process can re-run the failed job once CPU or number of user sessions is low within the next [Y] hours? For example, when we do index maintenance, in SQL Server 2014+, during an index rebuild, if it is involved in a block, we can allow the index maintenance process to be auto killed, but after it is killed, we want the job to rerun after 10 minutes or after a specific environment condition is mature.

The point here is: if a task’s design is failure resilient, we will be worry-free about failures.

Rule 3 – Code implementation without production monitoring

For this rule, I will start with two real cases experienced.

The first case happened when we were still using SQL Server 2000. One DBA implemented an index maintenance job to be run at mid-night, this was actually part of 911 system (dispatch for ambulance), everything had been tested and looked OK. The problem is in the test environment, we did not pay attention to front-end application. So what happened was when the index maintenance started to run, after about 1 hour, it started to rebuild the largest table, and all of sudden, both CPU and I/O usage topped to the maximum, and the front-end application simply could not connect to the back-end database (totally timed out). But this was 911 system, within 5 min, all team leaders/managers and on-call people were wakened up to address the emergency. The chaos was beyond description.

The 2nd case was kind of a comedy. One DBA implemented a monitoring script that when an error occurred, it sent out an email. He set it up as a job to run every hour, and after implementation at around 10:30pm, he closed his laptop and went to sleep, and at 11:00pm when the job started, the script has a bug and was in a dead loop, and more than that the alert email in the script was sent out at about 30+ emails/second, and within 1 hour, the alert emails saturated the Exchange server, and also severely impacted those receiving accounts. It really did not do any harm to SQL Server environment, but it did crumble the email system. The cleanup of these emails to get the Exchange server back to order took about 1 day.

What I learned from the incidents is that I always stay to see my implementation run once either via smoking test or real run (like a scheduled job) before I dare to go to sleep.

The point is: rare incidents do happen; close monitoring will ensure negative impacts be minimized at an early stage.

Rule 4 – A solution out of SQL Server could be a better solution

When we DBAs develop our administration work, we naturally think of solutions in T-SQL. There is nothing wrong here, it is just such solutions usually compromise something somewhere. For example, I want to collect database information (let’s make it simple, just database name and size) across multiple servers from a central location, if using pure T-SQL, I may need to create multiple linked servers.

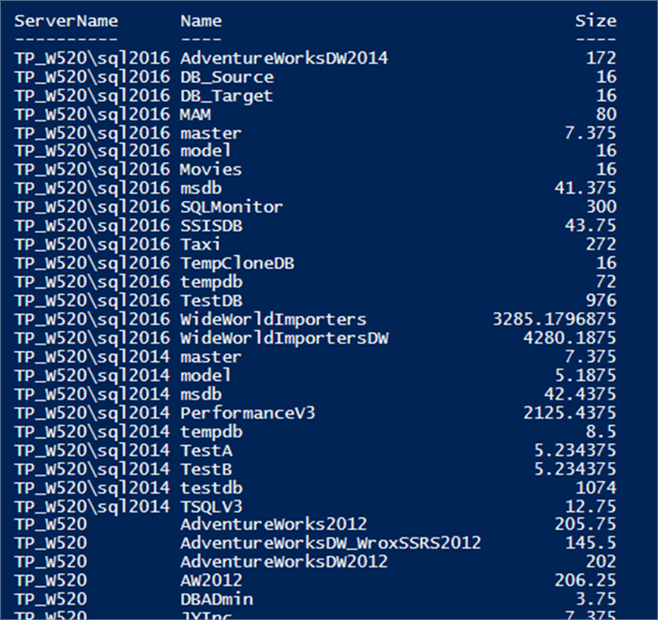

In such case, if we can use PowerShell + SMO, it just needs a few lines of code. The following PS code snippet will collect all databases in three SQL instances on my laptop.

import-module sqlserver # assume I have installed SQL Server 2016 PS module #I have 3 sql instances installed, one is default, and two are named instances, i.e. sql2016 and sql2014 '.\sql2016', '.\sql2014', '.' | % { $svr = new-object Microsoft.sqlserver.management.smo.server $_; $svr.databases | select @{l='ServerName'; e={$svr.name}}, name, size } | ft -auto

If I run it, I will get the following results:

The point is: There usually lies a better solution outside of our traditional comfort zone. Once we find it, we will be rewarded hugely for productivity and efficiency, i.e. smart work vs hard work.

Rule 5 – 80/20 rule is a happy rule

As a DBA, we may have tens of small tasks to do daily. Each task can be done either quickly (yet good enough) or perfectly.

Let’s say if you are asked to add a new login account (a windows domain account). I can come up with three ways to do this:

- Use SSMS GUI interface (fair way) to manually add the login account

- Good way (better than GUI way)

use master create login [Domain\Account] from windows

- Better way by doing some check to prevent error

use master if not exists (select * from sys.server_principals where name ='Domain\Account') create login [Domain\Account] from windows; else raiserror ('The login [%s] already exists', 16, 1, 'Domain\Account');

- Best way by checking whether [Domain\Account] is normal and exists in AD directory (via PowerShell) before we create it.

#requires -version 4.0 import-module ActiveDirectory; import-module sqlserver; #check whether [Domain\Account] exists [bool]$found = $false; $user = 'account'; try { # check if the account is an user get-ADUser -identity $user -Server '<your_AD_DomainController>' | out-null $found = $true; } catch { try { # check if the account is a group get-ADGroup -identity $user -Server '<AD_DomainController>' | out-null $found = $true; } catch { $found = $false; } } if ($found) { [string]$qry = @" use master if not exists (select * from sys.server_principals where name ='Domain\Account') create login [Domain\Account] from windows; else raiserror ('The login [%s] already exists', 16, 1, 'Domain\Account'); "@ invoke-sqlcmd -server "<target_server>" -Database master -query $qry; } else { write-host "[$user] is not a valid domain account"; }

To me, method 3 is good enough, and it is usually a senior DBA’s natural choice. While method 4, though it is more robust, it takes too much time and the added-value may not be worth the cost.

Such scenario can be applied to DBA’s basic work, like backup and restore, do you need to check the free disk space before doing backup / restore? Or check whether the subfolder exists before you do backup/restore? Or whether check backup file validity before restore?

The point is: No need to over-do our work as the net value declines quickly after a specific point.

Rule 6 – Working with those better than yourself

Here “better than yourself” means those people who are more experienced or more knowledgeable in your technical field. Such a work opportunity is priceless, it will help you grow much faster than if you try to learn everything by yourself.

I remember long time ago, in SQL Server 2000 days, there was a bug in SSMS, that is in you block comment with /* */ if you have a “go” statement, SSMS will report error, complaining something like ‘syntax error’ and it could not pinpoint the correct line either. Like the following snippet will report an error, because there is “go” inside the block comment

use master /* print 'hello world' go */ print 'good morning'

I remember when I was working on the lengthy script, I kept on getting this error and I could not figure it out for almost a whole day until my then team leader (a senior DBA) came to my help and he just reviewed code once and then deleted that “go” line and suddenly everything went well.

Since then I know that working with a more knowledgeable colleague is not only pleasant, but also valuable. In my later years when I become more mature in my DBA work, I find it is very precious to work with colleagues who can challenge your ideas with better solutions or discuss with you to generate super cool ideas. That makes my daily work both interesting and rewarding, and no doubt generating huge happiness and satisfaction.

The point is: you grow quickly in a strong team and you will rarely feel the pain and helplessness of long time trial and failure before getting something done.

Summary

In this tip, I have discussed a few rules to be a happy DBA. The bottom line is that trying to find ways to make our work easy, and not burn out ourselves. At the end of the day, we should avoid that work is a burden to us instead of something to provide us happiness and sense of achievement.

Next Steps

I am pretty sure many DBAs have their own ideas about how to be happy DBAs, so if you do, please share with our community.

Of course, before you can be a happy DBA, the first step is to be a good DBA (i.e. good at what you are doing as a DBA), in this regard, you may find the following links useful:

- Starting a SQL Server DBA Job

- How to Grow Your SQL Server DBA Career

- Enhancing your SQL Server Career

About the author

Jeffrey Yao is a senior SQL Server consultant, striving to automate DBA work as much as possible to have more time for family, life and more automation.

Jeffrey Yao is a senior SQL Server consultant, striving to automate DBA work as much as possible to have more time for family, life and more automation.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips