By: Tim Smith | Comments | Related: More > Database Administration

Problem

In this tip, we look beyond the simple logging of data insert, update and delete (DML) scripts into the next step of what we may want if we experience a problem. If a developer submits a script to our team for review, what might we want to look for in the script? In addition, what improvements can we suggest (or make) to scripts in the case of when a failure occurs and we need to roll them back. Finally, we'll look at an architectural consideration for our data that's especially useful in priority configuration data or data that cannot experience any downtime (or elimination of data), in situations where a delete script would be too costly to revert.

Solution

Suppose that a developer submits a DML script to our DBA team with the following T-SQL:

DELETE FROM tblOurBigTable

From this script, consider the following questions:

- How many records are being removed from the table?

- If it turns out this script was written incorrectly, how would we reverse it?

- If we need to clear absolutely everything from the table, why are we using a delete over a truncate (consider the log impact, especially if this is a large table)?

I recommend the best practice of requiring all data scripts to involve a validation check. It requires some extra effort, but it reduces the probability of errors by raising a developer's consciousness about what the data script is doing and it clarifies what should be removed. In the above example of the delete statement, a check could be selecting the count of the table, which after a delete should be zero. It could also be a hard count of how many records should be removed. If the developer simply forgot the where clause in the delete statement (and I've seen this too), the check may have stopped this because the check wouldn't be checking if the table is empty, but whether a transaction count was hit, or whether data didn't exist or still existed (in the latter case, the check may fail if one is simply looking for "if data still exist" since everything being removed would pass that validation).

In the below code snippet, we create a validation test by saving the count of records that should be updated to a variable, running our data change (update), and then checking that the correct rows are updated before committing the transaction.

---- VALIDATION TEST: DECLARE @countUpdate INT SELECT @countUpdate = COUNT(Id) FROM tbExecScript WHERE Id > 2 BEGIN TRAN UPDATE tbExecScript SET DatabaseName = 'TestDB' WHERE Id > 2 ---- VALIDATION CONFIRMATION: IF @@ROWCOUNT <> @countUpdate BEGIN ROLLBACK TRAN SELECT 'Script failed; an erroneous amount of records were impacted.' OutputMessage END ELSE BEGIN COMMIT TRAN SELECT 'Script successfully executed.' OutputMessage END

While I show the example with a dynamic check, in some cases a hard count may be superior - such as:

BEGIN TRAN UPDATE tbExecScript SET DatabaseName = 'TestDB' WHERE Id > 2 ---- VALIDATION CONFIRMATION: IF @@ROWCOUNT <> 7 BEGIN ROLLBACK TRAN SELECT 'Script failed; an erroneous amount of records were impacted.' OutputMessage END ELSE BEGIN COMMIT TRAN SELECT 'Script successfully executed.' OutputMessage END

In this case, if new records were added to the table from the time we wrote this script to where more than 7 records would be updated, the script would fail. On failure, the developer might then have us run a script with the where clause of WHERE Id BETWEEN 3 AND 9, or another derivative. We should also consider other best practices with this:

- With few exceptions, we should isolate one data operation at a time. The exception is if an update and insert are required together or a combination are required together (see latter example). In these cases, we may want to have checks for both operations before committing the transaction.

- While I use a dynamic number for a check in the above example, in most production environments, I prefer the hard count - the exact number of records that should be either removed or updated (like 20). If a developer tells me, "I'm not sure how many records will be removed [or updated]" I will deny the script until its known. The reason is that even with a where clause, I've seen too many cases where there were "more" records in production than in the development environment and this caused a problem when this was discovered, after something failed. This is less of a problem with inserts and truncates than updates or deletes.

- When we consideration validation, we want to validate what operations should have happened. Some validation may pass, even though the transaction caused a problem, such as a validation check if records exist after a delete when we didn't want to delete everything. This is one example why hard counts offer advantages in some cases.

- All data scripts should be investigated for performance as well. In the first example, if we truly can wipe the entire table, we should press why a delete over truncate is being used. In many cases, developers may not be aware they have other options with T-SQL. This applies more to deletes and updates than inserts, unless we're inserting huge batches on tables with many indexes.

We also have other alternatives to this, as some production environments cannot risk any downtime (or lengthy downtime) with data changes. In these environments, we may want to consider using a combination of inserts and updates without ever touching deletes (except in rare situations where we archive records - if this is applicable).

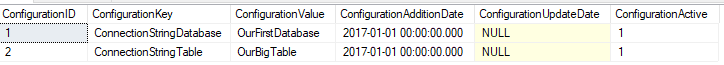

In the below example, we'll use a configuration table as an example where we never want to remove records, but set records to either active or inactive in statements. The table is designed with the intent to set records to active after an insert (the column ConfigurationActive). When we're ready to set old records to inactive and add two records, are update statement will update both the update time and active columns. The below records will be set to inactive with new records automatically set to active.

/*

---- Example table with two data values:

CREATE TABLE tblExampleConfigurationTable(

ConfigurationID INT IDENTITY(1,1),

ConfigurationKey VARCHAR(100),

ConfigurationValue VARCHAR(100),

ConfigurationAdditionDate DATETIME DEFAULT GETDATE(),

ConfigurationUpdateDate DATETIME,

ConfigurationActive BIT DEFAULT 1

)

INSERT INTO tblExampleConfigurationTable (ConfigurationKey,ConfigurationValue)

VALUES ('ConnectionStringDatabase','OurFirstDatabase')

, ('ConnectionStringTable','OurBigTable')

*/

SELECT *

FROM tblExampleConfigurationTable

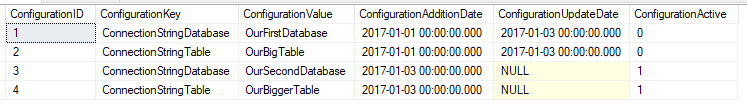

---- RUN A NEW TRANSACTION:

BEGIN TRAN

UPDATE tblExampleConfigurationTable

SET ConfigurationActive = 0, ConfigurationUpdateDate = GETDATE()

WHERE ConfigurationKey IN ('ConnectionStringDatabase','ConnectionStringTable')

INSERT INTO tblExampleConfigurationTable (ConfigurationKey,ConfigurationValue)

VALUES ('ConnectionStringDatabase','OurSecondDatabase'), ('ConnectionStringTable','OurBiggerTable')

COMMIT TRAN

SELECT *

FROM tblExampleConfigurationTable

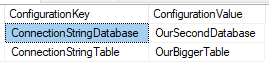

While this is what's happening on the back-end, on the front-end our application would only be running this statement:

SELECT ConfigurationKey, Configurationvalue FROM tblExampleConfigurationTable WHERE ConfigurationActive = 1

While this design offers many advantages by reducing outages or the length of outages since any reversal requires much less effort, as no record is ever removed, keep in mind the following architectural points:

- All of our select statements must include a minimum where clause of active records only, unless we're looking for inactive records. This means we'll want to index all of our active columns for filtering.

- With larger data sets where we use this model, we must consider scale and archiving data from the beginning, or this could become costly. How would we partition the data? When will we archive inactive records, if ever? Without knowing this upfront and having a design in place, we may end up with one table with billions of records in a short period of time.

- We must be willing to pay for size, as without any removal of data our environment will grow. The upside is that we have few, if any, outages (or incredibly short outages) while the downside is that we pay more for storage than in an environment where we're removing data.

Next Steps

- If the purpose of logging success or failure with a script involves whether to solve the next problem, with data scripts in many cases, we can create the next step and use that as a validator for the script.

- In environments where no downtime is allowed, every script should be written with a validation before adding, removing or updating data. In general, this is a best practice for all environments.

- With configuration data where downtime may cause an entire application to fail, avoid using delete, as reversing it can be too costly. With other data that cannot allow for downtime, apply the ActiveRecord architectural approach that eliminates deletes by using inserts and updates.

About the author

Tim Smith works as a DBA and developer and also teaches Automating ETL on Udemy.

Tim Smith works as a DBA and developer and also teaches Automating ETL on Udemy.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips