By: John Miner | Comments | Related: > Azure

Problem

The concept of a network of smart devices was discussed as early as 1982, with a modified Coke vending machine at Carnegie Mellon University becoming the first Internet-connected appliance. This machine was able to report its inventory and whether newly loaded drinks were cold or not (Source - WikiPedia). The use of an application specific integrated circuit (ASIC) and sensors made this vision possible at that time.

Roll forward thirty years, the "internet of things" (IOT) idea has become common place with home products such as Google Nest thermostat control and Sense electricity monitor. The electronic hobbyist can purchase IOT prototyping kits such as Arduino Uno, Raspberry PI and Intel Edison for less than seventy five dollars. This industry is due to grow in the future with new ways to apply this technology.

Today, let’s revisit the first use case of a smart device using a Coca-Cola vending machine as our target appliance.

How can we simulate soda vending and send telemetry events to the Azure cloud?

Solution

Microsoft introduced the event hub service on November 5, 2014. Since then, many companies have been collecting on-premises telemetry and storing the messages (data) in the Azure Cloud. The deployment and configuration of the event hub service will be skipped in this article. It will be assumed that an event hub, that can receive secured HTTPS packets, exists in your subscription.

Business Problem

Our boss has asked us to investigate connecting the machines owned by Collegiate Vending, Inc. to the cloud to capture soda product sales. The ultimate goal is to save the messages in an Azure SQL database for analysis and reporting. Our fictitious company has a contract to distribute Coca-Cola products and vending machines to various educational institutions in the United States of America. Our job is to create a test program that sends simulated soda machine telemetry to an event hub service.

Historical Vending

The first Coca-Cola vending machine was invented in 1929 by the Glascock Brothers Manufacturing Co. It was an ice box that held 72 x 6-ounce bottles sold for 5 cents, which was payable to the clerk. Post World War II Coke vending machines were the first coin-operated machines. They operated by depositing a nickel, opening the door, and retrieving a soda bottle. Over the decades, the coke vending machines evolved to dispense change and allow for cans instead of bottles.

As a kid, I remember going to the gas station with my dad and using vending machine similar to the one shown above. Of course, the cost of Coca-Cola in 1975 was 45 cents. A 12 oz glass bottle was dispensed by opening the door and pulling out selection. The front of the machine even had a bottle opener for the occasion.

Modern Vending

There are several vending machine companies doing business in America today. Many of these companies have a colorful history. The Dixie-Narco company was initially located in West Virginia as a Maytag subsidiary. This company was involved in a legal dispute (1991) with Donald Trump before a Federal Bankruptcy Court related to machines at the Taj Mahal Casino. Today, the company is known as Crane Merchandising Systems and produces the BevMax line of machines.

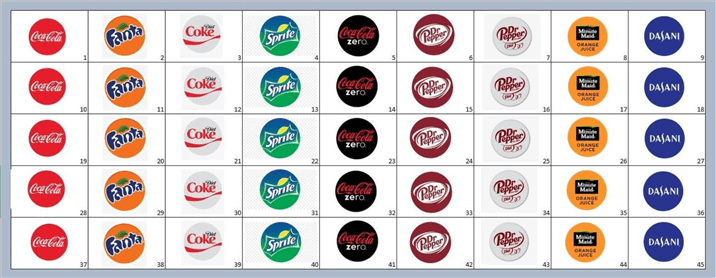

The BevMax4 machine shown below has 5 rows and 9 columns allowing for 45 different products to be displayed at a given time. It has the capacity to chill 360 x 20 oz bottles or 405 x 12 oz cans. All modern features such as key pad, money reader, and credit card scanner are bundled into this one machine.

In short, the vending machine today has a lot of electronic pieces compared to the historical machines that were mechanical in nature.

Educational Data

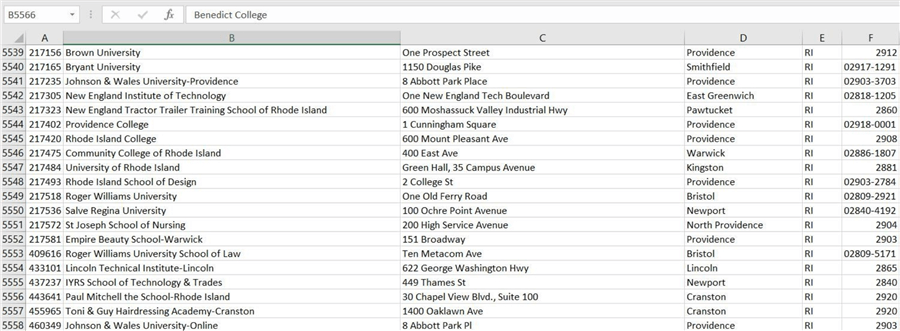

To simulate telemetry messages, we need to discover and define data that supports our business problem. First, we need to define the location of each Coca-Cola machine. The National Center of Educational Statistics keeps track of all American institutions. There are over 6800 entries in the data set that I downloaded from the web site.

The above image shows all the institutions in Rhode Island. At the top of the list of schools is Brown University. I am extremely proud of my daughter who will be attending this college in the Fall of 2020. It is interesting to note that not all institutions offer a four-year degree. Some fall into the area of trade schools.

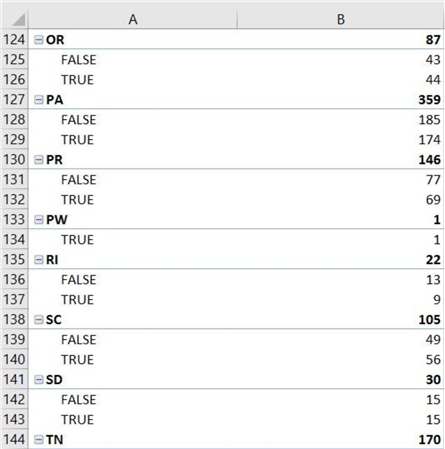

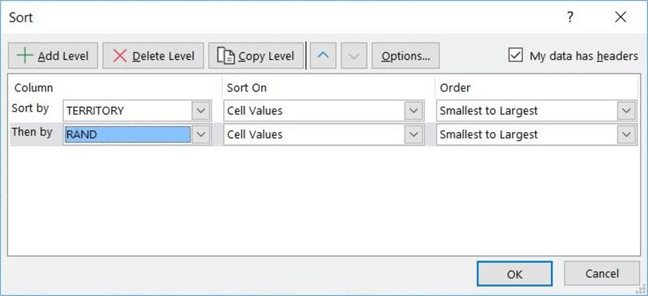

I decided to allocate fifty percent of the market to Collegiate Vending, Inc. Thus, the RAND function can be used in Microsoft Excel to generate a number from 0 to 1. Make sure you copy the data as values to another column. Every time a sheet is refreshed, the function generates a new random number.

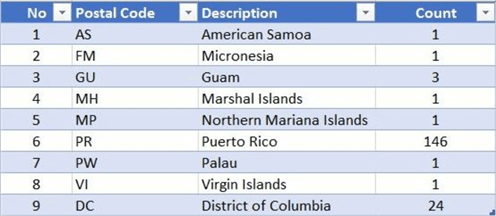

Pivot tables are a great way to explore the data at a summary level. The image above shows 22 institutions in Rhode Island. We are going to pick 9 of them as clients in our simulation. One problem with using unknown data sets is the possible occurrence of bad data. We can see that the state abbreviations of PR and PW are included in our data set.

Should these rows be included in our selection?

If we take a closer look at the data set, we can see that the abbreviations are related to territories and islands owned by the United States. The enclosed link was handy in translating the postal code to an appropriate description. I was quite surprised that Puerto Rico has more institutions for learning than Rhode Island, my home state.

However, our fictitious company only does business in 50 states. The RAND column can be used to select 50 percent market share. We are going to include the District of Columbia by marking the TERRITORY column as FALSE. Therefore, we will need to sort and filter the data to extract just the records that we want.

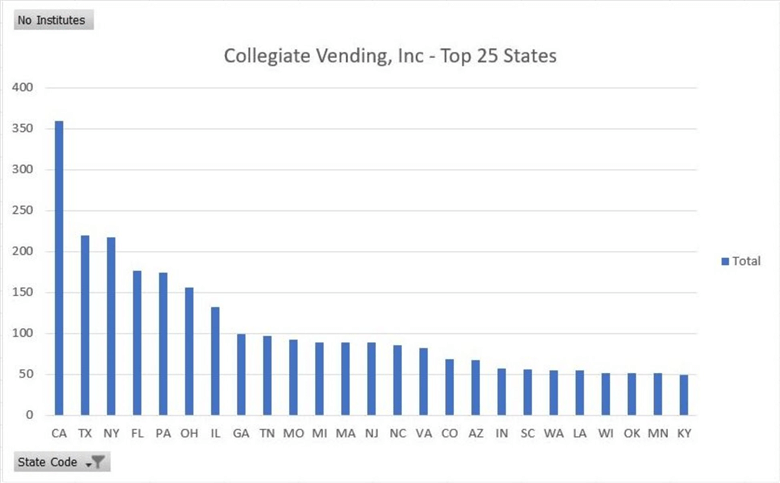

Our resulting data set contains 3,397 records for use in our simulation. A chart of the institutions by state shows that California, Texas and New York are the top locations for our vending machines.

We now need to convert this data into something that can be identified as a machine.

Machine Data

We are going to make some assumptions about the data to make preparation easier. The educational data set contains an institution number which is unique. We are going to assume that only one machine exists at an educational site and identified by this number. Obviously, this is not true in the real world.

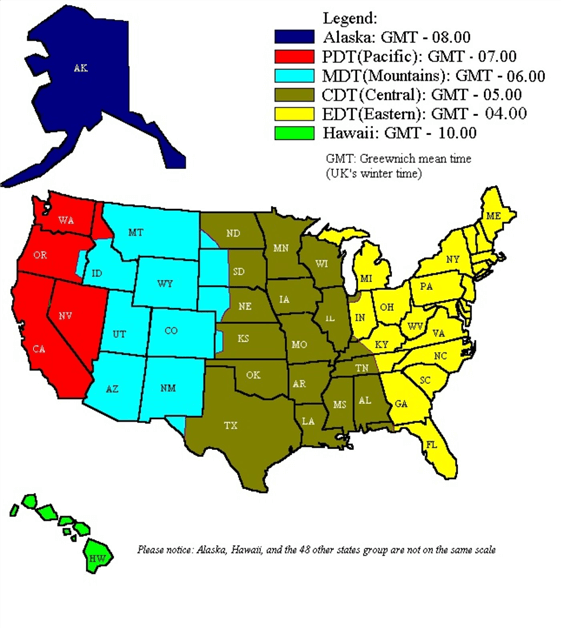

Also, it is import to understand the local time versus the Greenwich Mean Time (GMT). In the real world, vending sales are dependent upon time of day. For instance, more sales of soda might happen during lunch and dinner. However, we do not sell as much soda in the middle of the night.

This means the data file that describes the machine should have a machine id, state code and UTC offset. The below image from Wikipedia shows time zones per state. This is a complex problem since some states are split between two time zones. To make things worse, some states do not honor daylight savings time. Thus, not all people subtract an hour from the clock in winter time.

For simplicity, we are going to assume everything is in daylight savings time. Also, a state that has two time zones will be assigned the time zone with the smallest offset. Thus, Tennessee will be considered in the Eastern, not Central time zone.

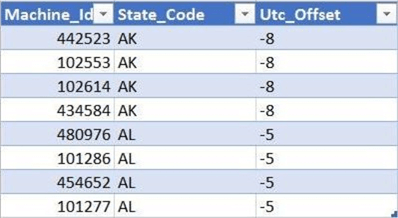

The final machine data file is enclosed in JSON format. A sampling of data in Microsoft Excel format is shown below.

Vending Data

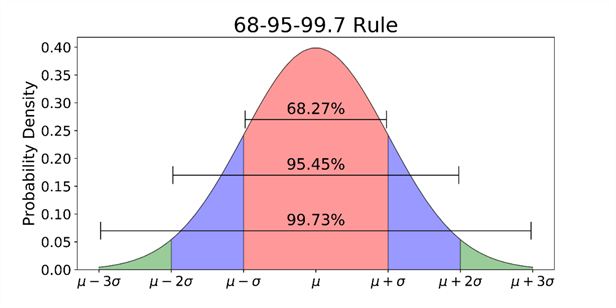

Our simulation must take in account the fact that different amount of sales will happen at different hours. If we had a ton of historical data, we could figure out the average vends per hour per time zone. Hopefully, plotting the data points would reveal a bell curve in which we could use standard deviation to figure out the range in which 95 percent of the data would fall within.

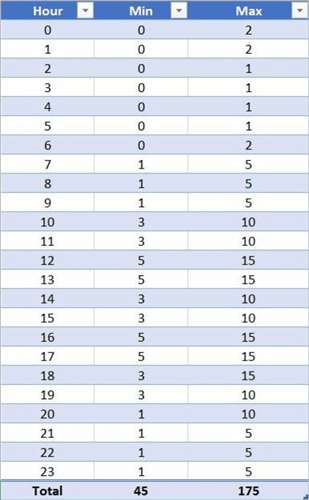

In reality, we are just making up some telemetry to capture with our Azure Event Hub. The table below shows that a vending machine will sell between 45 and 175 sodas. The minimum total is always guaranteed and maximum total is randomly achieved.

For example, we are guaranteed to have 5 sodas sold at 5 pm. But we do not know what products are being sold. This is important information if you are trying to figure out soda deliveries.

The Coca-Cola bottling company offers a variety of products including Coke, Dr. Pepper, Sprite, Fanta, Minute Maid and Dasani. In the real world, the products could be vended in any of the 45 slots. However, we are going to use the above product placement chart to make life easy.

There are two algorithms that we could use when selecting sodas using this chart: selection with replacement or selection without replacement. We are going to use the first algorithm since all 5 sales could have come from the same location (slot).

To recap, a vending data file will be used in the process of randomly picking the number of total sodas sold per hour. The slots data file will be used to assign which products are vended at a given location. The sum of all the vends will equal the total sodas per hour. The data files are enclosed in JSON format.

Architectural Diagram

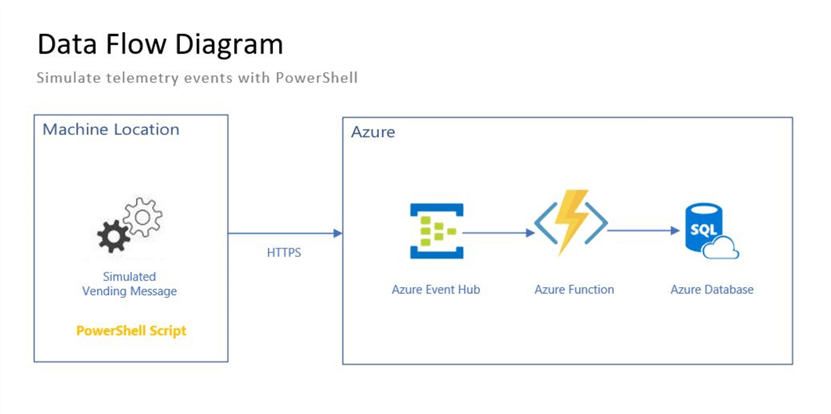

The data flow diagram below shows Azure objects used in the system design. The Power Shell script will generate 45 messages per the 3,397 machines per every hour. That means 152,865 messages are sent to the event hub per hour. There is a hierarchal relationship between event hub name space and event hub name. The parent (name space) can have multiple children (event hubs).

I chose to label the name space as ehns4tips2020and the event hub as ehub4tips2020. This service can use a shared access signature (SAS) instead of an access control list (ACL). The connection credential consists of a policy name and access key. I will use the default policy called RootManageSharedAccessKey and the primary access key. One way to hide this sensitive information is to store the data in a configuration file. The configuration file has the same name as the PowerShell script but has an extension of JSON.

Please note, the transmission of the messages from on premise to the cloud will be using HTTPS. There are many different ways to process the data once it is received by the event hub. The roadmap above depicts an Azure Function reading from the hub and writing to a SQL database.

PowerShell Script

The table below depicts the algorithm steps implemented in the PowerShell script named simulate-telemetry.ps1.

| Step No | Description |

|---|---|

| 1.0 | Set working directory. |

| 2.0 | Convert config file to PowerShell object. |

| 3.0 | Convert machine file to PowerShell object. |

| 4.0 | Convert vending pattern file to PowerShell object. |

| 5.0 | Convert slots file to PowerShell object. |

| 6.0 | For each machine, do the following. |

| 6.1.0 | Convert time to local hour. |

| 6.2.0 | Get vending grid array. |

| 6.3.0 | For each slot, create a JSON message. |

| 6.3.1 | Send message to event hub. |

The first step in the PowerShell script it to set the working directory path so that the various JSON files can be loaded into memory without using a full path.

# # Start exec script # # Set the path Set-Location "C:\Event Hub\" # Logging Write-Host "Starting vending simulator script at $(Get-Date -Format u)."

I like adding output messaging to the PowerShell script. This allows me to keep track of the execution path and makes debugging of the script easier.

The snippet below is a design pattern which is used to load the 4 JSON files as PowerShell objects.

# # Read x file # # Read file $config = Get-Content -Raw -Path ".\<insert file name>.json" | ConvertFrom-Json # Logging Write-Host "Convert json <insert file name> file to PS Object."

Having a cmdlet that creates an integer vending grid which represents sales per slot for a given min and max random value will be useful. The custom cmdlet named Get-Vending-Grid implements that task.

#

# Get-Vending-Grid() - For a given pattern, create a vending grid

#

function Get-Vending-Grid {

[CmdletBinding()]

param(

[Parameter(Mandatory = $true)]

[Int] $minval,

[Parameter(Mandatory = $true)]

[Int] $maxval

)

# Total vends this hour

$total = Get-Random -Minimum $minval -Maximum $maxval;

# Empty array of 0's

$array = @(0) * 45

# Make a sale

for ($i = 1; $i -le $total; $i++)

{

$idx = Get-Random -Minimum 1 -Maximum 45;

$array[$idx-1] += 1;

}

# Return object

Return $array;

}

The code to send a message to an event hub started with a forum response by Dominic Betts @ Microsoft in 2015. I packaged up the code into a nice re-usable cmdlet called Post-EventHub-Message. The following parameters are passed to the function: name space, hub name, key name (policy name), key value (SAS value), and JSON message.

The custom script creates a correctly formatted header and calls the event hub service using the Invoke-RestMethod cmdlet. Please see enclosed code for details of the completed solution.

Execution Times

The first execution of the PowerShell script took almost 3 hours to execute. That is because 3,397 machines sent 45 messages to the event hub. That is over 152 K total messages send to the service. However, we want have the script execute on an hourly schedule. Therefore, the script needs to complete in less than one hour.

Starting vending simulator script at 2019-12-24 07:01:57Z.

Ending vending simulator script at 2019-12-24 10:00:01Z.

There are two ways to fix solve this business problem.

The first way is to use the divide and conquer design. If one script can’t send X messages in one hour, can M scripts do it in parallel? We might run into a network saturation problem if we try to implement this solution. If we max out our network card bandwidth but still not meet the total time window, then we might have to execute this script on a cluster of machines C machines each executing N programs.

The second way is to send more information in each message to the service which reduces the total number of REST API calls. Thus, each machine could send an JSON array of 45 messages instead of 1 message. A fraction of the calls to the event hub service are needed to transfer the same amount of data. This revised algorithm moves the task 6.3.1 to task 6.4.0 which is outside the slots loop. Now, it takes less than 5 minutes to send all the telemetry for the vending machines.

Starting vending simulator script at 2019-12-26 17:06:26Z.

Ending vending simulator script at 2019-12-26 17:11:00Z.

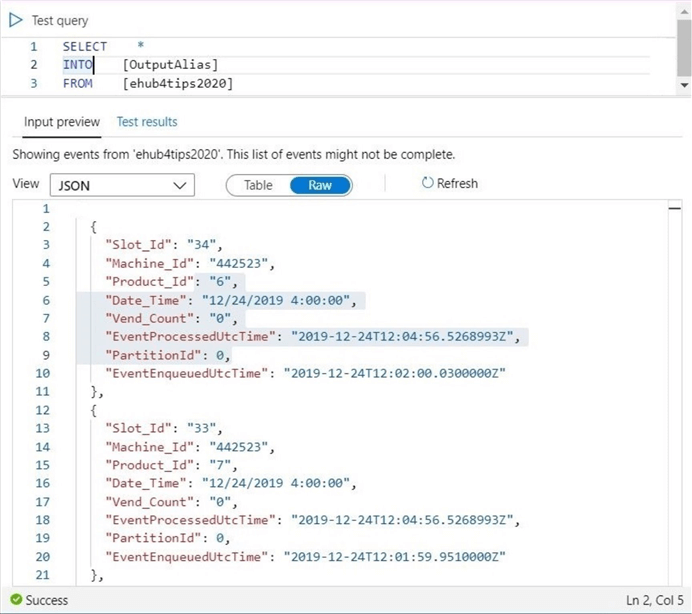

The screen shot below shows is an input preview of the data in the process data blade of the Azure Portal. If we truly liked the query results, we could convert it into an Azure Analytics job with a click of a button.

In a nutshell, we have solved the business problem at hand. I am including two versions of the final program. The large number of messages script might be useful in testing scalability of the event hub. Right now, we are sending messages for every slot in both programs. In the future, we might want to use Azure Stream Analytics to just capture the events that have meaning-full data.

Summary

The hardest part about simulating telemetry for an Azure Event Hub is the creation of data sets (objects) that match the business problem. Once these objects are defined, the transition from theoretical to practical application is quite straight forward.

In our case, we used a listing of educational institutions to outline two tables: client – list of current clients and machine – list of vending machines. The assumption of the model was to assign one machine per client with a fixed vending product grid. Right now, the client table is not needed to generate telemetry messages. However, it will become important in the future when generating reports. Other tables that might be needed are: products – a list of vending products, machine slots – mapping of product to slot for machine x, location – mapping of machine x to physical location y and vending events– the collection of telemetry messages over time. In short, this is a sketch of a database schema for this business problem that we will create in the future.

If you have not encountered an IOT business problem at work yet, reading this series of article will give you an idea of what to expect. One of the problems with capturing every telemetry event in an Azure SQL database is a dramatic increase in the amount data. If we save 45 records to the database for every machine for every hour, we end up with 1.34 B rows in one year. If we save one record to the database for same time period, we end up with 29.78 M rows. The actual number of records to store lies somewhere in between these two numbers. Thus, skipping empty records might be a good idea to save space.

The real time processing of data has been studied by computer scientists for a while. The Lambda architecture has two consumption lanes: speed and batch. Our current architectural diagram falls into the first lane. We will explore different ways to process and save telemetry events in future articles.

Next time, I will be talking about how to deploy an event hub using PowerShell cmdlets. In this future article, I will talk about the differences between IOT Hub and Event Hub service.

Next Steps

- Deploy and configure the event hub service with PowerShell.

- Trigger an Azure Function to store event hub messages.

- Filtering or aggregating event hub data with Stream Analytics.

- Capturing events to Azure Storage for batch processing

- Use Azure Data Factory to batch load telemetry data

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips