By: Jeffrey Yao | Comments | Related: More > Database Administration

Problem

SQL Server DBAs usually need to respond to lots of requests every day, from simple requests such as "can you please run a query in prod and send me the result in a spreadsheet?" to "can you please deploy these scripts (maybe hundreds) to PreProd?". All these requests are not technically challenging, but they can be very disruptive to a DBA’s work. These requests take a lot of time in communication, especially if the original request has some typo that needs to be clarified. So, generally speaking this type of DBA service is a low value-add, yet unavoidable. So, is there way we can solve this issue?

Solution

In my practice, I find the best way to address these random and repeatable requests, like running queries, deploying scripts or refreshing environments is to provide a self-service mechanism that can serve the requests automatically with no or minimized DBA involvement.

In this tip, we will design a simple self-service framework, with which a requestor can do a deployment of SQL scripts to non-production environments, like to a QA or pre-prod environment instead of involvement of a DBA.

For any self-service task, we first need to design a communication protocol so a requested service can be executed precisely as expected. This means the protocol should provide sufficient information about who asks what to do, where to do and when to do. The information can also serve as an auditing item for approval and archive.

The protocol design can be very flexible, from content to format. For example, we can have as many items as needed in a protocol as long as they are warranted by the business. The protocol format can be JSON, XML, csv, ini file, or even a database table.

But before designing any protocol, we need to consider our business requirements. So here is my requirement statement.

When users (developers / QAs) need to ask DBA to deploy SQL scripts to QA/Pre-Prod environments, they should be able to do so via a self-service process by providing information about the script location and the target environment where these scripts should be run.

Protocol Design

We want to have a simple protocol as much as possible to reduce misunderstanding, so we come up with a configuration file that has four items.

# comment line with # at the beginning # requestor needs to fulfill info on the right side of = [ScriptPath]=[path to scripts to be deployed] [requestor]=[email of the requestor] [Target]=[environment code, or sql instance name] [Tag]=[an arbitrary name to this deployment request]

The requestor needs to fulfill the mandatory information on the right side of each item, and each item is self-evident in meaning.

Self-Service Work flow

The work flow basically contains three steps:

1. A requestor prepares deployment scripts and put them under a folder in a shared drive.

2. A requestor fills out the configuration protocol file named DeployConfig.txt file under a specific drive, \\shared_drive\request\DeployConfig.txt.

3. The DeployConfig.txt file will be processed by a PowerShell (PS) script from a scheduled SQL Server Agent Job, and then deploy scripts to the designated environment.

The key component is step 3, i.e. the process logic of the PS. Here is a simplified logic in step 3:

(1). Check if the DeployConfig.txt file is filled with the needed information by a user, if not, no process. Otherwise, move to the next step.

(2). Check if the deployment has been deployed before by checking [Tag] value, if deployed, send out email to the requestor and no process. Otherwise, move to the next step.

(3). Check whether [ScriptPath] is valid and also whether [TargetServer] is accessible, if not, send out email to the requestor to fix the configuration ini file. Otherwise, move on to the next step.

(4). Reiterate files in the [ScriptPath] and sort the scripts by their full path names in alphanumeric order and deploy to [TargetServer] and log the deployment to [DeploymentHistory] table. If there is an error, stop and send an email to the requestor with the error message. Otherwise, finish and send email to report the deployment completion.

Detailed Code Design of the Process

In the code below, I will make a few assumptions to simplify the code so we can focus more on the process itself.

Here are three assumptions:

1. [TargetServer] will have a SQL Server instance name instead of an environment code. If we want to put an environment code there, like [App_QA1], then we have to do a code resolution, which can be via a lookup in a table or hard-code it in the PS script.

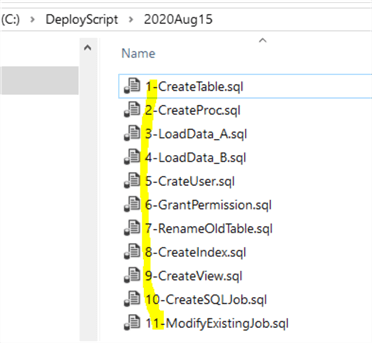

2. If multiple scripts are be deployed, if there is a requirement of deployment sequence, i.e. which script should be run before another, the sequence should be reflected in the script full path name, an example is shown below.

3. There is no parallel deployment design in the solution, i.e. at any time, the DeployConfig.txt can only have one set of deployment information and the next requestor needs to wait until the previous request is processed. After each process, the file will be reset with default values, and not until then, can the next requestor fulfill the file with new values. Otherwise, the previous request will be lost.

Source Code for the Deployment Engine

The following is the PowerShell source code, which does three functions in simple terms:

(1) Read deployment configuration file with basic verification test, and save the configuration into [DeploymentConfig] table.

(2) Deploy the script and log the execution in both a log file and [DeploymentHistory] table.

(3) Notify the requestor (and cc DBA team if needed) of the deployment result.

We assume in our central server, we have a database named [DBA], and we will then create two tables to log the deployment request and the deployment execution history.

USE [DBA]

GO

drop table if exists [dbo].[DeploymentHistory], [dbo].[DeploymentConfig]; --SQL 2016 and later syntax

go

-- log the details of each Deployment request

CREATE TABLE [dbo].[DeploymentConfig](

[InitFile] [varchar](2000) NOT NULL,

[InitFileDate] [datetime] NOT NULL,

[TargetServer] [varchar](100) NOT NULL,

[ScriptFolder] [varchar](600) NULL,

[Requestor] [varchar](100) NOT NULL,

[Tag] [varchar](150) not NULL,

[id] [int] IDENTITY(1,1) primary key,

)

GO

-- log the deployment result of each script

CREATE TABLE [dbo].[DeploymentHistory](

[FullPath] [varchar](800) NULL,

[Tag] [varchar](150) NULL,

[TargetServer] [varchar](60) NULL,

[Status] [varchar](20) NULL,

[Message] [varchar](6000) NULL,

[DeployDate] [datetime] NULL,

[ConfigID] [int] NULL,

[id] [int] IDENTITY primary key

);

GO

ALTER TABLE [dbo].[DeploymentHistory] ADD DEFAULT ('not started') FOR [Status];

ALTER TABLE [dbo].[DeploymentHistory] ADD DEFAULT (getdate()) FOR [DeployDate];

ALTER TABLE [dbo].[DeploymentHistory] WITH NOCHECK ADD FOREIGN KEY([ConfigID])

REFERENCES [dbo].[DeploymentConfig] ([id]);

GO

The following is the PowerShell code that will do the real deployment work. You may need to replace some information with your own values, such as $log_file, $central_svr and $IniFile, also @mycompany.com needs to be changed.

#Function: this is to deploy scripts based on one config file #$log_file, $central_svr and $IniFile, also @mycompany.com need to be changed import-module sqlserver; $log_file ="d:\dba\log_$((get-date).ToString('yyyyMMdd_HHmm')).txt" # deployment log $central_svr = 'DBACentralServer'; # from this central server, the deployment will be made $IniFile = '\\<sharefolder>\DeployConfig.txt'; #requestor prepares this deployment request file $deploy_status = 'SUCCEEDED'; if (test-path -Path $log_file) { remove-item -Path $log_file; } if (-not (test-path -Path $IniFile)) { throw "[$IniFile] does not exist, please double check";} $dtbl = new-object System.Data.DataTable; $dc = new-object System.Data.DataColumn ('InitFile', [System.string]); $dtbl.Columns.add($dc); $dc = new-object System.Data.DataColumn ('InitFileDate', [System.DateTime]); $dtbl.Columns.add($dc); $dc = new-object System.Data.DataColumn ('TargetServer', [System.string]) $dtbl.Columns.add($dc); $dc = new-object System.Data.DataColumn ('ScriptPath', [System.string]) $dtbl.Columns.add($dc); $dc = new-object System.Data.DataColumn ('Requestor', [System.string]); $dtbl.Columns.add($dc); $dc = new-object System.Data.DataColumn ('Tag', [System.string]); $dtbl.Columns.add($dc); #read the DeployConfig.txt file $ini = @{}; switch -Regex -file $IniFile { "\[(.+)\]=\[(.+)\]" { $name, $value = $matches[1..2]; $ini[$name]=$value; } } # make it mandatory the [Requestor] has a valid email address if ($ini.Requestor -notmatch '@mycompany.com' -or $ini.tag -eq '' -or $ini.ScriptPath -eq 'the sql script path in a shared folder' -or $ini.TargetServer -eq 'sql server name') { write-host 'nothing to run'; return; } $c = get-content -Path $IniFile | ? { $_ -match '^\s?\[.+'}; # excluding comment and only extracting the key data $FileDate = dir -Path $IniFile | Select-Object -Property LastWriteTime; $c | out-file -FilePath $log_file -Encoding ascii -Append; "`r`n`r`nImplementation starts at$(get-date)..`r`n `r`nDeployment Starts" | out-file -FilePath $log_file -Encoding ascii -Append; # when an error is encountered, the script will stop, you can rerun the whole script, and it will skip the failed script and contine to next one [string]$requestor = $ini.Requestor [string]$ScriptPath = $ini.ScriptPath; [string]$tag = $ini.Tag; $target_server = $ini.TargetServer; if (test-path $ScriptPath) { $script_folder = $ScriptPath } else { invoke-sqlcmd -ServerInstance . -Database msdb -Query "exec dbo.sp_send_dbmail @recipients='$($requestor)',@copy_recipients='[email protected]', @subject='Cannot find Script folder', @Body='[$($ScriptPath)] is invalid'"; throw "Invalid Folder [$ScriptPath]" } #check whether the $Target_server is correct try { invoke-sqlcmd -ServerInstance $target_server -Database master -query "select getdate()" | Out-Null } catch { invoke-sqlcmd -ServerInstance . -Database msdb -Query "exec dbo.sp_send_dbmail @recipients='$($requestor)',@copy_recipients='[email protected]', @subject='Cannot connect to$($target_server)', @Body='The server$($target_server) cannot be accessed'"; throw "The server$target_server cannot be accessed"; } #check whether the $Tag is already there, if so, we need to change it $qry = @" if exists (select * from dbo.DeploymentHistory where Tag='$($Tag)') select isTagInside = 1; else select isTagInside = 0; "@ $result = invoke-sqlcmd -ServerInstance $central_svr -Database dba -Query $qry -OutputAs DataRows; if ($result.isTagInside -eq 0) { #we save the DeploymentConfig.txt $r = $dtbl.NewRow(); $r.InitFile = $c -join "`r`n"; $r.InitFileDate = $FileDate.LastWriteTime ; $r.TargetServer = $ini.TargetServer; $r.ScriptPath = $ini.ScriptPath; $r.Requestor = $ini.Requestor; $r.tag = $ini.tag; $dtbl.Rows.Add($r); Write-SqlTableData -ServerInstance $central_svr -DatabaseName dba -SchemaName dbo -TableName DeploymentConfig -InputData $dtbl; } [string]$deployment_name = $ini.tag; # choose your own name if needed,my pattern is: Application_Date_VerNum $continue = 'N'; #adding another layer of protection in case the prod server is used... IF ($target_server -in ('prod01', 'prod02', 'prod03')) #adding your prod list here { $continue = 'n' ; throw "we do not allow to deploy to production [$target_server] at this time"; } else { $continue ='y';} if ($continue -ne 'y') { throw "you are going to deploy to prod, not continuing";} $dt = New-Object System.Data.DataTable; $col = New-Object System.Data.DataColumn('FullPath', [system.string]); $dt.Columns.Add($col); $col = New-Object System.Data.DataColumn('Tag', [system.string]); $dt.Columns.add($col); $col = New-Object System.Data.DataColumn('TargetServer', [system.string]); $dt.Columns.add($col); dir *.sql -Path $Scriptpath -Recurse -File | Sort-Object { [regex]::replace($_.FullName, '\d+', { $args[0].value.padleft(10, '0')})} | ForEach-Object { $r = $dt.NewRow(); $r.FullPath = $_.FullName; $r.Tag = $deployment_name; $r.TargetServer = $target_server; $dt.Rows.add($r); } #check whether we need to populate the table again $qry = @" if exists (select * from dbo.DeploymentHistory where Tag='$($deployment_name)') select isRunBefore = 1; else select isRunBefore = 0; "@ $result = invoke-sqlcmd -ServerInstance $central_svr -Database dba -Query $qry -OutputAs DataRows; if ($result.isRunBefore -eq 0) # the deployment never run before { Write-SqlTableData -ServerInstance $central_svr -DatabaseName dba -SchemaName dbo -TableName DeploymentHistory -InputData $dt; } $qry = @" select FullPath, id, TargetServer, Tag, [Status] from dbo.DeploymentHistory where Tag = '$($deployment_name)' and [Status] = 'not started' --'success' order by id asc "@; $rslt = Invoke-Sqlcmd -ServerInstance $central_svr -Database dba -Query $qry -OutputAs DataRows; foreach ($dr in $rslt) { try { write-host "Processing [$($dr.FullPath)] with id=$($dr.id)" -ForegroundColor Green; "Processing [$($dr.FullPath)] with id=$($dr.id)" | Out-File -FilePath $log_file -Encoding ascii -Append [string]$pth = $dr.FullPath; invoke-sqlcmd -ServerInstance $dr.TargetServer -Database master -InputFile $dr.FullPath -QueryTimeout 7200 -ConnectionTimeout 7200 -ea Stop ; [string]$myqry = "update dbo.DeploymentHistory set [Status]='Success' where id =$($dr.id);" invoke-sqlcmd -ServerInstance $central_svr -Database dba -Query $myqry; } catch { $e = $error[0].Exception.Message; $e = $e.replace("'", '"'); # [string]$myqry = "update dbo.DeploymentHistory set [Status]='Error', [Message]='$($e)' where id = $($dr.id);" if ($e.Length -gt 6000) { $e = $e.Substring(1, 6000);} [string]$myqry ="update dbo.DeploymentHistory set [Status]='Error', [Message]='" + $e + "' where id =$($dr.id);" write-host "Error found on id=$($dr.id) with message =`r`n [$e]"; "`r`nError occurred `r`n`r`n$($e)"| out-file -filepath $log_file -Encoding ascii -Append; $deploy_status = 'FAILED'; invoke-sqlcmd -ServerInstance $central_svr -Database dba -Query $myqry -QueryTimeout 7200 -ConnectionTimeout 7200; write-host "error found, plese get out of here"; break; } } $qry = @" set nocount on; UPDATE h set ConfigID = c.id from dba.dbo.DeploymentHistory h inner join dba.dbo.DeploymentConfig c on h.Tag = c.Tag where h.ConfigID is null; exec msdb.dbo.sp_send_dbmail @recipients ='$($requestor)',@copy_recipients='[email protected]', @subject='Deployment of [$($deployment_name)] on [$Target_server]$($deploy_status)', @body='please verify and let DBA know if there is any issue', @file_attachments='$($log_file)'; "@; invoke-sqlcmd -ServerInstance $central_svr -Database DBA -Query $qry; #move the origina DeployConfig to an archive folder for later verification Move-Item -Path $IniFile -Destination "\\<share_folder>\DeployConfig_Archive\DeployConfig_$((get-date).tostring('yyyyMMdd_hhmm')).txt"; $txt = @" #config file, please fill the [] on the equation right side WITHOUT any quotes #[TargetServer] should be the sql instance name, such as [MyQA_1], [PreProd_2] etc #[ScriptPath] is where you put the sql script, such as [\\<share_folder>\Deployment\2020Aug20_QA\] #[Requestor] should be the requestor's email, so once the deployment is done, an notification email will be sent out #[Tag] this is more like a deployment name, can be anything to indicate the deployment, for example [QA_V1.2.3] [TargetServer]=[sql server name] [ScriptPath]=[the sql script path in a shared folder] [Requestor]=[your email addr] [Tag]=[] "@; #re-generate the DeployConfig.txt $txt | out-file -FilePath $IniFile -Encoding ascii;

Fig-1 PowerShell Code of Deployment Engine

Demo Of the Process

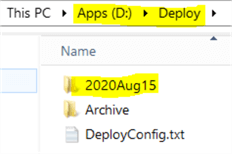

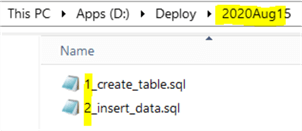

I have prepared the following folder structure, where I put the deployment scripts in d:\Deploy\2020Aug25\ and D:\Deploy\Archive\ will be used to save DeployConfig.txt after each deployment. In my case, I create two scripts and put them in the following folder D:\Deploy\2020Aug15\.

The code of the two scripts is as follows:

-- 1_create_table.sql -- this is sql server 2016 sp2 CU14 use MSSQLTips; go drop table if exists dbo.tblTest; go create table dbo.tblTest(id int identity, a varchar(120)); go -- 2_insert_data.sql -- just insert a few dummy records use MSSQLTips; go insert into dbo.tblTest(a) values('hello world 1'),('hello world 2') ,('hello world 3'),('hello world 4'); go

Now we need to prepare the DeployConfig.txt file, the original, unfilled file is like the following:

#config file, please fill the [] on the equation right side WITHOUT any quotes #[TargetServer] should be the sql instance name, such as [MyQA_1], [PreProd_2] etc #[ScriptPath] is where you put the sql script, such as [\\<share_folder>\Deployment\2020Aug15_QA\] #[Requestor] should be the requestor's email, so once the deployment is done, an notification email will be sent out #[Tag] this is more like a deployment name, can be anything to indicate the deployment, for example [QA_V1.2.3] [TargetServer]=[sql server name] [ScriptPath]=[the sql script path in a shared folder] [Requestor]=[your email addr] [Tag]=[]

And I will fill the last four lines and it becomes like the following:

#config file, please fill the [] on the equation right side WITHOUT any quotes #[TargetServer] should be the sql instance name, such as [MyQA_1], [PreProd_2] etc #[ScriptPath] is where you put the sql script, such as [\\<share_folder>\Deployment\2020Aug15_QA\] #[Requestor] should be the requestor's email, so once the deployment is done, an notification email will be sent out #[Tag] this is more like a deployment name, can be anything to indicate the deployment, for example [QA_V1.2.3] [TargetServer]=[localhost\sql2016] [ScriptPath]=[d:\deploy\2020Aug15\] [Requestor]=[[email protected]] [Tag]=[MyFirstTest]

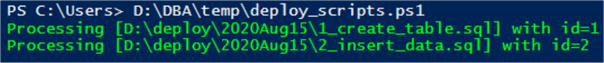

Now if we start PowerShell ISE and load the PS script in Fig-1 and run the script, we can see the following output:

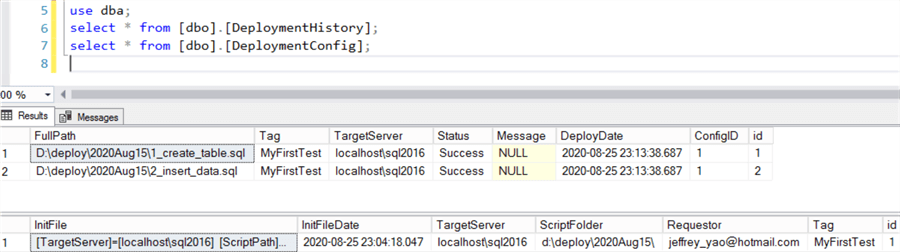

And if we check back the deployment request and history tables, we will see the following:

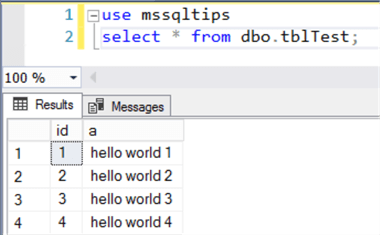

If we check the deployment result, we will see the table is created with four records inserted.

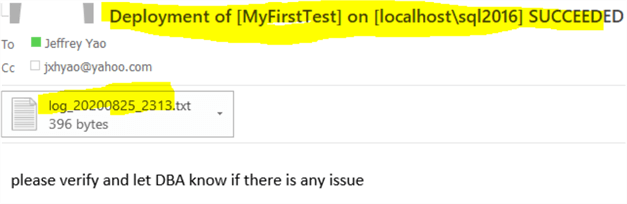

Also, I will receive a notification email with execution details.

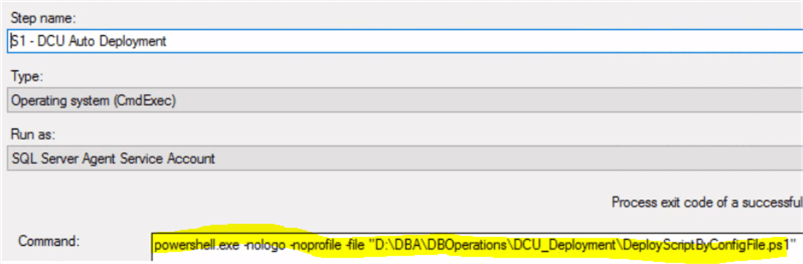

In my work environment, I created a SQL Server Agent Job in the central server and in the job, one job step is to call this PS script. The job is scheduled to run every 5 minutes.

Summary

In this tip, I have presented one self-service framework for users who may request a SQL Server DBA’s help. This self-service framework design is straight forward, it initiates from the requestor by filling the designated configuration file and then will be executed by the deployment engine and the deployment will be communicated via email.

When designing such framework, we need to consider fully the communication protocol used in the framework, for example, in one of my other versions, I added [DeploymentTime] into the protocol because some users want deployment to be done after a specific time (like after business hours), and with that information added, the deployment engine needs to have the logic implemented to handle it.

The self-service topic in DBA domain can be huge, anything like refreshing data of non-prod databases (or tables) with data from production, creating a new user with the same privileges as an existing user, or disabling some job schedule so the job(s) will not run during a maintenance window, etc., can all be good candidates for self-service targets.

Next Steps

Try to consider whether in your company, you can automate some of your DBA services by creating a self-service framework which will not only reduce your burden as a DBA, but also give your customers more control in their own task, i.e. less dependent on availability of a DBA, it is a win-win to all stakeholders in boosting productivity.

The following articles may provide some interesting information that have techniques similar to this tip:

- Monitoring SQL Server with PowerShell Core Object Setup

- A Self-Serve System that Allows Developers to Refresh Databases (Part 1)

- Automate Registering and Maintaining Servers in SQL Server Management Studio (SSMS)

- SSIS Package for Automated Code Deployment of SQL Server Database Changes

About the author

Jeffrey Yao is a senior SQL Server consultant, striving to automate DBA work as much as possible to have more time for family, life and more automation.

Jeffrey Yao is a senior SQL Server consultant, striving to automate DBA work as much as possible to have more time for family, life and more automation.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips