By: Jeffrey Yao | Comments (1) | Related: > SQL Server Agent

Problem

In an environment with hundreds of SQL Server instances managed by a team of DBAs, it is difficult to know exactly which SQL Server Agent Job on which SQL Server instance gets changed during a deployment by one of the DBAs. This gets even more complex when some SQL jobs are handled by other teams. The problem of this potential "not-knowing-what-happened" can easily make an on-call DBA frustrated when an issue occurs, and the DBA needs to figure out what happened and why it happened.

Querying against the msdb database may tell me when a job is changed, but it will be hard to tell me what changed, so is there a way to audit SQL Server Agent job changes?

Solution

Auditing SQL job changes is important as a DBA, MSSQLTips has a good tip about how to do the auditing using system tables/views inside msdb, please see the reference in [Next Steps] section.

This solution uses a different approach, basically it does the following two steps

- Script a job to a SQL file

- Get the hash value of the SQL file, and compare it to its previous value, if the hash value is the same, no change, otherwise, the job is changed.

The advantage of this method is that we can easily use the script files to recreate the pre-modification job and compare it to the existing job (i.e. post-modification) and see the change.

Script out SQL Job for Hash Value

To script out a SQL job, we need to use the SQL Server Management Object job class and the easiest way is to use PowerShell to do the work.

In this tip, we will use a well-known open-source SQL Server PowerShell module, i.e. dbatools, which contains the cmdlet we need to script out a SQL job so we do not need to reinvent the wheel (but if you want, is not that difficult to re-create the process).

I created a SQL job named "Test Job" with just one step, containing only one SQL statement as shown below, with a schedule of a daily run at 8am.

print 'hello world';

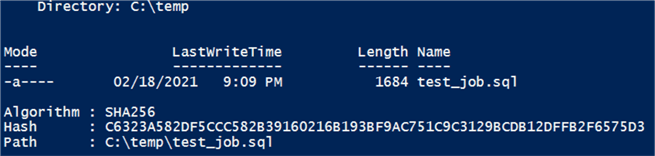

Assuming dbatools is installed, we can script out this job and then get the hash value of the file via get-filehash, as follows:

#script out a sql job import-module dbatools; $pth = 'c:\temp\test_job.sql'; if (test-path -Path $pth) { del -Path $pth;} Get-DbaAgentJob -SqlInstance localhost\sql2019 -Job 'test job' | Export-DbaScript -FilePath "c:\temp\test_job.sql" -NoPrefix -Encoding ASCII; #get the filehash value Get-FileHash -Path $pth;

When running the script, I will see the result displayed as follows:

Real World Solution

Now we have explained the mechanism behind the solution, so we will create a workable solution to monitor multiple jobs on a server. Here are the key design points.

1. We first create three tables for the solution to store the collected information:

use MSSQLTips; go -- the tables are used for monitoring job change --1. JobList_Current contains info for current jobs drop table if exists dbo.JobList_Current; create table dbo.JobList_Current( SQLInstance varchar(128) , JobName varchar(128) , FilePath varchar(256) -- sql script path , [Hash] varchar(128) , LogDate datetime default getdate() , id int identity primary key ); --2. JobList_History contains info for job history drop table if exists dbo.JobList_History; create table dbo.JobList_History( SQLInstance varchar(128) , JobName varchar(128) , FilePath varchar(256) -- sql script path , [Hash] varchar(128) , [Status] char(1) -- U = Updated, D = Deleted , LogDate datetime default getdate() , id int identity primary key ); -- 3. JobList_Staging is used for loading job info, it will be truncated frequently drop table if exists dbo.JobList_Staging; create table dbo.JobList_Staging( SQLInstance varchar(128) , JobName varchar(128) , FilePath varchar(256) -- sql script path , [Hash] varchar(128) , [Status] char(1)-- N = New, U = Updated );

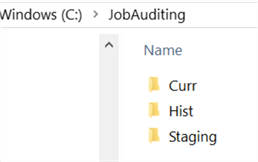

2. We will create three folders on a local drive: Staging, Curr and Hist.

#create necessary folders #change the path according to your own environment $main_folder = 'c:\JobAuditing'; $stg_path = 'c:\JobAuditing\Staging'; $curr_path = 'c:\JobAuditing\Curr'; $hist_path = 'c:\JobAuditing\Hist'; if (-not (test-path -Path $main_folder -PathType Container)) { New-Item -Path $main_folder -ItemType container; } if (-not (test-path -Path $stg_path -PathType Container)) { New-Item -Path $stg_path -ItemType container; } if (-not (test-path -Path $curr_path -PathType Container)) { New-Item -Path $curr_path -ItemType container; } if (-not (test-path -Path $hist_path -PathType Container)) { New-Item -Path $hist_path -ItemType Container; }

3. Here is the general work flow logic, for detailed implementation, please check the PS script below.

- Delete any files in $stg_path (i.e. c:\JobAuditing\Staging\), truncate table dbo.JobList_Staging table

- Loop through each job and script it out to a file in $stg_path, and get the hash value of the file

- Save the job name, file path and hash value into dbo.JobList_Staging table.

- Compare hash values in dbo.JobList_Staging with those in dbo.JobList_Current to decide whether this is a new job, an updated job or a deleted job.

- If updated / deleted, move the records from dbo.JobList_Current to dbo.JobList_History, and then move the job from dbo.JobList_Staging to dbo.JobList_Current, finally send out a report to DBA team for any job changes.

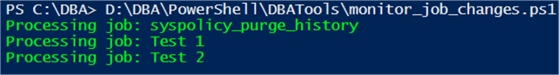

4. The following PowerShell script implements the work flow mentioned above.

#function: monitor job change and email for any changesimport-module dbatools, sqlserver; #[boolean] $debug = 1; # 0 = $false; , $stg_path = 'c:\JobAuditing\Staging'; # better not have \ at the end $curr_path = 'C:\JobAuditing\Curr'; $hist_path = 'C:\JobAuditing\Hist'; $central_svr = 'localhost\sql2017'; # central sql instance to host monitored job info $central_db = 'MSSQLTips'; $dba_email = '[email protected]'; # replace it with your own email $monitored_server = 'localhost\sql2017'; # does NOT need to be the same as $central_svr if (test-path -Path "$stg_path\*.sql") { remove-item -Path "$stg_path\*.sql";} $tbl = new-object "system.data.DataTable"; $col = new-object System.Data.DataColumn ("SQLInstance", [system.string]); $tbl.Columns.Add($col); $col = new-object System.Data.DataColumn ("JobName", [system.string]); $tbl.Columns.Add($col); $col = new-object System.Data.DataColumn ("FilePath", [system.string]); $tbl.Columns.Add($col); $col = new-object System.Data.DataColumn ("Hash", [system.string]); $tbl.Columns.Add($col); #clean up joblist_staging table invoke-sqlcmd -ServerInstance $central_svr -Database $central_db -Query "truncate table dbo.JobList_Staging;" -QueryTimeout 120 -ConnectionTimeout 120; try { $jobs = get-dbaAgentJob -SqlInstance $monitored_server; foreach ($j in $jobs) { write-host "Processing job:$($j.Name)" -ForegroundColor Green; #replace special chars inside [\/":'] to blank or $ $fpath="$stg_path\$(($j.sqlInstance).replace('\', '$'))_$(($j.name-replace '[:"'']', '')-replace '[\\\/]','$').sql" $j | Export-DbaScript -NoPrefix -Encoding ASCII -FilePath $fpath | Out-Null; $hs=Get-FileHash -Path $fpath; $r = $tbl.NewRow(); $r.SQLInstance =$j.SqlInstance; $r.JobName = $j.Name; $r.FilePath = $fpath; $r.Hash = $hs.Hash; $tbl.Rows.add($r); } #foreach $j Write-SqlTableData -ServerInstance $central_svr -DatabaseName $central_db -SchemaName dbo -TableName JobList_Staging -InputData $tbl; $tbl.Clear(); $svr = $j.sqlinstance; $JobChange_qry = @" -- check whether there is new job if exists ( select Jobname from dbo.JobList_staging where SQLinstance= '$($svr)' except select Jobname from dbo.JobList_Current where SQLInstance = '$($svr)') begin ; with c as ( select Jobname from dbo.JobList_staging where SQLInstance= '$($svr)' except select Jobname from dbo.JobList_Current where SQLInstance = '$($svr)' ) update s set [Status]='N' -- new from dbo.JobList_Staging s inner join c on s.SQLInstance = '$($svr)' and s.JobName = c.Jobname; insert into dbo.JobList_Current (SQLInstance, JobName, [FilePath], [Hash]) select s.SQLInstance, s.JobName, replace(s.[FilePath], '$($stg_path)', '$($curr_path)'), s.[Hash] from dbo.JobList_Staging s where s.SQLInstance = '$($svr)' and s.[Status]='N'; end -- check whether there is any job changed since last check if exists (select s.Jobname from dbo.JobList_staging s inner join dbo.JobList_Current l on s.SQLInstance = l.SQLInstance and l.SQLInstance = '$($svr)' and s.JobName = l.JobName and (s.[Status] <> 'N' or s.[Status] is null) and s.[Hash] <> l.[hash]) begin update s set [Status]='U' -- update from dbo.JobList_Staging s inner join dbo.JobList_Current l on s.SQLInstance = l.SQLInstance and s.JobName = l.Jobname and s.SQLInstance = '$($svr)' and s.[Hash] <> l.[hash]; update l set l.[Hash]=s.[Hash] , l.[LogDate]=getdate() output deleted.[SQLInstance], deleted.[JobName], deleted.[Hash], 'U' into dbo.JobList_History ( [SQLInstance], [JobName],[Hash],[Status]) from dbo.JobList_Current l inner join dbo.JobList_Staging s on s.SQLInstance = l.SQLInstance and s.JobName = l.Jobname and s.SQLInstance = '$($svr)' and s.[Status]='U'; end -- check whether there is a job deleted since last check if exists ( select l.SQLInstance, l.Jobname from dbo.JobList_Current l where SQLInstance = '$($svr)' except select s.SQLInstance, s.JobName from dbo.JobList_Staging s where s.SQLInstance = '$($svr)' ) begin ; with c as ( select l.SQLInstance, l.JobName from dbo.JobList_Current l where SQLInstance = '$($svr)' except select s.SQLInstance, s.JobName from dbo.JobList_Staging s where s.SQLInstance = '$($svr)' ) delete dbo.JobList_Current output deleted.[SQLInstance], deleted.[JobName], deleted.[Hash], 'D' into dbo.JobList_History ([SQLInstance], [JobName], [Hash], [Status]) from dbo.JobList_Current l inner join c on c.SQLInstance = l.SQLInstance and c.Jobname = l.Jobname; end -- we need to report the change select SQLInstance, JobName, [Status]='Deleted' from dbo.JobList_History where [Status] ='D' and LogDate > dateadd(minute, -1, getdate()) -- to avoid reporting previous records and SQLInstance = '$($svr)' union all select SQLInstance, JobName, [Status]= case [status] when 'U' then 'Updated' else 'New' end from dbo.JobList_Staging where [status] in ('N', 'U') and SQLInstance = '$($svr)'; "@; $rslt = Invoke-Sqlcmd -ServerInstance $central_svr -Database $central_db -Query $JobChange_qry -OutputAs DataRows; if ($rslt -ne $null) { foreach ($r in $rslt) { $fp="$($svr.replace('\', '$'))_$(($r.jobname-replace '[:"'']', '')-replace '[\\\/]','$').sql"; switch ($r.Status) { 'New' { Move-Item -path "$stg_path\$fp" -Destination $curr_path -Force; break;} 'Updated' { # we need to find the original file and rename it according to its original write time $fn = dir -path "$curr_path\$fp"; $fpath= $fn.fullname.substring(0, $fn.fullname.Length-4) + '_' + ($fn.LastWriteTime).tostring('yyyyMMdd') +'_original.sql'; $fname = Split-Path $fpath -leaf; Move-Item -Path $($fn.FullName) -Destination $hist_path\$fname; Copy-Item -Path "$stg_path\$fp" -Destination $curr_path -Force; break; } # 'update' #we need to mark the file that is deleted 'Deleted' { $fn = dir -path "$curr_path\$fp"; $fpath= $fn.fullname.substring(0, $fn.fullname.Length-4) + '_' + ($fn.LastWriteTime).tostring('yyyyMMdd') +'_deleted.sql'; $fname = Split-Path $fpath -leaf; Move-Item -Path $($fn.FullName) -Destination $hist_path\$fname -force; break; }# 'deleted' } #switch } #foreach ($r in $rslt) } #if $rslt -ne null }#try catch { write-error $_; }#catch $qry = @" drop table if exists #tmp; declare @minutes int = -1; select SQLInstance, JobName, [Status]='Deleted' , [OriginalFile]= '$($hist_path)' + replace(SQLInstance,'\', '$') + '_' +replace(Jobname, ':', '') + '_' + format(logdate, 'yyyyMMdd') +'_deleted.sql' into #tmp from dbo.JobList_History where [Status] = 'D' and LogDate > dateadd(minute, @minutes, getdate()) union all select SQLInstance, Jobname, [Status]= 'Update' , [FilePath]= '$($hist_path)' + replace(SQLInstance,'\', '$') + '_' +replace(Jobname, ':', '') + '_' + format(getdate(), 'yyyyMMdd') +'_original.sql' from dbo.JobList_Staging where [status] in ('U') union all -- new jobs for non-first loading select SQLInstance, Jobname, [Status]= 'New' , [FilePath]= replace(FilePath, '$($stg_path)','$($curr_path)') from dbo.JobList_Staging where [status] in ('N ') and SQLInstance in (select distinct SQLInstance from dbo.JobList_Current where LogDate < dateadd(minute, @minutes, getdate())); DECLARE @xml NVARCHAR(MAX); DECLARE @body NVARCHAR(MAX); SET @xml = CAST(( select SQLInstance as 'td','', Jobname as 'td','', Status as 'td','', OriginalFile as 'td' from #tmp t FOR XML PATH('tr'), ELEMENTS ) AS NVARCHAR(MAX)) SET @body ='<html><body><H3>Job Change Notification</H3> <table border = 1> <tr><th> SQLInstance </th> <th> Job </th> <th> Status </th> <th> FilePath </th></tr>' SET @body = @body + @xml +'</table></body></html>'; if exists (select * from #tmp) begin EXEC msdb.dbo.sp_send_dbmail @profile_name = 'SQLAgent', @body = @body, @body_format ='HTML', @recipients = '$($dba_email)', @subject = 'Job Change History on$((Get-Date).toString('yyyy-MMM-dd')) ' ; end "@; invoke-sqlcmd -ServerInstance $central_svr -Database $central_db -Query $qry; ;

Demonstrate PowerShell Script

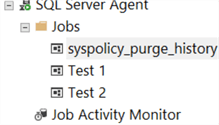

First create two SQL jobs [Test 1] and [Test 2] as follows:

If we run the PowerShell script in PS ISE for the first time, it will return nothing, but the previously empty tables and folders will be populated as follows:

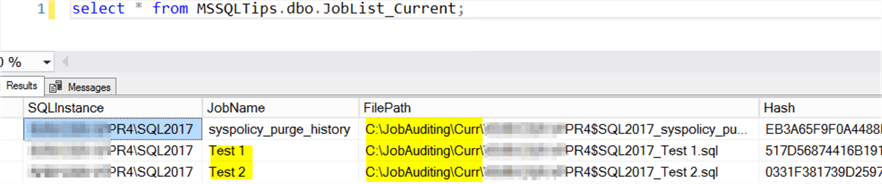

We can see that the dbo.JobList_Current table is populated with 3 records:

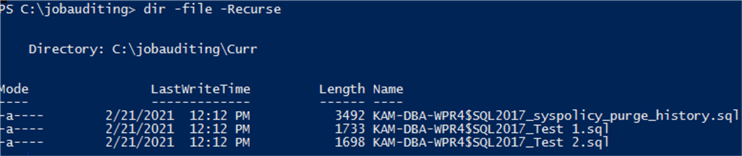

We can also list all files under c:\JobAuditing\ folder:

We will now do the following job updates

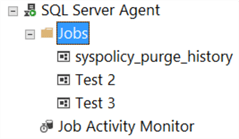

- Delete job [Test 1]

- Update job [Test 2] (such as adding a new step or update a job step)

- Create a new job [Test 3]

After the changes, we have the following job list in my SQL Server instance:

When I rerun the PS script, I expect the following:

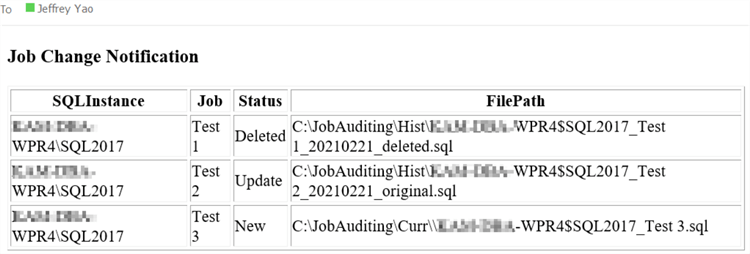

- I will receive an alert email reporting the changes

- The dbo.JobList_History table should be populated with two records, one for the deleted job [Test 1] and another for the updated job [Test 2]

- There will be files in folder C:\JobAuditing\Hist\ for the deleted and the updated jobs

So I just rerun the PowerShell script inside PS ISE, and here are results:

- I have received an alert email like the following:

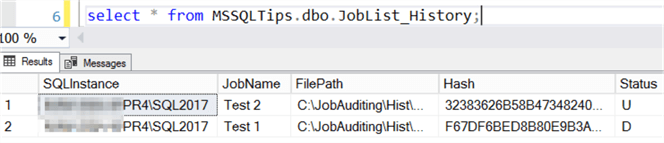

- The dbo.JobList_History is populated with two records, one for the deleted job and another for the updated job:

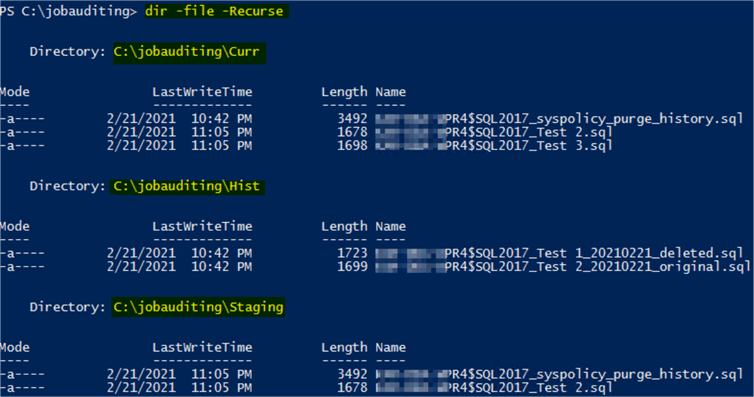

- Files in C:\JobAuditing\ subfolders are exactly as expected:

- In C:\JobAuditing\Curr\ this contains the scripts for the current SQL jobs

- In C:\JobAudting\Hist\ this contains the scripts for the previous scripts that can be used to re-generate the deleted job and get us back to the job before the update.

- In C:\JobAuditing\Staging\ this contains the scripts for the jobs that are not changed. (If changed, the scripts will be moved to the C:\JobAuditing\Curr\ folder)

Summary

In this tip, we introduced a framework to audit SQL Server job changes, all changes will be logged and reported.

In a complex environment where there are multiple DBAs or multiple teams that can change SQL Server jobs, this framework will help everyone know about the changes in a centralized way and another benefit is that if you made a mistake by updating or deleting a job, you can always restore the job by going to the C:\JobAuditing\Hist\ folder to find the script.

Of course, this auditing framework is not real-time monitoring, i.e. like a trigger where any changes can be immediately captured.

From a big picture perspective, this framework can be easily modified to audit any type of SQL Server object that can be scripted out, for example, views, stored procedures, user permissions, etc.

Next Steps

You can modify the PowerShell script in the tip to monitor multiple SQL Server instances and then schedule this monitoring to run at fixed time. I schedule mine to run twice a day in my environment. So, if someone makes a change, such as disabling / modifying a job, I can always catch it.

On MSSQLTips.com there are many tips about SQL Server Agent job monitoring and each comes from a different angle and for different purpose. Read more about them here:

- Monitor SQL Server Agent Job Activity with Power BI

- SQL Server Agent Jobs Monitoring Script

- SQL Server Agent Job Monitoring with PowerShell

- Automate Custom SQL Server Agent Job Monitoring with Email Notification

- Auditing for New SQL Server Agent Jobs

About the author

Jeffrey Yao is a senior SQL Server consultant, striving to automate DBA work as much as possible to have more time for family, life and more automation.

Jeffrey Yao is a senior SQL Server consultant, striving to automate DBA work as much as possible to have more time for family, life and more automation.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips