By: Hadi Fadlallah | Updated: 2022-04-19 | Comments | Related: > Integration Services Performance

Problem

While only claiming to stage the data, many SSIS developers report poor performance of inserting data from flat files into SQL Server. In this article we will explain what data staging is and available data staging methods in SSIS.

Solution

This article explains data staging and staging methods in SSIS, and how to choose the appropriate approach while designing your data pipeline.

What is data staging?

Data staging is the process of storing data in an intermediate place between the source and destination repository. Staging is mainly done to minimize the in-memory load and to split the data processing operations into different phases, especially when the data volume is reasonable, the flow of data is high, and the type of data is diverse.

Data staging allows developers to apply data transformations, clean data, or even integrate the data using the staging database engine rather than in-memory.

Staging data using the Data Flow task

The most popular method to insert data into a database using SSIS is using a Data Flow task. As mentioned in the SSIS documentation, "the Data Flow task encapsulates the data flow engine that moves data between sources and destinations and lets the user transform, clean, and modify data as it is moved."

Using a Data Flow task, mainly two components are used to load data into SQL Server: the OLE DB destination and the SQL Server destination. You can read more about both components here SQL Server Destination vs. OLE DB Destination.

Is a Data Flow task always a good choice to stage data?

Data is loaded into the ETL server memory and transferred as batches within the data pipeline using a data flow task. This means that if no transformation is needed using data pipelines is useless. Even if optimizing the data flow components may increase the performance, it will not guarantee high performance, especially when dealing with huge flat files. Still, using a data flow task may be your best choice when transformations are required outside of the database engine or when reading the data source requires implementing a complex logic such as reading data from a web service.

Staging data using the Bulk Insert task

As described in the official documentation, "the Bulk Insert task provides an efficient way to copy large amounts of data into a SQL Server table or view". It is one of the most efficient alternatives of staging data from flat files into SQL databases, especially when implementing an ELT paradigm. The SSIS Bulk Insert task is invoked from the T-SQL Bulk insert command.

There are several considerations for using the SSIS Bulk Insert task:

- The source file should be a text file; if you are dealing with different file formats, you should convert it into text before using the Bulk Insert Task.

- The Bulk Insert destination should be an SQL Server database (as mentioned above, this task is invoked from the T-SQL Bulk Insert method).

- Only the sysadmin fixed server role members can execute a Bulk Insert task.

- The Bulk Insert task only appends the data in the destination table.

- Views can be used as a destination only if they are designed using a single table (views used for security purposes or to minimize the number of available columns in a table).

- Implementing the same logic of a Bulk Insert Task using a T-SQL command in an Execute SQL Task is possible.

- Data transformation cannot be applied while inserting data using the Bulk Insert task.

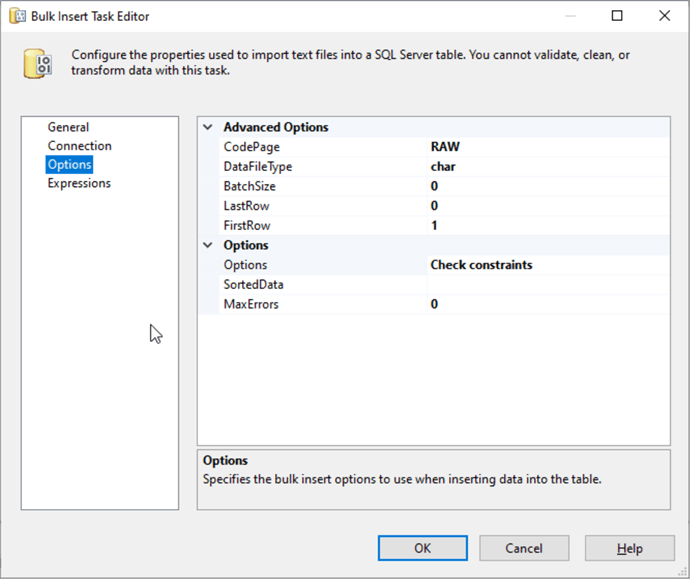

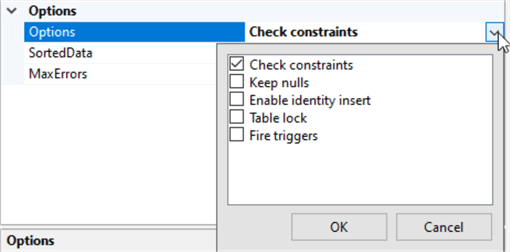

If you are familiar with the T-SQL Bulk Insert command, you will notice that all available configurations are found in the SSIS Bulk Insert task editor, as shown in the code and screenshots below.

T-SQL Bulk Insert syntax:

BULK INSERT

{ database_name.schema_name.table_or_view_name | schema_name.table_or_view_name | table_or_view_name }

FROM 'data_file'

[ WITH

(

[ [ , ] BATCHSIZE = batch_size ]

[ [ , ] CHECK_CONSTRAINTS ]

[ [ , ] CODEPAGE = { 'ACP' | 'OEM' | 'RAW' | 'code_page' } ]

[ [ , ] DATAFILETYPE =

{ 'char' | 'native'| 'widechar' | 'widenative' } ]

[ [ , ] DATA_SOURCE = 'data_source_name' ]

[ [ , ] ERRORFILE = 'file_name' ]

[ [ , ] ERRORFILE_DATA_SOURCE = 'errorfile_data_source_name' ]

[ [ , ] FIRSTROW = first_row ]

[ [ , ] FIRE_TRIGGERS ]

[ [ , ] FORMATFILE_DATA_SOURCE = 'data_source_name' ]

[ [ , ] KEEPIDENTITY ]

[ [ , ] KEEPNULLS ]

[ [ , ] KILOBYTES_PER_BATCH = kilobytes_per_batch ]

[ [ , ] LASTROW = last_row ]

[ [ , ] MAXERRORS = max_errors ]

[ [ , ] ORDER ( { column [ ASC | DESC ] } [ ,...n ] ) ]

[ [ , ] ROWS_PER_BATCH = rows_per_batch ]

[ [ , ] ROWTERMINATOR = 'row_terminator' ]

[ [ , ] TABLOCK ]

-- input file format options

[ [ , ] FORMAT = 'CSV' ]

[ [ , ] FIELDQUOTE = 'quote_characters']

[ [ , ] FORMATFILE = 'format_file_path' ]

[ [ , ] FIELDTERMINATOR = 'field_terminator' ]

[ [ , ] ROWTERMINATOR = 'row_terminator' ]

)]

SSIS Bulk Insert task editor:

Why could it guarantee higher performance than the Data Flow task?

The BULK INSERT command is the in-process method for bringing data from a text file into SQL Server. Because it runs in process with sqlservr.exe, it is a very fast way to load data files into SQL Server.

Is bulk insert always a good option?

I met many people during my career who always pushed the developers to use the Bulk Insert method since it guarantees a higher performance while inserting data. But in several cases, this is not recommended, especially when data transformations are not allowed or do not have sufficient resources in the database engine. In that case, using a data flow task is more appropriate since it will transform the data before loading it into the staging table.

The Data loading performance guide

In 2009, Microsoft published a technical article entitled "The data loading performance guide". This article compared the different data loading methods from a performance perspective.

They mentioned that using the Bulk Insert command usually is the fastest loading data into SQL Server. But in the following cases, a Data Flow task may guarantee a higher performance:

- Using an OLE DB destination component: If you run DTEXEC on a different server than SQL Server, Integration Services can deliver very high speed by offloading data conversions from the Database Engine.

- Using a SQL Server destination component: SQL Server Destination will use more CPU cycles than BULK INSERT, limiting max speeds. But because it offloads the data conversion, the throughput of a single stream insert may be faster than BULK INSERT.

Summary

To summarize, there is no ideal solution for all data staging operations. Instead of just thinking about the data staging phase, you should study the whole ETL process requirements, the available resources, and the working environment.

Noting that we didn't do any experiments with these different techniques since all needed information can be found in the previously mentioned data loading performance guide.

Next Steps

- After choosing the right strategy to stage data using SSIS, you should read more about configuring the Bulk Insert task and how to optimize its performance.

- If you decide to go with the Data Flow task, you should read more about configuring the OLE DB destination.

- It would be best if you read more about the SSIS package performance optimization methods: SQL Server Integration Services SSIS Performance Tuning Techniques (mssqltips.com)

About the author

Hadi Fadlallah is an SQL Server professional with more than 10 years of experience. His main expertise is in data integration. He's one of the top ETL and SQL Server Integration Services contributors at Stackoverflow.com. He holds a Ph.D. in data science focusing on context-aware big data quality and two master's degrees in computer science and business computing.

Hadi Fadlallah is an SQL Server professional with more than 10 years of experience. His main expertise is in data integration. He's one of the top ETL and SQL Server Integration Services contributors at Stackoverflow.com. He holds a Ph.D. in data science focusing on context-aware big data quality and two master's degrees in computer science and business computing. This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-04-19