By: Mohammed Moinudheen | Comments (4) | Related: 1 | 2 | 3 | 4 | 5 | > Replication

Problem

I have noticed that available disk space on my SQL Server is getting low and I see a number of files getting generated in the replication snapshot folder. Because of these files there is a space issue on my server. Why are these files here and what can I do to resolve this problem?

Solution

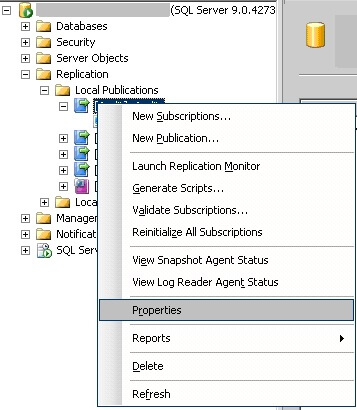

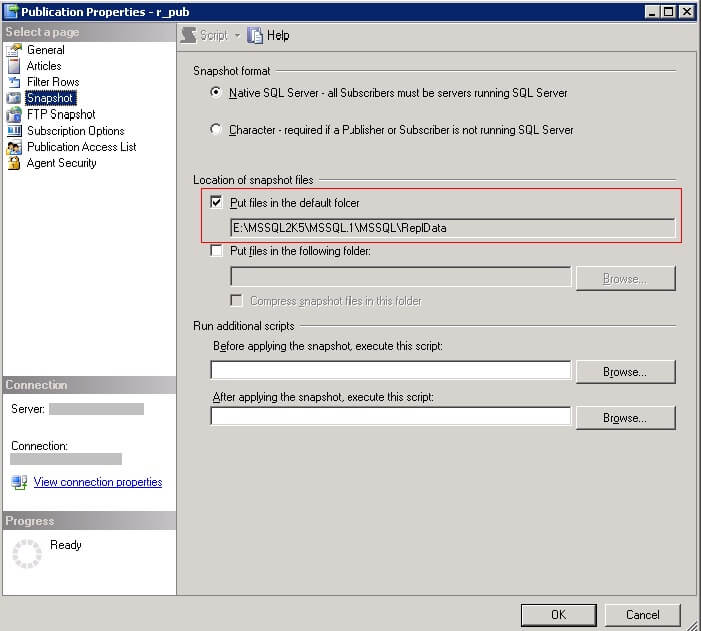

The snapshot agent job is used by default in all types of replication in SQL Server. It is used for generating an initial snapshot of all the articles that need to be replicated to subscribers. Refer to the screenshots below to check the location of the snapshot folder.

On clicking 'Properties', we can see the snapshot folder location.

Based on the schedule of the snapshot agent job, there will be snapshot agent files getting generated in the snapshot folder. There will be folders with names like 20110420061766 getting created. This depicts the time the snapshot agent was run. There are usually files similar to below that are generated in the snapshot folder for each article that is published.

Directory of E:\MSSQL2K5\MSSQL.1\MSSQL\repldata\unc\ServerName_R_PUB_R_PUB\20110421070872

NULL

04/21/2011 07:08 AM.

04/21/2011 07:08 AM..

04/21/2011 07:08 AM 55 emp_2.bcp

04/21/2011 07:08 AM 172 emp_2.idx

04/21/2011 07:08 AM 468 emp_2.pre

04/21/2011 07:08 AM 622 emp_2.sch

Some of these files are related to copying the schema of the article, indexes, constraints and the actual data in the BCP files through which the snapshot gets generated.

In this example, only one table 'emp' was selected to be published and we could well imagine the amount of files that would get generated if we are taking a snapshot of a large number of articles. Depending on the type of replication we chose; snapshot, transactional or merge, a new snapshot will get generated every time the snapshot agent job runs. Considering this, if a snapshot agent job is scheduled to run often on a server, then we would see similar set of files as shown above getting generated per published article every time the job runs. This would lead to considerable space constraints on the server if left unchecked.

Cleanup Jobs

In order to counter this, we need to be aware of the important replication maintenance jobs that gets created when replication is configured on the server. The maintenance job Distribution clean up: distribution is of considerable significance as it removes replicated transactions from the distribution database. This clean up job also deletes any old snapshot files that were created by the snapshot agent job keeping only the latest files that get generated the last time this job ran. So, we need to ensure that this job and the Agent history clean up: distribution job is scheduled to run periodically to mitigate space issues that arises due to the replication configuration.

Compression

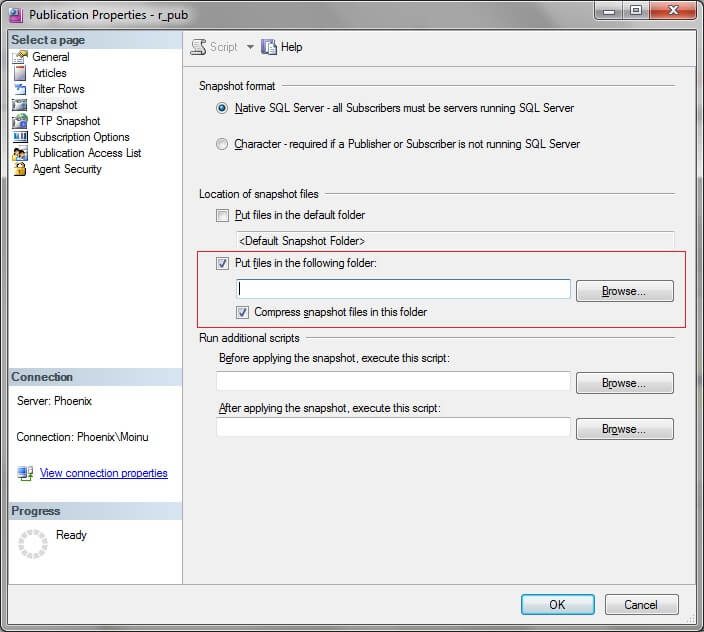

Another option that is available to minimize the space requirement for snapshot files is the 'Compress snapshot files in this folder option'. Similar to previous screenshots, go to Replication-> Local Publication-> Right click and go to 'Properties' and click on 'Snapshot' tab as shown below.

As compression is not supported in the default snapshot folder, we need to enter the relevant path in the 'Put files in the following folder' option. Any UNC network share location or a relevant path with the necessary read/write privileges to the snapshot, distributor or merge agent could be provided in the 'Put files in the following folder' option. Enabling the 'Compress snapshot files in this folder' option would ensure that the snapshot files get compressed when they are generated. This would help in reducing the size of the files, but the trade off would be more CPU utilization with the additional overhead of uncompressing the files at the subscriber when applying the snapshot. This option must be thoroughly rehearsed based on your specific requirement before implementing in a production environment to verify the pros and cons of this feature.

Run Additional Scripts

Another notable feature that is available is the 'Run additional scripts' option as seen in the above screenshot. In this, we could run the scripts on the subscriber before and after applying the snapshot. Basically, we need to provide the location of the script in the 'Before applying the snapshot, execute this script' option. Similarly, provide the valid path in the 'After applying the snapshot' option. This would be handy especially in cases where the publisher, distributor and subscriber are on the same server. In this scenario, we could do a validation check of space availability on the server before and after applying the snapshot which would be a useful alerting mechanism in case of known space issues on the replication server. In the case where there were dedicated servers for publisher, distributor or subscriber, this option could be used based on the requirement to run relevant checks on any of the servers.

Time and Space Requirements

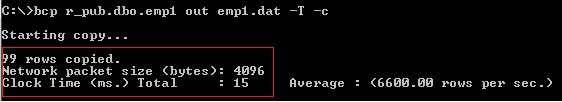

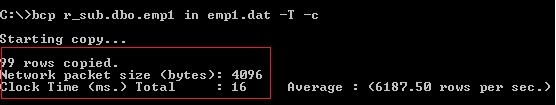

When dealing with large databases, it is recommended to verify the time and space requirement of generating a snapshot by performing a sample BCP of selected articles as the snapshots are created using the BCP utility. This gives better judgment for scheduling the snapshot agent appropriately as it would be quite resource intensive on the server while the snapshot agent is running. Estimating the space requirement of the snapshot agent job depends on the size and the number of articles that are chosen to be published. Below are some sample BCP commands for reference.

Run this command in command prompt if logged on using windows authentication. You would need to substitute the name of the table that you are testing with. This command will copy rows from a table to a data file.

The output for the command is as below. From this, we could get an estimate of the time taken using BCP and also the size of the BCP file.

It may not be easy to get an exact value of the space that would be needed by just issuing a BCP command. This is because additional files get generated by running the snapshot agent job directly depending on the items that are chosen for publication like indexes, constraints, stored procedures, etc. But running the sample BCP commands and maintaining a baseline when dealing with huge publisher databases would be useful especially if we face issues during initial replication set up. Similar to the above BCP command, we could copy the contents from the data file to the table in subscriber database using below command.

Additional Note

Moreover, based on the kind of replication we configure; snapshot or transactional, the snapshot agent would be acquiring relevant locks on the replication tables. Keeping this in mind, it is advisable to schedule the snapshot agent job to run during non-business hours so as to enhance performance in replication.

Next Steps

- Ensure to check the schedule of your snapshot agent job while configuring SQL Server replication

- Ensure to check that the replication maintenance jobs are scheduled appropriately and are enabled

- Ensure to configure alerting mechanism for the replication maintenance jobs in case it fails

- Check out these other replication tips

About the author

Mohammed Moinudheen is a SQL Server DBA with over 6 years experience managing production databases for a few Fortune 500 companies.

Mohammed Moinudheen is a SQL Server DBA with over 6 years experience managing production databases for a few Fortune 500 companies.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips