By: Pavel Pawlowski

Problem

You may need to execute a child SQL Server Integration Services (SSIS) package many times, for example when processing a large number of flat files from a directory. You can execute the child package sequentially using a Foreach Loop Container, but this can take a very long time. In such scenarios, running SSIS packages in parallel comes to mind, but how can this be done? Check out this tip to learn more.

Solution

There are third party SQL Server Integration Services (SSIS) components which allow parallel execution of child packages. Unfortunately, these components do not always work as expected and, of course, most of them are not free.

Therefore, in this tip we will demonstrate an alternate approach for executing child SSIS packages in parallel without the use of third party components. We will be using SSIS 2012 in Project deployment mode for this tip.

Retrieving a List of Files to Process in SQL Server Integration Services

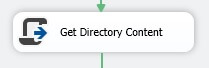

We will use a Script Task to get a list of files to process and store this data in a Queue collection which will be further stored in the [User::DirectoryContent] variable of type Object.

For the purpose of passing the folder location for the files, we use a project parameter [$Project::SourceFolder].

The file retrieval is very easy using the GetFiles method of the Directory class which returns the list as a string array which we pass to the Queue<string> constructor.

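```csharp
string sourceFolder = Dts.Variables["$Project::SourceFolder"].Value.ToString();
string[] files = System.IO.Directory.GetFiles(sourceFolder);
System.Collections.Generic.Queue<string> filesQueue = new System.Collections.Generic.Queue<string>(files);
Dts.Variables["User::DirectoryContent"].Value = filesQueue;
```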

Preparing the For Loop Container for Parallel Processing in SSIS

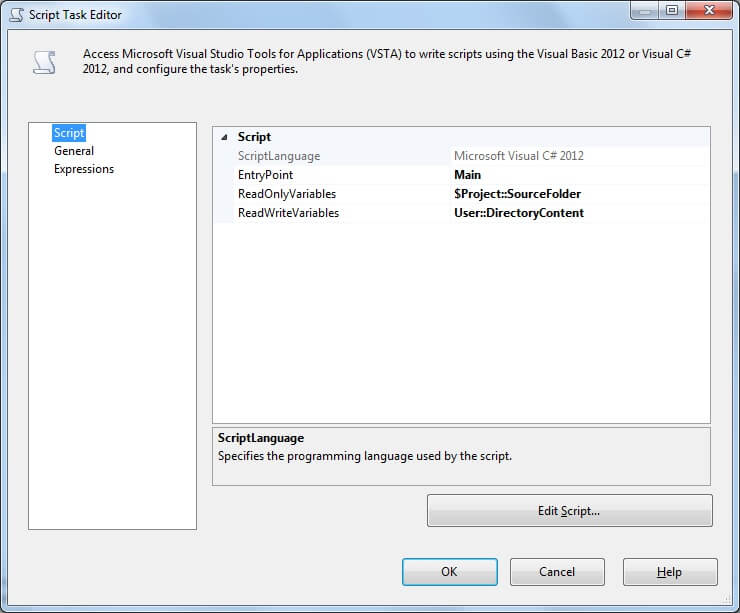

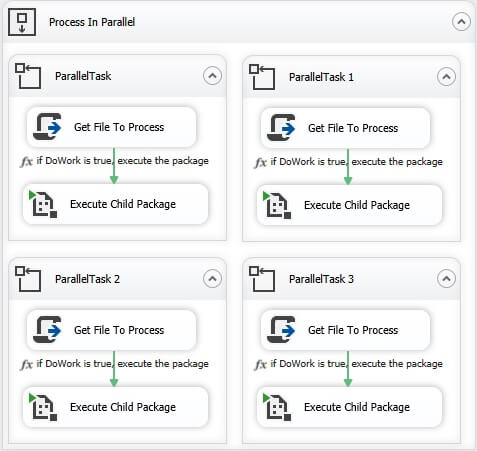

Once the file list is stored in a queue, we can start taking files out of the queue and processing them. The parallel processing will be done by multiple For Loop Containers: we design one and copy it as many times as the maximum level of parallelization we want to achieve. We also set up a project level parameter [$Project::MaximumParallelism] that lets us limit the level of parallelization at runtime whenever necessary. The basic design of the For Loop Container is shown in the image below.

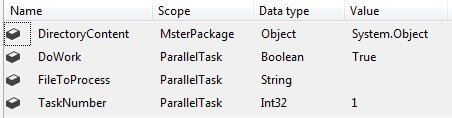

After adding the For Loop Container to the design surface, we have to define a set of variables within the Scope of the ParallelTask.

The [User::DoWork] variable will control when the ParallelTask For Loop Container should exit its loop cycle. Its initial value has to be set to True to allow the code to enter the loop.

The second variable [User::FileToProcess] will store the file to be processed by the child package.

The third variable [User::TaskNumber] identifies the individual instance of the For Loop Container. Its value starts at 1 and increases by one for each copy of the For Loop Container. This allows us to control the maximum parallelism on the fly by using the project level parameter [$Project::MaximumParallelism] mentioned above. For example, we may have 16 copies of the For Loop Container for a maximum of 16 parallel tasks, but the MaximumParallelism parameter allows us to limit the number of parallel tasks at the time of package execution.

Once the variables are set up, we can set up the ParallelTask For Loop Container.

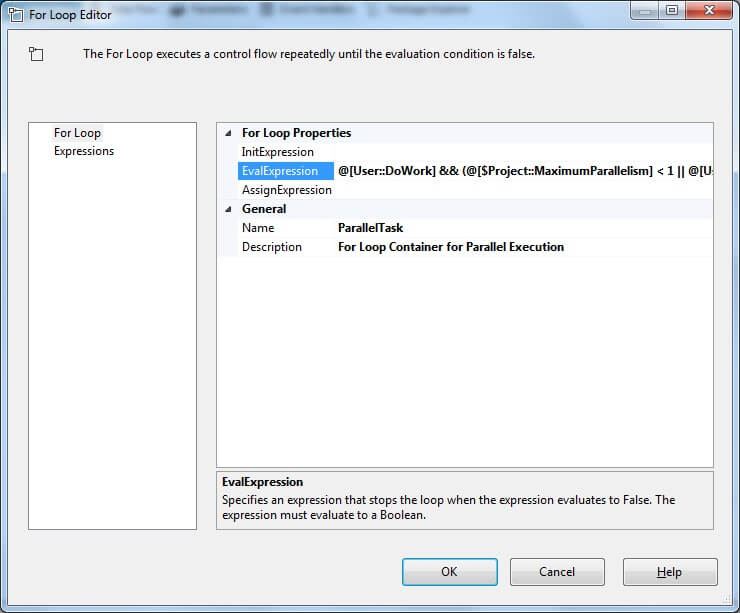

The only thing we need to set up in the properties of the For Loop Container is the EvalExpression. This tells the For Loop Container when to end, or even whether the loop should start at all, based on the [$Project::MaximumParallelism] project parameter setting. We set the EvalExpression as below.

The above expression means that when [User::DoWork] is false (there is nothing left to process), the For Loop will end. The For Loop will also not even start if [User::TaskNumber] is greater than [$Project::MaximumParallelism]. If [$Project::MaximumParallelism] is less than one, there is no restriction on the number of parallel tasks and all copies of the For Loop Container will run.
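The expression itself is not reproduced in the text. One SSIS expression that would be consistent with the description (an assumption, not necessarily the author's exact wording) is `@[User::DoWork] && (@[$Project::MaximumParallelism] < 1 || @[User::TaskNumber] <= @[$Project::MaximumParallelism])`. The same logic can be sketched as a small C# predicate; the class and method names here are hypothetical and exist only for illustration.

```csharp
using System;

public static class ParallelTaskGate
{
    // Hypothetical helper mirroring the described EvalExpression:
    // keep looping only while there is work AND this copy's number
    // is within the configured limit. A limit below 1 means "no restriction".
    public static bool ShouldLoop(bool doWork, int taskNumber, int maximumParallelism)
    {
        bool withinLimit = maximumParallelism < 1 || taskNumber <= maximumParallelism;
        return doWork && withinLimit;
    }
}
```

With 16 copies and MaximumParallelism set to 4, copies 1 through 4 would pass this check and copies 5 through 16 would never enter their loop.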

Of course, we also need to take into account the number of logical processors and the MaxConcurrentExecutables property at the package level; we cannot achieve greater parallelism than this property allows. Its default value of -1 means the number of logical processors plus two.
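As a rough illustration of how these limits interact, the following sketch estimates an upper bound on the number of loop copies that can run at once. The helper name and its parameters are hypothetical, and the estimate ignores any other executables in the package that also count against MaxConcurrentExecutables.

```csharp
using System;

public static class ParallelismEstimate
{
    // Hypothetical helper: upper bound on loop copies running concurrently.
    public static int EffectiveParallelism(int loopCopies, int maximumParallelism,
                                           int maxConcurrentExecutables, int logicalProcessors)
    {
        // Copies allowed by the project parameter (values below 1 mean no restriction).
        int allowedCopies = maximumParallelism < 1
            ? loopCopies
            : Math.Min(loopCopies, maximumParallelism);

        // -1 is the package default: number of logical processors plus two.
        int engineLimit = maxConcurrentExecutables == -1
            ? logicalProcessors + 2
            : maxConcurrentExecutables;

        return Math.Min(allowedCopies, engineLimit);
    }
}
```

For example, 16 copies with no MaximumParallelism restriction on an 8-core machine with the default property value would be capped at 10 by this estimate.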

Retrieving the File to be Processed by Child SSIS Package

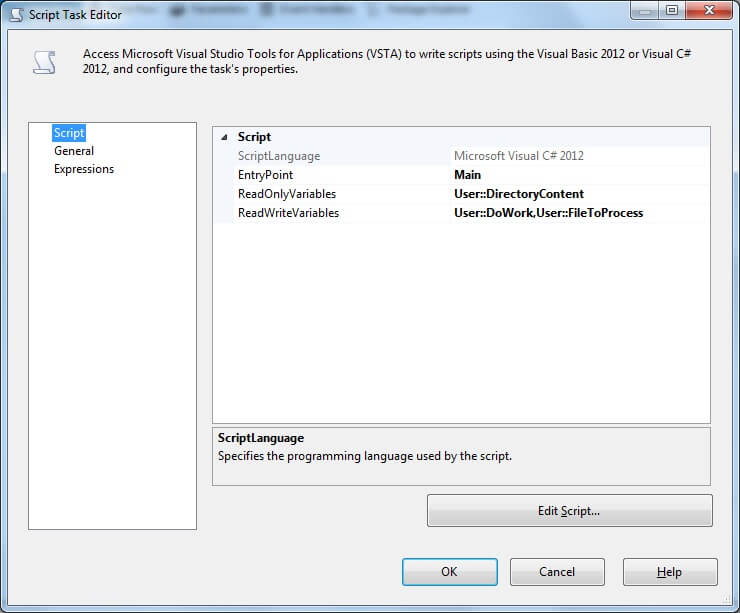

The next step after configuring the ParallelTask For Loop Container is retrieval of the file to be processed in a particular loop. This is the most critical part as one file can be retrieved by only one For Loop Container and the retrieval cannot collide - it must be thread safe as each For Loop Container can be operated by a different thread. For this purpose we will use the Script Task again. We will pass the [User::DirectoryContent] as a ReadOnly variable and [User::DoWork] as well as [User::FileToProcess] as ReadWrite variables.

The [User::DirectoryContent] variable contains the Queue with filenames, but because the variables are passed as an object type into the script, we will cast it back to the Queue<string> type.

Once we have the queue with files, we need to do two things. First, we have to determine if there are items in the queue or not. If yes, then we de-queue a file to be processed and set the [User::DoWork] variable to true. In the other case we will set the [User::DoWork] variable to false which will cause the For Loop Container to end.

To ensure that the de-queuing is done by a single thread at a time, and that no other thread de-queues an item while we are checking the number of items in the queue, we have to execute the check and the de-queue inside a critical section. We create the critical section using the C# lock statement, which requires a reference-type object shared among all threads. Here the queue instance itself serves that purpose, because every copy of the For Loop Container reads the same [User::DirectoryContent] variable. The lock statement ensures that no thread enters the critical section while another thread is inside it. This gives us a safe way to check the number of items in the queue as well as proper de-queuing.

```csharp
// Get the queue with files to process
System.Collections.Generic.Queue<string> filesQueue = (System.Collections.Generic.Queue<string>)Dts.Variables["User::DirectoryContent"].Value;

/*
 * For the dequeuing to be thread safe, we need to ensure that multiple concurrent
 * threads will not dequeue at the same time.
 * We ensure this by dequeuing files inside a critical section using the lock
 * statement.
 */
lock (filesQueue)
{
    // If the number of files in the queue is greater than 0, dequeue a file for processing
    // and set DoWork to true. Otherwise set DoWork to false as there is nothing to process.
    if (filesQueue.Count > 0)
    {
        Dts.Variables["User::FileToProcess"].Value = filesQueue.Dequeue();
        Dts.Variables["User::DoWork"].Value = true;
    }
    else
    {
        Dts.Variables["User::DoWork"].Value = false;
    }
}
```
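To see the effect of the critical section outside SSIS, the same check-then-dequeue pattern can be exercised from several plain threads. This is only a sketch: the class and method names are hypothetical, and ordinary threads stand in for the copies of the For Loop Container.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

public static class DequeueDemo
{
    // Same check-then-dequeue pattern as the Script Task, as a plain method.
    // Returns false when the queue is empty (the DoWork = false case).
    public static bool TryGetNextFile(Queue<string> filesQueue, out string file)
    {
        lock (filesQueue)
        {
            if (filesQueue.Count > 0)
            {
                file = filesQueue.Dequeue();
                return true;
            }
            file = null;
            return false;
        }
    }

    // Drains the queue from several threads and returns every file that was handed out.
    public static List<string> DrainInParallel(Queue<string> filesQueue, int threadCount)
    {
        var handedOut = new List<string>();
        var workers = new List<Thread>();
        for (int i = 0; i < threadCount; i++)
        {
            var worker = new Thread(() =>
            {
                string file;
                while (TryGetNextFile(filesQueue, out file))
                {
                    // The result list is shared too, so it needs its own lock.
                    lock (handedOut) { handedOut.Add(file); }
                }
            });
            workers.Add(worker);
            worker.Start();
        }
        foreach (var thread in workers) thread.Join();
        return handedOut;
    }
}
```

If the lock in TryGetNextFile were removed, two threads could both see Count > 0 for the last item and one of the Dequeue calls would fail, or the queue's internal state could be corrupted. On .NET 4.0 and later, ConcurrentQueue<T> with its TryDequeue method would be an alternative that avoids the explicit lock; that is a different technique from the one used in this tip.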

Executing the Child SQL Server Integration Services Package

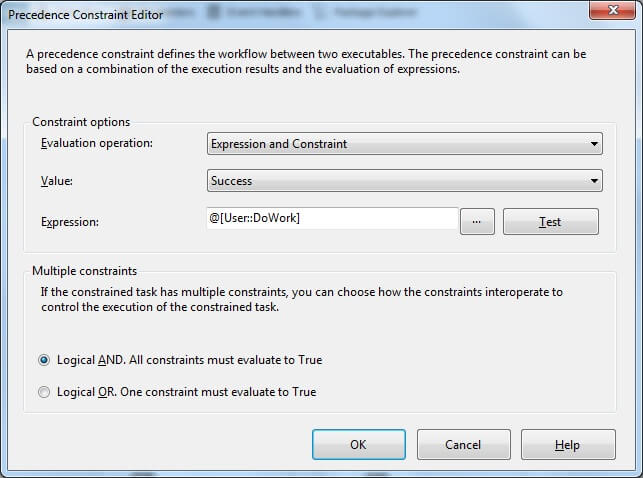

After a file is retrieved from the queue, we can execute the child package and pass the file to it. To ensure that the child package is executed only if something was actually retrieved from the queue, we use a precedence constraint with an expression.

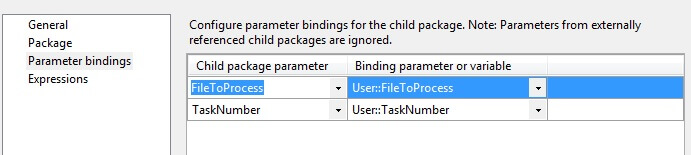

If the [User::DoWork] variable is true, then we execute the package. In our sample solution we will pass the [User::FileToProcess] as well as the [User::TaskNumber] into the child package. The TaskNumber will allow us to see which For Loop Container the child package was executed by.

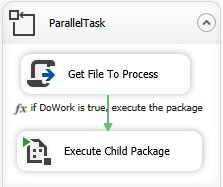

Parallel Execution of the Child SSIS Packages

After successful setup of the ParallelTask For Loop Container and all the child tasks, we can parallelize it by simply copying it inside a single Sequence Container. Again, as mentioned above, the maximum degree of parallelism is defined by the number of copies of this For Loop Container. Also we set an appropriate value of the [User::TaskNumber] in each copy of the container by increasing it by one. The image below shows a sample with four copies of the For Loop Container, with a maximum degree of parallelism of 4.

Testing the Parallel Execution

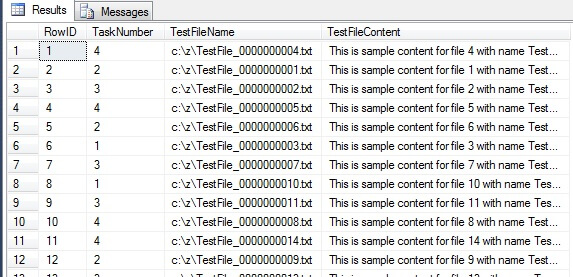

To test the parallel execution, we generated a reasonable number of files inside the directory specified by the [$Project::SourceFolder] parameter. The parallel loops retrieve paths to the files one by one and pass them to the child package. The child package reads the content of each file and stores it, together with the file name and the TaskNumber, in a table in a SQL Server database. For details of the sample child package, please refer to the sample project in the Next Steps section. Once we execute the master package, we will receive a result similar to the one in the image below.

As we can see, some loops were executed multiple times before others. Also, because the RowID in the sample table is an identity column, we can see the order of execution.
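The overall behaviour of the master package can also be mimicked in a small console sketch, which may help when reasoning about the design without deploying anything. Everything here is an assumption made for illustration: the class name is hypothetical, .NET tasks stand in for the copies of the For Loop Container, and a log entry stands in for the Execute Package Task.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class MasterPackageSketch
{
    // Runs 'loopCopies' workers and returns (file, taskNumber) pairs in the order they were logged.
    public static List<KeyValuePair<string, int>> Run(IEnumerable<string> files, int loopCopies, int maximumParallelism)
    {
        var queue = new Queue<string>(files);
        var log = new List<KeyValuePair<string, int>>();
        var workers = new List<Task>();

        for (int n = 1; n <= loopCopies; n++)
        {
            int taskNumber = n; // each copy gets its own number, starting at 1
            workers.Add(Task.Run(() =>
            {
                bool doWork = true;
                // Same gate as the EvalExpression described earlier in the tip.
                while (doWork && (maximumParallelism < 1 || taskNumber <= maximumParallelism))
                {
                    string file = null;
                    lock (queue)
                    {
                        doWork = queue.Count > 0;
                        if (doWork) file = queue.Dequeue();
                    }
                    if (doWork)
                    {
                        // Stand-in for the child package: record who processed what.
                        lock (log) { log.Add(new KeyValuePair<string, int>(file, taskNumber)); }
                    }
                }
            }));
        }

        Task.WaitAll(workers.ToArray());
        return log;
    }
}
```

Calling MasterPackageSketch.Run(files, 4, 2) leaves copies 3 and 4 idle, much like lowering [$Project::MaximumParallelism] at execution time. Which copy handles which file varies from run to run, which matches the uneven distribution seen in the test results.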

Next Steps

- Please review the lock Statement (C# Reference) for details related to the lock statement.

- You can also check Thread Synchronization (C# and Visual Basic) on MSDN.

- See the reference for the Queue<T> Class.

- Details of the For Loop Container are also available on MSDN.

- You can download the complete sample SSIS 2012 project here.

About the author

Pavel Pawlowski is Lead BI Specialist in Tieto with focus on Microsoft SQL Server and Microsoft BI platform.

This author pledges the content of this article is based on professional experience and not AI generated.